Simple and easy distributed deep learning with Fast.AI on Azure ML

Introduction

Fast.AI is a deep learning library built on PyTorch that aims to make deep learning accessible to scientists from many different backgrounds. Its authors want deep learning to be as easy to use as C# or Windows. The library needs very little code to create and train a deep learning model. For example, in only 3 simple steps we can define the dataset, define the model, and start training:

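    from fastai.vision import *  # fastai v1 API

    # Step 1: dataset (parentheses allow the chained .normalize() on its own line)
    data = (ImageDataBunch.from_folder(data_folder, train=".", valid_pct=0.2,
                                       ds_tfms=get_transforms(),
                                       size=sz, bs=bs, num_workers=8)
            .normalize(imagenet_stats))

    # Step 2: model
    learn = cnn_learner(data, models.resnet34, metrics=dice)

    # Step 3: training
    learn.fit_one_cycle(5, slice(1e-5), pct_start=0.8)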

The first step defines a dataset where 20% of the samples are reserved for validation, default image transformations are applied, and normalization is performed using the "imagenet_stats" statistics. The second step defines a pretrained ResNet34 CNN model, and the third step launches the training run. To see how much work this saves, compare the code with what it takes to use PyTorch directly, for example in: Start Your CNN Journey with PyTorch in Python.
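To make the first step more concrete, the sketch below mimics in plain Python what a 20% random validation split and ImageNet normalization amount to. It is an illustration only, not Fast.AI's implementation: the helper names `split_train_valid` and `normalize_pixel` are hypothetical, and the mean and standard deviation are the commonly published ImageNet channel statistics.

```python
import random

# Commonly published ImageNet per-channel statistics (RGB, pixel values in [0, 1]).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def split_train_valid(items, valid_pct=0.2, seed=0):
    """Randomly hold out a fraction of the items for validation (hypothetical helper)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_pct)
    return shuffled[n_valid:], shuffled[:n_valid]


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Normalize one RGB pixel channel-wise: (value - mean) / std."""
    return tuple((v - m) / s for v, m, s in zip(rgb, mean, std))


files = [f"img_{i}.jpg" for i in range(10)]
train, valid = split_train_valid(files)
print(len(train), len(valid))
print(normalize_pixel((0.5, 0.5, 0.5)))
```

A pixel equal to the channel means maps to zero in every channel, which is the point of the normalization: the pretrained ResNet34 sees inputs on the same scale as the data it was trained on.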

Deep learning is usually computationally heavy and benefits greatly from distributed computing, which spreads the work across multiple computers (nodes) and multiple GPUs and then combines their results. Using more compute resources shortens the training time. However, it is costly to maintain powerful compute resources that are used only occasionally. Azure Machine Learning (ML) is a platform where compute resources are assigned dynamically: they are assigned only after you submit a new training run and are deleted after the training is done.
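As a rough illustration of "split the work, then integrate the results", the sketch below simulates synchronous data-parallel training in plain Python: each simulated worker computes a gradient on its own shard of the batch, and the gradients are averaged. This is a toy model of the idea under our own assumptions (a one-parameter linear model, two workers, equally sized shards), not the code that runs on the GPUs.

```python
def gradient(w, samples):
    """Mean gradient of the squared error (w*x - y)**2 over the samples."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def all_reduce_mean(values):
    """Stand-in for the all-reduce step: every worker ends up with the average."""
    return sum(values) / len(values)


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.5

# Split the batch evenly across 2 simulated workers (one shard per GPU).
shards = [data[0::2], data[1::2]]
local_grads = [gradient(w, shard) for shard in shards]
synced_grad = all_reduce_mean(local_grads)

# With equally sized shards, the averaged gradient equals the full-batch gradient.
single_worker_grad = gradient(w, data)
print(synced_grad, single_worker_grad)
```

Because the averaged gradient matches the single-worker gradient, each worker can process only its share of the data while the model is updated as if one machine had seen the whole batch.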

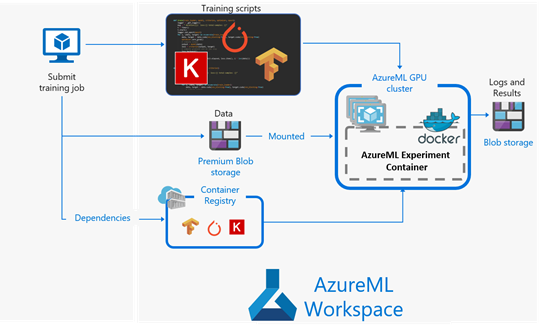

The figure shows how training works in the Azure ML workspace:

- Upload your data to premium blob storage and submit your training script.

- A compute resource is assigned, and the training starts.

- The training is done, results are saved, and the compute resource is deleted.

Fast.AI supports distributed training only with the NCCL backend, but Azure ML currently does not configure this backend automatically. We have found a workaround that completes the backend initialization on Azure ML. In this blog, we show how to perform distributed training with Fast.AI on Azure ML. The code is in this git repo: Distributed-training-Image-segmentation-Azure-ML.

Prepare Azure Resource

First, you need an Azure subscription, an Azure Storage account, and an Azure ML workspace. In the code, you need to:

- Fill out the Azure subscription ID, resource group, and workspace name to connect to your workspace.

- Register your Azure storage container (where you have uploaded data) as the datastore to use as the data source.

- Connect to or create a compute target that will process data from the datastore.

All the code is in the section "Prepare Azure Resource" of the notebook Ship-segmentation-Azure-ML.
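For orientation, the three steps above could look roughly like the sketch below when written against the Azure ML Python SDK v1 (`azureml-core`). This is our assumption of a typical setup, not a copy of the notebook: the function name `prepare_azure_resources` and all of its parameters are hypothetical placeholders that you would fill with your own values.

```python
def prepare_azure_resources(subscription_id, resource_group, workspace_name,
                            datastore_name, container_name,
                            storage_account, storage_key,
                            cluster_name, vm_size, max_nodes):
    """Connect to a workspace, register a blob datastore, and get a compute target."""
    # Imported lazily so this module can be loaded without the SDK installed.
    from azureml.core import Datastore, Workspace
    from azureml.core.compute import AmlCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    # Step 1: connect to the workspace.
    ws = Workspace(subscription_id=subscription_id,
                   resource_group=resource_group,
                   workspace_name=workspace_name)

    # Step 2: register the storage container that holds the uploaded data.
    ds = Datastore.register_azure_blob_container(
        workspace=ws,
        datastore_name=datastore_name,
        container_name=container_name,
        account_name=storage_account,
        account_key=storage_key)

    # Step 3: reuse the compute target if it exists, otherwise create it.
    try:
        compute_target = ComputeTarget(workspace=ws, name=cluster_name)
    except ComputeTargetException:
        config = AmlCompute.provisioning_configuration(
            vm_size=vm_size, min_nodes=0, max_nodes=max_nodes)
        compute_target = ComputeTarget.create(ws, cluster_name, config)
        compute_target.wait_for_completion(show_output=True)

    return ws, ds, compute_target
```

Setting `min_nodes=0` in this sketch lets the cluster scale down to zero nodes when no run is active, which matches the pay-only-while-training behaviour described in the introduction.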

Data Cleaning

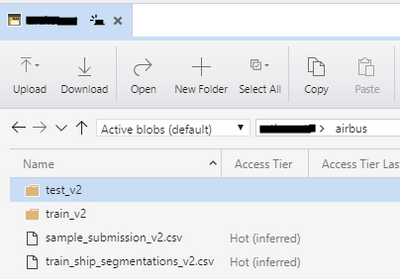

We used the data from a Kaggle challenge: airbus-ship-detection. The goal of the project is to detect and segment ships in satellite images. First, create a container in Azure storage and upload the data to the folder "airbus" as shown below:

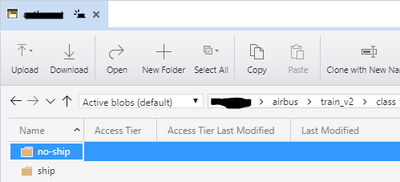

Then we use the script "clean-data.py" to generate the training data: the folder "class", with 2 sub-folders, for classification; the folder "label" for the label images; and the folder "256-filter99", which holds the images with ship size > 99 and is used for segmentation.
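To illustrate the "ship size > 99" filter, the sketch below computes the size of a ship from a run-length-encoded mask and decides whether an image is kept. It rests on two assumptions that are ours, not taken from clean-data.py: that masks are strings of "start length" pairs, as in the CSV file of the Kaggle dataset, and that "ship size" means the total number of mask pixels in an image. The function names are hypothetical.

```python
def ship_pixel_count(encoded_pixels):
    """Mask area of one run-length-encoded ship ("start length start length ...")."""
    if not encoded_pixels:
        return 0  # an empty string means no ship
    numbers = [int(token) for token in encoded_pixels.split()]
    run_lengths = numbers[1::2]  # every second number is a run length
    return sum(run_lengths)


def keep_for_segmentation(encoded_masks, min_size=99):
    """Keep an image when its ships cover more than `min_size` pixels in total."""
    return sum(ship_pixel_count(mask) for mask in encoded_masks) > min_size


print(ship_pixel_count("1 3 10 5"))
print(keep_for_segmentation(["1 50", "200 50"]))
print(keep_for_segmentation(["1 99"]))
```

Filtering out images with very small ships leaves the segmentation model with examples where the mask is large enough to learn from.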

We kick off the data generation in Azure ML: define a data reference from the datastore as the data source, then create an experiment and submit the following estimator:

    from azureml.train.dnn import PyTorch

    est_data = PyTorch(source_directory=script_folder,
                       compute_target=compute_target,
                       entry_script='clean-data.py',  # Python script for cleaning
                       script_params=script_params,
                       use_gpu=False,
                       node_count=1,
                       pip_packages=['fastai'])

It runs the script "clean-data.py" on 1 node without a GPU. The code can be found in the section "Data Clean" of the notebook Ship-segmentation-Azure-ML.

Distributed deep learning

Following Iafoss' solution, we take 2 steps for better ship segmentation:

- Classify images as with or without ships.

- Segment the ships in the images that contain ships.

The two tasks are handled by a CNN and a U-Net, respectively.

Classification

With Fast.AI, we use 3 steps for the dataset, modeling, and training, similar to the 3 steps in the introduction. Then we set up distributed training. Because Fast.AI only supports the NCCL backend, we use our workaround to complete the backend initialization on Azure ML. The initialization functions are defined in the script azureml_adapter.py and are called as follows:

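    import os
    import torch
    from azureml_adapter import (get_local_rank, get_global_size, get_local_size,
                                 set_environment_variables_for_nccl_backend)

    local_rank = -1
    local_rank = get_local_rank()
    global_size = get_global_size()
    local_size = get_local_size()
    # ......
    # The argument is True when every process runs on the same node
    set_environment_variables_for_nccl_backend(local_size == global_size)
    torch.cuda.set_device(local_rank)  # bind this process to its own GPU
    torch.distributed.init_process_group(backend='nccl', init_method='env://')
    rank = int(os.environ['RANK'])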

Then the defined learner can be set up for distributed training on the local GPU:

    learn = cnn_learner(data, models.resnet34, metrics=dice).to_distributed(local_rank)

The details can be found in the script classification.py.
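Some background on why an initialization step is needed at all: with `init_method='env://'`, PyTorch reads `MASTER_ADDR`, `MASTER_PORT`, `RANK`, and `WORLD_SIZE` from the environment of each process. The sketch below shows one way these could be derived when processes are launched by OpenMPI, which exposes `OMPI_COMM_WORLD_RANK` and `OMPI_COMM_WORLD_SIZE`. It is not the code of azureml_adapter.py: the function `nccl_env_from_mpi` is hypothetical, and the master address and port are passed in explicitly because how they are discovered on Azure ML is not covered here.

```python
def nccl_env_from_mpi(mpi_env, master_addr, master_port=29500):
    """Build the variables read by init_method='env://' from OpenMPI's variables."""
    return {
        "RANK": mpi_env["OMPI_COMM_WORLD_RANK"],        # global rank of this process
        "WORLD_SIZE": mpi_env["OMPI_COMM_WORLD_SIZE"],  # total number of processes
        "MASTER_ADDR": master_addr,                     # address of the rank-0 node
        "MASTER_PORT": str(master_port),                # assumed free port
    }


# Simulated environment of one process in a 12-process job (placeholder values).
fake_mpi_env = {
    "OMPI_COMM_WORLD_RANK": "5",
    "OMPI_COMM_WORLD_SIZE": "12",
    "OMPI_COMM_WORLD_LOCAL_RANK": "1",
}
env = nccl_env_from_mpi(fake_mpi_env, master_addr="10.0.0.4")
print(env)
```

In a real training script the result would be applied with `os.environ.update(...)` before calling `torch.distributed.init_process_group`, and the local rank would be used to select the GPU.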

As shown in the previous section, the classification data are stored in the sub-folder "class". So we define a data reference to it as the data source, and also define the estimator:

    from azureml.train.dnn import PyTorch, Mpi

    est_class = PyTorch(source_directory=script_folder,
                        compute_target=compute_target,
                        entry_script='classification.py',  # classification script
                        script_params=script_params,
                        use_gpu=True,
                        node_count=3,  # 3 nodes are used
                        distributed_training=Mpi(process_count_per_node=4),  # 4 GPUs per node
                        pip_packages=['fastai'])

We used 3 nodes and all 4 GPUs on each node (process_count_per_node = 4), which gives 12 training processes in total.
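The relationship between nodes, processes, and ranks can be sketched as follows. The node-major numbering used here (ranks 0 to 3 on the first node, 4 to 7 on the second, and so on) is an assumption for illustration; the actual placement is decided by the MPI launcher. The function name `rank_layout` is hypothetical.

```python
def rank_layout(node_count, process_count_per_node):
    """Map each global rank to (node index, local rank), assuming node-major order."""
    return {
        node * process_count_per_node + local: (node, local)
        for node in range(node_count)
        for local in range(process_count_per_node)
    }


layout = rank_layout(node_count=3, process_count_per_node=4)
world_size = len(layout)
print(world_size)
print(layout[5])
```

The local rank is what each process passes to `torch.cuda.set_device` and `to_distributed`, while the global rank identifies the process within the whole job.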

Azure ML HyperDrive can set up multiple runs to tune the training hyperparameters. First, define the choices for each parameter:

    from azureml.train.hyperdrive import GridParameterSampling, choice

    param_sampling = GridParameterSampling({
        'start_learning_rate': choice(0.0001, 0.001),
        'end_learning_rate': choice(0.01, 0.1)})

Here we have 2 choices each for the start and end learning rates. Then the HyperDrive config is defined from our estimator above:

    hyperdrive_class = HyperDriveConfig(estimator=est_class,
                                        hyperparameter_sampling=param_sampling,
                                        policy=None,
                                        primary_metric_name='dice',
                                        primary_metric_goal=PrimaryMetricGoal.MAXIMIZE,
                                        max_total_runs=4,
                                        max_concurrent_runs=4)
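The value of 4 for the total number of runs matches the size of the grid: 2 start learning rates times 2 end learning rates. The plain-Python sketch below enumerates the same combinations with `itertools.product`; it only illustrates what grid sampling means and does not call the Azure ML SDK.

```python
from itertools import product

search_space = {
    "start_learning_rate": [0.0001, 0.001],
    "end_learning_rate": [0.01, 0.1],
}

# Every combination of one value per parameter is one training run.
names = list(search_space)
grid = [dict(zip(names, values)) for values in product(*search_space.values())]

for combo in grid:
    print(combo)
print(len(grid))
```

Adding a third choice to either parameter would raise the grid size to 6, so the run limits would need to grow with it.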

We used dice/F1 as the metric and set the primary goal to maximize it. After submitting the HyperDrive config with an experiment and waiting for the runs to finish, we can get the run with the best result by:

    best_run = classification_run.get_best_run_by_primary_metric()

The code can be found in the section "Ship/no ship classification" of the notebook Ship-segmentation-Azure-ML.

Segmentation

We use a U-Net for the segmentation training. The code still has 3 steps:

    data = (SegmentationItemList.from_folder(img_path)
            .split_by_rand_pct(0.2)
            .label_from_func(get_y_fn, classes=['Background', 'Ship'])
            .transform(tfms, size=sz, tfm_y=True)
            .databunch(path=Path('.'), bs=bs, num_workers=0)
            .normalize(imagenet_stats))

    learn = unet_learner(data, models.resnet34, loss_func=MixedLoss(10.0, 2.0),
                         metrics=dice, wd=1e-7).to_distributed(local_rank)

    learn.fit_one_cycle(args.num_epochs,
                        slice(args.start_learning_rate, args.end_learning_rate))

The "get_y_fn" is a lambda function that finds the label image from an image name. The loss "MixedLoss" is a combination of focal loss and Dice loss, taken from Iafoss' solution. We used the same workaround for the distributed initialization. The details can be found in segmentation.py.
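To give a feel for the two ingredients of the loss, the sketch below implements a Dice coefficient and a binary focal loss on flat lists of values in plain Python. How the two terms are combined in `mixed_loss`, and the reading of 10.0 and 2.0 as a focal weight `alpha` and a focusing exponent `gamma`, are our assumptions for illustration; the real MixedLoss is defined in segmentation.py and operates on tensors.

```python
import math


def dice_coefficient(pred, target, eps=1e-8):
    """Dice = 2 * overlap / (sum of pred + sum of target) for flat masks."""
    intersection = sum(p * t for p, t in zip(pred, target))
    return (2 * intersection + eps) / (sum(pred) + sum(target) + eps)


def focal_loss(probs, target, gamma=2.0):
    """Mean binary focal loss: -(1 - p_t)**gamma * log(p_t)."""
    total = 0.0
    for p, t in zip(probs, target):
        p_t = p if t == 1 else 1 - p  # probability assigned to the true class
        total += -((1 - p_t) ** gamma) * math.log(max(p_t, 1e-12))
    return total / len(probs)


def mixed_loss(probs, target, alpha=10.0, gamma=2.0):
    """Assumed combination: alpha * focal loss - log(soft Dice)."""
    return (alpha * focal_loss(probs, target, gamma)
            - math.log(dice_coefficient(probs, target)))


target = [1, 0]
confident = [0.9, 0.1]
unsure = [0.6, 0.4]
print(dice_coefficient([1, 1, 0, 0], [1, 1, 0, 0]))
print(mixed_loss(confident, target), mixed_loss(unsure, target))
```

The focal term down-weights pixels that are already classified well, which helps when most pixels are background, while the Dice term rewards overlap between the predicted and true ship masks.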

Then we define the estimator and an experiment to submit it. The code can be found in the section "Segmentation" of the notebook Ship-segmentation-Azure-ML.
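The training code reads `args.num_epochs`, `args.start_learning_rate`, and `args.end_learning_rate`, which arrive as command-line arguments of the entry script. A minimal sketch of such parsing with `argparse` is shown below. The argument `--data_folder` and all default values are hypothetical; the actual parser is in the scripts of the repo.

```python
import argparse


def parse_args(argv=None):
    """Parse the training arguments passed to the entry script."""
    parser = argparse.ArgumentParser(description="Training script arguments")
    parser.add_argument("--data_folder", type=str, default=".")  # hypothetical
    parser.add_argument("--num_epochs", type=int, default=5)
    parser.add_argument("--start_learning_rate", type=float, default=1e-5)
    parser.add_argument("--end_learning_rate", type=float, default=1e-3)
    return parser.parse_args(argv)


# Simulate the arguments one run of the parameter grid would receive.
args = parse_args(["--num_epochs", "10",
                   "--start_learning_rate", "0.0001",
                   "--end_learning_rate", "0.01"])
lr_range = slice(args.start_learning_rate, args.end_learning_rate)
print(args.num_epochs, lr_range)
```

Keeping the learning rates as script arguments is what allows each tuning run to launch the same script with a different pair of values.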

Conclusions

In this blog, we showed a simple and easy example of running distributed deep learning with the Fast.AI library on Azure ML, using an image segmentation task and a workaround for the distributed initialization. Since Fast.AI also supports NLP (transformer) models, you can distribute your NLP training on Azure ML with the same initialization workaround.
