A Solution Template for Soft Sensor Modeling on Azure - Part 1

In this two-part blog series we explore a solution template for creating models for soft sensors, taking advantage of the scalability and operationalization provided by the Microsoft Azure platform.

This is the first part, where we explore what a soft sensor is, the use case, dataset, common approaches and major steps usually needed to model soft sensors.

In the second part, we will explore how to use Microsoft's Azure Data and AI platforms to process and model the data at scale, in an automated fashion.

The code for this first part is available at this GitHub repository, as a series of Jupyter notebooks showing the steps needed to model the soft sensors according to the use case and dataset presented here.

What is a Soft Sensor

A soft sensor is a mathematical model, implemented as a software artifact, that reproduces the behavior of a physical sensor. Soft sensors are usually applied to industrial processes for the purposes of process optimization and control. They use easy-to-measure process variables to estimate hard-to-measure ones. A variable is hard to measure when technological limitations, large measurement delays, high investment costs, harsh environmental conditions, or similar constraints make physical sensors impractical.

You can find more information about soft sensors from the specialized literature, as compiled here.

A Simple Use Case from the Petrochemical Industry

Here we use a well-known process in the petrochemical industry, the Sulfur Recovery Unit (SRU) process, to show the major steps in building soft sensors with a data-driven approach. A simplified schematic diagram of this process is shown in Fig. 1 below:

Fig. 1: simplified schematic diagram of a Sulfur Recovery Unit

(source: Soft Analysers for a Sulfur Recovery Unit)

The Sulfur Recovery process is used to remove sulfur-based environmental pollutants from acid gas streams and to capture elemental sulfur as a by-product. The concentration values of SO2 (sulfur dioxide) and H2S (hydrogen sulfide) at the process output are needed to control the air flow intake and keep the process at its maximum efficiency. This is a harsh environment, which makes it difficult to keep physical sensors working all the time at the process outputs. To help mitigate this, we can model those physical sensors as soft sensors.

The Dataset

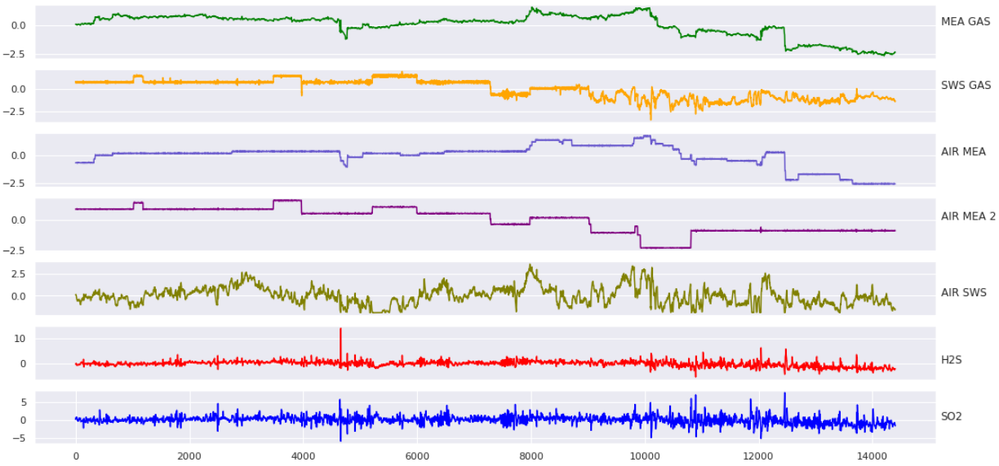

The dataset we use contains five relevant measurement series (our input variables) that can be used to model the concentration of SO2 and H2S at the process output (our output variables). These five input variables are:

- the gas flow in MEA zone (MEA GAS)

- the air flow in MEA zone 1 (AIR MEA 1)

- the air flow in MEA zone 2 (AIR MEA 2)

- the air flow in SWS zone (AIR SWS)

- the gas flow in SWS zone (SWS GAS)

Fig. 2 below plots the data collected from the sensors in the SRU process for the five input variables and two output variables described above:

Fig. 2: Input and Output Variables from the SRU Process

The dataset can be downloaded from here. More details about this dataset and the relevant chemical processes are available in the corresponding paper here. The paper cited as the source of Fig. 1 also gives more details about the SRU process and a similar dataset.

In our implementation, we augment the original dataset by introducing some extra features and missing data. We do this because the original dataset is already pre-processed for modeling, but we want a more realistic dataset in order to implement some examples of data pre-processing, as explained later.
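As a rough illustration of the missing-data part of this augmentation, the sketch below blanks out a random fraction of cells in a table. The helper name `inject_missing`, the 5% fraction, the column names, and the synthetic values are all assumptions made for this example; the exact procedure used in the notebooks is not described here.

```python
import numpy as np
import pandas as pd

def inject_missing(df: pd.DataFrame, fraction: float, seed: int = 0) -> pd.DataFrame:
    """Return a copy of df with roughly `fraction` of its cells set to NaN."""
    rng = np.random.default_rng(seed)
    drop = rng.random(df.shape) < fraction  # True where a value is removed
    return df.mask(drop)

# Synthetic stand-in for a pre-processed sensor table (not the SRU data).
clean = pd.DataFrame(
    np.random.default_rng(1).normal(size=(1000, 3)),
    columns=["u1", "u2", "u3"],  # hypothetical column names
)
gappy = inject_missing(clean, fraction=0.05)
```

The original table is left untouched, so the gaps can later be compared against the known true values when checking an imputation method.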

Common Approaches for Modeling Soft Sensors

When the necessary process knowledge is available, soft sensors can be created from specialized process simulators or, in simpler cases where the mathematical models are known, from those models directly. This approach is becoming more and more difficult as industrial processes grow in complexity.

As sensors became more common in industrial processes, the data collected from them started to be used to model process variables of interest. Statistical models and simple linear machine learning models were commonly used for that.

With the abundance of sensors and collected process data, soft sensors can now be developed using an AI data-driven approach, eliminating much of the specialized process knowledge once needed. This is the approach we use in this solution template.

Major Steps for Soft Sensor Modeling

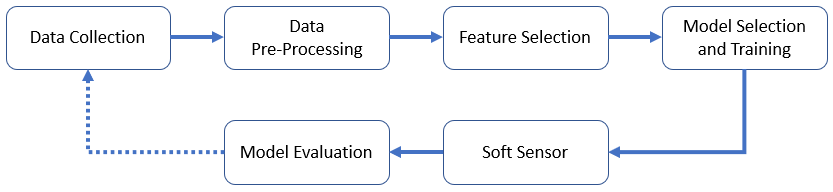

Fig. 3 below shows the major steps usually needed to develop soft sensors using an AI data-driven approach:

Fig. 3: Major Steps for Soft Sensor Development

For data collection, we capture and store relevant data from the industrial process for which we want to model soft sensors. Usually this data is captured and stored by specialized systems, such as the OSIsoft PI Server.

Data pre-processing covers the main tasks that make the data ready for model development, such as missing value imputation, resampling, and outlier detection and treatment. In our implementation we apply bidirectional linear interpolation for missing value imputation and we use Azure Anomaly Detector to identify outlier values in the data.
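A minimal sketch of the imputation step, assuming "bidirectional" means that gaps are filled both forward and backward so that leading and trailing gaps are covered too. It uses pandas `interpolate`; the column name and values are made up for illustration.

```python
import numpy as np
import pandas as pd

# Toy series with a gap at the start, in the middle, and at the end.
raw = pd.DataFrame({"AIR_SWS": [np.nan, 1.0, np.nan, 3.0, np.nan]})

# Linear interpolation, filling in both directions.
# Interior gaps are interpolated between their neighbours; edge gaps
# take the nearest valid value instead of being extrapolated.
filled = raw.interpolate(method="linear", limit_direction="both")
```

Without `limit_direction="both"`, the leading gap would stay empty, which matters when the first samples of a series are missing.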

For feature selection, we usually apply statistical and machine learning techniques, industrial process knowledge, or both, to identify the most relevant input variables for modeling the sensors we are interested in. In our implementation, we apply mutual information regression between the output and input variables to find the most important features. Here we also split the dataset into training and testing sets, keeping the last 7% of the sensor measurements for testing and the rest for training and validating the model.
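The two operations in this step can be sketched as follows, assuming scikit-learn's `mutual_info_regression` as the mutual information estimator. The data is synthetic and the column names are hypothetical; only the 7% test share comes from the text above.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import mutual_info_regression

rng = np.random.default_rng(0)
n = 2000

# Synthetic stand-in data: y depends strongly on u1 and not at all on noise.
X = pd.DataFrame({
    "u1": rng.normal(size=n),
    "u2": rng.normal(size=n),
    "noise": rng.normal(size=n),
})
y = 2.0 * X["u1"] + np.sin(3.0 * X["u2"]) + 0.1 * rng.normal(size=n)

# Estimated mutual information between each input and the output,
# sorted so the most informative feature comes first.
mi = pd.Series(mutual_info_regression(X, y, random_state=0), index=X.columns)
ranking = mi.sort_values(ascending=False)

# Chronological split: the last 7% of the rows are held out for testing.
n_test = int(round(0.07 * len(X)))
X_train, X_test = X.iloc[:-n_test], X.iloc[-n_test:]
y_train, y_test = y.iloc[:-n_test], y.iloc[-n_test:]
```

The split is positional rather than shuffled, so the test set always lies after the training data in time, which avoids leaking future measurements into training.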

When modeling soft sensors with a data-driven approach using AI techniques, we usually apply some kind of multivariate time series regression. We can experiment with and train different model architectures, and then choose the one that is most adequate for our task. In our implementation, we use tsai, a Python package specialized in time series classification and regression. It implements dozens of modern algorithms for those tasks, most of them based on deep learning. For the time series regression task, we use a recurrent neural network architecture with GRU (Gated Recurrent Unit).
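Before a recurrent model can be trained, the measurement table is typically cut into fixed-length windows. The sketch below builds such windows in plain NumPy, in the (samples, variables, time steps) layout that tsai works with. The helper name `make_windows` and the window length are assumptions for this example; tsai provides its own windowing utilities, which are not shown here.

```python
import numpy as np

def make_windows(inputs: np.ndarray, target: np.ndarray, window: int):
    """Cut a multivariate series into overlapping windows.

    inputs: array of shape (T, n_vars); target: array of shape (T,).
    Returns X of shape (N, n_vars, window) and y of shape (N,), where
    each window of inputs is paired with the target at its last time step.
    """
    n_windows = inputs.shape[0] - window + 1
    X = np.stack([inputs[i:i + window].T for i in range(n_windows)])
    y = target[window - 1:]
    return X, y

# Toy series: 10 time steps, 2 input variables.
inputs = np.arange(20, dtype=float).reshape(10, 2)
target = np.arange(10, dtype=float)
X, y = make_windows(inputs, target, window=4)
```

Pairing each window with the target at its final step means the model only ever sees inputs up to the moment it is asked to estimate.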

After training the model, we evaluate its performance by running inference on the input features in the test data. In operation, we usually measure the model performance periodically by comparing its predictions with actual values from newer data. If the model performance drops below a specified threshold, we trigger a model retraining.
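The monitoring rule described above can be sketched as a small check on the mean squared error. The function names and the threshold values are hypothetical; note that a drop in performance corresponds to the error rising above its limit.

```python
import numpy as np

def mse(actual, predicted) -> float:
    """Mean squared error between two equally sized sequences."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean((actual - predicted) ** 2))

def needs_retraining(actual, predicted, max_mse: float) -> bool:
    """True when the error on newer data exceeds the allowed maximum."""
    return mse(actual, predicted) > max_mse

# Toy check: one prediction is off by 2, so the MSE is 4 / 3.
actual = [1.0, 2.0, 3.0]
predicted = [1.0, 2.0, 5.0]
retrain = needs_retraining(actual, predicted, max_mse=1.0)
```

In practice the threshold would be chosen from the error observed on the test set, with some margin to avoid retraining on ordinary noise.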

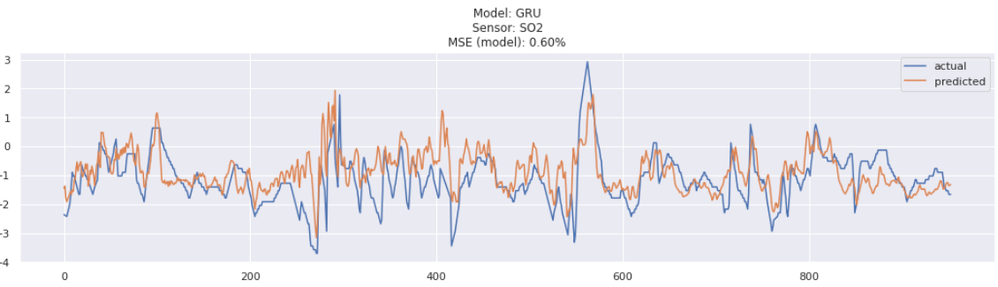

Fig. 4 below plots the actual values for the SO2 output sensor against the corresponding values computed by the model, for which we measured an MSE (Mean Squared Error) of 0.6% across the entire test dataset:

Fig. 4: Actual and Predicted Values for SO2 in the Test Dataset

Conclusion

Soft sensors can be an important tool for the optimization and control of industrial processes when physical sensors are difficult to use. They can bring important financial savings by helping to keep those processes running as close as possible to their maximum efficiency regime.

In this post we presented the core concepts related to soft sensor development, a simple use case and the corresponding dataset we used in our solution template, the steps we implemented in our solution development, and some results from our trained model. In the upcoming second part of this series, we will explore how to use Microsoft's Azure Data and AI platforms to implement this solution template at scale and make it easier to integrate and operationalize.
