Build medallion architecture using Apache Flink, Trino with Microsoft Fabric and HDInsight on AKS

Authors: Snehal Sonwane (Service Engineer, Azure Data & AI); Abhishek Jain and Sairam Yeturi (Product Managers, HDInsight)

In this era of AI, we have many options for building data platforms, and the technology stack is evolving fast.

As the data landscape continues to scale, enterprises are investing more in platforms that can grow and keep up with the three big V's (Volume, Velocity, and Variety), paving the way for modern data platform architectures that meet enterprise scale.

Introduction to HDInsight on AKS

Microsoft's Azure HDInsight is a managed, full-spectrum, open-source analytics service in the cloud for enterprises. With HDInsight, you can use open-source frameworks such as Apache Spark, Apache Hive, LLAP, Apache Kafka, Hadoop, and more in your Azure environment.

With the recent release of HDInsight on AKS, Microsoft has further enhanced the service offering to run on Azure Kubernetes Service (AKS).

Well, I took the new version for a spin. Running on performant infrastructure brings the benefits of lower maintenance and management overhead and lets me focus on business logic. With the intuitive interface, creating a cluster is now down to 6 to 10 minutes!

With added features like autoscale, configuration management, and cluster pool setup, this PaaS offering is designed to help pro-developers focus on building their applications and worry less about infrastructure or platform issues.

The new version introduces two new workloads in addition to the wide range of analytics workloads in the previous version.

HDInsight on AKS introduces Apache Flink® and Trino, two of the analytics workloads customers ask for most, which help complement the entire stack from ingestion and querying to streaming.

Let's talk a bit more about these new technologies and what they mean for us.

Apache Flink is a framework and distributed processing engine for stateful computations over unbounded and bounded data streams. Flink has been designed to run in all common cluster environments, perform computations and stateful streaming applications at in-memory speed and at any scale. Learn more here.

Trino is an open-source distributed SQL query engine for federated and interactive analytics against heterogeneous data sources. It can query data at scale (gigabytes to petabytes) from multiple sources to enable enterprise-wide analytics. Learn more here.

In addition to these two analytics components, the much-loved Apache Spark is also available on HDInsight on AKS.

If security is an important item on your checklist, here is the good news: the platform is secure by default, with authentication and authorization built on Managed Identities and OAuth. It also integrates with VNets and AAD so you can secure your data platform using Azure-native solutions.

Integrations complement the platform and make life easier for developers, so you do not end up spending forever wiring two components together. This is covered across the Azure analytics stack: you will find most of your favorite Azure services, such as ADF, Purview, Azure Monitor, and last but certainly not least, Microsoft Fabric.

We will now walk you through how you can modernize your architecture!

Implementing medallion architecture using HDInsight on AKS with Apache Flink, Trino, and Apache Spark running on Microsoft Fabric OneLake.

Let's dive into how you can use these workloads together and build an end-to-end enterprise architecture to suit your needs.

Let's get started -

Here is our end-to-end scenario:

- Use Flink to load data into OneLake in Microsoft Fabric

- Read and transform the data in Spark on the Lakehouse

- Store the results in OneLake

- Use the Trino CLI to access the transformed data

- Visualize it in Power BI

Here are some prerequisites for the demo:

- ADLS gen2 storage account

- Microsoft Fabric workspace

- Power BI desktop

- IntelliJ for development

- HDInsight on AKS cluster pool (subscription and resources). You can create a cluster pool and clusters through the portal here. If you are a fan of ARM templates, you can also use one-click deployment templates to spin up your clusters.

- MSI (managed identity) for your clusters and other resources to communicate securely.

1. Ingesting data using Flink into OneLake in Microsoft Fabric

Let's create a Flink cluster inside a cluster pool. For the ingestion process we will use the Flink Delta connector.

For the purpose of this demonstration, we use a data generator function to produce the data to ingest. Flink can also easily read data from a variety of sources such as Apache Kafka, Event Hubs, and others.
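The article does not show the generator itself, so the following is only a plain-Python sketch of what such a data generator might look like; the actual Flink job would typically be written against the Flink APIs. The field names (`flight_id`, `event_seq`, `velocity`), the value range, and the share of null readings are all assumptions made for illustration.

```python
import json
import random
from typing import Dict, Iterator, Optional, Union

Event = Dict[str, Union[str, int, Optional[float]]]


def generate_events(count: int, seed: int = 42, null_rate: float = 0.1) -> Iterator[Event]:
    """Yield `count` synthetic flight events (hypothetical schema)."""
    rng = random.Random(seed)  # fixed seed keeps runs repeatable
    for seq in range(count):
        # Occasionally emit a missing reading so downstream cleansing has work to do.
        velocity = None if rng.random() < null_rate else round(rng.uniform(100.0, 300.0), 2)
        yield {"flight_id": f"F{seq % 5:03d}", "event_seq": seq, "velocity": velocity}


if __name__ == "__main__":
    for event in generate_events(3):
        print(json.dumps(event))
```

Seeding the generator makes demo runs reproducible, which helps when comparing what lands in the raw layer with what comes out of the later cleansing step.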

Writing to Delta sink

The delta sink writes the data stream to a delta table in ADLS gen2. We build the jar with the required libraries and dependencies and call the delta sink class when submitting the job via the Flink CLI. The path to the ADLS gen2 storage account is specified in the delta sink properties.

Before submitting the job, you must create the destination folder in ADLS gen2. As the figure below shows, it is empty at the moment.
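The sink path has to point at the ADLS gen2 account through its DFS endpoint. As a small sketch of how that path is usually composed, the helper below builds an `abfss://` URI; the function name and the account, container, and folder values are hypothetical and not taken from the article.

```python
def adls_table_path(account: str, container: str, folder: str) -> str:
    """Return an abfss:// URI for a folder in an ADLS gen2 container."""
    if not account or not container:
        raise ValueError("account and container are required")
    # Leading/trailing slashes are trimmed so the URI has exactly one separator.
    return f"abfss://{container}@{account}.dfs.core.windows.net/{folder.strip('/')}"


# Hypothetical names, for illustration only.
print(adls_table_path("contosolake", "raw", "/delta/flights/"))
```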

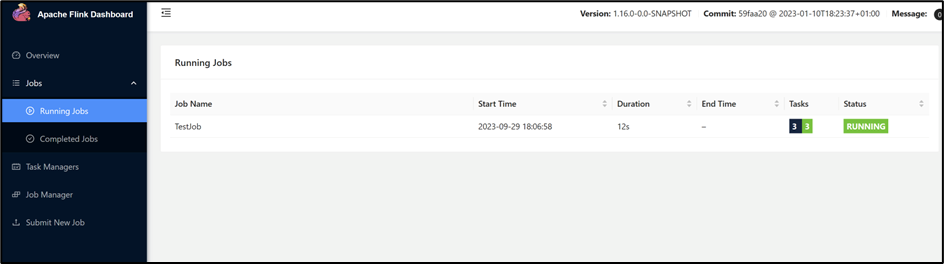

Log in to the SSH node of your Flink cluster and run the job. Once the job is submitted, you can check its status and metrics in the Flink UI.

As you can see, the data has been loaded into ADLS gen2.

Now we will create a shortcut to this ADLS gen2 location in OneLake in the Fabric workspace.

Before that, create a lakehouse in your Fabric workspace.

Create a lakehouse - Microsoft Fabric | Microsoft Learn

Note: Use the DFS endpoint of your ADLS gen2 account.

Give your shortcut a name and point it to the location where the delta files are being generated.
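A common slip here is pasting the blob endpoint instead of the DFS endpoint. The sketch below, assuming the shortcut dialog asks for a connection URL plus a sub path, normalizes a storage URL to its DFS form; `shortcut_target` and the sample names are hypothetical.

```python
from typing import Tuple
from urllib.parse import urlsplit


def shortcut_target(storage_url: str, container: str, folder: str) -> Tuple[str, str]:
    """Return (dfs_endpoint_url, sub_path) for an ADLS gen2 location."""
    host = urlsplit(storage_url).netloc
    account, _, suffix = host.partition(".")
    if suffix == "blob.core.windows.net":
        suffix = "dfs.core.windows.net"  # swap the blob endpoint for the DFS one
    if not account or suffix != "dfs.core.windows.net":
        raise ValueError(f"not an Azure storage endpoint URL: {storage_url!r}")
    return f"https://{account}.{suffix}", f"/{container}/{folder.strip('/')}"


# Hypothetical account and folder names.
print(shortcut_target("https://contosolake.blob.core.windows.net", "raw", "delta/flights"))
```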

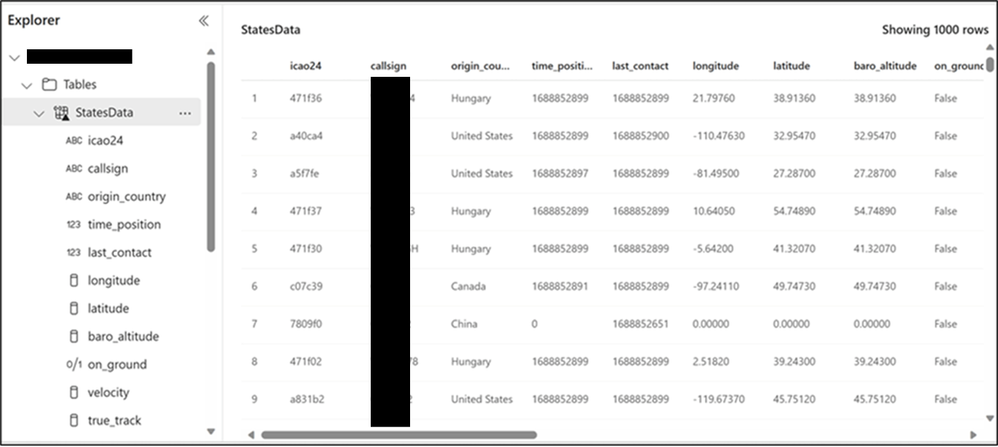

The data now resides on the raw layer of the medallion architecture.

Let's cleanse this data in the silver layer and transform it for the gold layer, where end users consume it.

Note: I have used the same lakehouse in OneLake to store raw, cleansed, and transformed data for this demo. It is good practice to use a separate lakehouse for each layer.

2. Reading and transforming data using Spark cluster

To access OneLake from a Spark notebook, grant the appropriate permissions to the HDInsight managed identity on the Microsoft Fabric workspace, as documented here.

Note: You can also use the Spark engine within Fabric; however, I have used a Spark cluster on HDInsight on AKS in this demo.

- Create a Spark cluster on HDInsight on AKS as described here

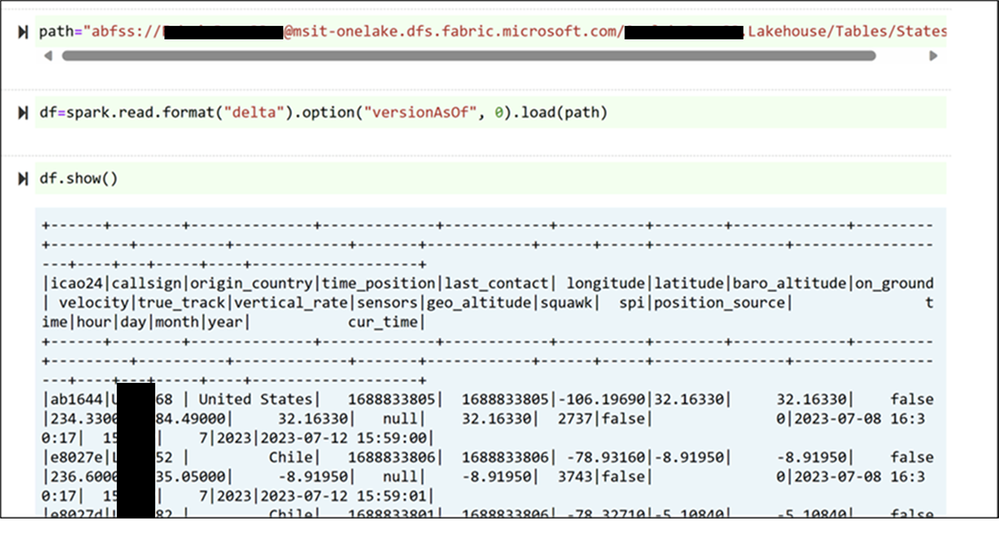

- Open a Jupyter notebook from the Spark cluster, read the data from OneLake, transform it, and load it into a new table in OneLake.

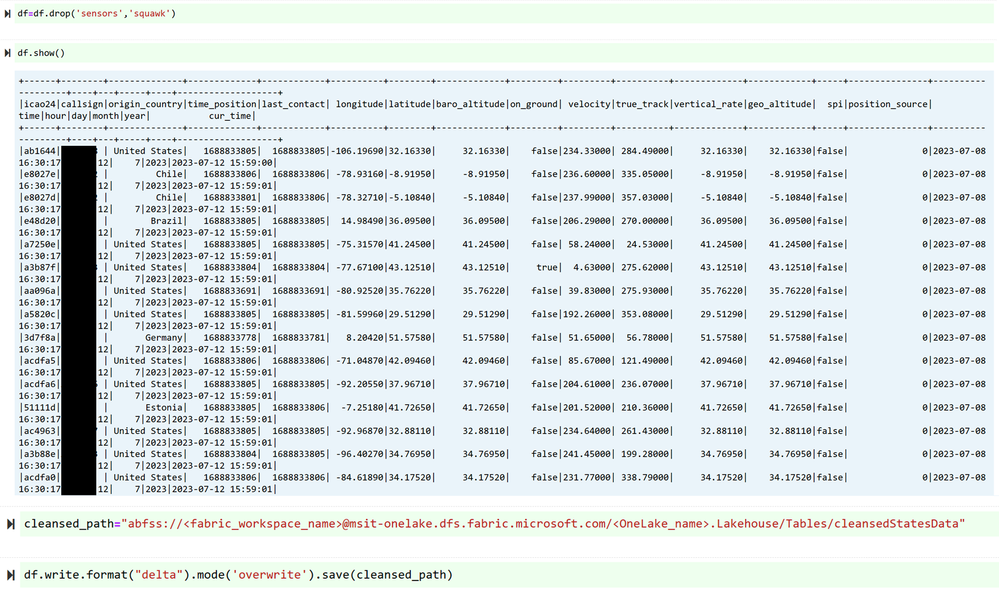

We observed a few null values in our data set and cleansed them, making the data ready for transformation at the consumption layer.

Let's transform our data now. We have another input data set stored in ADLS gen2, which we will use to perform aggregations on the data in the cleansed layer of OneLake.
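The notebook code is only visible in the screenshot, so here is a plain-Python stand-in for the cleansing idea: keep a row only when every required column has a value. In a Spark notebook this would more likely be done with a DataFrame call such as `dropna`; the column names below are assumptions.

```python
from typing import Any, Dict, Iterable, List, Sequence

Row = Dict[str, Any]


def drop_null_rows(rows: Iterable[Row], required: Sequence[str]) -> List[Row]:
    """Keep only rows where every required column is present and not None."""
    return [row for row in rows if all(row.get(col) is not None for col in required)]


# Hypothetical raw records with two kinds of gaps.
raw_rows = [
    {"flight_id": "F001", "velocity": 212.5},
    {"flight_id": "F002", "velocity": None},  # missing reading
    {"flight_id": None, "velocity": 180.0},   # missing key
]
cleansed = drop_null_rows(raw_rows, required=["flight_id", "velocity"])
print(cleansed)
```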

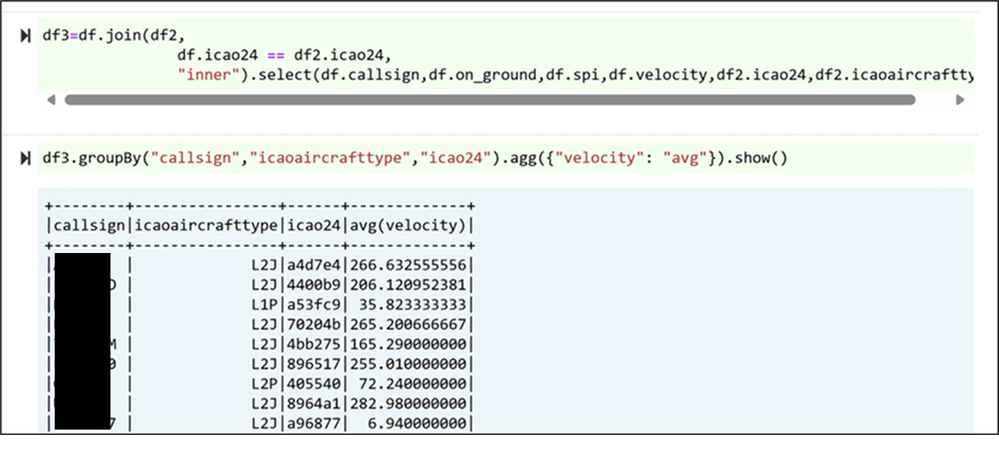

We join the two data sets and aggregate the results by the average value of velocity.
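To make the join-then-average step concrete, here is a minimal sketch in plain Python rather than the Spark code from the screenshot (in Spark this would typically be a `join` followed by `groupBy` and `avg`). The join key `flight_id` and the grouping column `airline` are hypothetical; the article only states that velocity is averaged.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def average_velocity(events: Iterable[Row], lookup: Iterable[Row],
                     join_key: str = "flight_id",
                     group_key: str = "airline") -> Dict[str, float]:
    """Inner-join events to lookup on join_key, then average velocity per group."""
    group_of = {row[join_key]: row[group_key] for row in lookup}
    totals: Dict[str, List[float]] = defaultdict(lambda: [0.0, 0])
    for event in events:
        group = group_of.get(event[join_key])
        if group is None:
            continue  # inner join: events without a match are dropped
        totals[group][0] += event["velocity"]
        totals[group][1] += 1
    return {group: total / n for group, (total, n) in totals.items()}


events = [
    {"flight_id": "F1", "velocity": 100.0},
    {"flight_id": "F1", "velocity": 200.0},
    {"flight_id": "F2", "velocity": 300.0},
    {"flight_id": "F3", "velocity": 50.0},  # no match in lookup
]
lookup = [{"flight_id": "F1", "airline": "A"}, {"flight_id": "F2", "airline": "B"}]
print(average_velocity(events, lookup))
```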

3. Storing the aggregated and transformed data into OneLake

Let's save our results to a table in OneLake.

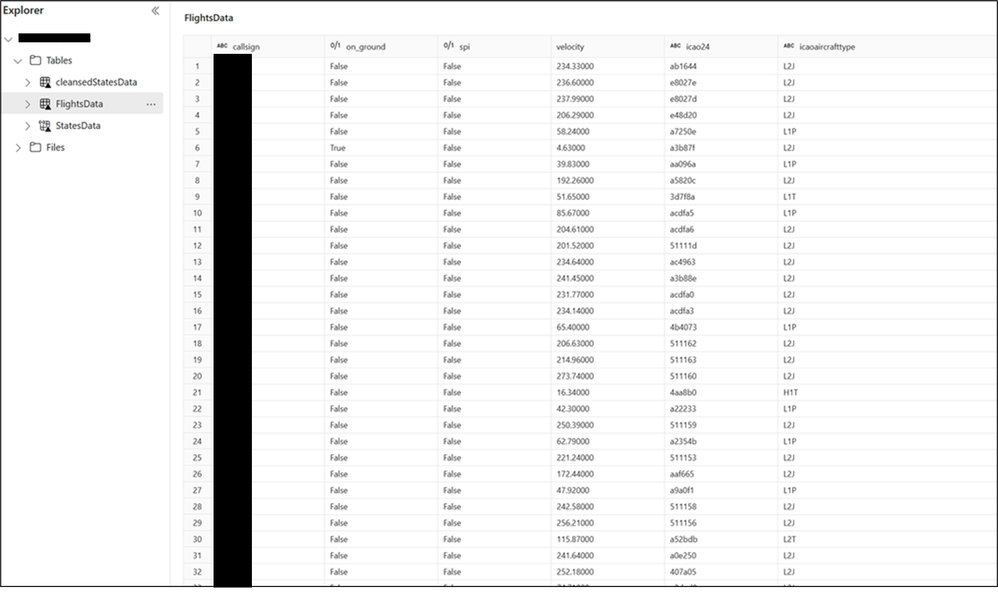

The data is loaded as a new table in OneLake, which serves as the gold layer for consumption.

Note: I have used the same lakehouse in OneLake to store my raw, cleansed, and transformed data for this demo. As a best practice, create a separate lakehouse for each layer.

4. Using Trino CLI to access the transformed data

Now we will access this new transformed table through the Trino CLI.

- Create a Trino cluster with Hive metastore configured as shown here.

- Enable the delta catalog, because the data for our transformed table in OneLake is in delta format.

- You will have to add a few properties to your cluster profile and serviceconfig to enable the delta catalog in the Trino cluster. Please follow how to configure delta catalog in Trino cluster.

- Refine the ARM template and deploy it using a custom template task. See Export ARM template in Azure HDInsight on AKS - Azure HDInsight on AKS | Microsoft Learn

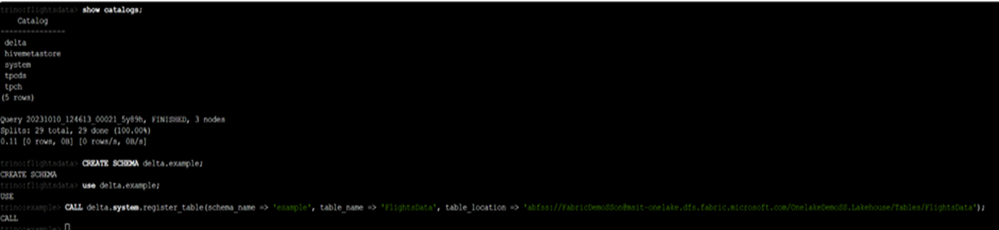

Once the Trino cluster is redeployed with the delta catalog enabled, open the Trino CLI and create a new table in a Trino schema from the delta table in OneLake:

CREATE SCHEMA delta.example;

USE delta.example;

CALL delta.system.register_table(schema_name => 'example', table_name => 'FlightsData', table_location => 'abfss://<workspacename>@msit-onelake.dfs.fabric.microsoft.com/<lakehousename>.Lakehouse/Tables/FlightsData');

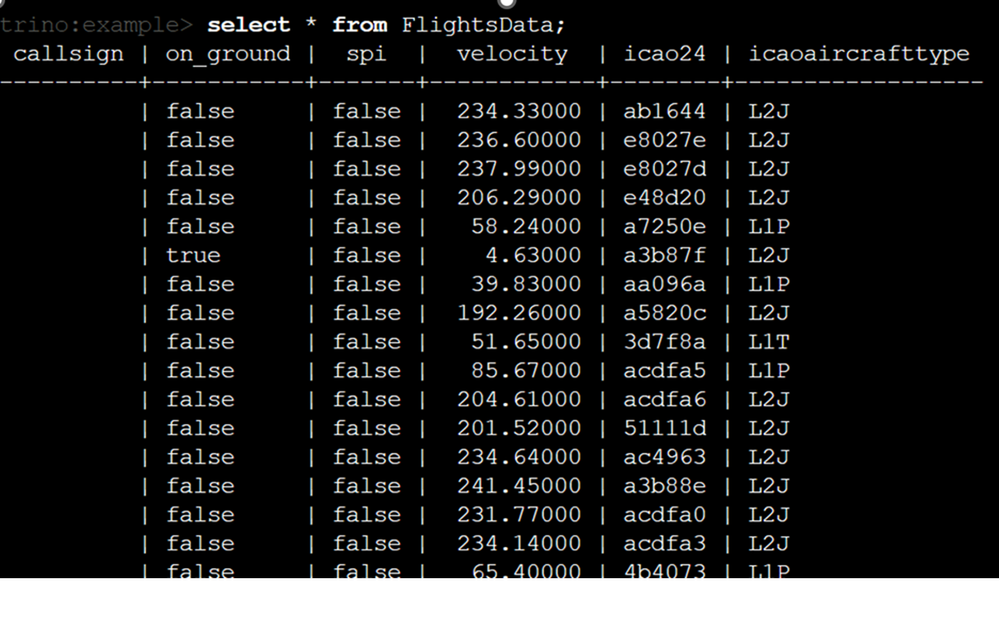

SELECT * FROM FlightsData;
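If you register several tables, composing the `register_table` call by hand gets error-prone. The helper below is a hedged sketch that only formats the statement shown above; `register_table_sql` is a hypothetical name, the default host is copied from this article and may differ for your tenant, and the workspace and lakehouse values in the example are placeholders.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def register_table_sql(schema: str, table: str, workspace: str, lakehouse: str,
                       host: str = "msit-onelake.dfs.fabric.microsoft.com",
                       catalog: str = "delta") -> str:
    """Format the Trino register_table call for a delta table in OneLake."""
    for name in (catalog, schema, table):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    location = f"abfss://{workspace}@{host}/{lakehouse}.Lakehouse/Tables/{table}"
    if "'" in location:
        raise ValueError("table location must not contain single quotes")
    return (
        f"CALL {catalog}.system.register_table("
        f"schema_name => '{schema}', table_name => '{table}', "
        f"table_location => '{location}')"
    )


# Placeholder workspace and lakehouse names.
print(register_table_sql("example", "FlightsData", "myworkspace", "mylakehouse"))
```

The identifier check is deliberately strict so that a stray quote or semicolon cannot end up inside the generated statement.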

5. Visualize it in Power BI

Let's query this data in Power BI using the Trino on AKS connector.

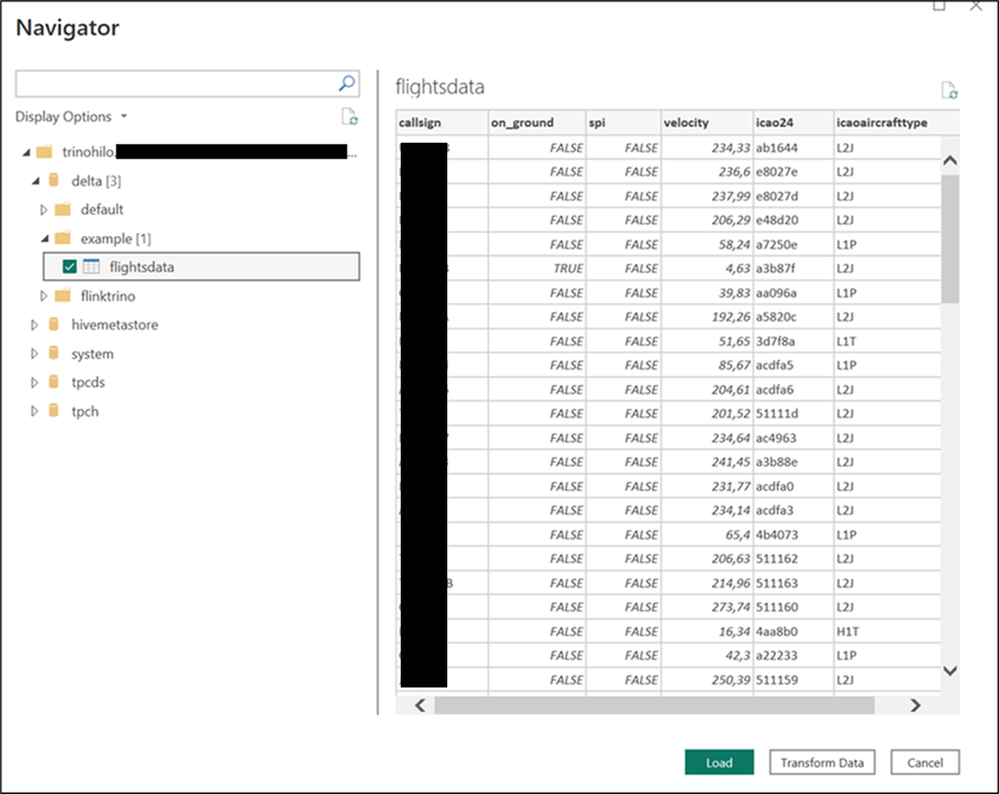

Open Power BI desktop and click Get data. Type Trino in the search bar and click Connect.

Fill in your Trino cluster details (the Trino cluster URL is available in the Overview blade of your Trino cluster in the Azure portal).

Select the table created in the previous step and load the data.

Well, that was fabulous!

OneLake can truly be used as a single unified storage layer for all these big data workloads, and it can extend these capabilities to a large set of tools and services.

This really makes it a great architecture for betting on the best technologies on Azure. Let's get you started:

- Sign up today - https://aka.ms/starthdionaks

- Read the HDInsight on AKS documentation - https://aka.ms/hdionaks-docs

- Read our Microsoft Fabric Documentation - Microsoft Fabric documentation - Microsoft Fabric | Microsoft Learn

- Join the HDInsight on AKS community to share an idea or your success story - https://aka.ms/hdionakscommunity

- Join the Microsoft Fabric Community - Home - Microsoft Fabric Community

- Have a question on how to migrate or want to discuss a use case on HDInsight on AKS? - https://aka.ms/askhdinsight
