Network Policy in Kubernetes using Calico

Introduction to the problem

By default, all pods in a Kubernetes cluster can reach each other. For example, the frontend can reach the backend, and the backend can reach the database. That is expected and normal. But this openness can also cause problems:

- The frontend can reach the database!

- Applications from different namespaces can talk to each other!

- If a container is compromised, the attacker can reach every other container in the cluster!

That is a security issue. We want to apply the principle of least privilege from the Zero Trust network model: each pod should be able to reach only the pods it is supposed to talk to, and nothing more. If a pod doesn’t need access to other pods, a rule should deny that access.

In this workshop, we will learn how to set up network policies in Kubernetes to:

- Prevent pods from reaching pods in other namespaces.

- Prevent pods from reaching any pods except specific ones.

NetworkPolicy is defined in the Kubernetes API as a specification, but Kubernetes does not enforce it by itself. It is up to us to choose an implementation. Multiple solutions are available, such as Calico, Azure Network Policy, Weave, Cilium, Romana, and Kube-router.

This workshop is also available as a video on YouTube.

We need to choose one for this demo, so we’ll go with Calico, as it is one of the most widely used. The demo remains applicable with another implementation.

We also need a Kubernetes cluster. We can choose between Minikube, GKE, EKS, PKS and all things xKy. Here we’ll use AKS (Azure Kubernetes Service).

In AKS, we can choose between Calico and Azure Network Policy. At the time of writing, Calico can be enabled only on new clusters, not on existing ones. Follow the instructions below to create a new AKS cluster with Calico enabled.

1. Enabling Calico in AKS

- Enabling Calico from the Azure Portal

When we create a new AKS cluster in the Azure Portal, under the Networking tab, we’ll have the option to select Calico.

- Enabling Calico from the Azure CLI: az

From the az command line, when we create a new AKS cluster, we can add the --network-policy parameter:

az aks create --resource-group <RG> --name <NAME> --network-policy calico

- Enabling Calico from Terraform

In Terraform, we can set network_policy to "calico" inside the network_profile block of the azurerm_kubernetes_cluster resource, as described at the following link:

https://www.terraform.io/docs/providers/azurerm/r/kubernetes_cluster.html#network_policy

network_profile {
  network_plugin = "kubenet"
  network_policy = "calico"
}

In the following scenarios, we’ll define a network policy and test how it works. So, let’s create an Nginx Pod that will act as the backend of the application. Then we’ll use an Alpine Pod to simulate a frontend that tries to reach the backend.

First, let’s create a namespace with labels. Create a new file with the following content:

# 1-namespace-development.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: development
  labels:
    purpose: development

Then create the namespace with the following command:

kubectl create -f 1-namespace-development.yaml

After that, create the backend Nginx Pod with a Service with the following command:

kubectl run backend --image=nginx --labels app=webapp,role=backend --namespace development --expose --port 80 --generator=run-pod/v1

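This command creates a Pod named backend and a Service of the same name that exposes it on port 80.

Note: recent kubectl versions always create a Pod with kubectl run and have deprecated the --generator flag; if the commands in this workshop are rejected because of it, omit that flag.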
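
As an illustration, the Pod and Service created by this command are roughly equivalent to the following manifests. This is a sketch assuming default settings, not the exact generated output: fields filled in by the API server (status, cluster IP, and so on) are omitted, and details may differ between kubectl versions.

```yaml
# Approximate equivalent of the kubectl run ... --expose command (a sketch, not generated output)
apiVersion: v1
kind: Pod
metadata:
  name: backend
  namespace: development
  labels:
    app: webapp
    role: backend
spec:
  containers:
  - name: backend
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: backend
  namespace: development
spec:
  selector:          # the Service routes to pods carrying both labels
    app: webapp
    role: backend
  ports:
  - port: 80
    targetPort: 80
```

The Service name is what makes http://backend resolvable from other pods in the same namespace in the tests that follow.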

Scenario 1: Deny inbound traffic from all Pods

Let’s create a policy to deny access to our backend Pod.

# 1-network-policy-deny-all.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-policy
  namespace: development
spec:
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  ingress: []

Note: we are not referencing the Pod with its name, but with its labels.

Then we apply this policy to the cluster:

kubectl apply -f 1-network-policy-deny-all.yaml

We create and run an Alpine Pod in interactive mode (-it):

kubectl run --rm -it --image=alpine network-policy --namespace development --generator=run-pod/v1

The command will give us access to run a command within the alpine pod. We try to connect to the backend pod:

wget -qO- --timeout=2 http://backend

The output looks like the following, where access fails (‘wget: download timed out’) because the policy denies it.

kubectl apply -f 1-network-policy-deny-all.yaml

networkpolicy.networking.k8s.io/backend-policy configured

kubectl run --rm -it --image=alpine network-policy --namespace development --generator=run-pod/v1

If you don't see a command prompt, try pressing enter.

/ # wget -qO- --timeout=2 http://backend

wget: download timed out

/ # exit

Session ended, resume using 'kubectl attach network-policy -c network-policy -i -t' command when the pod is running

pod "network-policy" deleted

At the end, we type ‘exit’ to exit the current alpine pod. That will delete the pod from the cluster.
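
The policy in Scenario 1 isolates only the pods labeled app=webapp and role=backend. A common variation, shown here as a minimal sketch rather than as one of this workshop's steps, is a namespace-wide default deny: an empty podSelector selects every pod in the namespace, and listing Ingress in policyTypes with no ingress rules denies all inbound traffic. The policy name default-deny-ingress is an arbitrary choice.

```yaml
# Hypothetical example: deny all inbound traffic to every pod in the development namespace
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: default-deny-ingress   # arbitrary name, not used elsewhere in this workshop
  namespace: development
spec:
  podSelector: {}              # empty selector: applies to all pods in the namespace
  policyTypes:
  - Ingress                    # no ingress rules listed, so all inbound traffic is denied
```

Network policies are additive, so more specific policies can then allow individual flows on top of this baseline.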

Scenario 2: Allow inbound traffic from pods with matching labels

Now let’s update the policy to allow access to the backend pod only from pods with matching labels. Note how we accept inbound (ingress) traffic from any Pod with those labels, in any namespace.

# 2-network-policy-allow-pod.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-policy
  namespace: development
spec:
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  ingress:
  - from:
    - namespaceSelector: {}
      podSelector:
        matchLabels:
          app: webapp
          role: frontend

Let’s deploy this policy:

kubectl apply -f 2-network-policy-allow-pod.yaml

Then let’s run an alpine pod interactively, with labels matching the ones required by the policy:

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace development --generator=run-pod/v1

Now within the console of the alpine pod, we try to connect from it to the backend:

wget -qO- http://backend

The final output should look like the following, where access succeeds (Nginx returns its HTML welcome page):

kubectl apply -f 2-network-policy-allow-pod.yaml

networkpolicy.networking.k8s.io/backend-policy configured

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace development --generator=run-pod/v1

If you don't see a command prompt, try pressing enter.

/ # wget -qO- http://backend

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

… removed for brevity

</html>

To prove that pods without matching labels cannot access the backend pod, let’s run the following experiment and watch it fail:

kubectl run --rm -it --image=alpine network-policy --namespace development --generator=run-pod/v1

If you don't see a command prompt, try pressing enter.

/ # wget -qO- --timeout=2 http://backend

wget: download timed out
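
A detail of the Scenario 2 policy worth spelling out: namespaceSelector and podSelector appear in the same entry of the from list, so a source pod must satisfy both. If they are written as two separate list entries, the rule matches when either one is satisfied. The fragment below is a sketch of that second form, for contrast only. It is not a policy used in this workshop, and with an empty namespaceSelector it would allow traffic from every pod in every namespace.

```yaml
# Contrast with Scenario 2: two separate entries under 'from' are ORed together
ingress:
- from:
  - namespaceSelector: {}      # entry 1: any pod in any namespace
  - podSelector:               # entry 2: frontend pods in the policy's own namespace
      matchLabels:
        app: webapp
        role: frontend
```

The only visible difference from the Scenario 2 manifest is the extra dash in front of podSelector, which is why this form is easy to write by accident.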

Scenario 3: Allow inbound traffic from pods with matching labels and within a defined namespace

In this scenario, we want to restrict access to the backend pod not only to pods with matching labels, but also to pods within the same namespace. This generally makes sense: for example, we don’t want the backend from the production environment to connect to our database in the development environment, which can happen accidentally when the wrong service name is selected.

Let’s first show that, so far, nothing restricts communication between pods in different namespaces. We create a new namespace called production and add a label to it. Then we create a new pod in that namespace and try to connect to the backend pod in the development namespace.

kubectl create namespace production

namespace/production created

kubectl label namespace/production purpose=production

namespace/production labeled

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace production --generator=run-pod/v1

If you don't see a command prompt, try pressing enter.

/ # wget -qO- http://backend.development

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

… removed for brevity

</html>

Note: we append the namespace name to the backend service name to reach the backend pod from another namespace: http://backend.development
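
The two kubectl commands that create and label the production namespace can also be expressed declaratively, mirroring the development namespace manifest from earlier. The file name below is hypothetical; creating the namespace from this file should give the same namespace and label, assuming the namespace does not already exist.

```yaml
# 3-namespace-production.yaml (hypothetical file name)
apiVersion: v1
kind: Namespace
metadata:
  name: production
  labels:
    purpose: production   # the label that namespaceSelector rules can match on
```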

Now let’s update the policy to accept inbound traffic only from pods with matching labels and within a specific namespace, using the following template:

# 3-network-policy-allow-pod-namespace.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-policy
  namespace: development
spec:
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          purpose: development
      podSelector:
        matchLabels:
          app: webapp
          role: frontend

Note: The namespaceSelector relies on labels to identify namespaces, not the namespace name itself.

We apply the policy:

kubectl apply -f 3-network-policy-allow-pod-namespace.yaml

networkpolicy.networking.k8s.io/backend-policy configured

We run a first test with a pod that has matching labels but sits in the production namespace, whose label does not match. Then we try to connect from that pod to the backend pod in the development namespace. As expected, that fails.

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace production --generator=run-pod/v1

If you don't see a command prompt, try pressing enter.

/ # wget -qO- --timeout=2 http://backend.development

wget: download timed out

Now we run another test with a pod that has matching labels and sits in the matching namespace. This time the connection succeeds.

kubectl run --rm -it frontend --image=alpine --labels app=webapp,role=frontend --namespace development --generator=run-pod/v1

If you don't see a command prompt, try pressing enter.

/ # wget -qO- http://backend

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

… removed for brevity…

</html>

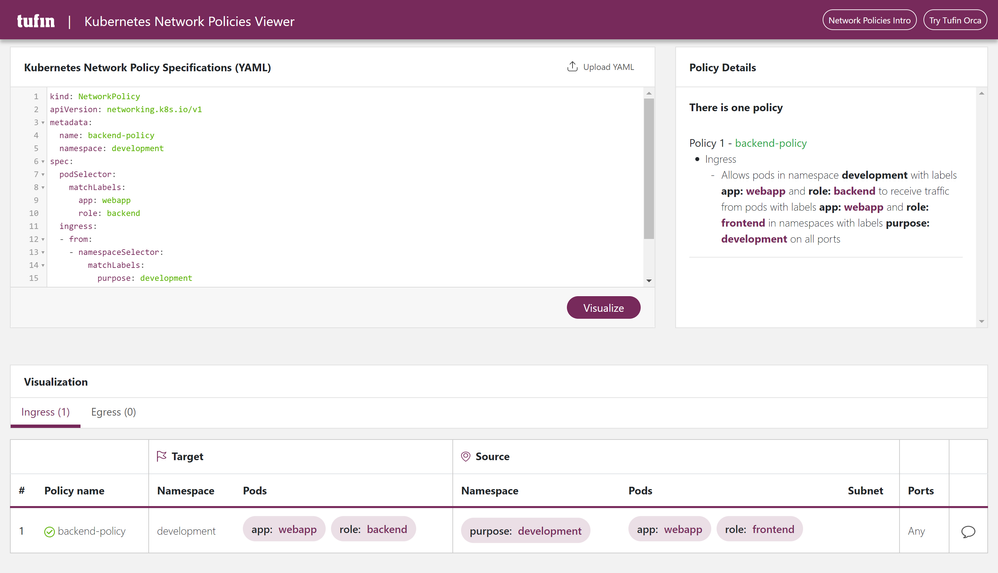

Note: Kubernetes Network Policies Viewer is a tool for ‘explaining’ the network policy using a designer view: https://orca.tufin.io/netpol/
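
Because namespaceSelector matches labels rather than names, Scenario 3 depends on the purpose label having been added to the namespace. On clusters running Kubernetes 1.21 or later, the API server automatically sets the label kubernetes.io/metadata.name on every namespace to the namespace's name, which gives a way to select a namespace by name without custom labels. The fragment below is a sketch under that version assumption; it was not part of the tests above.

```yaml
# Sketch: select the development namespace by its automatic name label
# (assumes Kubernetes 1.21+, where kubernetes.io/metadata.name is set on every namespace)
ingress:
- from:
  - namespaceSelector:
      matchLabels:
        kubernetes.io/metadata.name: development
    podSelector:
      matchLabels:
        app: webapp
        role: frontend
```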

Scenario 4: Allow outbound traffic to pods with matching labels

In all the above samples, we have worked only with ingress (inbound) traffic to the pod, so that the pod is reachable only by other matching pods. But we can also add rules for egress (outbound) traffic from the pod, so that the pod can talk only to other matching pods.

The syntax for adding an egress rule looks like the following. Note how we use ‘egress’ and ‘to’ the same way we used ‘ingress’ and ‘from’.

egress:
- to:
  - namespaceSelector:
      matchLabels:
        purpose: development
    podSelector:
      matchLabels:
        app: webapp
        role: database
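
To show where this fragment fits, here is a sketch of a complete policy built around it. It assumes the backend pod is the one whose outbound traffic we restrict, and that a database pod labeled app=webapp, role=database exists in the development namespace; neither the policy name nor the database pod is created elsewhere in this workshop. Once egress is restricted, DNS lookups are blocked too, so the sketch also allows port 53 to pods labeled k8s-app=kube-dns. That label is commonly used by the cluster DNS pods, but it is an assumption to verify on your own cluster.

```yaml
# Hypothetical example: restrict outbound traffic from the backend pod
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-egress-policy  # arbitrary name
  namespace: development
spec:
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  policyTypes:
  - Egress
  egress:
  - to:                        # rule 1: database pods in namespaces labeled purpose=development
    - namespaceSelector:
        matchLabels:
          purpose: development
      podSelector:
        matchLabels:
          app: webapp
          role: database
  - to:                        # rule 2: DNS, so that service names still resolve
    - namespaceSelector: {}
      podSelector:
        matchLabels:
          k8s-app: kube-dns    # assumption: label carried by the cluster DNS pods
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
```

Without the second rule, a lookup of a service name from the backend pod would most likely time out before any connection to the database is attempted.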

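Scenario 5: Allow inbound and outbound traffic from and to pods within the same namespace

A good practice, and an easy rule to implement, is to start by preventing pods from reaching pods in a different namespace. The policy below selects every pod in the namespace where it is applied and accepts inbound traffic only from pods in that same namespace. Note that it lists only Ingress under policyTypes, so outbound traffic is not restricted by this policy itself.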

# 5-network-policy-allow-within-namespace.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector: {}
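
To also cover the outbound direction named in the scenario title, one possible extension of the manifest above is sketched below: it adds Egress to policyTypes and an egress rule that mirrors the ingress one. This is an untested variation with an arbitrary policy name. Because it restricts egress, DNS resolution through the cluster DNS pods (which usually run in the kube-system namespace) would need an additional egress rule allowing port 53 to those pods.

```yaml
# Hypothetical variation: allow traffic only from and to pods in the same namespace
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace-both-ways   # arbitrary name
spec:
  podSelector: {}              # applies to every pod in the namespace
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - podSelector: {}          # any pod in this policy's namespace
  egress:
  - to:
    - podSelector: {}          # any pod in this policy's namespace
```

Like the original manifest, this one sets no metadata.namespace, so it takes effect in whichever namespace it is applied to.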

Conclusion

This was how to get started creating basic network policies to limit inbound and outbound traffic between pods. However, there are many other concepts and features not covered here, such as rules based on IP addresses and port numbers, rules based on HTTP methods (GET, POST, DELETE, PUT, etc.), and rules for Service Accounts.
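
As a pointer for the first of these, rules based on IP addresses and ports can be written with the standard NetworkPolicy API using ipBlock and ports. The sketch below is illustrative only: the policy name and the CIDR ranges are made-up values. Rules based on HTTP methods or Service Accounts are not part of the standard NetworkPolicy API and rely on implementation-specific resources, so they are not shown.

```yaml
# Hypothetical example: allow inbound TCP 80 to the backend from one address range only
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-allow-cidr     # arbitrary name
  namespace: development
spec:
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  ingress:
  - from:
    - ipBlock:
        cidr: 10.0.0.0/16      # made-up range
        except:
        - 10.0.5.0/24          # made-up sub-range to exclude
    ports:
    - protocol: TCP
      port: 80
```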

More resources

Official Calico documentation: https://docs.projectcalico.org/v3.9/security/

This is the article that inspired me to write this blog: https://docs.microsoft.com/en-us/azure/aks/use-network-policies

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.
