Video Transcript:

-What happens when you combine vision and language in an AI model? Well, today, I’ll show you, with a closer look at the latest vision AI model in Azure Cognitive Services, from using natural language to fetch visual content without needing any metadata, including location, to generating automatic and detailed descriptions of images using the model’s knowledge of the world, to how this extends even to video content, where it can serve up results based on your verbal description of what you want to search for. And keep watching until the end to see how you can easily customize the model and use it in your own apps.

-We’ve all heard of, and even tried, large language models like GPT from OpenAI, which is a foundational model pre-trained on billions of data parameters and provides a powerful way to build apps that interact with data using natural language. Now they say a picture tells a thousand words, and Cognitive Services Vision AI is another foundational model. It’s been updated to take advantage of Project Florence, Microsoft’s next generation AI for visual recognition. The model combines natural language with computer vision and is part of the Azure Cognitive Services suite of pre-trained AI capabilities. It can carry out a variety of vision-language tasks, including automatic image classification, object detection, and image segmentation. It’s multimodal, and you can programmatically use these capabilities in your apps. What makes it powerful, as I’ll show you, is its ability to visually process information within a wide range of situations, scenes and contexts, similar to the way we visually process things as humans.

-Now you can try these capabilities out in the Azure Vision Studio, and if you use the sample data, you don’t even need to sign in. And you can see we’ve created several examples for you to play with, including video summary and frame locator, searching photos with natural language, adding captions to images, as well as dense captions, and detecting common objects in images, all of which I’ll show you today.

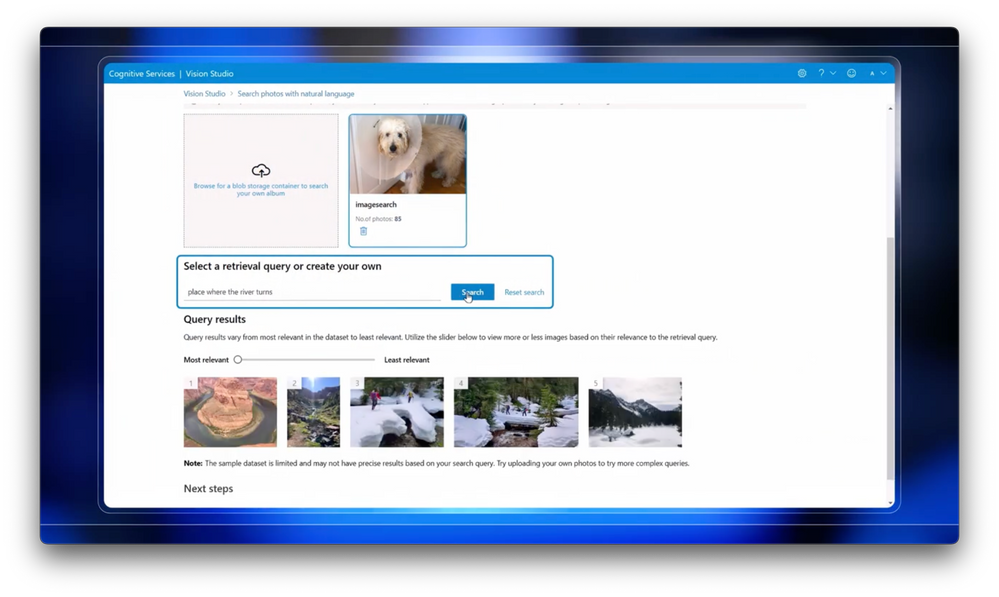

-And you can, of course, also bring your own visual material to try it out. In fact, I’m going to test it out with a dataset of pictures using my personal photos that include my pets and pictures taken on various trips. Now these aren’t labeled and there’s no metadata or GPS information associated with them. So I’ll do a search using natural language for “pictures with hoodoos,” which are a unique type of rock formation, and you can see it finds a few images from my trip to Bryce Canyon. So let’s try another one. I’ll search for “place where the river turns,” and it’s able to look through each photo and the first one’s pretty interesting. It’s actually a picture of the Horseshoe Bend in the Colorado River. Again, without there being any metadata associated with the image, it was able to recognize it because it’s a landmark.

-What makes this possible is what we call “open-world recognition.” This differs from previous “closed-world” training methods, which use a controlled environment to train the model on a limited set of meticulously labeled objects and scenes, so the model can only recognize the specific objects and scenes it’s trained on. Instead, with open-world recognition, the model is trained on billions of images across millions of object categories, allowing it to accurately recognize objects and scenes in a wide range of situations and contexts. And because we’ve combined Vision AI with our large language model, it’s capable of even greater image retrieval. Now as you expand and refine your text query in real time, you’re expanding the prompt, which it uses to better predict the intent and context of your search.
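
The transcript does not say how the service matches a text query to unlabeled photos. One common technique for this kind of retrieval is to embed the query and each image as vectors in a shared space and rank images by cosine similarity. The sketch below illustrates only that ranking step. The vectors, file names, and function names are hypothetical stand-ins, not output from the Azure model.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def rank_images(query_vector, image_vectors, top_k=3):
    """Sort images by similarity to the query, most similar first."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in image_vectors.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings; a real model would produce much longer vectors.
image_vectors = {
    "bryce_canyon.jpg": [0.9, 0.1, 0.0],
    "horseshoe_bend.jpg": [0.2, 0.9, 0.1],
    "cat_on_sofa.jpg": [0.0, 0.1, 0.95],
}
query_vector = [0.85, 0.2, 0.05]  # stands in for "pictures with hoodoos"

for name, score in rank_images(query_vector, image_vectors):
    print(f"{name}: {score:.3f}")
```

Because the comparison happens between vectors, no labels, metadata, or GPS information are needed on the photos themselves, which matches the behavior shown in the demo.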

-This also works the other way around to describe everything in an image, or what we call dense captioning. It can create descriptions of multiple areas of interest within an image. For example, in this image of a family barbecue, there are a number of things happening. And the model is able to accurately deconstruct the scene with specific descriptions of the person grilling, the girl in the background, and everything else it discovers within the image.
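
As a rough illustration of what an app might do with dense captions, the sketch below walks a result that pairs each caption with a bounding box and a confidence score. The field names (`denseCaptionsResult`, `values`, `text`, `confidence`, `boundingBox`) are an assumption modelled on the Image Analysis REST response, and the sample values are invented for the barbecue scene described above.

```python
# Invented sample data; field names are assumed, not taken from the video.
sample_response = {
    "denseCaptionsResult": {
        "values": [
            {"text": "a man grilling food", "confidence": 0.81,
             "boundingBox": {"x": 40, "y": 120, "w": 300, "h": 420}},
            {"text": "a girl standing in the background", "confidence": 0.74,
             "boundingBox": {"x": 410, "y": 90, "w": 120, "h": 260}},
            {"text": "a blurry object", "confidence": 0.31,
             "boundingBox": {"x": 5, "y": 5, "w": 30, "h": 30}},
        ]
    }
}


def summarize_regions(response, min_confidence=0.5):
    """Return (caption, (x, y, w, h)) for each region above the threshold."""
    values = response.get("denseCaptionsResult", {}).get("values", [])
    regions = []
    for item in values:
        if item["confidence"] < min_confidence:
            continue  # skip low-confidence descriptions
        box = item["boundingBox"]
        regions.append((item["text"], (box["x"], box["y"], box["w"], box["h"])))
    return regions


for caption, box in summarize_regions(sample_response):
    print(f"{caption} at {box}")
```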

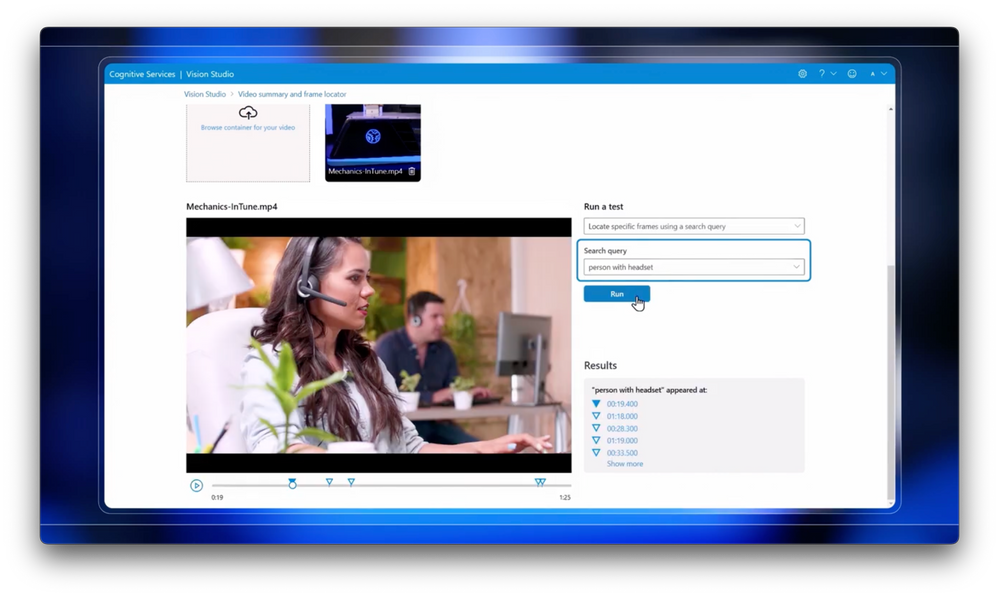

-Now this applies to video-based content as well. After all, a video is just a sequence of images, and using the model, we can break a video down to locate specific objects or traits in a frame of a video. Now we’ve uploaded a recent Microsoft Mechanics show to Vision Studio to try this out. You can run two types of tests: frame analysis, which is like what we did before with the image, and search, which looks across the entire video to locate specific frames. So I’m going to choose search test. I’ll paste in my first search, “person with headset,” and hit run, and it’s super accurate. It found the person we featured with the headset. So I’ll try another one, “person drinking from a cup,” and it takes me to that exact frame.
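
Since a video is treated as a sequence of images, locating "that exact frame" comes down to scoring sampled frames against the query and converting the best frame's index into a timestamp. The sketch below shows only that last step. The scores and the sampling rate are hypothetical, and how Vision Studio actually samples or scores frames is not described in the video.

```python
def best_frame_timestamp(frame_scores, frames_per_second):
    """Return (index, "MM:SS.ss") for the highest-scoring sampled frame."""
    if not frame_scores:
        raise ValueError("frame_scores must not be empty")
    best_index = max(range(len(frame_scores)), key=frame_scores.__getitem__)
    seconds = best_index / frames_per_second
    minutes, remainder = divmod(seconds, 60)
    return best_index, f"{int(minutes):02d}:{remainder:05.2f}"


# Hypothetical per-frame match scores for "person with headset",
# with one frame sampled per second of video.
scores = [0.12, 0.15, 0.91, 0.40]
print(best_frame_timestamp(scores, frames_per_second=1))
```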

-The experience also lets me leverage the combined vision and language model to get a detailed frame analysis describing the image. So I’ll search for another frame in this video with a bit more going on. So let’s type “people with hard hats.” I’ll pick the second frame and change the test type to “run frame analysis” and I’ll run it. And that’ll take a moment to analyze, and when it’s done, you can see a very comprehensive list of almost a dozen items discovered in the frame. And as I hover over the image, it calls out what it sees. Now image and video recognition capabilities can be used within your apps and services to improve accessibility and automatically generate alt text. Everything I’ve shown you works well out-of-the-box.

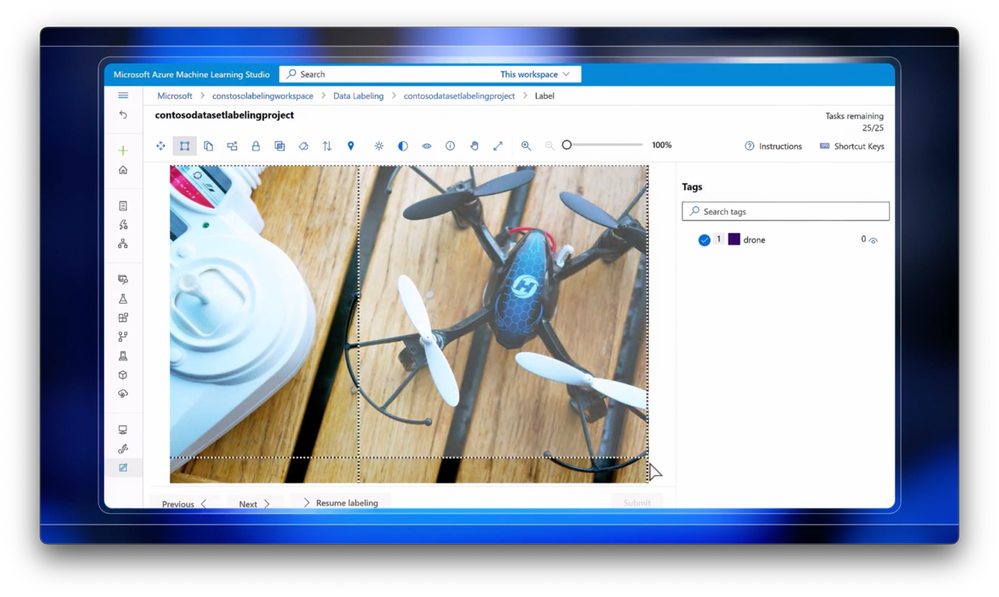

-You can also customize models using few-shot learning, where you can provide the model additional training data and context to guide it. In fact, let me show you how easy it is to train a custom model using the Azure Vision Studio. In my case, I want to train the model to detect quadcopter-style drones. In advance, I’ve created an image dataset in blob storage. And with the images loaded, I can label them by selecting the object and tagging the images with drone labels. I’ll do that a few times. And once more. And now that I’m finished with labeling, I’ll go back to Vision Studio to import the labeling data, which uses the common COCO file format. From there, I can import that file into the studio, point to the file, set my other parameters, and confirm.
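
For readers unfamiliar with the COCO file format mentioned above, the sketch below builds a minimal object-detection annotation file in the standard COCO layout: an `images` list, a `categories` list, and an `annotations` list whose `bbox` is `[x, y, width, height]`. The file names, sizes, and helper function are hypothetical, and the exact fields Vision Studio expects on import (for example image URLs or a different coordinate scale) are not specified in the video, so treat this as the generic layout only.

```python
import json


def make_coco(images, boxes, category_name="drone"):
    """Build a minimal COCO-style dict.

    images: list of (file_name, width, height)
    boxes:  list of (image_index, x, y, w, h) in pixels, one per labeled object
    """
    coco = {
        "images": [
            {"id": i + 1, "file_name": name, "width": width, "height": height}
            for i, (name, width, height) in enumerate(images)
        ],
        "categories": [{"id": 1, "name": category_name}],
        "annotations": [],
    }
    for j, (image_index, x, y, w, h) in enumerate(boxes):
        coco["annotations"].append({
            "id": j + 1,
            "image_id": image_index + 1,  # matches the id assigned above
            "category_id": 1,
            "bbox": [x, y, w, h],
            "area": w * h,
            "iscrowd": 0,
        })
    return coco


# Hypothetical dataset: two photos, one labeled drone in each.
coco = make_coco(
    images=[("drone_01.jpg", 1280, 720), ("drone_02.jpg", 1280, 720)],
    boxes=[(0, 400, 200, 320, 180), (1, 100, 90, 250, 140)],
)
print(json.dumps(coco, indent=2))
```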

-Now, with the training data labeled, I can head over to custom models and start the model training. So, I’ll start the training process by giving the model a name. I’ll select an object detection model, and provide it with my custom training dataset. Now this is a small dataset, so I’ll choose the smallest training budget, then review and start training. Once the model is trained, it produces a report with metrics that help me understand the model’s accuracy. Now I’m going to test out the model I just trained, and I’ll use a drone picture that was not in the original training set. And you’ll see it’s able to find the drone in the image. Now even though this is a small model test, model customization using this approach doesn’t need as much training data. In many cases, it takes just 1/10 of the data compared to other approaches.
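
The video does not say which metrics appear in the training report. A common building block of object-detection metrics is intersection over union (IoU), which measures how well a predicted box overlaps a labeled box. The sketch below computes it for boxes given as `(x, y, width, height)`. It is offered as background on how detection accuracy is typically judged, not as a description of the report itself.

```python
def iou(box_a, box_b):
    """Intersection over union for two (x, y, width, height) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping rectangle (zero if disjoint).
    overlap_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    overlap_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    intersection = overlap_w * overlap_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


labeled = (0, 0, 10, 10)
predicted = (5, 0, 10, 10)  # shifted right by half the box width
print(f"IoU: {iou(labeled, predicted):.3f}")
```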

-Now let’s do one more thing and look at how you can build this into a custom app. So this is a mobile app that we’ve created to help people with low vision, called Seeing AI, which has been updated to use our Florence-enhanced Azure Vision service. The app helps narrate the world around you. And you can see everything it can detect listed in the menu. We’ve tested the app out on a cluttered room to make it a bit more challenging. Now by pointing the back camera in the direction of the object, it can infer what it is seeing and describe it to you when you tap the location of the object on the screen. So here, we’ve tapped on the right side…

-[Computer] Television.

-And there, it audibly described the object correctly. And even with this less obvious example on the left…

-[Computer] A wine rack with bottles on top of it.

-It was able to easily recognize and add detail to what it was seeing. So now let me show you a sample of the code behind an app like this, and how it calls the Azure Vision service. So first, using a Computer Vision instance, as you’ll see in the code, you need to provide the key and the endpoint URL. Next, you select your image file for running the Vision AI model. Then you specify options, in this case, we show how to use captions with English language for the output of the image description. And last, you invoke the analyze method and then print the results and you’re done.
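
The on-screen code is not reproduced in this transcript. As a rough equivalent of the steps just described (key and endpoint, image file, caption option in English, analyze, print), the sketch below calls the service over REST using only the Python standard library instead of the SDK shown in the video. The route, `api-version` value, header name, and `captionResult` field are assumptions based on the Image Analysis 4.0 REST API, and the endpoint, key, and file name in the usage note are placeholders.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2023-10-01"  # assumed API version; check the current docs


def build_caption_request(endpoint, key, image_bytes, language="en"):
    """Prepare (but do not send) a caption request for raw image bytes."""
    query = urllib.parse.urlencode({
        "api-version": API_VERSION,
        "features": "caption",   # ask only for the image description
        "language": language,    # English output, as in the video
    })
    url = f"{endpoint.rstrip('/')}/computervision/imageanalysis:analyze?{query}"
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
    )


def extract_caption(response_body):
    """Pull (text, confidence) out of the JSON response body."""
    caption = json.loads(response_body).get("captionResult", {})
    return caption.get("text"), caption.get("confidence")


def describe_image(endpoint, key, image_path):
    """Read an image file, invoke analyze, and return the caption."""
    with open(image_path, "rb") as image_file:
        request = build_caption_request(endpoint, key, image_file.read())
    with urllib.request.urlopen(request) as response:
        return extract_caption(response.read())


# Offline check with an invented response body (no network call is made).
print(extract_caption('{"captionResult": {"text": "a television", "confidence": 0.9}}'))
```

With a real resource, the call would look like `describe_image("https://<your-resource>.cognitiveservices.azure.com", "<your-key>", "room.jpg")`, where all three arguments are placeholders you supply.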

-So that’s how you can combine both vision and language to build apps to visually process information in a wide range of situations, scenes and contexts. To learn more, check out aka.ms/CognitiveVision. Keep checking back to Microsoft Mechanics for the latest updates and we’ll see you next time.