- Home

- Microsoft FastTrack

- FastTrack for Azure

- Implementing Pagination with the Copy Activity in Microsoft Fabric

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Introduction:

APIs often return a large amount of data. Pagination allows you to retrieve a manageable subset of this data at a time, preventing unnecessary strain on resources and reducing the likelihood of timeouts or errors.

In this example, the client starts by requesting the first page of data from the server. The server responds with both the data and metadata indicating the current page, the total number of records. The client then proceeds to request subsequent pages of data until it reaches the last page. This approach allows for efficient data retrieval and processing without overwhelming the client or the server.

we want to get a file in ADLS containing all data from API without the need to use other activities like until/forEach, we want the Copy activity to perform all the pagination needed to collect all the data.

Prerequisites:

1. Basic knowledge in Rest API.

2. Workspace in Microsoft Fabric.

3. ADLS storage account.

API used:

https://pokeapi.co/api/v2/pokemon

default limit according to pokeapi documentation is 20 records per request.

In this tutorial, i want to limit records up to 500 records per request like so:

https://pokeapi.co/api/v2/pokemon?limit=500&offset=501

The initial API call will be made using the following URL:

pokeapi.co/api/v2/pokemon?limit=500&offset=0

Subsequently, the API calls will proceed as follows:

Second call:

pokeapi.co/api/v2/pokemon?limit=500&offset=501

Third call:

pokeapi.co/api/v2/pokemon?limit=500&offset=1002

In each successive request, the offset value will be incremented by 500 to retrieve the next set of records.

Steps:

Step1: Prepare your workspace.

In your fabric workspace, navigate to Data Factory component and add a pipeline to your workspace, after that drag a copy activity to your canvas.

Follow steps mentioned in MS documentation: Module 1 - Create a pipeline with Data Factory - Microsoft Fabric | Microsoft Learn

Step2: Configure the Copy activity.

1. Source settings:

data store type: External

connection: add new -> click on Rest connection.

Fill connection settings like so:

click on Create.

Relative URL:

pokemon?limit=500&offset=pageOffset

here I'm adding a value to the offset parameter, pageOffset is a variable that will get value from the pagination rule.

In Advanced: under Pagination Rule, add a value to the variable 'pageOffset'

which indicates that we will run from 1 to 1281 with an offset of 500, so each call to the API we will jump by 500 records as mentioned above.

2. destination

I would like to write data as a .csv file,named outputPartitioning.csv , i added my ADLS connection to my lake house, follow the steps in MS documentation: Create an Azure Data Lake Storage Gen2 shortcut - Microsoft Fabric | Microsoft Learn

3. Mapping tab:

After we configured both source and destination, now we need to map our data, so data from the API comes as a Json with these attributes:

{

"count": 1281,

"next": "https://pokeapi.co/api/v2/pokemon?offset=3&limit=2",

"previous": "https://pokeapi.co/api/v2/pokemon?offset=0&limit=1",

"results": [

{

"name": "ivysaur",

"url": "https://pokeapi.co/api/v2/pokemon/2/"

},

{

"name": "venusaur",

"url": "https://pokeapi.co/api/v2/pokemon/3/"

}

]

}

now we don't care about metadata provided by the API (like count,next,previous keys), we only want the results array.

click on import schemas, after that add in collection reference:

$['results']

delete extra results that you see below, and make sure to make name and url keys is saved as String in destination like so:

Step3: Run copy activity.

after running copy activity, you should see output file in your ADLS storage account and activity marked as success in the Fabric workspace.

Output:

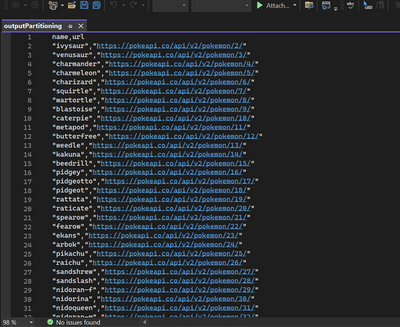

downloaded my file from my ADLS storage account and opened it in Visual Studio.

we can see that we got 1281 records as promised from the API, so pagination worked.

Links:

- Module 1 - Create a pipeline with Data Factory - Microsoft Fabric | Microsoft Learn

- Create an Azure Data Lake Storage Gen2 shortcut - Microsoft Fabric | Microsoft Learn

- Documentation - PokéAPI (pokeapi.co)

- How to configure REST in a copy activity - Microsoft Fabric | Microsoft Learn

Call-To-Action:

- Make sure to establish all connections in ADLS and in Fabric workspace.

- check MS documentation on pagination in copy activity.

- Please help us improve by sharing your valuable feedback.

- Follow me on LinkedIn: Sally Dabbah | LinkedIn

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.