As part of the Python advocacy team, I help maintain several open-source sample AI applications, like our popular RAG chat demo. Through that work, I’ve learned a lot about what makes LLM-powered apps feel fast, reliable, and responsive.

One of the most important lessons: use an asynchronous backend framework. Concurrency is critical for LLM apps, which often juggle multiple API calls, database queries, and user requests at the same time. Without async, your app may spend most of its time waiting, with each worker stuck on one user’s slow call while other users’ requests queue up behind it.

The need for concurrency

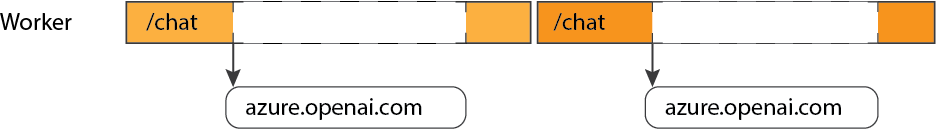

Why? Let’s imagine we’re using a synchronous framework like Flask. We deploy that to a server with gunicorn and several workers. One worker receives a POST request to the "/chat" endpoint, which in turn calls the Azure OpenAI Chat Completions API.

That API call can take several seconds to complete — and during that time, the worker is completely tied up, unable to handle any other requests. We could scale out by adding more CPU cores, workers, or threads, but that’s often wasteful and expensive.

Without concurrency, each request must be handled serially:

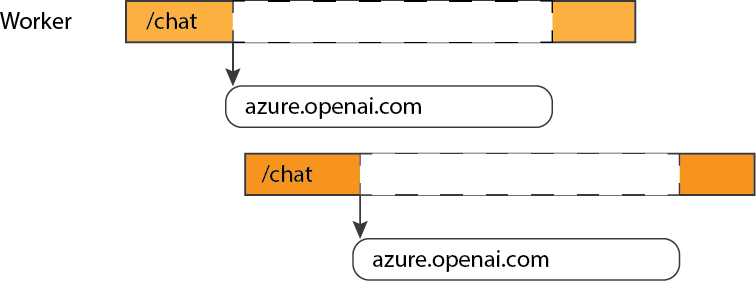

When your app relies on long, blocking I/O operations — like model calls, database queries, or external API lookups — a better approach is to use an asynchronous framework. With async I/O, the Python runtime can pause a coroutine that’s waiting for a slow response and switch to handling another incoming request in the meantime.

With concurrency, your workers stay busy and can handle new requests while others are waiting.
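
To make that interleaving concrete, here is a minimal sketch that uses only the standard library. The `handle_request` function and its timings are hypothetical stand-ins for real handlers, and `asyncio.sleep` plays the part of a slow model or database call:

```python
import asyncio


async def handle_request(name: str, wait_seconds: float, log: list[str]) -> None:
    """Hypothetical handler: the sleep stands in for a slow model or database call."""
    log.append(f"{name} started")
    await asyncio.sleep(wait_seconds)  # yields control to the event loop while waiting
    log.append(f"{name} finished")


async def serve_concurrently() -> list[str]:
    log: list[str] = []
    # Both requests are in flight at once on a single thread.
    await asyncio.gather(
        handle_request("request A", 0.2, log),
        handle_request("request B", 0.05, log),
    )
    return log


events = asyncio.run(serve_concurrently())
print(events)
```

Request B starts while request A is still waiting, and it finishes first because its simulated call is shorter. A synchronous worker would not have picked up B until A was done.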

Asynchronous Python backends

In the Python ecosystem, there are several asynchronous backend frameworks to choose from:

- Quart: the asynchronous version of Flask

- FastAPI: an API-centric, async-first framework (built on Starlette)

- Litestar: a batteries-included async framework (originally built on Starlette components, now independent of it)

- Django: not async by default, but includes support for asynchronous views

All of these can be good options depending on your project’s needs. I’ve written more about the decision-making process in another blog post.

As an example, let's see what changes when we port a Flask app to a Quart app.

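First, our handlers are now declared with async def, so calling one returns a Python coroutine rather than running immediately like a normal function. Inside the handler, we await anything that does I/O, such as reading the request body: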

    async def chat_handler():
        request_message = (await request.get_json())["message"]
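
If the coroutine idea is new to you, this small standard-library sketch may help. It is not Quart code: the `chat_handler` here is a simplified stand-in with no request object, used only to show what `async def` changes about calling a function:

```python
import asyncio
import inspect


async def chat_handler() -> str:
    # Stand-in for real work such as awaiting the request body
    await asyncio.sleep(0)
    return "hello"


# An async def defines a coroutine function...
is_coroutine_function = inspect.iscoroutinefunction(chat_handler)

# ...and calling it runs nothing yet; it only creates a coroutine object.
pending = chat_handler()
is_coroutine = inspect.iscoroutine(pending)

# The body executes only once an event loop drives the coroutine.
result = asyncio.run(pending)
print(is_coroutine_function, is_coroutine, result)
```

In a real app you never call `asyncio.run` yourself for handlers. The ASGI server owns the event loop and awaits your handler for each request.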

When deploying these apps, I often still use the Gunicorn production web server—but with the Uvicorn worker, which is designed for Python ASGI applications. Alternatively, you can run Uvicorn or Hypercorn directly as standalone servers.
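
As a rough sketch of that setup, a Gunicorn configuration file (itself a Python module) might look like the following. The bind address and worker count are assumptions to adjust for your own host, and depending on your Uvicorn version the worker class may live in the separate uvicorn-worker package instead, so check the documentation for the version you have installed:

```python
# gunicorn.conf.py
import multiprocessing

# Address and port to listen on (assumed values for illustration)
bind = "0.0.0.0:8000"

# Use the Uvicorn worker so each worker process runs an ASGI event loop
worker_class = "uvicorn.workers.UvicornWorker"

# Gunicorn's documented rule of thumb is (2 x cores) + 1; treat it as a starting point
workers = multiprocessing.cpu_count() * 2 + 1
```

You would then start the server with something like `gunicorn -c gunicorn.conf.py app:app`, where `app:app` is a hypothetical module and application name.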

Asynchronous API calls

To fully benefit from moving to an asynchronous framework, your app’s API calls also need to be asynchronous. That way, whenever a worker is waiting for an external response, it can pause that coroutine and start handling another incoming request.

Let's see what that looks like when using the official OpenAI Python SDK. First, we initialize the async version of the OpenAI client:

    import os

    import openai

    # token_provider is assumed to be defined elsewhere in the app
    openai_client = openai.AsyncOpenAI(
        base_url=os.environ["AZURE_OPENAI_ENDPOINT"] + "/openai/v1",
        api_key=token_provider,
    )

Then, whenever we make API calls with methods on that client, we await their results:

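    # The SDK parameter is model; for Azure OpenAI, pass the deployment name.
    # With stream=True, the awaited result is an async stream of response chunks.
    chat_stream = await openai_client.chat.completions.create(
        model=os.environ["AZURE_OPENAI_CHAT_DEPLOYMENT"],
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": request_message},
        ],
        stream=True,
    )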
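
Because the call above sets stream=True, the awaited result is consumed with `async for`. The sketch below shows that loop without needing network access: `fake_chat_stream` is a hypothetical stand-in that mimics the `choices[0].delta.content` shape of the SDK's streamed chunks, and `collect_answer` is an illustrative helper, not part of the sample apps:

```python
import asyncio
from types import SimpleNamespace


async def fake_chat_stream():
    """Hypothetical stand-in for the awaited result of create(..., stream=True)."""
    for text in ["Hello", ", ", "world", None]:
        await asyncio.sleep(0)  # pretend to wait on the network
        delta = SimpleNamespace(content=text)
        yield SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


async def collect_answer(stream) -> str:
    parts = []
    async for chunk in stream:
        # Some chunks carry no choices or no text, so guard before reading
        if chunk.choices and chunk.choices[0].delta.content:
            parts.append(chunk.choices[0].delta.content)
    return "".join(parts)


answer = asyncio.run(collect_answer(fake_chat_stream()))
print(answer)
```

Between chunks, the event loop is free to serve other requests, which is what makes streaming long responses cheap on an async backend.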

For the RAG sample, we also have calls to Azure services like Azure AI Search. To make those asynchronous, we first import the async variant of the credential and client classes in the aio module:

    from azure.identity.aio import DefaultAzureCredential
    from azure.search.documents.aio import SearchClient

Then, like with the OpenAI async clients, we must await results from any methods that make network calls:

    r = await self.search_client.search(query_text)

By ensuring that every outbound network call is asynchronous, your app can make the most of Python’s event loop — handling multiple user sessions and API requests concurrently, without wasting worker time waiting on slow responses.
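
To see why a single synchronous call matters, here is a small timing sketch that uses only the standard library. Both handlers are hypothetical: `time.sleep` stands in for a blocking client call made inside an async handler, and `asyncio.sleep` stands in for its awaited equivalent:

```python
import asyncio
import time


async def blocking_handler() -> None:
    time.sleep(0.1)  # synchronous wait: the event loop is stuck until it returns


async def async_handler() -> None:
    await asyncio.sleep(0.1)  # asynchronous wait: other handlers run meanwhile


async def time_three(handler) -> float:
    """Run three copies of a handler together and return the elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(handler(), handler(), handler())
    return time.perf_counter() - start


blocking_seconds = asyncio.run(time_three(blocking_handler))
async_seconds = asyncio.run(time_three(async_handler))
print(f"blocking: {blocking_seconds:.2f}s, async: {async_seconds:.2f}s")
```

The blocking version takes roughly three times as long, because each handler holds the event loop for its whole wait. Declaring a handler with async def is not enough on its own. The calls inside it need to be awaitable too.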

Sample applications

We’ve already linked to several of our samples that use async frameworks, but here’s a longer list so you can find the one that best fits your tech stack:

| Repository | App purpose | Backend | Frontend |
| --- | --- | --- | --- |
| azure-search-openai-demo | RAG with AI Search | Python + Quart | React |
| rag-postgres-openai-python | RAG with PostgreSQL | Python + FastAPI | React |
| openai-chat-app-quickstart | Simple chat with Azure OpenAI models | Python + Quart | plain JS |
| openai-chat-backend-fastapi | Simple chat with Azure OpenAI models | Python + FastAPI | plain JS |
| deepseek-python | Simple chat with Azure AI Foundry models | Python + Quart | plain JS |

Microsoft
