Harnessing the Power of Open-Source Models for Image Retrieval in Azure Machine Learning

Context

Recent advances in vision-language pretraining and in self-supervised pretraining for vision have led to very powerful representation models, many of which are open-source. Together with efficient algorithms for indexing and search, they constitute highly effective building blocks for text-to-image and image-to-image retrieval. In this post, we showcase a complete image retrieval solution in Azure Machine Learning (AzureML), and offer insight into how well the most prominent open-source models work for retrieval.

Image Retrieval in AzureML

We created a notebook that uses the CLIP model for text-to-image retrieval on a sample dataset. The notebook demonstrates how to deploy CLIP from the AzureML model catalog, how to use the real-time inference endpoint to populate an Azure AI Search Index, and how to retrieve results from this index. Note that the model is available in Azure AI Studio as well.

The notebook performs the following high-level steps:

1. Deploy the CLIP-B model from the AzureML model catalog (or the Azure AI Studio model catalog) to an online endpoint and create an Azure AI Search Index

```python
import requests

SEARCH_SERVICE_NAME = "<SEARCH SERVICE NAME>"
SERVICE_ADMIN_KEY = "<admin key from the search service in Azure Portal>"
INDEX_NAME = "fridge-objects-index"
API_VERSION = "2023-07-01-Preview"

CREATE_INDEX_REQUEST_URL = "https://{search_service_name}.search.windows.net/indexes?api-version={api_version}".format(
    search_service_name=SEARCH_SERVICE_NAME, api_version=API_VERSION
)

create_request = {
    "name": INDEX_NAME,
    "fields": [ ... ],
    "vectorSearch": { ... }
}

response = requests.post(
    CREATE_INDEX_REQUEST_URL,
    json=create_request,
    headers={"api-key": SERVICE_ADMIN_KEY},
)
```

2. Populate the search index with image embeddings created by sending images to the CLIP endpoint

```python
import json
import os

from tqdm.auto import tqdm

# dataset_dir, make_request_images, workspace_ml_client, online_endpoint_name,
# deployment_name and _REQUEST_FILE_NAME are assumed to be defined elsewhere in the notebook.

ADD_DATA_REQUEST_URL = "https://{search_service_name}.search.windows.net/indexes/{index_name}/docs/index?api-version={api_version}".format(
    search_service_name=SEARCH_SERVICE_NAME,
    index_name=INDEX_NAME,
    api_version=API_VERSION,
)
MAX_RETRIES = 3

# collect all .jpg files under the dataset directory
image_paths = [
    os.path.join(dp, f)
    for dp, dn, filenames in os.walk(dataset_dir)
    for f in filenames
    if os.path.splitext(f)[1] == ".jpg"
]

for idx, image_path in enumerate(tqdm(image_paths)):
    ID = idx
    FILENAME = image_path

    # get embedding from endpoint
    embedding_request = make_request_images(image_path)
    response = None
    request_failed = False
    IMAGE_EMBEDDING = None
    for r in range(MAX_RETRIES):
        try:
            response = workspace_ml_client.online_endpoints.invoke(
                endpoint_name=online_endpoint_name,
                deployment_name=deployment_name,
                request_file=_REQUEST_FILE_NAME,
            )
            response = json.loads(response)
            IMAGE_EMBEDDING = response[0]["image_features"]
            break
        except Exception as e:
            print(f"Unable to get embeddings for image {FILENAME}: {e}")
            print(response)
            if r == MAX_RETRIES - 1:
                print(f"attempt {r + 1} failed, reached retry limit")
                request_failed = True
            else:
                print(f"attempt {r + 1} failed, retrying")

    # add embedding to index (skipped if every attempt failed)
    if IMAGE_EMBEDDING:
        add_data_request = {
            "value": [
                {
                    "id": str(ID),
                    "filename": FILENAME,
                    "imageEmbeddings": IMAGE_EMBEDDING,
                    "@search.action": "upload",
                }
            ]
        }
        response = requests.post(
            ADD_DATA_REQUEST_URL,
            json=add_data_request,
            headers={"api-key": SERVICE_ADMIN_KEY},
        )
```
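
The loop above sends one document per request. For larger datasets it may be worth grouping several documents into each request body, since the "value" list can hold more than one entry. The following is a minimal sketch of such grouping, not part of the notebook: `build_upload_batches` is a hypothetical helper, the default batch size of 1000 is an assumption about the service's per-request limit that should be checked for your service tier, and the demo embeddings are made up.

```python
from typing import Any, Dict, Iterable, Iterator, List, Tuple


def build_upload_batches(
    records: Iterable[Tuple[str, List[float]]],
    batch_size: int = 1000,  # assumed per-request document limit; verify for your service
) -> Iterator[Dict[str, Any]]:
    """Yield request bodies that each hold up to `batch_size` documents.

    `records` is an iterable of (filename, embedding) pairs. Document ids are
    assigned sequentially, mirroring the `str(idx)` ids used in the loop above.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    batch: List[Dict[str, Any]] = []
    for next_id, (filename, embedding) in enumerate(records):
        batch.append(
            {
                "id": str(next_id),
                "filename": filename,
                "imageEmbeddings": embedding,
                "@search.action": "upload",
            }
        )
        if len(batch) == batch_size:
            yield {"value": batch}
            batch = []
    if batch:  # flush the final, possibly smaller, batch
        yield {"value": batch}


# Tiny demonstration with made-up 3-dimensional embeddings.
demo_records = [(f"img_{i}.jpg", [0.1 * i, 0.2, 0.3]) for i in range(5)]
demo_batches = list(build_upload_batches(demo_records, batch_size=2))
print([len(b["value"]) for b in demo_batches])
```

Each yielded dictionary could then be posted to `ADD_DATA_REQUEST_URL` in the same way as `add_data_request` above.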

3. Query the index with text embeddings created by sending a text query to the CLIP endpoint

```python
TEXT_QUERY = "a photo of a milk bottle"
K = 5  # number of results to retrieve


def make_request_text(text_sample):
    request_json = {
        "input_data": {
            "columns": ["image", "text"],
            "data": [["", text_sample]],
        }
    }

    with open(_REQUEST_FILE_NAME, "wt") as f:
        json.dump(request_json, f)


make_request_text(TEXT_QUERY)
response = workspace_ml_client.online_endpoints.invoke(
    endpoint_name=online_endpoint_name,
    deployment_name=deployment_name,
    request_file=_REQUEST_FILE_NAME,
)
response = json.loads(response)
QUERY_TEXT_EMBEDDING = response[0]["text_features"]

QUERY_REQUEST_URL = "https://{search_service_name}.search.windows.net/indexes/{index_name}/docs/search?api-version={api_version}".format(
    search_service_name=SEARCH_SERVICE_NAME,
    index_name=INDEX_NAME,
    api_version=API_VERSION,
)

search_request = {
    "vectors": [{"value": QUERY_TEXT_EMBEDDING, "fields": "imageEmbeddings", "k": K}],
    "select": "filename",
}

response = requests.post(
    QUERY_REQUEST_URL, json=search_request, headers={"api-key": SERVICE_ADMIN_KEY}
)
neighbors = json.loads(response.text)["value"]
```

4. Visualize results

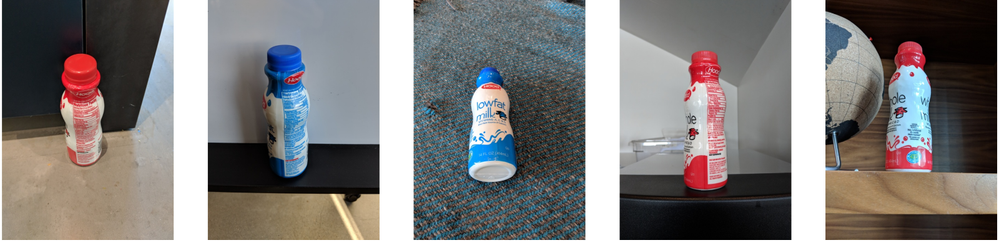

Text query: “A photo of a milk bottle”
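
Before plotting, it can help to inspect the returned hits as text. The sketch below is illustrative only: `summarize_neighbors` is a hypothetical helper, the example entries are made up, and it assumes each hit carries the selected `filename` field plus the `@search.score` value that the search service attaches to results.

```python
from typing import Any, Dict, List


def summarize_neighbors(neighbors: List[Dict[str, Any]]) -> List[str]:
    """Return one line per hit: rank, similarity score (if present), and filename."""
    lines = []
    for rank, hit in enumerate(neighbors, start=1):
        score = hit.get("@search.score")
        score_text = f"{score:.3f}" if score is not None else "n/a"
        lines.append(f"{rank}. score={score_text} file={hit.get('filename', '?')}")
    return lines


# Made-up entries standing in for the real `neighbors` list from step 3.
example_neighbors = [
    {"@search.score": 0.62, "filename": "milk_bottle/12.jpg"},
    {"@search.score": 0.6, "filename": "milk_bottle/7.jpg"},
]
for line in summarize_neighbors(example_neighbors):
    print(line)
```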

What Model to Use for Image Retrieval?

The example above uses CLIP-B, an efficient model that is not always the most accurate. To provide high-level guidance for image retrieval applications, we characterized the performance of prominent multimodal and vision-only models. We evaluated several models on text-to-image and image-to-image retrieval tasks and compared them against the state of the art on well-known datasets. Our goal is to inform model choice, i.e. to indicate which models are likely to do well; the state of the art serves as a yardstick for model performance.

We used the metric recall at k (R@k), defined as the fraction of queries for which the top k results include at least one relevant result. It is a standard metric for the datasets we considered and a popular metric for image retrieval in general. For each dataset, the training-validation-test split followed the existing literature.
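
As a concrete illustration of the metric, here is a minimal sketch of R@k given ranked result lists. It is not the evaluation code behind the numbers in this post; `recall_at_k` and the toy data are hypothetical.

```python
from typing import Hashable, Sequence, Set


def recall_at_k(
    ranked_results: Sequence[Sequence[Hashable]],
    relevant_items: Sequence[Set[Hashable]],
    k: int,
) -> float:
    """Fraction of queries whose top-k results contain at least one relevant item.

    ranked_results[i] is the ranked list of retrieved ids for query i and
    relevant_items[i] is the set of ids considered relevant for that query.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    if len(ranked_results) != len(relevant_items):
        raise ValueError("one set of relevant items is required per query")
    if not ranked_results:
        raise ValueError("at least one query is required")
    hits = sum(
        1
        for ranked, relevant in zip(ranked_results, relevant_items)
        if any(item in relevant for item in ranked[:k])
    )
    return hits / len(ranked_results)


# Toy example: three queries, each with one relevant item.
toy_ranked = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
toy_relevant = [{"a"}, {"f"}, {"z"}]
print(recall_at_k(toy_ranked, toy_relevant, k=1))  # only the first query hits
print(recall_at_k(toy_ranked, toy_relevant, k=3))  # the first two queries hit
```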

Text-to-image

For text-to-image retrieval, a widely used dataset in the research literature is Flickr30K. We also used RSICD, a satellite imagery dataset, since it has attracted significant attention from the remote sensing community. The table below shows the results of evaluation on Flickr30K and RSICD:

| Dataset and metric | Weights | SOTA | CLIP-B/32 | CLIP-L/14@336 | BLIP2 |
|---|---|---|---|---|---|
| Flickr30K (training: 30,000; validation: 1,000; test: 1,000), R@1/R@5 | pretrained | 89.7/98.1 [1] | N/A | 68.7/90.6 [2] | 89.7/98.1 [1] |
| | finetuned | 90.3/98.7 [3] | N/A | 86.5/97.9 [4] | N/A |
| RSICD (training: 8,734; validation: 1,039; test: 1,094), R@1/R@5 | pretrained | 6.4/19.8 [5] | 6.2/17.7 | 6.2/21.6 | 10.2/29.5 |
| | finetuned | 11.6/33.9 [6] | 12.0/34.6 | 14.9/39.9 | 18.4/47.1 |

For Flickr30K, we only report the numbers already available in the literature, while for RSICD we evaluated the models ourselves, both in their pretrained state and after finetuning. During finetuning, we froze the text-related parts of the models (CLIP’s text encoder and BLIP2’s text decoder/encoder+decoder) and kept each model's original loss.

For both datasets, pretrained BLIP2 offers a competitive alternative to finetuning, as it is close behind the state-of-the-art finetuned results (R@1 of 89.7 vs. 90.3 on Flickr30K and 10.2 vs. 11.6 on RSICD). Finetuned on RSICD, both BLIP2 and CLIP-L/14@336 achieve significant gains over the state of the art.

Image-to-image

For image-to-image retrieval, two very popular datasets in the metric learning literature are CUB200 and SOP. The table below shows the results of evaluation on these two datasets:

| Dataset and metric | Weights | SOTA | CLIP-L/14@336 (vis. enc.) | BLIP2 (vis. enc.) | ViTL | DinoV2G |
|---|---|---|---|---|---|---|
| CUB200 (training: 5,994; test: 5,794), R@1/R@4 | pretrained | - | 77.1/93.9 | 76.4/90.9 | 79.5/91.7 | 89.5/95.2 |
| | finetuned | 87.8 [7]/94.4 [8] | 86.3/94.7 | 88.9/94.9 | 88.4/94.8 | 90.5/95.4 |
| SOP (training: 59,551; test: 60,502), R@1/R@10 | pretrained | - | 67.8/79.2 | 68.2/83.9 | 58.0/73.1 | 52.9/71.5 |
| | finetuned | 86.5/95.2 [9] | 90.9/97.5 | 91.8/97.8 | 88.0/96.0 | 91.0/97.4 |
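
For class-labeled datasets such as these, R@k is commonly computed by using each test image as a query against all other test images and counting a hit when any of the k nearest neighbors shares the query's class. The sketch below illustrates that protocol under stated assumptions: cosine similarity as the ranking score, the query excluded from its own results, and made-up 2-dimensional embeddings. It is not the evaluation code used for the table, and `image_recall_at_k` is a hypothetical name.

```python
import math
from typing import Hashable, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def image_recall_at_k(
    embeddings: Sequence[Sequence[float]],
    labels: Sequence[Hashable],
    k: int,
) -> float:
    """R@k where every image queries all other images and same-class neighbors count as hits."""
    n = len(embeddings)
    if n < 2 or len(labels) != n or k < 1:
        raise ValueError("need k >= 1 and at least two embeddings with one label each")
    hits = 0
    for i in range(n):
        # Rank every other image by similarity to the query, most similar first.
        others = [j for j in range(n) if j != i]
        others.sort(key=lambda j: cosine_similarity(embeddings[i], embeddings[j]), reverse=True)
        if any(labels[j] == labels[i] for j in others[:k]):
            hits += 1
    return hits / n


# Made-up embeddings: the last "chair" sits closer to the birds than to the other chairs.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.8, 0.3]]
toy_labels = ["bird", "bird", "chair", "chair", "chair"]
print(image_recall_at_k(toy_embeddings, toy_labels, k=1))
print(image_recall_at_k(toy_embeddings, toy_labels, k=3))
```

The brute-force ranking here is quadratic in the number of images, which is fine for illustration but too slow for datasets the size of SOP.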

We finetuned using the ProxyAnchor loss [10] with training settings similar to those used in the metric learning literature. In addition to vision-pretrained backbones (ViTL and DinoV2G), we used the visual encoders of vision-language pretrained models (CLIP-L/14@336 and BLIP2).

State-of-the-art methods achieve high recall on CUB200 and SOP, but after finetuning the models we investigated match or surpass them in almost all cases (the exception is CLIP-L R@1 on CUB200). This is not surprising, since they are larger than the ViT-S backbone used by most state-of-the-art methods. In some cases, even the pretrained versions are very competitive, e.g. DinoV2G on CUB200. It is interesting to note that on SOP, CLIP-L is highly competitive with DinoV2G, a much larger model, which highlights the value of text labels. BLIP2 exceeds the performance of CLIP-L, but only by a small amount.
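
For reference, the loss from [10] can be written as below. This restates the formulation in the paper rather than our training code. Here $X$ is the set of embeddings in a batch, $P$ the set of all class proxies, $P^+$ the proxies with at least one same-class sample in the batch, $X_p^+$ and $X_p^-$ the batch embeddings of the same class as proxy $p$ and of other classes, $s(\cdot,\cdot)$ cosine similarity, $\alpha$ a scaling factor, and $\delta$ a margin.

```latex
\ell(X) =
  \frac{1}{|P^+|} \sum_{p \in P^+}
    \log\Bigl(1 + \sum_{x \in X_p^+} e^{-\alpha\,(s(x,p) - \delta)}\Bigr)
  + \frac{1}{|P|} \sum_{p \in P}
    \log\Bigl(1 + \sum_{x \in X_p^-} e^{\alpha\,(s(x,p) + \delta)}\Bigr)
```

The first term pulls same-class embeddings toward their proxy, and the second pushes embeddings of other classes away from it.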

Conclusion

The CLIP-L@336 and BLIP2 models offer strong performance for both text-to-image and image-to-image search, especially when finetuned. In some cases, pretrained BLIP2 and pretrained DinoV2G have very competitive results. By supporting inference with strong open-source models and by providing an effective search infrastructure, AzureML allows users easy access to high quality embeddings. We are working on adding finetuning capabilities – let us know how else we can help you build your search application!

Code and Additional Training Details

For text-to-image retrieval, the finetuning setup, including the data loading and data augmentation, was largely inspired by a HuggingFace blog post [11]. We used the HuggingFace Transformers trainer and ran hyperparameter sweeps over learning rate and weight decay using AzureML hyperparameter tuning. Training was done on a single machine.

For image-to-image retrieval, we finetuned with the code in the HIER repository for metric learning [12]. Minimal hyperparameter optimization was performed and training was done on a single machine.

References

[1] BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models

[2] Learning Transferable Visual Models From Natural Language Supervision

[3] Image as a Foreign Language: BEIT Pretraining for Vision and Vision-Language Tasks

[4] Learning Customized Visual Models With Retrieval-Augmented Knowledge

[5] RS5M: A Large Scale Vision-Language Dataset for Remote Sensing Vision-Language Foundation Model

[6] Parameter-Efficient Transfer Learning for Remote Sensing Image-Text Retrieval

[7] Deep Factorized Metric Learning

[8] HIER: Metric Learning Beyond Class Labels via Hierarchical Regularization

[9] STIR: Siamese Transformer for Image Retrieval Postprocessing

[10] Proxy Anchor Loss for Deep Metric Learning

[11] Fine tuning CLIP with Remote Sensing (Satellite) images and captions (huggingface.co)

[12] HIER: Metric Learning Beyond Class Labels via Hierarchical Regularization
