Empower Azure Video Indexer Insights with your own models

Overview

Azure Video Indexer (AVI) offers a comprehensive suite of models that extract diverse insights from the audio, transcript, and visuals of videos. Recognizing the boundless potential of AI models and the unique requirements of different domains, AVI now enables integration of custom models. This enhances video analysis, providing a seamless experience both in the user interface and through API integrations.

The Bring Your Own (BYO) capability is the mechanism for integrating custom models. Users provide AVI with the API for calling their model, define the input via an Azure Function, and specify the integration type. Detailed instructions are available here.

As an example, consider the automotive industry: users with many car videos can now distinguish between car types. Building on the Car class of AVI's Object Detection insight, the system has been extended to recognize two new sub-classes: Jeep and Family Car. The extension uses a model developed in Azure AI Vision Studio with Florence, based on few-shot learning. Because it builds on the foundational Florence vision model, this technique can train new classes from a minimal set of examples, approximately 15 images per class.

The BYO capability in AVI allows users to generate new insights efficiently and accurately by building on existing insights such as object detection and tracking. Instead of starting from scratch, users begin with the list of cars that AVI has already detected and tracked throughout the video, each with a representative image. Only a small number of requests to the new Florence-based model are then needed to differentiate between the cars according to their type.
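
To make the idea of "one representative image per tracked car" concrete, here is a minimal sketch in Python. It assumes a simplified, hypothetical insights layout in which `detectedObjects` is a list of entries with `id`, `type`, and `thumbnailId` keys; the real insights JSON may be nested differently and may use other field names, so treat every name below as an assumption.

```python
from typing import Any


def select_tracked_cars(insights: dict[str, Any], object_type: str = "Car") -> list[dict[str, Any]]:
    """Return one entry per tracked object of the requested type.

    Assumed (hypothetical) layout: insights["detectedObjects"] is a list of
    dicts that carry "id", "type" and "thumbnailId" keys.
    """
    selected = []
    for obj in insights.get("detectedObjects", []):
        if str(obj.get("type", "")).lower() != object_type.lower():
            continue  # not the class we want to refine
        if not obj.get("thumbnailId"):
            continue  # no representative image to send to the custom model
        selected.append({"id": obj["id"], "thumbnailId": obj["thumbnailId"]})
    return selected


# Illustrative data only, not real Video Indexer output.
sample_insights = {
    "detectedObjects": [
        {"id": 1, "type": "Car", "thumbnailId": "thumb-a"},
        {"id": 2, "type": "Person", "thumbnailId": "thumb-b"},
        {"id": 3, "type": "Car", "thumbnailId": "thumb-c"},
        {"id": 4, "type": "Car", "thumbnailId": None},
    ]
}

cars = select_tracked_cars(sample_insights)
print(cars)
```

Each selected entry keeps the original object ID, so whatever label the custom model returns can later be attached to the same tracked car.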

Note: This article is accompanied by a step-by-step code-based tutorial. Please visit the official Azure Video Indexer “Bring Your Own” Sample under the Video Indexer Samples...

High Level Design and Flow

To demonstrate how to build a customized AI pipeline, we use the following flow, which combines several Video Indexer components and integrations:

1. Users employ their existing Azure Video Indexer account on Azure to index a video, either through the Azure Video Indexer Portal or the Azure Video Indexer API.

2. By default, the Video Indexer account is configured with Diagnostic Settings that publish Audit and Event data to a chosen destination, such as a Storage Account, a Log Analytics workspace, or Event Hubs. In this workflow, we direct the event stream to an Event Hubs namespace, which provides durability and persistence and makes the data accessible to a wide range of consumers. For more information on the available options for collecting Video Indexer events, see Monitor Azure Video Indexer | Microsoft Learn.

3. Indexing operation events (such as “Video Uploaded,” “Video Indexed,” and “Video Re-Indexed”) are streamed to Azure Event Hubs. Azure Event Hubs enhances the reliability and persistence of event processing and supports multiple consumers through “Consumer Groups.”

4. A dedicated Azure Function, created within the customer's Azure Subscription, is triggered when events arrive from Event Hubs. The function waits specifically for the “Indexing-Complete” event and then selects video frames for processing based on criteria such as object detection, cropped images, and insights. This compute layer forwards the selected frames to the custom model via the Cognitive Services Vision API and receives the classification results. In this example, it sends the crop of the representative image for each tracked car in the video.

Note: The integration process involves strategic selection of video frames for analysis, leveraging AVI's car detection and tracking capabilities, to only process representative cropped images of each tracked car in the custom model.

5. The compute layer (Azure Function) then sends the aggregated results from the custom model back to the Azure Video Indexer API, updating the existing indexing data with the Update Video Index API call.

6. The enriched insights are subsequently displayed on the Video Indexer Portal. The ID of each result from the custom model matches the ID of the corresponding object in the original insights JSON.
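
Steps 4 and 5 can be sketched roughly as follows. This is an illustrative outline rather than the code of the official sample. It assumes that each Event Hubs message is a JSON document with a `records` array and that each record has an `operationName` and a `properties.videoId` field; the operation name `IndexingFinished` is a hypothetical placeholder for the indexing-complete event. The custom model is represented by an injected `classify` callable, so no real Vision API call or Update Video Index call is made here.

```python
import json
from typing import Callable

# Hypothetical operation name; check the events in your own stream.
INDEXING_DONE = "IndexingFinished"


def indexed_video_ids(event_body: str, operation_name: str = INDEXING_DONE) -> list[str]:
    """Extract the IDs of videos whose indexing has completed from one message."""
    payload = json.loads(event_body)
    ids = []
    for record in payload.get("records", []):
        if record.get("operationName") != operation_name:
            continue  # ignore uploads, re-index requests, and other events
        video_id = record.get("properties", {}).get("videoId")
        if video_id:
            ids.append(video_id)
    return ids


def classify_crops(
    crops: list[dict],
    classify: Callable[[str], tuple[str, float]],
) -> list[dict]:
    """Call the custom model once per crop and keep the original object ID."""
    results = []
    for crop in crops:
        label, confidence = classify(crop["thumbnailId"])
        results.append({"id": crop["id"], "type": label, "confidence": confidence})
    return results


# Illustrative message and stand-in classifier, not real service output.
message = json.dumps({
    "records": [
        {"operationName": "UploadStarted", "properties": {"videoId": "abc123"}},
        {"operationName": "IndexingFinished", "properties": {"videoId": "abc123"}},
    ]
})


def fake_classifier(thumbnail_id: str) -> tuple[str, float]:
    labels = {"thumb-a": ("Family Car", 0.91), "thumb-c": ("Jeep", 0.88)}
    return labels[thumbnail_id]


video_ids = indexed_video_ids(message)
results = classify_crops(
    [{"id": 1, "thumbnailId": "thumb-a"}, {"id": 3, "thumbnailId": "thumb-c"}],
    fake_classifier,
)
print(video_ids)
print(results)
```

Because every result carries the ID of the tracked object it came from, the aggregated list can be attached to the existing index without any additional matching logic.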

Note: For a more in-depth, step-by-step tutorial accompanied by a code sample, please consult the official Azure Video Indexer GitHub Sample under the “Bring-Your-Own” section.

Result Analysis

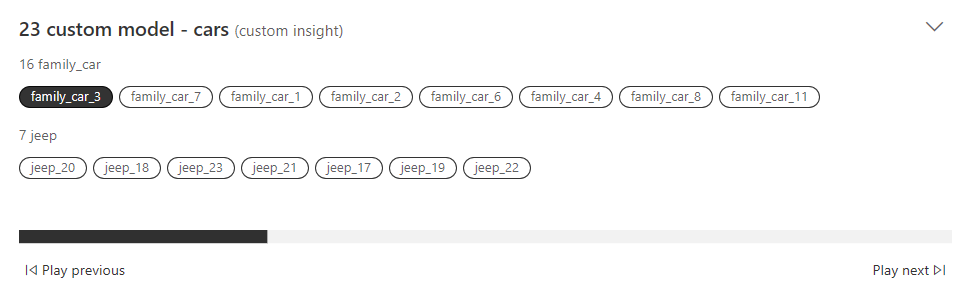

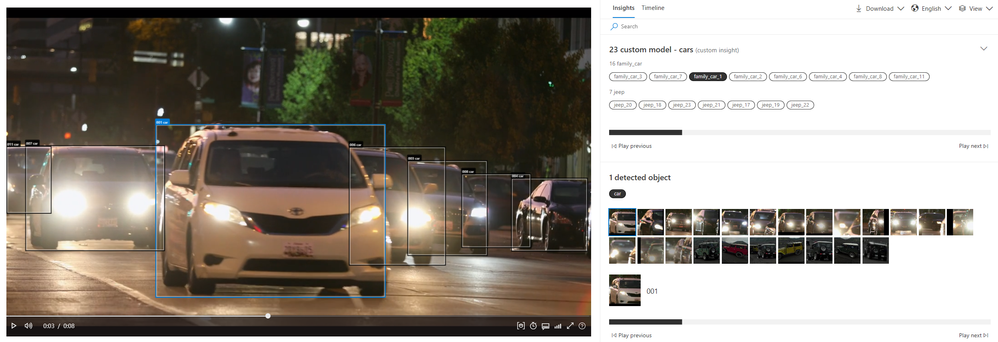

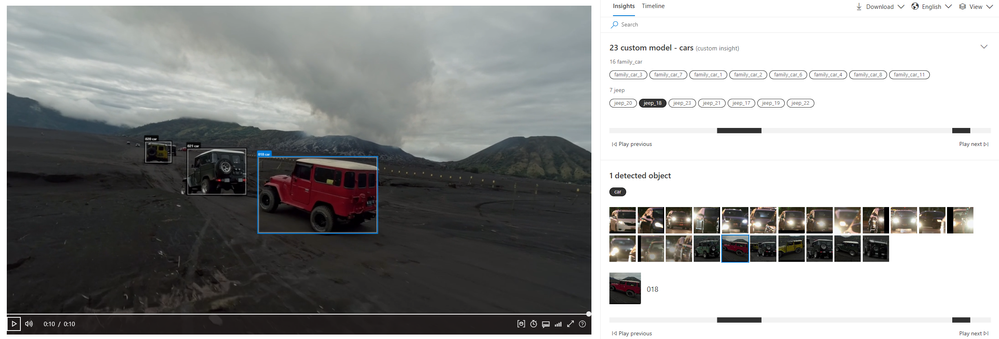

The result is a new insight in the user interface that shows the output of the custom model. The integration detects new sub-classes of objects and enriches the video with additional, user-specific insights. In the examples provided, each car is classified individually: for instance, the white car is identified as a family car (Figure 3), whereas the red car is categorized as a jeep (Figure 4).

Conclusions

With only a handful of API calls to the custom model, the system analyzes every car featured in the video. Sending only selected images to the custom model, chosen with the help of AVI's existing insights, both reduces cost and improves overall efficiency. It gives users a more complete analysis tool and opens the door to further customization and AI integration.
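
The saving can be illustrated with simple arithmetic. The sketch below compares calling the custom model on every sampled frame with calling it once per tracked car. The video length, sampling rate, and number of cars are made-up illustrative values, not measurements from the article.

```python
def calls_per_frame(duration_s: float, sampled_fps: float) -> int:
    """Number of model calls if every sampled frame were classified."""
    return int(duration_s * sampled_fps)


def calls_per_tracked_object(tracked_cars: int) -> int:
    """Number of model calls if only one representative crop per car is sent."""
    return tracked_cars


# Illustrative assumptions: a 120-second video sampled at 1 frame per second,
# in which 5 distinct cars were tracked.
frame_calls = calls_per_frame(120, 1.0)
object_calls = calls_per_tracked_object(5)
reduction = 1 - object_calls / frame_calls

print(f"{frame_calls} vs {object_calls} calls ({reduction:.0%} fewer)")
```

Under these assumptions the per-object approach needs 5 calls instead of 120; the actual ratio depends on the video and on how many objects are tracked.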
