Comparative study of Azure OpenAI GPT models and LLAMA 2

Introduction to LLMs

Large Language Models (LLMs) have transformed natural language processing. They are neural networks with tens of millions to billions of weights, trained on massive text datasets using self-supervised and semi-supervised learning. Models such as GPT-3, GPT-4, and LLAMA are trained to predict the next token or word in the input text, and in doing so they learn the contextual relationships between words. Notably, recent models such as GPT-3 use a uni-directional (autoregressive) Transformer architecture and exceed 100 billion parameters, which has significantly improved their language understanding capabilities.

GPT

OpenAI's GPT (generative pre-trained transformer) models have been trained to understand natural language and code. GPTs provide text outputs in response to their inputs. The inputs to GPTs are also referred to as "prompts". Designing a prompt is essentially how you “program” a GPT model, usually by providing instructions or some examples of how to successfully complete a task.
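As a rough illustration of "programming" a model with instructions and examples, the sketch below assembles a few-shot prompt in the role/content message format used by chat-style APIs. The helper name `build_few_shot_messages` and the sentiment task are assumptions made for this example, not something taken from the original post.

```python
def build_few_shot_messages(instruction, examples, query):
    """Assemble a chat prompt: one instruction, worked examples, then the new input."""
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        # Each worked example is shown as a user turn followed by the ideal reply.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real question goes last, so the model continues the pattern.
    messages.append({"role": "user", "content": query})
    return messages


messages = build_few_shot_messages(
    instruction="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    query="Setup took five minutes and everything just worked.",
)
```

With two examples the list holds six messages: the instruction, two example pairs, and the final query.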

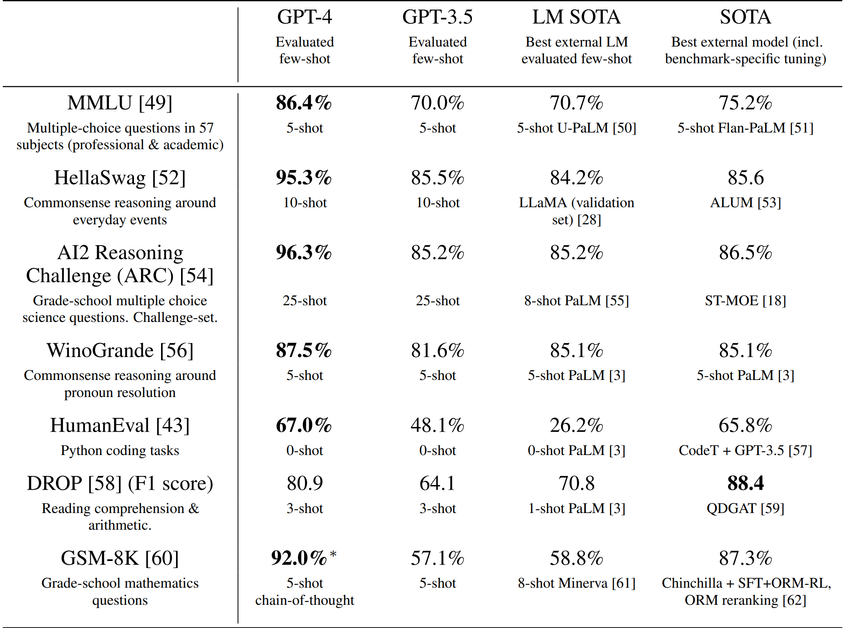

Model Evaluation

- The pre-trained base GPT-4 model was assessed on traditional language model benchmarks.

- Contamination checks were performed on the test data to identify overlaps with the training set.

- Few-shot prompting was used for all benchmarks during the evaluation.

- GPT-4 considerably outperforms existing language models, as well as previous state-of-the-art (SOTA) systems, which often rely on benchmark-specific crafting or additional training protocols.

- Compared with the best SOTA models that use benchmark-specific training, GPT-4 comes out ahead on every dataset except DROP.

- On translated versions of the MMLU benchmark, GPT-4 in the majority of languages tested, including low-resource languages such as Latvian, Welsh, and Swahili, outperforms the English-language performance of GPT-3.5 and other existing language models.

- GPT-4 substantially improves over previous models in the ability to follow user intent. On a dataset of 5,214 prompts submitted to ChatGPT and the OpenAI API, the responses generated by GPT-4 were preferred over the responses generated by GPT-3.5 on 70.2% of prompts.
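The contamination check in the list above can be pictured as a substring-overlap test between evaluation examples and training documents. The following is a simplified sketch of that idea, not OpenAI's actual pipeline; the function names and the defaults (three random probes of 50 characters) are assumptions chosen for illustration.

```python
import random
import re


def normalize(text):
    """Keep only letters and digits so spacing and punctuation cannot hide an overlap."""
    return re.sub(r"[^A-Za-z0-9]", "", text)


def is_contaminated(eval_example, training_docs, n_samples=3, length=50, seed=0):
    """Flag an evaluation example if any sampled substring occurs in a training document."""
    example = normalize(eval_example)
    if not example:
        return False  # nothing to compare
    rng = random.Random(seed)
    if len(example) <= length:
        probes = [example]  # short examples are checked whole
    else:
        starts = [rng.randrange(len(example) - length + 1) for _ in range(n_samples)]
        probes = [example[s:s + length] for s in starts]
    normalized_docs = [normalize(doc) for doc in training_docs]
    return any(probe in doc for probe in probes for doc in normalized_docs)


training_docs = [
    "Q: What is the capital of France? A: Paris is the capital of France, on the Seine."
]
leaked = "What is the capital of France?  A: Paris is the capital of France, on the Seine."
fresh = "Name the longest river that flows entirely within the borders of Switzerland."

print(is_contaminated(leaked, training_docs))  # True
print(is_contaminated(fresh, training_docs))   # False
```

Sampling a few probes rather than matching whole examples keeps the check cheap, at the cost of missing partial overlaps that fall outside the sampled spans.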

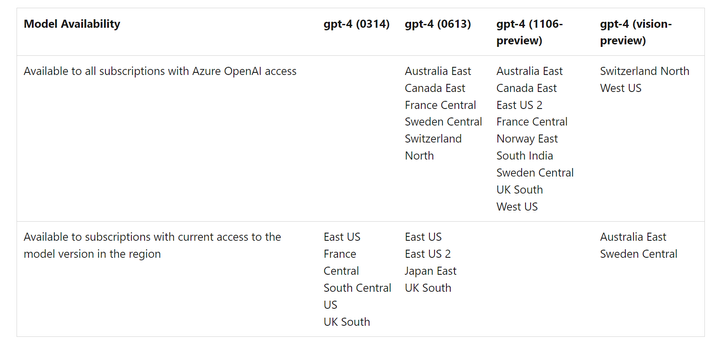

Azure OpenAI service

- Azure OpenAI Service provides REST API access to OpenAI's powerful language models including the GPT-4, GPT-35-Turbo, and Embeddings model series.

- These models can be easily adapted to your specific task including but not limited to content generation, summarization, semantic search, and natural language to code translation.

- Users can access the service through REST APIs, Python SDK, or our web-based interface in the Azure OpenAI Studio.

GPT-4

GPT-4 can solve difficult problems with greater accuracy than any of OpenAI's previous models. Like GPT-3.5 Turbo, GPT-4 is optimized for chat and also works well for traditional completion tasks. GPT-4 is accessed through the Chat Completions API.
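To make the REST access path concrete, here is a minimal sketch that builds, but does not send, a Chat Completions request for an Azure OpenAI deployment using only the Python standard library. The resource name, deployment name, and key are placeholders, and the `api-version` value is an assumption that should be checked against the versions your resource supports.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, messages, api_version="2024-02-01"):
    """Build (but do not send) a Chat Completions request for an Azure OpenAI deployment."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": messages, "temperature": 0.2}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_chat_request(
    resource="my-resource",            # hypothetical resource name
    deployment="my-gpt4-deployment",   # hypothetical deployment name
    api_key="<your-api-key>",          # placeholder, never hard-code a real key
    messages=[{"role": "user", "content": "Summarize Llama 2 in one sentence."}],
)
print(request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` returns JSON in which the reply text sits under `choices[0].message.content`. Note that on Azure the URL names your deployment rather than the underlying model.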

GPT-3.5

GPT-3.5 models can understand and generate natural language or code. The most capable and cost-effective model in the GPT-3.5 family is GPT-3.5 Turbo, which has been optimized for chat and also works well for traditional completion tasks.

LLAMA 2

Llama 2 is a large language model (LLM) developed by Meta that can generate natural language text for various applications. It is pretrained on 2 trillion tokens of public data with a context length of 4096 and has variants with 7B, 13B and 70B parameters. In this blog post, we will show you how to use Llama 2 on Microsoft Azure, the platform for the most widely adopted frontier and open models.
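The parameter counts above translate directly into hardware requirements. As a back-of-the-envelope sketch, the snippet below estimates the memory needed just to hold the weights, assuming 16-bit (2-byte) weights and the nominal sizes of 7B, 13B, and 70B. Both are simplifying assumptions: the exact parameter counts differ slightly, and activations and the key-value cache need additional memory.

```python
# Nominal parameter counts for the three Llama 2 variants named in the text.
LLAMA2_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in LLAMA2_PARAMS.items():
    print(f"Llama 2 {name}: ~{weight_memory_gb(params):.0f} GB of weights at 16-bit precision")
```

Under these assumptions the three variants need roughly 14 GB, 26 GB, and 140 GB for weights, which is one reason the choice of variant affects deployment cost.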

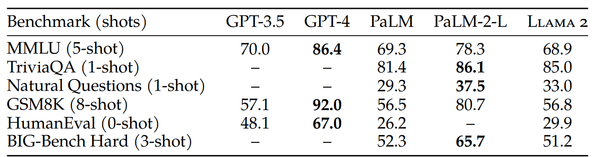

Comparison to closed-source models

- According to the statistics below, LLAMA 2 lags behind on coding tasks but goes head-to-head with ChatGPT on other tasks.

Human Evaluation

Llama 2-Chat models were compared with open- and closed-source models in a human evaluation covering ~4,000 helpfulness prompts, with three raters per prompt. The largest Llama 2-Chat model is competitive with ChatGPT: the Llama 2-Chat 70B model has a win rate of 36% and a tie rate of 31.5% relative to ChatGPT. Note: the 4k prompt set does not include any coding- or reasoning-related prompts.
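Win and tie rates like these are simple proportions of per-prompt judgments. The sketch below shows the arithmetic on toy data (not the paper's raw judgments) and derives the loss rate implied by the reported figures, assuming win, tie, and loss are the only outcomes and sum to 100%. The function name `preference_rates` is made up for this example.

```python
from collections import Counter


def preference_rates(judgments):
    """Percentage of 'win', 'tie', and 'loss' labels in a list of judgments."""
    if not judgments:
        raise ValueError("need at least one judgment")
    counts = Counter(judgments)
    total = len(judgments)
    return {label: 100 * counts[label] / total for label in ("win", "tie", "loss")}


# Toy data: 25 prompts, each already reduced to a single outcome.
toy = ["win"] * 9 + ["tie"] * 8 + ["loss"] * 8
print(preference_rates(toy))  # {'win': 36.0, 'tie': 32.0, 'loss': 32.0}

# Loss rate implied by the reported 36% win and 31.5% tie rates.
implied_loss = 100 - 36 - 31.5
print(implied_loss)  # 32.5
```

An implied loss rate of about 32.5% is close to the win rate, which is what "competitive" means here: the 70B model wins slightly more often than it loses.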

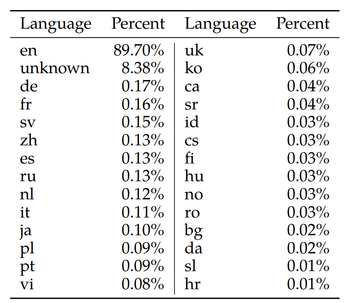

Language distribution in pretraining data

Most of the pretraining data is in English, so Llama 2 will perform best for English-language use cases. The large unknown category is partially made up of programming code data. Code still accounts for only a limited share of the training data, which is consistent with the model's weaker results on coding-related problems.

The model's performance in languages other than English remains fragile, and the model should be used with caution in those languages.

Performance with tool use

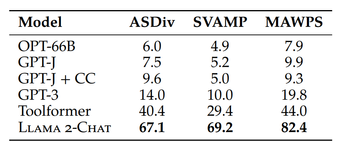

Evaluation on the math datasets

Llama 2-Chat is able to understand the tools' applications and the API arguments purely through their semantics, despite never having been trained to use tools.
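To give a sense of what tool use involves on the application side, the sketch below dispatches a tool call emitted by a model to a small calculator. The `CALCULATOR: <expression>` line format and the function names are hypothetical and chosen for illustration; they are not the format used in the Llama 2 evaluation.

```python
import ast
import operator

# Arithmetic operators the calculator tool is allowed to apply.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(expression):
    """Evaluate +, -, *, / arithmetic by walking the syntax tree, without eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)


def run_tool_call(model_output):
    """Dispatch a line of the hypothetical form 'CALCULATOR: <expression>'."""
    tool, _, argument = model_output.partition(":")
    if tool.strip() != "CALCULATOR":
        raise ValueError(f"unknown tool: {tool.strip()!r}")
    return evaluate(argument.strip())


print(run_tool_call("CALCULATOR: (4096 - 96) / 8"))  # 500.0
```

The application, not the model, executes the call and feeds the result back into the conversation, so restricting the evaluator to plain arithmetic keeps model output from running arbitrary code.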

Example:

Ultimately, the choice between Llama 2 and a GPT model such as GPT-4 depends on the user's specific requirements and budget. Larger models such as GPT-4 can offer better performance and broader capabilities, but Llama 2 is freely available, which may make it an attractive option for those seeking a cost-effective solution for chatbot development.
