
A Guide to Optimizing Performance and Saving Cost of your Machine Learning (ML) Service - Part 2


Return to Part 1: Introduction to ML Service

In this section, we will explore some of the options and best practices for deploying your ML model service on Azure, especially using Azure Machine Learning.

We will cover how to choose the appropriate Azure SKU for your ML service, as well as some of the settings and limits of Azure ML that you should be aware of.

Azure VM SKUs

After optimizing the model and framework utilization, the next step in saving cost is selecting the best VM SKU. The right VM can improve performance and latency, but keep in mind that the end goal is to reduce the cost of inference, not to achieve the lowest latency. For example, if SKU A runs 20% faster than SKU B but is 40% more expensive, SKU A may not be the best option.
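To make this trade-off concrete, the sketch below compares SKUs by cost per million requests instead of by speed. It assumes that "20% faster" means 20% more sustained throughput on a single VM, and the prices and throughputs are placeholders, not real Azure numbers.

```python
# Sketch: compare SKUs by cost per request rather than by raw speed.
# Prices and throughputs are illustrative placeholders, not real Azure figures.
from dataclasses import dataclass


@dataclass
class SkuProfile:
    name: str
    hourly_price: float         # price per VM-hour (placeholder units)
    requests_per_second: float  # measured sustained throughput on one VM


def cost_per_million_requests(sku: SkuProfile) -> float:
    """Cost of serving one million requests at the SKU's sustained throughput."""
    requests_per_hour = sku.requests_per_second * 3600
    return sku.hourly_price / requests_per_hour * 1_000_000


# SKU A: 20% more throughput than SKU B, but 40% more expensive per hour.
sku_b = SkuProfile("SKU B", hourly_price=1.00, requests_per_second=100.0)
sku_a = SkuProfile("SKU A", hourly_price=1.40, requests_per_second=120.0)

for sku in (sku_a, sku_b):
    print(f"{sku.name}: {cost_per_million_requests(sku):.2f} per 1M requests")
```

With these numbers SKU A costs about 17% more per request (1.4 / 1.2 ≈ 1.17), so SKU B is the cheaper choice unless it cannot meet the latency requirement.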

Understand VM Configurations

First, understand the VM description, paying close attention to the following fields:

- CPU type: some VM series run on more than one CPU model, and different CPUs support different instruction set extensions, some of which speed up machine learning workloads. For example:

  - AVX-512, which is designed to boost vector processing performance, is available on Intel Skylake and Cascade Lake CPUs. Intel Broadwell and Haswell CPUs, and AMD CPUs before Zen 4, do not support AVX-512.

  - Hyperthreading is disabled on the HC-series, NDv2-series, HBv3-series, and others. This can help if you see a high context-switch rate in the OS or a high L3 cache miss rate.

  - High-frequency processors are available on the FX-series (4.0 GHz), the HBv3-series (3.675 GHz), and others.

- Memory size: if you serve a large model or several models, pay close attention to this field. Keep in mind that each worker process loads its own copy of the model.

- Disk:

  - The default OS disk size is 40 GB. AzureML stores all models and code on this drive.

  - A local SSD helps if your code writes files frequently, although writing files during inference is not recommended.

- Network bandwidth: if the request or response payloads are large, pay close attention to network bandwidth, which varies across VM SKUs. Note that AzureML also applies its own limits to managed endpoints (see Manage resources and quotas - Azure Machine Learning | Microsoft Learn).

- GPU: the N*-series SKUs are all GPU SKUs. Check the GPU model and the supported driver and CUDA versions for each series. Although every GPU can run inference workloads, some of them are not cost-effective for it. Some GPUs, such as the T4 (NCasT4_v3-series), are designed for inference and perform best with an inference-optimized runtime such as NVIDIA TensorRT.
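To confirm what a VM actually exposes, you can inspect its CPU from inside the VM. The sketch below is a minimal, Linux-only example that parses /proc/cpuinfo: it checks the kernel flags avx512f and avx512_vnni (the latter speeds up int8 multiply-accumulate), and it infers hyperthreading by comparing siblings (logical CPUs per package) with cpu cores (physical cores per package). Hypervisors may report topology differently, so treat the result as a hint and cross-check it against the SKU documentation.

```python
# Sketch: summarize CPU features relevant to ML inference (Linux only).
# Reads /proc/cpuinfo; flag names such as "avx512f" follow the Linux kernel's naming.
from pathlib import Path


def parse_cpuinfo(text: str) -> dict:
    """Return the fields of the first processor entry in /proc/cpuinfo text."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            if fields:  # a blank line ends the first processor block
                break
            continue
        key, _, value = line.partition(":")
        fields[key.strip()] = value.strip()
    return fields


def summarize_cpu(text: str) -> dict:
    info = parse_cpuinfo(text)
    flags = set(info.get("flags", "").split())
    siblings = int(info.get("siblings", "0"))  # logical CPUs per physical package
    cores = int(info.get("cpu cores", "0"))    # physical cores per physical package
    return {
        "model": info.get("model name", "unknown"),
        "avx512f": "avx512f" in flags,
        "avx512_vnni": "avx512_vnni" in flags,
        # More logical CPUs than physical cores per package means SMT is enabled.
        "hyperthreading": siblings > cores > 0,
    }


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(summarize_cpu(cpuinfo.read_text()))
    else:
        print("/proc/cpuinfo not found; this sketch only works on Linux.")
```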

Understand VM Availability and Pricing

Prices vary across SKUs and regions. For the most up-to-date list of VM SKUs, available regions, and pricing, refer to Virtual Machine series | Microsoft Azure. Some SKUs are available in certain regions but not others.

If the client of the model service is a web service hosted in Azure, place the model service and the client service in the same region to minimize network latency. If cross-region access cannot be avoided, see Azure network round-trip latency statistics (Microsoft Learn) for the latency between Azure regions.

To decide which SKU fits best, you can use various profiling tools. Later parts of this series cover them in more detail.

For the SKUs supported by managed online endpoints, see Managed online endpoints VM SKU list - Azure Machine Learning | Microsoft Learn.

AzureML Settings and Limits

The AzureML settings and limits related to model service throughput and latency fall into two categories: network stack settings and container settings.

Network Stack

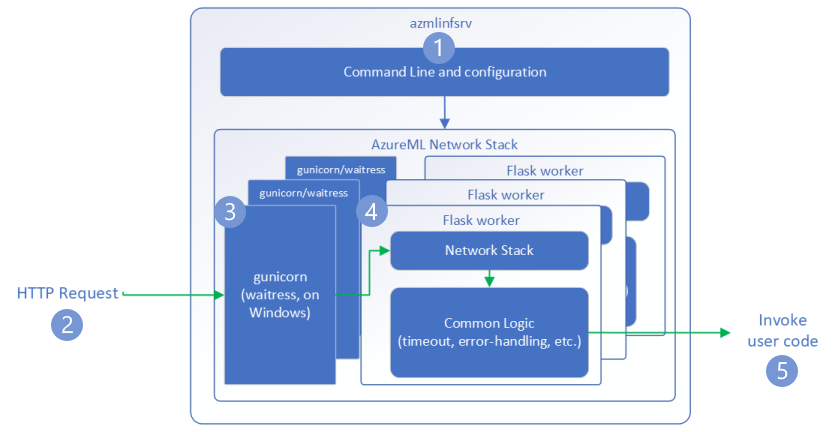

Here is how the AzureML network stack request flow looks like:

Azure Machine Learning inference HTTP server - Azure Machine Learning | Microsoft Learn

The limits along this flow are either hard-coded or depend on your deployment, for example on the number of cores. For the resource limits, refer to Manage resources and quotas - Azure Machine Learning | Microsoft Learn.

There is one deployment configuration you need to pay attention to:

max_concurrent_requests_per_instance

If you are using an AzureML container image or an AzureML prebuilt inference image, set this value to the same number as WORKER_COUNT (discussed below). If you are using an image you built yourself, set it to the number of requests your server can process concurrently.

This setting defines the concurrency level the load balancer allows for each instance. Usually, the higher this number, the higher the throughput. However, if it is set higher than the model and machine learning framework can handle, requests wait in the queue, which increases end-to-end latency.

The number of requests a deployment accepts at once scales with max_concurrent_requests_per_instance * number_of_instances. When more requests are in flight than the deployment can accept, the client receives HTTP status code 429 (Too Many Requests).
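To size a deployment against this limit, you can relate a target request rate to concurrency with Little's law: the average number of requests in flight equals the arrival rate times the average time each request spends in the system. The sketch below uses it to estimate a minimum instance count. The 20% headroom factor and the example numbers are assumptions, and the estimate ignores queueing effects near saturation, so validate it with a load test.

```python
# Sketch: estimate how many instances keep in-flight requests under the concurrency limit.
# Little's law: average concurrency = arrival rate * average time in system.
import math


def required_concurrency(requests_per_second: float, avg_latency_s: float) -> float:
    """Average number of requests in flight (L = lambda * W)."""
    return requests_per_second * avg_latency_s


def min_instances(requests_per_second: float, avg_latency_s: float,
                  max_concurrent_requests_per_instance: int,
                  headroom: float = 1.2) -> int:
    """Smallest instance count whose total concurrency covers the load plus headroom.

    `headroom` is an assumed safety factor for bursts, not an AzureML setting.
    """
    needed = required_concurrency(requests_per_second, avg_latency_s) * headroom
    return max(1, math.ceil(needed / max_concurrent_requests_per_instance))


# Example: 200 requests/s at 50 ms average latency, 4 concurrent requests per instance.
print(required_concurrency(200, 0.05))  # about 10 requests in flight on average
print(min_instances(200, 0.05, 4))      # 3 instances with 20% headroom
```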

For the default values of the request settings, refer to CLI (v2) managed online deployment YAML schema - Azure Machine Learning | Microsoft Learn.

Container

Bring your own container

If you are using a Docker image you built yourself, make sure the server in it reads its tuning parameters from environment variables, and set those variables appropriately during deployment.

For example, assume “mymodelserver” can read an environment variable “MY_THREAD_COUNT” at runtime. Your Dockerfile might look like this:

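```dockerfile
FROM <base image>

...

# ENV requires a value; 1 is an illustrative default, overridden at deployment time per SKU.
ENV MY_THREAD_COUNT=1

...

# Exec form needs a JSON array with straight double quotes.
ENTRYPOINT ["mymodelserver", "param1", "param2"]
```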

At deployment time, you can then set MY_THREAD_COUNT to a value that suits each SKU, so the same image runs with a different level of parallelism on different SKUs.
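On the server side, reading such a variable can be as simple as the sketch below. "mymodelserver" and MY_THREAD_COUNT are the hypothetical names from the example above; the fallback to a default for a missing or invalid value is an assumption, and predict() is a stand-in for real inference.

```python
# Sketch of how a hypothetical "mymodelserver" might read MY_THREAD_COUNT at startup.
# MY_THREAD_COUNT and the fallback logic are illustrative, not an AzureML API.
import os
from concurrent.futures import ThreadPoolExecutor


def thread_count_from_env(default: int = 1) -> int:
    """Read MY_THREAD_COUNT, falling back to `default` if unset, invalid, or not positive."""
    raw = os.environ.get("MY_THREAD_COUNT", "")
    try:
        value = int(raw)
    except ValueError:
        return default
    return value if value > 0 else default


def predict(x: float) -> float:
    """Stand-in for real model inference."""
    return x * 2.0


if __name__ == "__main__":
    threads = thread_count_from_env()
    # The pool size, and therefore the parallelism, is decided by the deployment's environment.
    with ThreadPoolExecutor(max_workers=threads) as pool:
        print(threads, list(pool.map(predict, [1.0, 2.0, 3.0])))
```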

AzureML container

If you are using the AzureML container image or AzureML prebuilt inference image, then WORKER_COUNT is one of the most important environment variables you need to set properly.

In the AzureML-provided images, the Python HTTP server can run multiple worker processes to serve concurrent HTTP requests. Each worker process loads its own model instance and processes requests independently. WORKER_COUNT is an integer that sets the number of worker processes, and its default value is 1. This means that if you do not set this environment variable, the container still processes only one request at a time, even on a SKU with many CPU cores!
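The effect of WORKER_COUNT can be illustrated with Python's multiprocessing module: each worker process runs a load step once, keeps its own model copy, and serves requests independently, so N workers can handle up to N requests at once. This is an analogy, not the AzureML inference server's implementation; load_model() plays the role of a scoring script's init(), and the "model" is a placeholder dict.

```python
# Sketch: one model copy per worker process, as described for WORKER_COUNT.
# An analogy using multiprocessing, not the AzureML inference server's code.
import multiprocessing as mp
import os

_model = None  # model instance owned by the current process


def load_model():
    """Runs once in each worker process, similar to a scoring script's init()."""
    global _model
    _model = {"weight": 2.0, "pid": os.getpid()}  # placeholder for a real model


def handle_request(x):
    """Serves one request with the model copy of whichever worker picked it up."""
    return x * _model["weight"], _model["pid"]


if __name__ == "__main__":
    # Mirrors the default of one worker when WORKER_COUNT is unset.
    worker_count = int(os.environ.get("WORKER_COUNT", "1"))
    # Prefer "fork" where available so the sketch also runs outside a script file.
    method = "fork" if "fork" in mp.get_all_start_methods() else None
    ctx = mp.get_context(method)
    with ctx.Pool(processes=worker_count, initializer=load_model) as pool:
        results = pool.map(handle_request, [1.0, 2.0, 3.0, 4.0])
    print("outputs:", [value for value, _ in results])
    print("worker processes that served requests:", len({pid for _, pid in results}))
```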

How to determine WORKER_COUNT?

WORKER_COUNT is found through an iterative process. You can use the following steps to determine its value:

- Determine the number of cores available in the selected SKU (result_1).

  - For example, the F32s v2 SKU has 32 vCPUs that the model can use.

- For each worker, determine the number of CPU cores the model execution actually needs (result_2).

  - You can get this number by profiling a single worker with different machine learning framework and library setups.

  - Note: do not over-optimize for latency. As long as the result meets your requirement, you can start with it.

Then, WORKER_COUNT = floor(result_1 / result_2). Reserve a few cores and some memory for the system components running on the same VM.
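The calculation can be written out as below. This sketch adds a memory bound, because each worker loads its own model copy, and returns the two settings that must match for AzureML images. The reserve amounts, the per-worker profiling numbers, and the helper names are hypothetical; the keys environment_variables and request_settings.max_concurrent_requests_per_instance mirror the managed online deployment YAML schema, and the other required deployment fields are omitted.

```python
# Sketch of the WORKER_COUNT calculation, with an added memory check.
# Reserve amounts and profiling numbers are illustrative assumptions.
import math


def worker_count(total_cores: int, cores_per_worker: float,
                 total_memory_gb: float, memory_per_worker_gb: float,
                 reserved_cores: int = 1, reserved_memory_gb: float = 2.0) -> int:
    """floor(available cores / cores per worker), capped by what fits in memory."""
    by_cpu = math.floor((total_cores - reserved_cores) / cores_per_worker)
    # Each worker loads its own model copy, so memory also limits the worker count.
    by_memory = math.floor((total_memory_gb - reserved_memory_gb) / memory_per_worker_gb)
    return max(1, min(by_cpu, by_memory))


def deployment_settings(workers: int) -> dict:
    """Keep WORKER_COUNT and max_concurrent_requests_per_instance in sync.

    Keys mirror the managed online deployment YAML; other fields are omitted.
    """
    return {
        "environment_variables": {"WORKER_COUNT": str(workers)},
        "request_settings": {"max_concurrent_requests_per_instance": workers},
    }


# Example: F32s v2 (32 vCPUs, 64 GiB) with hypothetical profiling results of
# 4 vCPUs and about 3 GB of memory per worker.
n = worker_count(total_cores=32, cores_per_worker=4,
                 total_memory_gb=64, memory_per_worker_gb=3)
print(n, deployment_settings(n))
```

Here the 31 usable vCPUs divided by 4 per worker give 7 workers, well below the 20 that would fit in memory, so the CPU is the binding constraint.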

