Vision Transformer Learning with AML (Part 1)

Introduction

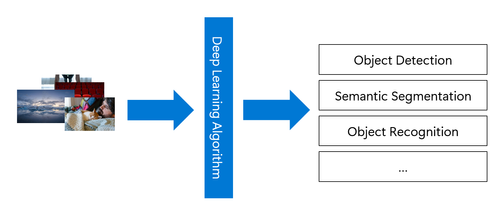

Computer Vision applications such as object recognition, semantic segmentation and image classification have recently been adopted in fields including healthcare, satellite imagery and visual surveillance.

This blog series describes some of the Azure Machine Learning (AML) platform features that have proven highly useful for enterprise-scale Vision model training and inference.

This blog is organized in two parts:

- Part 1 offers a brief overview of vision learning approaches and quickly describes common challenges for large-scale Vision Transformer model training.

- Part 2 describes how Azure Machine Learning (AML) may be used to overcome many (if not all!) of these challenges for real-world, Enterprise applications.

Vision Transformers - An Overview

Vision Transformers are a family of Representation Learning models that are trained on visual information (i.e., images) to produce various types of output.

While excellent results have been achieved with comparatively simple architectures such as Deep Neural Networks (DNNs) and Convolutional Neural Networks (CNNs), using them in real-world applications is often challenging because many standard approaches require large amounts of labeled training data. A CNN, for example, can be trained to recognize objects, but typically needs a large number of highly curated images with pristine, validated labels to be successful. Collecting, curating, labeling and updating such datasets is often prohibitively expensive and intractable at scale.

Case for Self-supervision

Many modern visual information processing approaches address the scarcity of labeled data through self-supervision, in which the training signal comes from the data itself rather than from human labels. The idea is closely related to research on text embeddings, which has shown that useful context can be learned from the data's intrinsic structure: commonly used text embedding approaches (e.g., word2vec) learn meaningful structure in text from the relative positions of words within sentences. In image processing, self-supervision can similarly learn semantics from the relative positions of image patches, for example by training a network to predict where one patch sits relative to another [2].

(Figure source: [2])
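The relative-position idea can be made concrete with a simplified, hypothetical sketch of how training examples for the context-prediction task of [2] could be generated: sample a 3x3 grid of patches, take the centre patch and one random neighbour, and use the neighbour's position (one of 8 classes) as a free label. The original method also adds gaps and random jitter between patches to discourage trivial shortcuts; this sketch omits them, and the helper name is illustrative.

```python
import numpy as np

# Offsets (row, col) of the 8 neighbours of the centre patch in a 3x3 grid;
# the index into this list is the class label the network must predict.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
                    (0, -1),           (0, 1),
                    (1, -1),  (1, 0),  (1, 1)]

def sample_context_pair(image, patch=32, rng=None):
    """Return (centre_patch, neighbour_patch, label) from an (H, W, C) image.

    Hypothetical helper: the label encodes where the neighbour sits relative
    to the centre patch, so no human annotation is needed.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    # Top-left corner of a 3x3 grid of patches that fits inside the image.
    top = rng.integers(0, h - 3 * patch + 1)
    left = rng.integers(0, w - 3 * patch + 1)
    label = int(rng.integers(0, len(NEIGHBOR_OFFSETS)))
    dr, dc = NEIGHBOR_OFFSETS[label]

    def crop(r, c):  # r, c are grid coordinates in {0, 1, 2}
        y, x = top + r * patch, left + c * patch
        return image[y:y + patch, x:x + patch]

    return crop(1, 1), crop(1 + dr, 1 + dc), label

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
centre, neighbour, label = sample_context_pair(image, rng=rng)
print(centre.shape, neighbour.shape)  # (32, 32, 3) (32, 32, 3)
```

A network trained on many such (centre, neighbour, label) triples must learn what object parts look like and how they are arranged in order to solve the task.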

An interesting consequence of self-supervised approaches is that the trained network captures non-trivial latent structure that appears to generalize at the category level [5]. That is, the self-supervised model learns meaningful components across images (such as visual objects, their parts and segments) without any human labeling. This emergent property makes self-supervised algorithms well suited to real-world applications where quality labeled data may be unavailable. Furthermore, recent research has shown that coupling self-supervised models with other machine learning algorithms (e.g., classifiers) produces excellent results with significantly reduced labeled-data requirements [1,2,3,4,5].

Autoencoders

Autoencoders are models that learn a latent representation of their input: an encoder maps each input to a compact latent code, and a decoder reconstructs the input from that code. For example, PCA and k-means can be viewed as autoencoders, because they map high-dimensional input to a lower-dimensional representation from which the input can be approximately reconstructed [3]. In image processing, autoencoders are often used to capture semantic relationships between image regions, in a way similar to how language models (e.g., GPT) capture linguistic concepts in text.
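To make the PCA example concrete, here is a minimal NumPy sketch of PCA viewed as a linear autoencoder: `encode` projects centred data onto the top-k principal directions and `decode` maps the latent code back to input space. The function names are illustrative; the demo uses synthetic data that lies exactly on a 2-D plane, so a 2-dimensional code reconstructs it without loss.

```python
import numpy as np

def fit_pca_autoencoder(X, k):
    """Fit a k-dimensional linear autoencoder via PCA.

    Returns the data mean and a (d x k) matrix W whose orthonormal columns
    span the top-k principal directions.
    """
    mean = X.mean(axis=0)
    # SVD of the centred data: rows of Vt are the principal directions.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k].T

def encode(X, mean, W):
    return (X - mean) @ W   # d-dim input -> k-dim latent code

def decode(Z, mean, W):
    return Z @ W.T + mean   # k-dim latent code -> d-dim reconstruction

# Synthetic 5-D data that actually lives on a 2-D (affine) plane.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 3.0

mean, W = fit_pca_autoencoder(X, k=2)
Z = encode(X, mean, W)
X_hat = decode(Z, mean, W)
print(Z.shape)                # (200, 2)
print(np.allclose(X, X_hat))  # True
```

With a smaller code (e.g. `k=1`) the reconstruction becomes lossy; deep autoencoders replace the linear maps with neural networks.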

Many such architectures have been proposed; the Vision Transformer (ViT) and the Masked Autoencoder (MAE), which builds on ViT, have gained particular popularity in recent years.

Vision Transformer (ViT)

Conceptually, ViT is an application of the Transformer architecture, which has become popular since the advent of BERT and, more recently, ChatGPT. The Transformer is a flexible architecture built from stacked self-attention layers: an encoder turns input tokens into contextual representations, and task-specific heads or decoder layers (of varying complexity) turn those representations into predictions. ViT applies the Transformer encoder to image data by splitting each image into fixed-size patches (e.g., 16x16 pixels) and treating the sequence of patch embeddings as tokens [4].

(Figure source: [5])
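ViT's key step is turning an image into a sequence of tokens. The NumPy sketch below splits images into non-overlapping 16x16 patches, flattens each one, applies a linear projection and adds position embeddings. In a real model the projection and position embeddings are learned (they are random here), and the extra [class] token ViT prepends is omitted for brevity; function names are illustrative.

```python
import numpy as np

def patchify(images, patch=16):
    """Split (N, H, W, C) images into (N, num_patches, patch*patch*C) sequences."""
    n, h, w, c = images.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    gh, gw = h // patch, w // patch
    x = images.reshape(n, gh, patch, gw, patch, c)
    x = x.transpose(0, 1, 3, 2, 4, 5)           # (N, gh, gw, patch, patch, C)
    return x.reshape(n, gh * gw, patch * patch * c)

def embed_patches(patches, W_embed, pos_embed):
    """Linearly project flattened patches and add position embeddings."""
    return patches @ W_embed + pos_embed         # (N, num_patches, D)

rng = np.random.default_rng(0)
images = rng.random((2, 224, 224, 3))
patches = patchify(images)                       # 14 x 14 = 196 patches of 16*16*3 = 768 values
W_embed = rng.normal(scale=0.02, size=(768, 64))  # learned in practice
pos_embed = rng.normal(scale=0.02, size=(196, 64))
tokens = embed_patches(patches, W_embed, pos_embed)
print(patches.shape, tokens.shape)  # (2, 196, 768) (2, 196, 64)
```

The resulting `tokens` array is what the stack of Transformer encoder layers consumes, exactly as word embeddings are consumed in a language model.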

Masked Autoencoder (MAE)

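The Masked Autoencoder (MAE) is a self-supervised pre-training approach built on ViT [3]. It randomly masks a large fraction of each image's patches (75% in [3]), passes only the visible patches through the ViT encoder, and trains a lightweight decoder to reconstruct the missing pixels. Because the encoder processes only a subset of each image's patches, MAE significantly reduces compute and memory requirements during pre-training.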

(Figure source: [4])
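A minimal NumPy sketch of MAE-style random masking, assuming patches have already been embedded into a (batch, patches, dim) array and a 75% masking ratio: each sample gets its own random permutation, the first 25% of patches in that order are kept for the encoder, and a binary mask plus restore indices are returned so a decoder could put masked positions back in their original order. Names and the return layout are illustrative, not the reference implementation.

```python
import numpy as np

def random_masking(tokens, mask_ratio=0.75, rng=None):
    """Per-sample random masking of a (N, L, D) token sequence.

    Returns the visible tokens (N, L_keep, D), a mask (N, L) with
    1 = masked and 0 = visible, and indices that restore the original order.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, length, _ = tokens.shape
    len_keep = int(length * (1 - mask_ratio))
    noise = rng.random((n, length))                # one random score per patch
    ids_shuffle = np.argsort(noise, axis=1)        # lowest scores are kept
    ids_restore = np.argsort(ids_shuffle, axis=1)  # inverse permutation
    ids_keep = ids_shuffle[:, :len_keep]
    # Gather the kept tokens; the trailing axis of size 1 broadcasts over D.
    visible = np.take_along_axis(tokens, ids_keep[:, :, None], axis=1)
    mask = np.ones((n, length))
    mask[:, :len_keep] = 0
    mask = np.take_along_axis(mask, ids_restore, axis=1)  # back to patch order
    return visible, mask, ids_restore

rng = np.random.default_rng(0)
tokens = rng.normal(size=(2, 196, 64))
visible, mask, ids_restore = random_masking(tokens, rng=rng)
print(visible.shape)  # (2, 49, 64)
```

Only the 49 visible tokens per image go through the (expensive) encoder, which is where MAE's compute and memory savings come from.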

Challenges at scale

While ViT, MAE and other approaches have been successful in various applications, there are many technical hurdles that must be overcome to deliver effective real-world solutions. This section outlines some of those challenges and Part 2 of this blog will show how the Azure Machine Learning (AML) platform features may be used to deliver quality visual learning models at scale.

Model Sizing

While vision transformer models can deliver state-of-the-art performance on a variety of tasks, their large size makes them difficult to train. Training these models on large image datasets requires a lot of GPU memory to hold training inputs (and the activations computed from them), model weights, gradients and optimizer states. Very often, the memory needed exceeds what a single GPU or Virtual Machine can provide. Distributing training across multiple GPUs in a cluster of VMs is becoming the norm for training these models efficiently.
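A back-of-the-envelope estimate shows why memory quickly becomes the bottleneck. This sketch assumes fp32 (4-byte) weights and gradients and an Adam-style optimizer that keeps two extra values per parameter, i.e. 16 bytes per parameter. It deliberately ignores activations, which grow with batch size and image resolution and are often the largest term. The function name and defaults are illustrative.

```python
def training_state_bytes(num_params, bytes_per_param=4, optimizer_states=2):
    """Rough memory for weights + gradients + optimizer states.

    Assumes weights and gradients stored at `bytes_per_param` each and an
    optimizer keeping `optimizer_states` extra values per parameter (Adam
    keeps two: first and second moment estimates). Activations, input
    batches and framework overhead are NOT included.
    """
    weights = num_params * bytes_per_param
    grads = num_params * bytes_per_param
    optimizer = num_params * bytes_per_param * optimizer_states
    return weights + grads + optimizer

# ViT-Huge has roughly 632M parameters [4].
print(training_state_bytes(632_000_000) / 1e9)  # ~10.1 GB before any activations
```

Adding activations for a realistic batch of high-resolution images on top of this static footprint is what typically pushes training beyond a single device.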

However, to fully reap the benefits of distributed training, teams need to do a significant amount of configuration and tuning. For example, ensuring high-bandwidth inter-GPU communication, selecting an appropriate parallelism strategy (e.g., data, tensor or pipeline parallelism) and minimizing GPU idle time are all essential to speeding up training. Getting all of this right is complex and can take days, if not weeks.
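As an illustration of the simplest of these strategies, data parallelism, the NumPy simulation below has each hypothetical worker compute the gradient of a least-squares loss on its own shard of the batch. Averaging the per-worker gradients (the role an all-reduce plays across real GPUs, e.g. in PyTorch's DistributedDataParallel) reproduces the full-batch gradient when shards are equal in size. No real communication happens here, and the function names are illustrative.

```python
import numpy as np

def mse_grad(w, X, y):
    """Gradient of 0.5 * mean((X @ w - y) ** 2) with respect to w."""
    return X.T @ (X @ w - y) / len(y)

def data_parallel_grad(w, X, y, world_size):
    """Simulate data parallelism in one process.

    Each 'worker' computes a local gradient on its shard; averaging the local
    gradients stands in for an all-reduce. The result equals the full-batch
    gradient only when all shards have the same size.
    """
    X_shards = np.array_split(X, world_size)
    y_shards = np.array_split(y, world_size)
    local_grads = [mse_grad(w, Xs, ys) for Xs, ys in zip(X_shards, y_shards)]
    return np.mean(local_grads, axis=0)

rng = np.random.default_rng(0)
X, y, w = rng.normal(size=(64, 3)), rng.normal(size=64), rng.normal(size=3)
print(np.allclose(data_parallel_grad(w, X, y, world_size=4), mse_grad(w, X, y)))  # True
```

In a real cluster, the time spent in that all-reduce is exactly why high-bandwidth inter-GPU links matter.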

In part 2 of this series, we will explore how Azure Machine Learning simplifies the procurement, configuration and scaling of the training infrastructure as well as optimizes distributed training workloads to speed them up without the headaches.

Data Wrangling

Some of the challenges of large-scale machine learning stem from the preprocessing steps, in which raw data must be transformed into tensors of the appropriate shape and format. Part 2 of this series will discuss how AML components, such as Pipeline Designer, may be used to simplify Data Wrangling at scale.
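A minimal sketch of one such preprocessing step in NumPy, assuming the model expects 224x224 RGB inputs in channels-first layout, normalized with the widely used ImageNet per-channel mean and standard deviation (assumptions to adjust for your model and data). The helper name is hypothetical, and a real pipeline would typically resize before cropping.

```python
import numpy as np

# Per-channel ImageNet statistics, commonly used with ImageNet-pretrained
# vision models; substitute your own dataset's values if they differ.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def to_model_tensor(image_uint8, size=224):
    """Convert an (H, W, 3) uint8 image into a normalized (3, size, size) float32 array.

    Centre-crops to size x size (assumes H, W >= size), scales pixels to
    [0, 1], normalizes each channel, and moves the channel axis first (CHW).
    """
    h, w, _ = image_uint8.shape
    top, left = (h - size) // 2, (w - size) // 2
    crop = image_uint8[top:top + size, left:left + size].astype(np.float32) / 255.0
    normalized = (crop - IMAGENET_MEAN) / IMAGENET_STD  # broadcasts over the last axis
    return normalized.transpose(2, 0, 1)

image = np.random.default_rng(0).integers(0, 256, size=(256, 320, 3), dtype=np.uint8)
print(to_model_tensor(image).shape)  # (3, 224, 224)
```

At scale, the same per-image function is applied in parallel across many files, which is where pipeline tooling helps.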

Effective Debugging

Large-scale ML model training (vision or otherwise) often distributes work across a large number of tightly coordinated compute and data-access resources in order to reach the desired model quality in a reasonable time. Debugging such processes is challenging, because distributed execution is difficult to reproduce in development environments.

Azure Machine Learning offers a number of ways to simplify and streamline debugging efforts by exposing effective logging facilities, direct connectivity to computation cluster members and extensive performance dashboards and metrics.
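As an illustration of rank-aware logging (a generic Python pattern, not an AML-specific API), the sketch below tags every log line with the worker's rank and writes one log file per rank, so interleaved output from many workers can be untangled. It assumes the launcher exports a `RANK` environment variable, as PyTorch's `torchrun` does; the helper name and `logs` directory are hypothetical.

```python
import logging
import os

def get_rank_logger(name="train", log_dir="logs"):
    """Return a logger that tags records with this process's rank and also
    writes them to a per-rank file.

    Assumes a RANK environment variable set by the launcher; falls back to
    rank 0 for single-process runs.
    """
    rank = int(os.environ.get("RANK", "0"))
    logger = logging.getLogger(f"{name}.rank{rank}")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        os.makedirs(log_dir, exist_ok=True)
        fmt = logging.Formatter(f"%(asctime)s [rank {rank}] %(levelname)s %(message)s")
        for handler in (logging.StreamHandler(),
                        logging.FileHandler(os.path.join(log_dir, f"rank{rank}.log"))):
            handler.setFormatter(fmt)
            logger.addHandler(handler)
    return logger

log = get_rank_logger()
log.info("starting epoch %d", 0)
```

Per-rank files make it easy to spot the one worker that stalled or diverged, which is a common failure mode in distributed jobs.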

Model Deployment

Unlike model training, which is often run as a batch job, model inference is often a continuous service that must scale to handle frequent requests from many concurrent users. While simple models (e.g., scikit-learn models) can be scaled easily on inexpensive CPUs, modern vision models often require one or more GPUs for inference.

In addition, model performance indicators such as drift are often difficult to capture and visualize. In Part 2, we will discuss how AML may be used to track model performance over time and automatically manage model retraining based on monitoring of relevant KPIs.
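Drift can be quantified in many ways; one common, simple metric is the Population Stability Index (PSI), which compares the distribution of a feature or model score in production against a reference sample such as the training data. The sketch below is a generic illustration of PSI, not how AML computes drift; the function name and the default of 10 quantile bins are assumptions.

```python
import numpy as np

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Population Stability Index (PSI) between two 1-D samples.

    Bins are defined by quantiles of the reference sample, so each bin holds
    about 1/bins of it. PSI = sum over bins of (cur - ref) * ln(cur / ref),
    where cur and ref are the fractions of each sample in the bin.
    0 means identical binned distributions; larger values mean more drift.
    """
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges; values below/above them fall into the first/last bin.
    inner_edges = np.quantile(reference, np.linspace(0, 1, bins + 1)[1:-1])
    ref_counts = np.bincount(np.searchsorted(inner_edges, reference, side="right"), minlength=bins)
    cur_counts = np.bincount(np.searchsorted(inner_edges, current, side="right"), minlength=bins)
    # Clip empty bins to a small epsilon to avoid log(0) and division by zero.
    ref_frac = np.clip(ref_counts / len(reference), eps, None)
    cur_frac = np.clip(cur_counts / len(current), eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))

rng = np.random.default_rng(0)
train_scores = rng.normal(size=10_000)
prod_scores = rng.normal(loc=1.0, size=10_000)  # simulated shift in production
print(population_stability_index(train_scores, prod_scores))
```

Computing such a metric on a schedule and alerting when it crosses a chosen threshold is one way to turn drift into a KPI that can trigger retraining.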

Stay tuned for Part 2, which discusses how AML platform features may be used to simplify Model Sizing, Data Wrangling, Debugging and Model Deployment.

References:

1. Revisiting Self-Supervised Visual Representation Learning

2. Unsupervised Visual Representation Learning by Context Prediction

3. Masked Autoencoders Are Scalable Vision Learners

4. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (arXiv:2010.11929)
