First published on TECHNET on Oct 14, 2016

[caption id="attachment_7045" align="aligncenter" width="879"] This tiny two-server cluster packs powerful compute and spacious storage into one cubic foot.[/caption]

The Challenge

On the Windows Server team, we tend to focus on going big. Our enterprise customers and service providers increasingly rely on Windows as the foundation of their software-defined datacenters and, needless to say, so does Azure, our hyperscale public cloud. Recent big announcements are the proof: support for 24 TB of memory per server with Hyper-V, 6+ million IOPS per cluster with Storage Spaces Direct, and 50 Gb/s of throughput per virtual machine with Software-Defined Networking.

But what can these same features in Windows Server do for smaller deployments, those known in the IT industry as Remote-Office / Branch-Office (“ROBO”)? Think retail stores, bank branches, private practices, remote industrial or construction sites, and more. After all, their basic requirements aren’t so different – they need high availability for mission-critical apps, with rock-solid storage for those apps. And generally, they need it to be local, so they can operate – process transactions, or look up a patient’s records – even when their Internet connection is flaky or non-existent.

For these deployments, cost is paramount. Major retail chains operate thousands, or tens of thousands, of locations. This multiplier makes IT budgets extremely sensitive to the per-unit cost of each system. The simplicity and savings of hyper-convergence – using the same servers to provide compute and storage – present an attractive solution.

With this in mind, under the auspices of Project Kepler-47, we set about going small…

Meet Kepler-47

The resulting prototype – and it’s just that, a prototype – was revealed at Microsoft Ignite 2016.

[caption id="attachment_7055" align="aligncenter" width="879"] Kepler-47 on expo floor at Microsoft Ignite 2016 in Atlanta.[/caption]

In our configuration, this tiny two-server cluster provides over 20 TB of available storage capacity, and over 50 GB of available memory for a handful of mid-sized virtual machines. The storage is flash-accelerated, the chips are Intel Xeon, and the memory is error-correcting DDR4 – no compromises. The storage is mirrored to tolerate hardware failures – drive or server – with continuous availability. And if one server goes down or needs maintenance, virtual machines live migrate to the other server with no appreciable downtime.
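As a rough sanity check on those figures, the sketch below recomputes raw and mirrored capacity from the drive and memory counts in the parts list (six 4 TB HDDs and 32 GB of memory per server). It assumes a two-way mirror that keeps one copy of the data on each server, treats the SSDs as cache only, and ignores file-system overhead and any capacity held in reserve. The function and variable names are illustrative and not part of any Windows Server API.

```python
# Back-of-the-envelope capacity check for a two-server mirrored cluster.
# Assumptions (not from the original post): a two-way mirror stores exactly
# two copies of all data, cache SSDs add no capacity, and overheads are ignored.

SERVERS = 2
HDDS_PER_SERVER = 6
HDD_TB = 4
MEMORY_GB_PER_SERVER = 32


def mirrored_capacity_tb(servers: int, drives_per_server: int,
                         drive_tb: int, copies: int = 2) -> float:
    """Return usable capacity when every piece of data is stored `copies` times."""
    raw_tb = servers * drives_per_server * drive_tb
    return raw_tb / copies


raw_tb = SERVERS * HDDS_PER_SERVER * HDD_TB
usable_tb = mirrored_capacity_tb(SERVERS, HDDS_PER_SERVER, HDD_TB)
total_memory_gb = SERVERS * MEMORY_GB_PER_SERVER

print(f"Raw HDD capacity:      {raw_tb} TB")
print(f"Two-way mirror usable: {usable_tb:.0f} TB")
print(f"Total memory:          {total_memory_gb} GB")
```

Under these assumptions the upper bounds are 24 TB of mirrored capacity and 64 GB of memory, which is consistent with the "over 20 TB" and "over 50 GB" available figures once overhead and the host operating systems take their share.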

(Did we mention it also has not one, but two 3.5mm headphone jacks? Hah!)

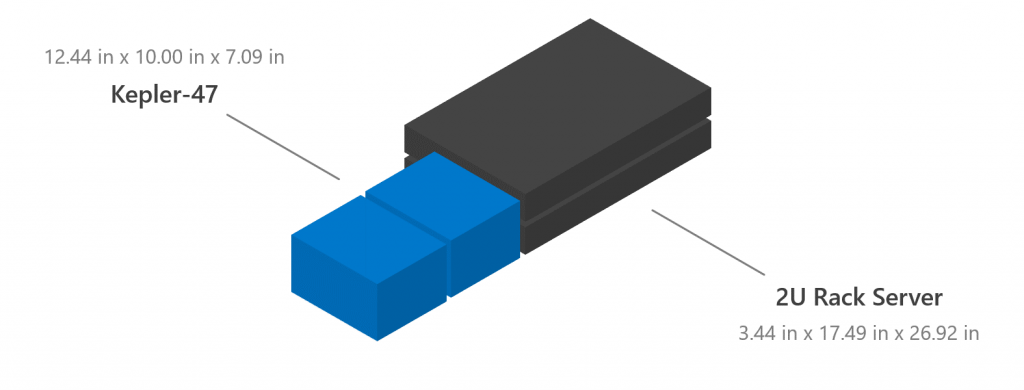

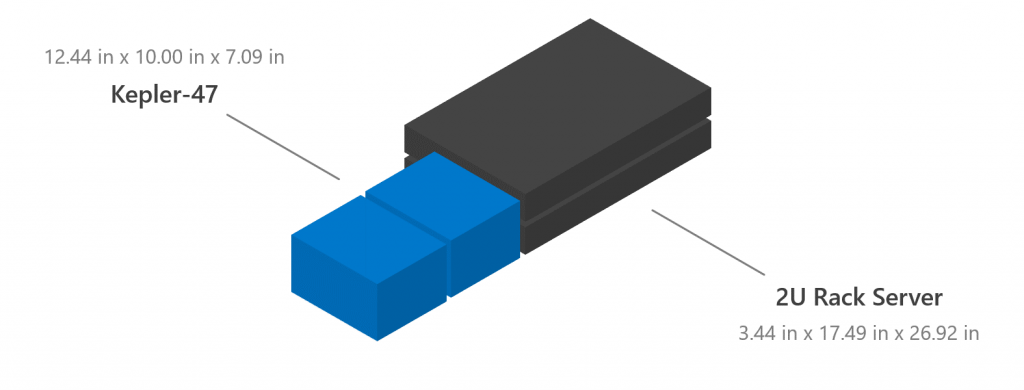

[caption id="attachment_7005" align="aligncenter" width="879"] Kepler-47 is 45% smaller than standard 2U rack servers.[/caption]

In terms of size, Kepler-47 is barely one cubic foot – 45% smaller than standard 2U rack servers. For perspective, this means both servers fit readily in one carry-on bag in the overhead bin!

We bought (almost) every part online at retail prices. The total cost for each server was just $1,101. This excludes the drives, which we salvaged from around the office, and which could vary wildly in price depending on your needs.

[caption id="attachment_7015" align="aligncenter" width="840"] Each Kepler-47 server cost just $1,101 retail, excluding drives.[/caption]

Technology

Kepler-47 consists of two servers, each running Windows Server 2016 Datacenter. The servers form one hyper-converged Failover Cluster, with the new Cloud Witness as the low-cost, low-footprint quorum technology. The cluster provides high availability to Hyper-V virtual machines (which may also run Windows, at no additional licensing cost), and Storage Spaces Direct provides fast and fault-tolerant storage using just the local drives.
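To see why a witness matters in a two-server cluster, here is a simplified sketch of majority voting, assuming one vote per server plus one for the witness. It is a toy model only: the `has_quorum` helper is hypothetical, and real Failover Clustering adds behavior (such as dynamic vote adjustment) that is not modeled here.

```python
# Toy model of majority-based quorum. Assumption: each server and the witness
# holds exactly one vote, and the cluster stays up only while a strict
# majority of all votes is reachable.

def has_quorum(votes_online: int, votes_total: int) -> bool:
    """True when strictly more than half of all votes are online."""
    return 2 * votes_online > votes_total


# Two servers, no witness: losing one server leaves 1 of 2 votes.
print("No witness, one server down:  ", has_quorum(votes_online=1, votes_total=2))

# Two servers plus a witness: one server and the witness hold 2 of 3 votes.
print("With witness, one server down:", has_quorum(votes_online=2, votes_total=3))
```

In this model the witness acts as the tie-breaker: without it, a two-server cluster cannot tell a failed partner from a broken link, and neither side holds a majority.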

Additional fault tolerance can be achieved using new features such as Storage Replica with Azure Site Recovery.

Notably, Kepler-47 does not use traditional Ethernet networking between the servers, eliminating the need for costly high-speed network adapters and switches. Instead, it uses Intel Thunderbolt™ 3 over a USB Type-C connector, which provides up to 20 Gb/s (or up to 40 Gb/s when utilizing display and data together!) – plenty for replicating storage and live migrating virtual machines.
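For a sense of scale, the sketch below estimates how long it would take to move a given amount of data at the 20 Gb/s rate. It assumes an ideal link with no protocol overhead and a hypothetical 8 GB virtual machine memory footprint, so real transfers will be slower.

```python
# Idealized transfer-time estimate. Assumptions: the link sustains its full
# nominal rate, and the 8 GB figure is an illustrative VM memory size.

def transfer_seconds(data_gigabytes: float, link_gigabits_per_s: float) -> float:
    """Seconds to move `data_gigabytes` over a link of `link_gigabits_per_s`."""
    data_gigabits = data_gigabytes * 8  # 8 bits per byte
    return data_gigabits / link_gigabits_per_s


vm_memory_gb = 8
print(f"{transfer_seconds(vm_memory_gb, 20):.1f} s at 20 Gb/s")
```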

To pull this off, we partnered with our friends at Intel, who furnished us with pre-release PCIe add-in cards for Thunderbolt™ 3 and a proof-of-concept driver.

[caption id="attachment_7025" align="aligncenter" width="879"] Kepler-47 does not use traditional Ethernet between the servers; instead, it uses Intel Thunderbolt™ 3.[/caption]

To our delight, it worked like a charm – here’s the Networks view in Failover Cluster Manager. Thanks, Intel!

[caption id="attachment_7036" align="aligncenter" width="879"] The Networks view in Failover Cluster Manager, showing Thunderbolt™ Networking.[/caption]

While Thunderbolt™ 3 is already in widespread use in laptops and other devices, this kind of server application is new, and it’s one of the main reasons Kepler-47 is strictly a prototype. It also boots from a USB 3 DOM, which isn’t yet supported, and has neither a host-bus adapter (HBA) nor a SAS expander, both of which are currently required for Storage Spaces Direct to leverage SCSI Enclosure Services (SES) for slot identification. However, it otherwise passes all our validation and testing and, as far as we can tell, works flawlessly.

(In case you missed it, support for Storage Spaces Direct clusters with just two servers was announced at Ignite!)

Parts List

Ok, now for the juicy details. Since Ignite, we have been asked repeatedly what parts we used. Here you go:

[caption id="attachment_7035" align="aligncenter" width="879"] The key parts of Kepler-47.[/caption]

| Function | Product | View Online | Cost |
| --- | --- | --- | --- |
| Motherboard | ASRock C236 WSI | Link | $199.99 |
| CPU | Intel Xeon E3-1235L v5 25 W 4C4T 2.0 GHz | Link | $283.00 |
| Memory | 32 GB (2 x 16 GB) Black Diamond ECC DDR4-2133 | Link | $208.99 |
| Boot Device | Innodisk 32 GB USB 3 DOM | Link | $29.33 |
| Storage (Cache) | 2 x 200 GB Intel S3700 2.5” SATA SSD | Link | - |
| Storage (Capacity) | 6 x 4 TB Toshiba MG03ACA400 3.5” SATA HDD | Link | - |
| Networking (Adapter) | Intel Thunderbolt™ 3 JHL6540 PCIe Gen 3 x4 Controller Chip | Link | - |
| Networking (Cable) | Cable Matters 0.5m 20 Gb/s USB Type-C Thunderbolt™ 3 | Link | $17.99* |
| SATA Cables | 8 x SuperMicro CBL-0481L | Link | $13.20 |
| Chassis | U-NAS NSC-800 | Link | $199.99 |
| Power Supply | ASPower 400W Super Quiet 1U | Link | $119.99 |
| Heatsink | Dynatron K2 75mm 2 Ball CPU Fan | Link | $34.99 |
| Thermal Pads | StarTech Heatsink Thermal Transfer Pads (Set of 5) | Link | $6.28* |

* Just one needed for both servers.
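
The per-server figure can be roughly reproduced from the table. The sketch below sums the priced parts in integer cents, assuming the two starred items are bought once and split evenly between the two servers, that each listed cost is the total for that line (for example, the full set of SATA cables), and that the unpriced drives and Thunderbolt™ 3 controller are excluded. How we would label this is a plausibility check, since the exact accounting behind the quoted total is not spelled out here.

```python
# Sum the priced parts from the table, working in integer cents to avoid
# floating-point rounding. Splitting shared items evenly is an assumption.

PER_SERVER_CENTS = {
    "Motherboard": 19999,
    "CPU": 28300,
    "Memory": 20899,
    "Boot Device": 2933,
    "SATA Cables": 1320,
    "Chassis": 19999,
    "Power Supply": 11999,
    "Heatsink": 3499,
}

# Starred items in the table: just one needed for both servers.
SHARED_CENTS = {
    "Networking (Cable)": 1799,
    "Thermal Pads": 628,
}

SERVERS = 2

per_server_parts = sum(PER_SERVER_CENTS.values())
shared_parts = sum(SHARED_CENTS.values())
cluster_total = SERVERS * per_server_parts + shared_parts
per_server_total = cluster_total / SERVERS

print(f"Parts per server (excluding shared): ${per_server_parts / 100:,.2f}")
print(f"Shared parts:                        ${shared_parts / 100:,.2f}")
print(f"Whole cluster:                       ${cluster_total / 100:,.2f}")
print(f"Per server, shared items split:      ${per_server_total / 100:,.2f}")
```

Under these assumptions the per-server total comes to a little over $1,101, in line with the figure quoted earlier.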

Practical Notes

The ASRock C236 WSI motherboard is the only one we could locate that has a mini-ITX form factor and eight SATA ports, and that supports server-class processors, error-correcting memory, and SATA hot-plug. The E3-1235L v5 is just 25 watts, which helps keep Kepler-47 very quiet. (Dan has been running it literally on his desk since last month, and he hasn’t complained yet.)

Having spent all our SATA ports on the storage, we needed to boot from something else. We were delighted to spot the USB 3 header on the motherboard.

The U-NAS NSC-800 chassis is not the cheapest option; you could spend less. However, it features an aluminum outer casing, steel frame, and rubberized drive trays – the quality appealed to us.

We actually had to order two sets of SATA cables – the first were not flexible enough to weave their way around the tight corners from the board to the drive bays in our chassis. The second set is flat, 30 AWG cable, and it works great.

Likewise, we had to confront physical limitations on the heatsink – the fan we use is barely 2.7 cm tall, to fit in the chassis.

We salvaged the drives we used, for cache and capacity, from other systems in our test lab. In the case of the SSDs, they’re several years old and discontinued, so it’s not clear how to accurately price them. In the future, we imagine ROBO deployments of Storage Spaces Direct will vary tremendously in the drives they use – we chose 4 TB HDDs, but some folks may only need 1 TB, or may want 10 TB. This is why we aren’t focusing on the price of the drives themselves – it’s really up to you.

Finally, the Thunderbolt™ 3 controller chip in PCIe add-in-card form factor was pre-release, for development purposes only. It was graciously provided to us by our friends at Intel. They have cited a price tag of $8.55 for the chip, but have not made us pay yet. :-)

Takeaway

With Project Kepler-47, we used Storage Spaces Direct and Windows Server 2016 to build an unprecedentedly low-cost, high-availability solution for remote-office / branch-office needs. It delivers the simplicity and savings of hyper-convergence: compute and storage in a single two-server cluster, with next to no networking gear, on a very modest budget.

Are you or is your organization interested in this type of solution? Let us know in the comments!

// Cosmos Darwin (@CosmosDarwin), Dan Lovinger, and Claus Joergensen (@ClausJor)

Updated Apr 10, 2019
