First published on TECHNET on Nov 01, 2013

Hi all,

Here is another great Work Folders blog post, this time about Work Folders performance in large-scale deployments. The content below was compiled and written by Sundar Srinivasan, one of the software engineers on the Work Folders product team.

Overview

One of the exciting new features introduced in Windows Server 2012 R2 is Work Folders. Please refer to this TechNet article and this blog post for broader details about the Work Folders feature and its deployment. During the development of this feature, I worked on testing the performance of Work Folders in a typical enterprise-scale deployment. In this blog post, we are going to look at the performance and scalability aspects of Work Folders.

We modeled three sync scenarios for this experiment. Once a user configures Work Folders on her device, she tends to move all her work-related files into Work Folders, triggering a sync that populates the Sync Share set up on her organization’s Windows Server 2012 R2 file server with the Work Folders feature enabled. We call this scenario “first-time sync”.

The second scenario is the user adding new devices. As the user configures Work Folders on her personal devices, such as laptops, Surface Pro, or Surface RT, each of these devices triggers a sync that downloads her work-related files to it.

Beyond this point, the user changes her Work Folders data on a daily basis, for example by editing Word documents and creating new PowerPoint files, on one or more devices. Any such change triggers what we call an “ongoing sync” to the server. To measure the scalability of the file server enabled for synchronizing Work Folders data, which we will refer to as the sync server, we are most interested in how many concurrent ongoing sync sessions the server can handle without affecting the experience of an individual user.

The first section of this post explains the topology that we used for simulating 5,000 users and a heuristic model for deriving the number of concurrent sync sessions the sync server serves on average at any given time. Then we look at the results of our experiments and at which resources become bottlenecks. This post also points to some Windows performance counters that can help analyze the performance of the sync server in production, and gives some guidance on configuration.

Hardware Topology

We used a mid-level 2-node failover cluster as the server. Its storage is provided by external JBODs of SAS drives connected through an LSI controller. The server hardware is defined as follows:

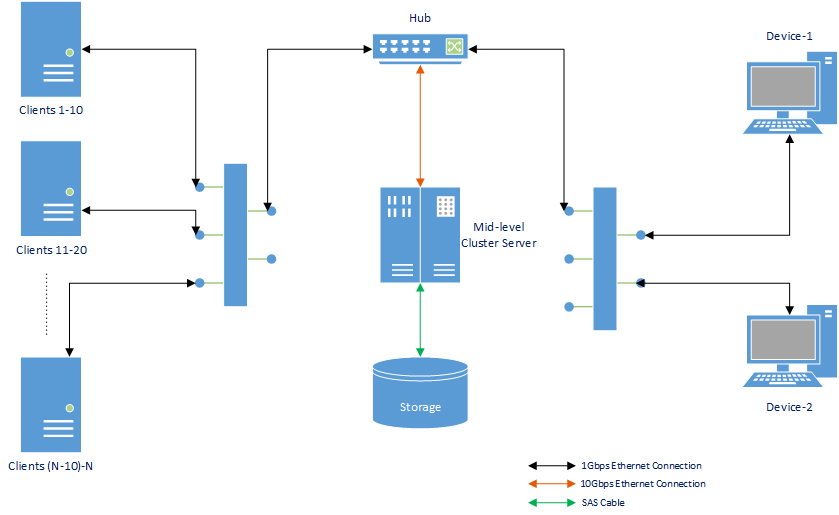

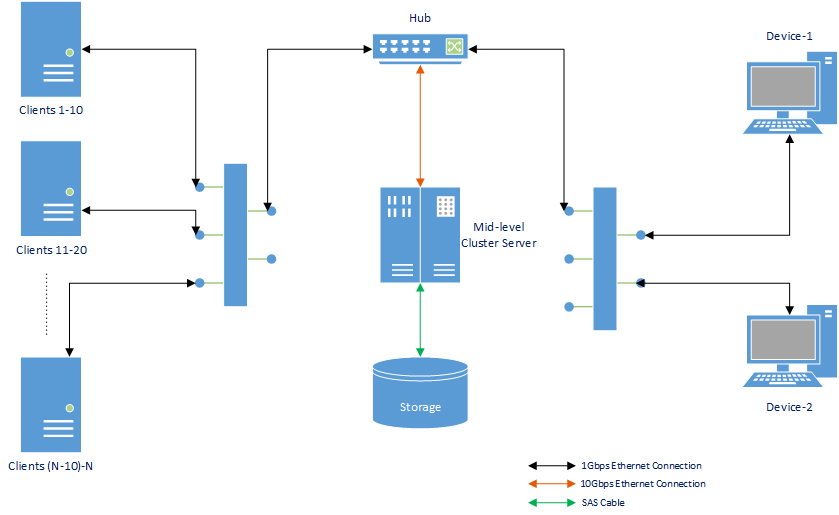

We set up the machine with the data of 5000 users on the sync server distributed across 10 Sync Shares. To simulate multiple devices with Work Folders configured, we use a small set of entry-level servers which will simulate multiple sync sessions from each of them, as if they originate from multiple devices. The server has a 10Gbps connection to the hub, while all the clients have 1Gbps connections.

The hardware topology that we used in this test is shown in the diagram below:

The clients on the left-hand side of the diagram are the entry-level servers, each of which simulates multiple sync sessions as if they originated from multiple devices. We want to measure the experience of users when the server and the infrastructure are loaded with multiple sync sessions. So we use two desktop-class devices and measure the time taken for changes made on device 1 to reach device 2. The time taken for the data to be synced to the second device should not differ significantly from the time taken when the server is idle.

The dataset that we used for each user is about 2 GB in size, with 2500 files and 500 folders. Based on our background knowledge of the Offline Files feature, the distribution of file sizes in a typical dataset looks like this:

Although 99% of the sync users fall under this category, we also included some users to test our dataset scale limits. About 1% of the users have a 100GB dataset, with some of these users having 250,000 files, 500,000 folders, and 10,000 files in a single folder, and some having files as large as 10GB.

We tested with 5000 users in this setup across ten Sync Shares. In order to test the scale limit on number of users supported per Sync Share, we created a single Sync Share with 1000 users and distributed the other 4000 users across nine Sync Shares: five Sync Shares with 400 users each and four Sync Shares with 500 users each.
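As a quick sanity check on the layout above, the share sizes can be summed and the total data volume estimated. This is only a rough sketch: it treats the "about 2 GB" and "100GB" dataset sizes and the 99%/1% split as exact, which the test setup does not guarantee.

```python
# Sync Share layout described above: one large share plus nine smaller ones.
share_sizes = [1000] + [400] * 5 + [500] * 4

total_users = sum(share_sizes)

# Approximate dataset mix from the text: ~1% of users at ~100 GB, the rest at ~2 GB.
large_users = total_users // 100
typical_users = total_users - large_users
estimated_storage_gb = typical_users * 2 + large_users * 100

print(f"{len(share_sizes)} Sync Shares, {total_users} users")
print(f"~{estimated_storage_gb} GB across {typical_users} typical and {large_users} large users")
```

Under these assumptions the server holds roughly 14,900 GB of user data, about a third of which belongs to the 1% of users with large datasets.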

Modeling the sync sessions on server

We developed a heuristic model to calculate the number of simultaneous sync sessions on the sync server by looking at the distribution of user profiles. In Work Folders, any file or folder change made locally triggers a sync. If no change is made on a particular device with Work Folders configured, the device still polls the server once every 10 minutes to determine whether any new file or folder, or a new version of an existing file, is available on the server for download. So we only need to model the user activity during this 10-minute polling interval.

In this model, we assume that each user has 3 devices with Work Folders configured, of which on average 1.5 devices per user are active, and that on average 20% of users are offline. The remaining users are classified by their usage of Work Folders into 5 profiles, ranging from inactive users, whose devices are online but only passively receive changes, to hyperactive users, who make bulk file copies into Work Folders:

Our model rests on educated assumptions about the distribution of users, drawn from our background knowledge of the user data synchronization scenario. We also derive the number of simultaneous sessions on the server from our empirical knowledge of the duration of a sync session and of a polling session on the server. We applied this model to test whether the mid-level server described above can support 5000 users without noticeably degrading the sync experience of individual users. The results of our experiment are described in the section below.
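The kind of calculation this model performs can be sketched with Little's law (average concurrency = arrival rate × average duration). The sketch below is not the team's actual model: the user count, offline fraction, active devices per user, and polling interval come from the text, while the polling duration, the profile share, the syncs per interval, and the sync duration are hypothetical placeholders, and `concurrent_sessions` is an illustrative helper name.

```python
def concurrent_sessions(devices: float, events_per_interval: float,
                        session_seconds: float, interval_seconds: float = 600.0) -> float:
    """Little's law: average concurrency = arrival rate x average session duration."""
    arrival_rate = devices * events_per_interval / interval_seconds  # sessions per second
    return arrival_rate * session_seconds


# Values taken from the text.
TOTAL_USERS = 5000
OFFLINE_FRACTION = 0.20
ACTIVE_DEVICES_PER_USER = 1.5

active_devices = TOTAL_USERS * (1 - OFFLINE_FRACTION) * ACTIVE_DEVICES_PER_USER

# HYPOTHETICAL placeholders: the measured session durations and the five-profile
# distribution are not reproduced here, so these numbers are for illustration only.
POLL_SECONDS = 1.0           # assumed duration of one polling session
VERY_ACTIVE_SHARE = 0.10     # assumed share of active devices in one profile
SYNCS_PER_INTERVAL = 2       # assumed syncs per 10-minute interval for that profile
SYNC_SECONDS = 5.0           # assumed duration of one sync session

polling = concurrent_sessions(active_devices, 1, POLL_SECONDS)
syncing = concurrent_sessions(active_devices * VERY_ACTIVE_SHARE,
                              SYNCS_PER_INTERVAL, SYNC_SECONDS)

print(f"active devices: {active_devices:.0f}")
print(f"average concurrent polling sessions: {polling:.1f}")
print(f"average concurrent sync sessions (one profile): {syncing:.1f}")
```

With the figures from the text, 5000 users translate into 6,000 active devices. Summing the per-profile results for all five profiles would give the total concurrent load the server has to sustain.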

Results & Analysis

In this section, we discuss the results and compare them with the results obtained when the server is idle. We also show how to identify a network bottleneck during synchronization.

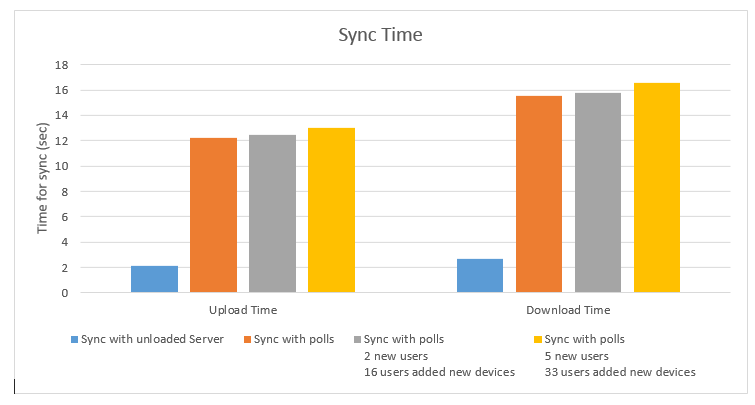

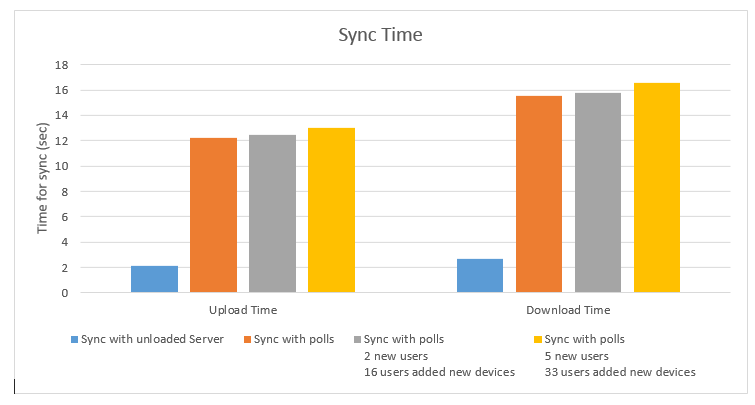

To measure the sync time, we use a reference user who falls in the class of very active users and who has made 10 independent file changes and created two new files in a new folder. The sync times measured for the reference user are shown below:

The chart above shows the time taken for the local changes to be uploaded to the server and for the remote changes to be downloaded to a different device. To put this in the context of user experience, a common concern in any sync system is a simple question posed by the user: “Will my files be available on all my devices immediately?” From the Work Folders point of view, the answer depends on several factors, such as the frequency of synchronization, the amount of data transferred to effect that sync, and the speed of the network connection from the device to the server. So the end-user experience of Work Folders is defined in terms of the perceived delay after which a user can expect the data to be available across multiple devices. As the number of concurrent sync sessions to the server increases, we expect that the user will not experience a significant delay in changes from one device synchronizing to her other devices.

The following graph shows the time in seconds the reference user has to wait for their changes (10 independent file changes and two new files created in a new folder) to be available across multiple devices.

As the graph above shows, the impact on the user experience is less than 5%.

However, the previous chart shows that the upload time and the download time increase from 2 seconds to 12 seconds when the sync server is loaded with several sync and polling requests. We next study which resources are becoming a bottleneck on the server and causing this increase in sync time.
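The two observations fit together once the slowdown is compared with the total wait. The sketch below uses only figures quoted in the text (a slowdown of about 10 seconds, an impact under 5%, a 10-minute polling interval). The uniform-wait estimate at the end is an assumption made here for illustration, not a measured result.

```python
EXTRA_DELAY_SECONDS = 12 - 2        # sync slowdown under load, from the text
MAX_IMPACT_PERCENT = 5              # "less than 5%" impact, from the text
POLL_INTERVAL_SECONDS = 10 * 60     # polling interval, from the text

# Smallest unloaded end-to-end wait for which a 10-second slowdown stays within 5%.
min_baseline_seconds = EXTRA_DELAY_SECONDS * 100 / MAX_IMPACT_PERCENT

# ASSUMPTION: the second device learns about the change at its next poll, so its
# wait is spread evenly over the polling interval and averages half of it.
assumed_mean_wait_seconds = POLL_INTERVAL_SECONDS / 2
impact_percent = EXTRA_DELAY_SECONDS * 100 / assumed_mean_wait_seconds

print(f"baseline wait must be at least {min_baseline_seconds:.0f} s")
print(f"impact with a {assumed_mean_wait_seconds:.0f} s mean wait: {impact_percent:.1f}%")
```

In other words, a slowdown of this size stays under 5% only if the end-to-end wait is already at least 200 seconds, which is plausible when the wait is dominated by the polling interval rather than by the transfer itself.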

The following chart gives the CPU utilization on the server during this process.

The CPU utilization stays within 36%, with a median value of 28%, so we do not think the CPU was becoming a bottleneck at this stage. The memory used by the process was 12GB, but this was primarily due to the database residing in memory for faster access. The server has 64GB of RAM, so memory was not becoming a bottleneck either. In other testing, we saw that when the operating system was under memory pressure, the memory used by the database for caching decreased.

The utilization of the other resources, network and disks, is shown in the table below.

From the table above, it is evident that the network becomes a bottleneck as the number of concurrent sync sessions and polling sessions increases. As explained in the hardware topology section, the client machines have 1Gbps NICs, whereas the sync server has a 10Gbps NIC connected to a 10Gbps port of the same network hub. As the network usage increases, the clients experience delays in communication. However, we noticed that none of the HTTP packets were rejected.

From this experiment, we found that the performance of the sync operation at high scale is IO-bound. It is possible to achieve similarly high performance even on an entry-level server with 4GB of memory and a quad-core, single-socket CPU, as long as the network has higher bandwidth and the storage subsystem has higher throughput.

Runtime Performance Monitoring and Best Practices

Although Work Folders does not introduce any new performance counters, the existing IIS, network, and disk performance counters in Windows Server 2012 R2 can be used to understand which resource is becoming overused or bottlenecked. We have listed some important performance counters in the table below.

On the server, Work Folders is highly network- and IO-intensive. On the sync server, Work Folders runs under a separate request queue named SyncSharePool. The table above contains the useful counters specific to SyncSharePool. If the rejection rate goes above 0%, or if the number of rejected requests shoots up, the network is clearly becoming a bottleneck. Apart from these counters, there are other generic IIS and network counters that give information about network utilization.

The \HTTP Service Url Groups(*)\CurrentConnections counter gives the number of existing connections to the server, which is the combined count of sync sessions and polling sessions. If the network utilization reaches 100%, the output queue length increases as packets get queued up. A number of dropped packets greater than zero indicates that the network is getting choked.

In general, if there are multiple Sync Shares on a single server, it is advisable to configure them on different volumes on different virtual disks if possible. With Sync Shares on different volumes, incoming requests to different Sync Shares translate into file IOs spread across multiple virtual disks. We have also listed some counters specific to logical disks that can be used to determine whether incoming requests are targeting them. Whenever the average disk queue length goes above 10, the disk IO latency is going to increase.
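The rules of thumb in this section can be collected into a small check over counter samples. This is a minimal sketch rather than a monitoring tool. Only the CurrentConnections path is quoted in the text; the other counter paths are assumed standard Windows counter names and should be verified on the server. The sample values are hypothetical, and `find_bottlenecks` is an illustrative helper name.

```python
from typing import Dict, List

CURRENT_CONNECTIONS = r"\HTTP Service Url Groups(*)\CurrentConnections"  # quoted in the text
# ASSUMED counter paths; the original counter table is not reproduced here.
REJECTION_RATE = r"\HTTP Service Request Queues(SyncSharePool)\RejectionRate"
PACKETS_DISCARDED = r"\Network Interface(*)\Packets Outbound Discarded"
DISK_QUEUE_LENGTH = r"\LogicalDisk(*)\Avg. Disk Queue Length"

DISK_QUEUE_LIMIT = 10  # threshold from the text


def find_bottlenecks(samples: Dict[str, float]) -> List[str]:
    """Apply the thresholds described above to one set of counter samples."""
    findings = []
    if samples.get(REJECTION_RATE, 0) > 0:
        findings.append("network: requests are being rejected")
    if samples.get(PACKETS_DISCARDED, 0) > 0:
        findings.append("network: packets are being dropped")
    if samples.get(DISK_QUEUE_LENGTH, 0) > DISK_QUEUE_LIMIT:
        findings.append("disk: average queue length above 10")
    return findings


# HYPOTHETICAL sample values, for illustration only.
sample = {
    CURRENT_CONNECTIONS: 850,
    REJECTION_RATE: 0,
    PACKETS_DISCARDED: 3,
    DISK_QUEUE_LENGTH: 12.5,
}

for finding in find_bottlenecks(sample):
    print(finding)
```

The samples themselves could come from Performance Monitor or any other collector; keeping the thresholds in one function makes it easy to adjust them as the deployment grows.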

Conclusion

When deploying Work Folders across the enterprise, it is a good idea to plan the rollout in phases. This limits the number of new users who enroll and upload their data all at once, which would otherwise cause heavy network traffic.

In this test, we wanted to explore whether the mid-level server can support 5000 users. Based on the heuristic model, we classified the users into different profiles and simulated sync sessions accordingly. We monitored the server and collected its vital statistics to ensure its continued stability. We measured the sync time from desktop-class hardware to understand the user experience when the server is supporting 5000 users. With this experiment, we found that a mid-level server with the same or a similar configuration to the one described above should be able to support 5000 users without affecting the user experience. The network utilization on the server averaged 60%, and the sync slowed down by about 10 seconds due to the load. The total time taken for a typical user’s change to appear across all her devices is not affected by more than 5%, even on a busy server holding the sync data of 5000 users.

Our study of performance counters shows that the performance of the sync operation at high scale is IO-bound. It is possible to achieve similarly high performance even on an entry-level server with 4GB of memory and a quad-core, single-socket CPU, as long as the network has higher bandwidth and the storage subsystem has higher IO throughput.

We hope you find this information helpful when you plan and deploy Work Folders in your organization.

Sundar Srinivasan

Updated Apr 10, 2019
