Hi Cluster Fans,

In Windows Server 2008 R2 we have added performance counters for Failover Clustering. Performance counters are like the meters on some devices in your house. For instance, your electricity or water meter tracks how much of a utility you have consumed, and your thermometer shows you the current temperature. They all have at least one thing in common: they show you aggregated information about the current state, but not every individual event that contributed to that state. Some components in the cluster handle a large volume of calls or traffic, and some buffer information in memory before it can be processed. We have added performance counters to several such components. This post discusses each counter set, and in future posts we will look at a couple of practical examples of issues that were resolved with the help of the performance counters.

For some information about using performance counters with PowerShell, check out this blog post: http://blogs.msdn.com/clustering/archive/2009/07/22/9844473.aspx

The following diagram gives you an overview of several cluster components that were instrumented and are relevant to these posts. Note that all the counter sets except one are in the cluster service; there are no counters in the Resource Hosting Subsystem (RHS). The one counter set outside the cluster service is in the Cluster Shared Volumes component.

Network

The network is the subsystem responsible for reliable communication between cluster nodes over TCP. All inter-node messages go through this component, so its counters show you how much traffic the cluster's internal communication puts on your network.

In this picture you can see the set of performance counters in the networking component. Hopefully the names are self-explanatory, so let's go over the meaning of the columns.

In this case we have a two-node cluster (vpclus01 and vpclus22), and the counters are collected on node vpclus01. The vpclus22 column tells you how much traffic is going between vpclus01 and vpclus22. The vpclus01 column tells you how much traffic the node has sent to itself. Note that this traffic does not go over the networking stack; it is a loopback implemented entirely inside the cluster service. The _Network column tells you how much traffic actually went through the networking stack. It is calculated as the sum of the traffic to and from each node, excluding the loopback interface.
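To make the column arithmetic concrete, here is a minimal Python sketch that derives a _Network-style total from per-node values. The sample numbers, the third node name `vpclus03`, and the `network_total` helper are hypothetical. The sketch only mirrors the rule described above (sum the remote nodes, skip the local loopback instance) and does not read real counters.

```python
from typing import Mapping

def network_total(samples: Mapping[str, float], local_node: str) -> float:
    """Sum the per-node values, skipping the local node's loopback instance."""
    return sum(value for node, value in samples.items() if node != local_node)

# Hypothetical bytes/sec samples collected on vpclus01 (illustrative numbers only).
two_node = {
    "vpclus01": 1200.0,  # loopback inside the cluster service, never hits the network stack
    "vpclus22": 5400.0,  # traffic between vpclus01 and vpclus22
}
print(network_total(two_node, "vpclus01"))  # 5400.0

# With a hypothetical third node, every remote node contributes to the total.
three_node = dict(two_node, vpclus03=800.0)
print(network_total(three_node, "vpclus01"))  # 6200.0
```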

In some cases a TCP connection between cluster nodes might break. If it does, we reestablish the connection and the node stays up. Reconnect Count tells you how many times a TCP connection was broken and reestablished.

We cannot rely on TCP to tell us which messages made it across the wire (a successful send only means the data was handed to the local TCP stack), so we had to implement our own acknowledgement mechanism.

The Normal Message Queue Length and Urgent Message Queue Length counters tell you how many messages are sitting in the queue waiting to be sent. Normally this number is 0, but if a TCP connection breaks you might see it go up until the connection is reestablished and we can send all the queued messages.

The Normal Message Queue Length Delta and Urgent Message Queue Length Delta counters are slightly misnamed. From the name you might expect them to tell you how much the queue length has changed, but they actually tell you the rate at which messages arrive in the queue.
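A small worked example shows why this distinction matters. The following Python sketch uses made-up numbers for a single one-second sampling interval. `interval_stats` is a hypothetical helper that compares the change in queue length with the incoming rate that the Delta counters report.

```python
def interval_stats(length_before: int, enqueued: int, sent: int, interval_s: float = 1.0) -> dict:
    """Compare the change in queue length with the incoming-rate 'Delta' value."""
    length_after = length_before + enqueued - sent
    return {
        "queue_length": length_after,                   # like the Queue Length counter
        "length_change": length_after - length_before,  # what the name suggests
        "delta_counter": enqueued / interval_s,         # what the Delta counter reports
    }

# Healthy connection: 50 messages arrive and 50 are sent, so the queue stays empty.
print(interval_stats(0, enqueued=50, sent=50))
# {'queue_length': 0, 'length_change': 0, 'delta_counter': 50.0}

# Broken connection: nothing can be sent, so the queue grows while Delta is unchanged.
print(interval_stats(0, enqueued=50, sent=0))
# {'queue_length': 50, 'length_change': 50, 'delta_counter': 50.0}
```

In both cases the Delta value reads 50 messages per second. Only the queue length itself reveals the backlog.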

Unacknowledged Message Queue and Unacknowledged Message Queue Delta tell you how many messages we have sent but have not yet received an acknowledgement for. As with the other Delta counters in this group, Unacknowledged Message Queue Delta tells you not how much the queue length has changed, but the incoming message rate.
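The acknowledgement idea described above can be illustrated with a minimal Python sketch. This is not the cluster service's wire protocol. The `AckingSender` class, the cumulative acknowledgements, and the use of a plain list as the "wire" are all assumptions made for illustration. The sketch shows why a sender keeps messages until they are acknowledged, and how the size of that pending set corresponds to an unacknowledged-message queue.

```python
class AckingSender:
    """Hypothetical sender that keeps every message until the peer acknowledges it."""

    def __init__(self):
        self.next_seq = 0
        self.unacked = {}  # seq -> payload, in send order (dicts keep insertion order)

    def send(self, payload, wire):
        seq = self.next_seq
        self.next_seq += 1
        self.unacked[seq] = payload  # retain until acknowledged
        wire.append((seq, payload))  # hand the message to the transport
        return seq

    def on_ack(self, seq):
        # Assumed cumulative ack: everything up to and including seq was received.
        for s in [s for s in self.unacked if s <= seq]:
            del self.unacked[s]

    def on_reconnect(self, wire):
        # Anything not yet acknowledged may have been lost with the old connection,
        # so resend it, in order, on the new one.
        for seq, payload in self.unacked.items():
            wire.append((seq, payload))

sender = AckingSender()
old_wire = []
for msg in (b"a", b"b", b"c"):
    sender.send(msg, old_wire)
sender.on_ack(0)                 # the peer confirmed only the first message
print(len(sender.unacked))       # 2, analogous to Unacknowledged Message Queue
new_wire = []                    # the connection broke and was reestablished
sender.on_reconnect(new_wire)
print(new_wire)                  # [(1, b'b'), (2, b'c')]
```

A resent message may already have arrived before the connection broke. For that reason, the receiver in a scheme like this would discard duplicate sequence numbers.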

Multicast Request Reply

Multicast Request Reply (MRR) is a communication primitive in the cluster. You can think of it as an RPC that lets you send a call to multiple recipients and then get a response from all of them. This component also has an internal queue, and Messages Outstanding tells you the length of this queue; usually it is close to 0. Messages Sent shows how many MRRs have been sent since the server started, and the Delta counter tells you how much that number changed since the last sampling interval.
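To illustrate the shape of this primitive, here is a minimal single-process Python sketch. The `MulticastRequestReply` class, the handler callables standing in for remote nodes, and the thread pool used to fan out calls are hypothetical. The sketch mirrors only the behavior described above: one request goes to several recipients, the caller waits for every reply, and the counters track the total number of MRRs sent, the MRRs still in flight, and the change between samples.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

class MulticastRequestReply:
    """Hypothetical in-process model of a multicast request/reply primitive."""

    def __init__(self, handlers):
        self.handlers = handlers   # recipient name -> callable(request) -> reply
        self.messages_sent = 0     # cumulative, like Messages Sent
        self.outstanding = 0       # in flight, like Messages Outstanding
        self._last_sample = 0
        self._lock = threading.Lock()
        self._pool = ThreadPoolExecutor(max_workers=max(1, len(handlers)))

    def call(self, request):
        with self._lock:
            self.messages_sent += 1
            self.outstanding += 1
        try:
            # Send the same request to every recipient, then wait for all replies.
            futures = {name: self._pool.submit(h, request) for name, h in self.handlers.items()}
            return {name: f.result() for name, f in futures.items()}
        finally:
            with self._lock:
                self.outstanding -= 1

    def sample_delta(self):
        """Return how many MRRs were sent since the previous sample."""
        with self._lock:
            delta = self.messages_sent - self._last_sample
            self._last_sample = self.messages_sent
            return delta

    def close(self):
        self._pool.shutdown(wait=True)

mrr = MulticastRequestReply({
    "vpclus01": lambda req: f"vpclus01: {req} ok",
    "vpclus22": lambda req: f"vpclus22: {req} ok",
})
print(mrr.call("query state"))  # {'vpclus01': 'vpclus01: query state ok', 'vpclus22': 'vpclus22: query state ok'}
mrr.call("query state")
print(mrr.sample_delta())       # 2
mrr.call("query state")
print(mrr.sample_delta())       # 1
mrr.close()
```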

Many components in the cluster use MRR.

One of the heavy MRR users is the Cluster API. For instance, when you run Get-ClusterResource in PowerShell to display all the resources, the cluster has to communicate with each resource's current owner to query the resource state, and this communication is done using MRRs. If your resources are spread across cluster nodes and you enumerate them on one of the nodes, you will observe an MRR rate similar to the one shown in the picture.

Another MRR user is the Global Update Manager, which we discuss next.

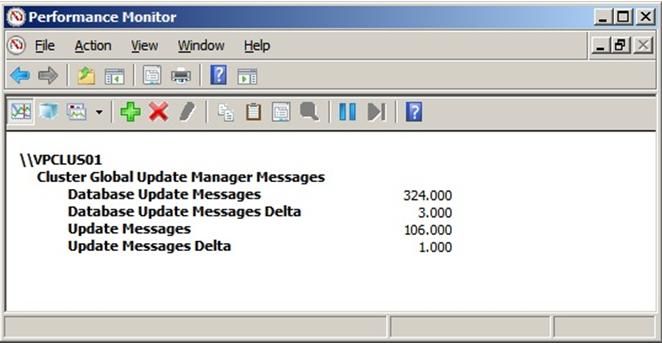

Global Update Manager

The Global Update Manager is one of the components that keep the cluster's state consistent across all its nodes. As you have probably guessed from the name, this component is responsible for applying an update on all the nodes in the cluster and making sure that multiple updates are properly serialized.
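The serialization guarantee can be pictured with a minimal single-process Python sketch. This is not how the Global Update Manager is implemented. The real component coordinates separate machines, whereas this sketch uses a `threading.Lock` and in-memory `Node` objects (both hypothetical stand-ins) to model one update at a time being applied to every node in the same order. The `GroupOwner` key and its values are also made up.

```python
import threading

class Node:
    """Hypothetical stand-in for one cluster node's copy of the state."""

    def __init__(self, name):
        self.name = name
        self.state = {}
        self.applied = []  # sequence numbers in the order this node applied them

    def apply(self, seq, key, value):
        self.state[key] = value
        self.applied.append(seq)

class SerializedUpdater:
    """Apply each update to all nodes before the next update may start."""

    def __init__(self, nodes):
        self.nodes = nodes
        self._lock = threading.Lock()  # only one update in progress at a time
        self._seq = 0

    def update(self, key, value):
        with self._lock:
            self._seq += 1
            for node in self.nodes:
                node.apply(self._seq, key, value)
            return self._seq

nodes = [Node("vpclus01"), Node("vpclus22")]
updater = SerializedUpdater(nodes)
threads = [
    threading.Thread(target=updater.update, args=("GroupOwner", f"owner-{i}"))
    for i in range(10)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Concurrent callers, yet both nodes saw the same updates in the same order.
print(nodes[0].applied == nodes[1].applied)  # True
print(nodes[0].state == nodes[1].state)      # True
```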

Database Update Messages make changes only to the cluster database. For instance, this happens when you change a property of a resource or a group.

Update Messages might also make changes to the database, but in some cases they only modify volatile cluster state that is not stored in the database. You will see this counter change when you move a group between nodes.

Many components in the cluster rely on the Global Update Manager.

Database

The database is the cluster component that provides persistent, consistent, and replicated storage. You can find more information on how the database works on MSDN and in the Global Update Manager section above.

You can see how many database updates are happening using the Global Update Manager counters (see above). The two counters in the picture below give you some visibility into how the transaction mechanism is functioning. When the cluster writes data to the database, the data is first written to the transaction log, and only after that to the database. This way, if a node crashes, we can replay the transaction log and make sure the database is consistent.

In addition, when the cluster writes data to the database, it first only updates an in-memory cache, and that cache is flushed several seconds later. By default we flush the database every 20 seconds. However, if there are too many database updates, or if the updates are very large, the transaction log might overflow. In that case the cluster flushes the database and cleans up the log before the 20 seconds have expired.
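The flush policy described above can be modeled with a short Python sketch. Only the 20-second default comes from the text. The `FlushPolicySketch` class, the byte-based log capacity, and the explicit `now` timestamps are assumptions chosen to keep the example deterministic. The `flushes` dictionary plays the role of the flush counters, split by whether the timer or a nearly full log triggered the flush.

```python
class FlushPolicySketch:
    """Hypothetical model: flush on a timer, or early if the log would overflow."""

    def __init__(self, flush_interval_s=20.0, log_capacity_bytes=1000):
        self.flush_interval_s = flush_interval_s
        self.log_capacity_bytes = log_capacity_bytes  # illustrative, not the real limit
        self.log_bytes = 0   # bytes currently in the transaction log
        self.cache = {}      # in-memory cache of pending changes
        self.database = {}   # flushed, persistent state
        self.last_flush = 0.0
        self.flushes = {"timer": 0, "log_full": 0}

    def write(self, now, key, value, size_bytes):
        if self.log_bytes + size_bytes > self.log_capacity_bytes:
            self._flush(now, "log_full")  # flush before the interval has expired
        self.log_bytes += size_bytes       # record the change in the transaction log
        self.cache[key] = value            # then update the in-memory cache

    def tick(self, now):
        if now - self.last_flush >= self.flush_interval_s:
            self._flush(now, "timer")

    def _flush(self, now, reason):
        self.database.update(self.cache)
        self.cache.clear()
        self.log_bytes = 0  # once the database holds the changes, the log can be cleaned up
        self.last_flush = now
        self.flushes[reason] += 1

policy = FlushPolicySketch()
for t in range(3):  # three 400-byte writes in quick succession
    policy.write(now=float(t), key=f"k{t}", value=t, size_bytes=400)
print(policy.flushes)   # {'timer': 0, 'log_full': 1}
policy.tick(now=10.0)   # only 8 s since the early flush: nothing happens
policy.tick(now=22.0)   # 20 s since the early flush: timer-driven flush
print(policy.flushes)   # {'timer': 1, 'log_full': 1}
print(policy.database)  # {'k0': 0, 'k1': 1, 'k2': 2}
```

In this sketch an early flush also restarts the 20-second timer. Whether the cluster service behaves the same way is an assumption.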

These performance counters help you monitor how often database flushes happen.

Stay tuned for Part 2, where we will discuss the Checkpoint Manager, Resource Control Manager, Resource Types, APIs, and Cluster Shared Volumes.

Thanks,

Vladimir Petter

Senior Software Development Engineer

Clustering & High-Availability

Microsoft