Computer Vision Read (OCR) API previews new languages and Docker containers


Overview

Businesses today are racing to convert their scanned paper documents, digital files, and even on-screen content into actionable insights. These insights power knowledge mining, business process automation, and accessibility for everyone regardless of the source of content, location of users, and the language and medium of communication.

Optical Character Recognition (OCR) is the foundational technology that drives the digitization of content today by extracting text from images, documents, and screens. Several OCR technology providers offer this capability as services, tools, and solutions, both in the cloud and for deployment within your environment.

However, there are several challenges to successfully implementing OCR at scale.

Challenges

Text extraction quality

To extract text with high accuracy from the diverse content types, formats, and mediums, your OCR should be of the highest out-of-the-box quality, work on a variety of content textures, fonts, and styles, and be easy to integrate by using cloud APIs and SDKs.

Cloud and on-premises compliance

If you are a business that serves customers in healthcare, insurance, banking, or other verticals with additional data privacy and security requirements, you typically require not just secure online access but the flexibility to deploy within your network to ensure that the personal data does not leave your network.

Mixed languages

Your customers and users are global, so your OCR should also support international languages and locales. Their documents most likely contain text in multiple languages, and you cannot identify those languages in advance when you are scanning at scale.

Handwritten text

Finally, your documents and forms have both print and handwritten text. To combat this challenge, you should use a technology that seamlessly handles both styles of text in the same document.

Computer Vision Read (OCR)

Microsoft’s Computer Vision OCR (Read) capability is available as a Cognitive Services Cloud API and as Docker containers. Customers use it in diverse scenarios on the cloud and within their networks to solve the challenges listed in the previous section.

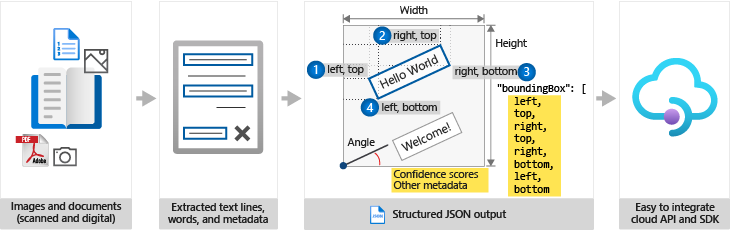

The following figure illustrates the high-level flow of the OCR process. The input is your document or image. The service extracts the text and converts it into a structured JSON response that includes the extracted text lines and words with their bounding boxes and confidence scores. You integrate with the service or the containers with a simple API that’s described next.
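To make the response shape concrete, here is a minimal sketch that walks a small hand-written sample and collects each line with its words. The sample payload is illustrative, not real service output. The `words` and `confidence` field names are assumptions based on the description above, and `extract_lines` and `low_confidence_words` are hypothetical helper names.

```python
from typing import Any, Dict, List, Tuple

# Hand-written sample in the shape described above (not real service output).
# The "words" and "confidence" field names are assumptions for illustration.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "status": "succeeded",
    "analyzeResult": {
        "readResults": [
            {
                "page": 1,
                "lines": [
                    {
                        "boundingBox": [10, 10, 120, 10, 120, 30, 10, 30],
                        "text": "Hello world",
                        "words": [
                            {"boundingBox": [10, 10, 60, 10, 60, 30, 10, 30],
                             "text": "Hello", "confidence": 0.98},
                            {"boundingBox": [70, 10, 120, 10, 120, 30, 70, 30],
                             "text": "world", "confidence": 0.61},
                        ],
                    }
                ],
            }
        ]
    },
}


def extract_lines(
    response: Dict[str, Any],
) -> List[Tuple[int, str, List[Tuple[str, float]]]]:
    """Return (page, line text, [(word, confidence), ...]) for every line."""
    results = []
    for page in response.get("analyzeResult", {}).get("readResults", []):
        for line in page.get("lines", []):
            words = [(w["text"], w["confidence"]) for w in line.get("words", [])]
            results.append((page.get("page", 0), line["text"], words))
    return results


def low_confidence_words(response: Dict[str, Any], threshold: float = 0.8) -> List[str]:
    """Return the text of every word whose confidence is below the threshold."""
    return [
        word
        for _, _, words in extract_lines(response)
        for word, confidence in words
        if confidence < threshold
    ]


for page, text, words in extract_lines(SAMPLE_RESPONSE):
    print(page, text, words)
```

A business process could use something like `low_confidence_words` to route uncertain words to human review; the 0.8 threshold is arbitrary.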

At its core, the OCR process consists of two operations. You use the Read operation to submit your image or document, which starts an asynchronous process. You then poll the Get Read Results operation until that process completes.

Read operation

Call the Read operation to extract the text. The call returns with a response header field called Operation-Location. The Operation-Location value is a URL that contains the Operation ID to be used in the next step.

See the full Read OCR REST API QuickStart in Python for the following code snippets.

    import requests

    # endpoint and subscription_key are assumed to hold your Computer Vision
    # resource's endpoint URL and key, as set up in the QuickStart.
    text_recognition_url = endpoint + "/vision/v3.0/read/analyze"

    # Set image_url to the URL of an image that you want to recognize.
    image_url = "https://raw.githubusercontent.com/MicrosoftDocs/azure-docs/master/articles/cognitive-services/Computer-vision/Images/readsample.jpg"

    headers = {'Ocp-Apim-Subscription-Key': subscription_key}
    data = {'url': image_url}
    response = requests.post(
        text_recognition_url, headers=headers, json=data)
    response.raise_for_status()

    # Extracting text requires two API calls: One call to submit the
    # image for processing, the other to retrieve the text found in the image.

    # Holds the URI used to retrieve the recognized text.
    operation_url = response.headers["Operation-Location"]
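If you need the operation ID on its own, for logging or for storing alongside a job record, one way to get it is to take the last path segment of the Operation-Location URL. This is a sketch that assumes the ID is the final path segment; the helper name and the example URL (host and ID) are made up for illustration.

```python
from urllib.parse import urlparse


def operation_id_from_location(operation_location: str) -> str:
    """Return the last path segment of an Operation-Location URL."""
    path = urlparse(operation_location).path
    return path.rstrip("/").rsplit("/", 1)[-1]


# Hypothetical header value; the host and ID are invented for this example.
example = (
    "https://example.cognitiveservices.azure.com/vision/v3.0/read/"
    "analyzeResults/d4b4a8ac-1111-2222-3333-444455556666"
)
print(operation_id_from_location(example))
```

In most cases you can skip this step and poll the full URL directly, as the next snippet does.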

Get Read Results operation

Call the Get Read Results operation until it returns with a completed status. This operation takes as input the operation ID that was created by the Read operation. It returns a JSON response that contains a status field with one of the following values: notStarted, running, failed, or succeeded.

    import json
    import time

    # The recognized text isn't immediately available, so poll to wait for completion.
    analysis = {}
    poll = True
    while poll:
        response_final = requests.get(operation_url, headers=headers)
        response_final.raise_for_status()
        analysis = response_final.json()
        print(json.dumps(analysis, indent=4))

        # Stop when results are available or the operation has failed;
        # otherwise wait a second before asking again.
        if "analyzeResult" in analysis:
            poll = False
        elif analysis.get("status") == "failed":
            poll = False
        else:
            time.sleep(1)

    polygons = []
    if "analyzeResult" in analysis:
        # Extract the recognized text, with bounding boxes.
        polygons = [(line["boundingBox"], line["text"])
                    for line in analysis["analyzeResult"]["readResults"][0]["lines"]]
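The loop above polls indefinitely if the operation never reaches a final state. A minimal sketch of a bounded variant follows. The helper name `wait_for_read_result`, its parameters, and the default timeout are all assumptions for illustration; the `fetch` callable is injected so the logic can be exercised without a network call.

```python
import time
from typing import Any, Callable, Dict


def wait_for_read_result(
    fetch: Callable[[], Dict[str, Any]],
    timeout_s: float = 60.0,
    interval_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Call fetch() until the status is final or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        analysis = fetch()
        status = analysis.get("status")
        # "succeeded" and "failed" are treated as the final states.
        if status in ("succeeded", "failed"):
            return analysis
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"Read operation still '{status}' after {timeout_s} seconds"
            )
        sleep(interval_s)


# Simulated sequence of responses standing in for real Get Read Results calls.
fake_responses = iter([
    {"status": "running"},
    {"status": "succeeded", "analyzeResult": {"readResults": []}},
])
result = wait_for_read_result(lambda: next(fake_responses), interval_s=0)
print(result["status"])
```

With the variables from the snippets above, a real call might look like `wait_for_read_result(lambda: requests.get(operation_url, headers=headers).json())`.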

New Cloud API and Container releases

During Ignite 2020, we are announcing new cloud service and container releases.

New features in Read 3.1 preview (cloud and container)

The new Read 3.1 preview for cloud and containers adds these capabilities:

1. Support for Simplified Chinese and Japanese languages. See all supported languages.

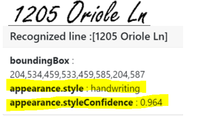

- The following images show Simplified Chinese and Japanese text lines extracted respectively, along with their bounding boxes (locations).

OCR (Read) Simplified Chinese

OCR (Read) Japanese

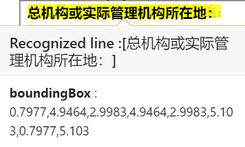

2. Indicating whether the appearance of text is Handwriting or Print style, along with a confidence score (Latin languages only).

- The following image shows that the handwritten style text is not only correctly extracted but its appearance is recognized as handwriting style along with a confidence score for more granular control by the invoking business process.

3. Ability to extract text for selected pages or page range within multi-page documents.

- The following JSON response contains only the selected pages (201 and 202). It was returned when the Read operation was called with a pages query parameter of “201,202” for a 500-page document.

    {
        "status": "succeeded",
        "createdDateTime": "2020-09-08T19:23:19Z",
        "lastUpdatedDateTime": "2020-09-08T19:23:35Z",
        "analyzeResult": {
            "version": "3.1.0",
            "readResults": [
                {
                    "page": 201,
                    "angle": 0,
                    "width": 8.2639,
                    "height": 11.6944,
                    "unit": "inch",
                    "language": "",
                    "lines": [...]
                },
                {
                    "page": 202,
                    "angle": 0,
                    "width": 8.2639,
                    "height": 11.6944,
                    "unit": "inch",
                    "language": "",
                    "lines": [...]
                }
            ]
        }
    }
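A request like the one that produced this response could be built as in the sketch below. Only the `pages` query parameter and its “201,202” value come from this post. The helper name `build_read_url`, the example endpoint, and the default version string in the path are assumptions; check the API reference for the exact preview path.

```python
from typing import Iterable, Optional, Union
from urllib.parse import urlencode


def build_read_url(
    endpoint: str,
    pages: Optional[Iterable[Union[int, str]]] = None,
    version: str = "v3.1-preview.2",  # assumed version segment, not confirmed here
) -> str:
    """Build a Read analyze URL, optionally limited to selected pages."""
    url = f"{endpoint.rstrip('/')}/vision/{version}/read/analyze"
    if pages:
        # Join page numbers or ranges such as "3-5" into one comma-separated value.
        value = ",".join(str(p) for p in pages)
        # Keep commas readable instead of percent-encoding them.
        url += "?" + urlencode({"pages": value}, safe=",")
    return url


# Hypothetical endpoint; substitute your own resource's endpoint.
print(build_read_url("https://example.cognitiveservices.azure.com/", [201, 202]))
```

The resulting URL would then be used in place of `text_recognition_url` in the Read operation snippet earlier in this post.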

This preview version of the Read API supports English, Dutch, French, German, Italian, Japanese, Portuguese, Simplified Chinese, and Spanish languages.

Read 3.1 cloud API preview

We are releasing the new Read 3.1 preview cloud API with the following features:

- Support for Simplified Chinese and Japanese

- Print vs. handwriting appearance for each text line with confidence scores

- Extract text from only selected page(s) from a large multi-page document

See the Read API overview to learn more.
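As a rough illustration of how a business process might use the print vs. handwriting signal, the sketch below filters lines by appearance and confidence. The `appearance`, `style`, and `styleConfidence` field names, the sample lines, and the `handwritten_lines` helper are all assumptions made for this example; consult the Read API reference for the actual preview schema.

```python
from typing import Any, Dict, List

# Hand-written sample lines, not real service output. The "appearance" layout
# below is an assumed schema used only to illustrate the filtering logic.
SAMPLE_LINES: List[Dict[str, Any]] = [
    {"text": "Invoice 1042",
     "appearance": {"style": "print", "styleConfidence": 0.99}},
    {"text": "Approved - J.D.",
     "appearance": {"style": "handwriting", "styleConfidence": 0.86}},
    {"text": "call back?",
     "appearance": {"style": "handwriting", "styleConfidence": 0.42}},
]


def handwritten_lines(lines: List[Dict[str, Any]], min_confidence: float = 0.5) -> List[str]:
    """Return the text of lines classified as handwriting with enough confidence."""
    selected = []
    for line in lines:
        appearance = line.get("appearance", {})
        if (appearance.get("style") == "handwriting"
                and appearance.get("styleConfidence", 0.0) >= min_confidence):
            selected.append(line["text"])
    return selected


print(handwritten_lines(SAMPLE_LINES))
```

Raising or lowering `min_confidence` is the kind of granular control the confidence score makes possible.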

Read 3.0 and Read 3.1 container previews

We are announcing two new container releases.

The Read 3.0 container preview

The Read 3.0 container preview is the on-premises version of the Read 3.0 Cloud API that’s generally available (GA) today.

The Read 3.0 container preview is a significant upgrade to the Read 2.0 container preview that’s available today. Major features include:

- Enhanced accuracy based on updated deep learning models

- Support for Dutch, English, French, German, Italian, Portuguese, and Spanish

- Support for multiple languages within the same document

- Single operation for both documents and images

- Support for significantly larger documents and images

- Confidence scores with a full range of 0 to 1 instead of labels (“low” only), for granular visibility

- Support for mixed-mode documents with print and handwritten style text

The Read 3.1 container preview

The Read 3.1 container preview is the on-premises version of the same Read 3.1 API preview features we reviewed in the previous section. Moving forward, Read 3.1 and newer versions will add expanded language coverage and enhancements.

The Read 3.1 container preview includes everything that Read 3.0 has and adds the following additional capabilities covered in the previous section:

- Support for Simplified Chinese and Japanese

- Print vs. handwriting appearance for each text line with confidence scores

- Extract text from only selected page(s) from a large multi-page document

- A unified code base and architecture that keeps the container in step with future cloud API releases

Get started with containers

Learn how to install and run the Read containers to get started and find the recommended configuration settings. If you are using Read 2.0 containers today, a migration guide is available for help along the way.
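Because the cloud service and the containers share one API, client code can often switch between them by changing only the endpoint. The sketch below assumes that a running container exposes the same REST path as the cloud API and that the local call needs no subscription key header. The helper name, the `http://localhost:5000` address, and the cloud endpoint are hypothetical; verify the details against the container documentation.

```python
from typing import Dict, Optional, Tuple


def read_request_target(
    endpoint: str,
    subscription_key: Optional[str] = None,
    version: str = "v3.0",
) -> Tuple[str, Dict[str, str]]:
    """Return the analyze URL and headers for a cloud or container endpoint."""
    url = f"{endpoint.rstrip('/')}/vision/{version}/read/analyze"
    headers: Dict[str, str] = {}
    if subscription_key:
        # Assumption: only cloud calls pass the key as a request header.
        headers["Ocp-Apim-Subscription-Key"] = subscription_key
    return url, headers


# Cloud resource (hypothetical endpoint and placeholder key).
cloud_url, cloud_headers = read_request_target(
    "https://example.cognitiveservices.azure.com", "<your-key>"
)
# Container inside your network (hypothetical host and port).
local_url, local_headers = read_request_target("http://localhost:5000")

print(cloud_url)
print(local_url)
```

Keeping the endpoint and key in configuration lets the same submission and polling code serve both deployments.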

When to deploy which version

Can’t wait? Deploy the Read 3.0 container preview knowing that this release is on track for general availability (GA) soon. Want more languages and enhancements? Deploy the Read 3.1 container preview if you can wait a tad longer for more features.

Deploy the Read container of your choice

What customers say

Instabase

Instabase is a technology platform for business productivity applications that can be deployed in the cloud or on-premises.

"Microsoft's Read OCR container technology provides a powerful option that our customers can leverage to read text from documents, which Instabase uses to generate understanding, without data ever leaving their firewall, ensuring data privacy and security. This is essential for our banking and enterprise customers." - Justin Herlick, Product Manager, Instabase

GE Aviation

While mandatory for regulatory compliance, assembling a complete back-to-birth aircraft maintenance record is an expensive, time-consuming, and unreliable process. GE Aviation’s Digital Solutions Group built the AirVault solution to solve the challenge.

“It can be a huge task across an airline's entire fleet to record and then easily retrieve evidence of maintenance activity or compliance to an Airworthiness Directive. A lot of what gets archived, things like parts receipts, vendor airworthiness certificates or maintenance records are still paper-based, and these documents can contain both handwriting as well as printed text. Microsoft’s Computer Vision OCR technology helped us to greatly enhance our full text word search capability during the conversion of paper documents to digital format as well as documents that were never printed in the first place with scale, speed, and accuracy.” - Nate Hicks, Sr. Product Group Leader at GE Aviation Digital Solutions

NHS Business Services Authority

The UK’s NHS Business Services Authority (NHS BSA) is a Special Health Authority and an arm's length body of the Department of Health and Social Care (DHSC).

“We believe that we can do so much more by using AI to read our documentation, to read more fields on that, and to read handwritten info, and to use that AI engine to deliver better taxpayer value, to deliver better outcomes, and deliver better patient safety.” - Michael Brodie, Chief Executive, NHS BSA

Get Started

- Create a Computer Vision resource in Azure and follow one of our QuickStarts.

- Learn more about OCR (Read) and Form Recognizer.

- Learn more about the Read containers and download them from Docker Hub.

- Write to us at formrecog_contact@microsoft.com
