First published on MSDN on Jan 09, 2019

A distributed availability group (distributed AG) is a special type of availability group that spans two availability groups. This blog will clarify some issues regarding failover in a distributed AG, specifically demonstrates distributed AG resiliency from data loss despite the synchronizing state between the availability groups.

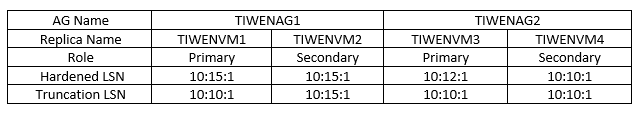

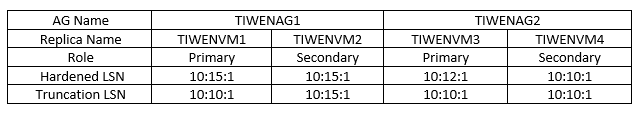

A simple distributed AG ‘TIWENDAG’ is created for relevant tests that will be addressed later in this blog. The structure of ‘TIWENDAG’ is illustrated by the following diagram. ‘TIWENAG1’ is the primary AG of distributed AG ‘TIWENDAG’, with ‘TIWENVM1’ as the primary replica and ‘TIWENVM2’ as the secondary replica. ‘TIWENAG2’ is the secondary AG of the distributed AG, with ‘TIWENVM3’ as the primary replica and ‘TIWENVM4’ as the secondary replica.

Automatic failover of the two availability groups defined in the distributed AG is supported. For example, if “automatic” is specified for failover mode in availability group TIWENAG1, then automatic failover can occur between TIWENAG1 (primary) and TIWENAG2 (synchronous secondary) in the event that TIWENAG1 goes down. Similarly, in availability group TIWENAG2, in the event TIWENVM3 (primary) goes down, it can automatically failover to TIWENVM4 (synchronous secondary).

the primary replica in the AG (no matter whether the AG is part of a distributed AG) will automatically failover to a synchronous secondary replica when the primary replica goes down.

If the primary replica on the secondary AG (also known as the “forwarder”) is lost and causes automatic failover to happen or manual failover is performed in the secondary AG in a distributed AG, there will be no data loss if the following conditions are met:

Assume that the hardened LSN on the global primary, “TIWENVM1”, is 10:15:1. The secondary replica of the primary AG, “TIWENVM2”, is synchronous, and its hardened LSN is also 10:15:1. For the secondary AG, between the primary AG and the forwarder, synchronization mode is asynchronous, and the hardened LSN of the forwarder, “TIWENVM3”, is 10:12:1. The secondary replica of the secondary AG, “TIWENVM4”, is not yet synchronized and its hardened LSN is 10:10:1. Globally across the two AGs in the distributed AG, replica “TIWENVM4” has the smallest value of hardened LSN, truncation LSN on the global primary won’t go beyond this smallest value. In the event that “TIWENVM3” is lost and “TIWENVM4” becomes the new forwarder, it will be able to synchronize with the primary AG.

The reason for this is that when the transaction logs are backed up, only the logs that have been applied to all the replicas in the distributed AG (including the replicas in both AGs) will be truncated. The following table provides an example of how the transaction logs will be truncated in a distributed AG, using the test environment described at the beginning of this post.

This scenario can be easily demonstrated. The steps are as following:

The following screenshots record a complete repro of the scenario.

Step1 -

DMVs used in this step:

Results from the four replicas :

Primary replica on primary AG (global primary):

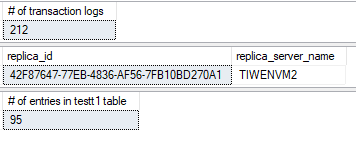

Secondary replica on primary AG:

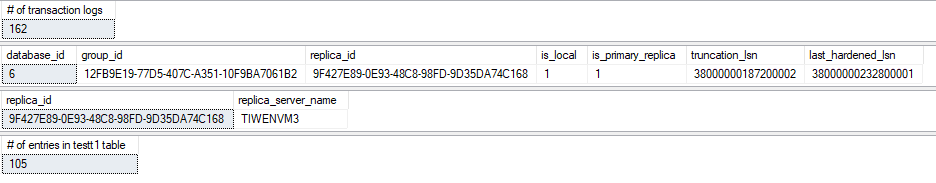

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

If all the replicas are synchronized, all the replicas should have the same number of transaction logs as well as the same truncation_lsn as in the test environment shown in the screenshots above.

Step 2 -

Execute an

Step 3 -

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

The size of transaction logs grows due to the

Step 4 -

Perform a transaction log backup on the global primary.

Step 5 -

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

Truncation_lsn of each replica should grow to the current lsn, and the number of transaction logs should shrink by the same size as the t-logs have been truncated.

Step 6 -

Suspend data movement of ‘test’ database on the secondary replica on the secondary AG.

Step 7 -

After repeating step 2 and 3, we can see that except for the secondary replica on the secondary AG (TIWENVM4), the ‘test’ database on all other three replicas are modified again and another 10 entries are inserted into the table. The database on secondary replica on the secondary AG is not updated because the data movement has been suspended.

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

The results from repeating step 4 and 5 are shown below.

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

Note that the newly generated transaction logs were truncated on the secondary replica on the primary AG, but the truncation_lsn of the primary replica on the primary didn’t change after 10 new rows were inserted into the database and executing transaction logs back up. As mentioned earlier, this is because the secondary replica on the secondary AG of the distributed AG (TIWENVM4) is not yet synchronized and thus has not successfully applied the new transaction logs generated by the

The global primary of a distributed AG only truncates logs to the least LSN among all the replicas in the distributed AG. This logic guarantees that there’ll be no data loss when the forwarder got lost even when the secondary replica on the secondary AG has not fully synchronized with the forwarder, as long as the global primary is in a healthy state and can communicate with the secondary replica on the secondary AG properly.

To simulate the scenario mentioned above, we shut down the network of the primary replica on the secondary AG (TIWENVM3) so that it becomes unavailable before we resume the data movement on the secondary replica on the secondary AG (TIWENVM4).

After the data movement of ‘test’ database on TIWENVM4 is resumed, the secondary AG (TIWENAG2) is now in a resolving state because the current primary replica on TIWENAG2, TIWENVM3, fails to be connected. To fix this issue, a failover needs to be performed. Under the scenario when in the secondary AG of a distributed AG, the forwarder is not available, the only failover option to the secondary replica of the secondary AG is

After performing failover with

In this scenario, though the only failover option is

One thing worth notice is that after

After resuming data movement on TIWENVM3, all replicas on the distributed AG are synchronized and the transaction logs get truncated to the same point.

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

(New) Secondary replica on secondary AG (former forwarder):

(New) Primary replica on secondary AG (new forwarder):

It needs to be clarified that though under this scenario,

A distributed availability group (distributed AG) is a special type of availability group that spans two availability groups. This blog will clarify some issues regarding failover in a distributed AG, specifically demonstrates distributed AG resiliency from data loss despite the synchronizing state between the availability groups.

A simple distributed AG ‘TIWENDAG’ is created for relevant tests that will be addressed later in this blog. The structure of ‘TIWENDAG’ is illustrated by the following diagram. ‘TIWENAG1’ is the primary AG of distributed AG ‘TIWENDAG’, with ‘TIWENVM1’ as the primary replica and ‘TIWENVM2’ as the secondary replica. ‘TIWENAG2’ is the secondary AG of the distributed AG, with ‘TIWENVM3’ as the primary replica and ‘TIWENVM4’ as the secondary replica.

Automatic failover of the two availability groups defined in the distributed AG is supported. For example, if “automatic” is specified for failover mode in availability group TIWENAG1, then automatic failover can occur between TIWENAG1 (primary) and TIWENAG2 (synchronous secondary) in the event that TIWENAG1 goes down. Similarly, in availability group TIWENAG2, in the event TIWENVM3 (primary) goes down, it can automatically failover to TIWENVM4 (synchronous secondary).

the primary replica in the AG (no matter whether the AG is part of a distributed AG) will automatically failover to a synchronous secondary replica when the primary replica goes down.

If the primary replica on the secondary AG (also known as the “forwarder”) is lost and causes automatic failover to happen or manual failover is performed in the secondary AG in a distributed AG, there will be no data loss if the following conditions are met:

- The primary replica on the primary AG (which is usually referred to as "global primary") is successfully synchronizing with the forwarder replica in the secondary AG over their database mirroring endpoints.

- The forwarder replica in the secondary AG is successfully synchronizing with the secondary replica in the secondary AG over their database mirroring endpoints.

Assume that the hardened LSN on the global primary, “TIWENVM1”, is 10:15:1. The secondary replica of the primary AG, “TIWENVM2”, is synchronous, and its hardened LSN is also 10:15:1. For the secondary AG, between the primary AG and the forwarder, synchronization mode is asynchronous, and the hardened LSN of the forwarder, “TIWENVM3”, is 10:12:1. The secondary replica of the secondary AG, “TIWENVM4”, is not yet synchronized and its hardened LSN is 10:10:1. Globally across the two AGs in the distributed AG, replica “TIWENVM4” has the smallest value of hardened LSN, truncation LSN on the global primary won’t go beyond this smallest value. In the event that “TIWENVM3” is lost and “TIWENVM4” becomes the new forwarder, it will be able to synchronize with the primary AG.

The reason for this is that when the transaction logs are backed up, only the logs that have been applied to all the replicas in the distributed AG (including the replicas in both AGs) will be truncated. The following table provides an example of how the transaction logs will be truncated in a distributed AG, using the test environment described at the beginning of this post.

This scenario can be easily demonstrated. The steps are as following:

- Record the current truncation_lsn and last hardened LSN of each replica.

-

Modify the database. Here an

INSERTquery is executed 10 times on the “test” database. - Verify that the last hardened LSN of each replica grows by the same number.

- Do a transaction logs back up on the “test” database on the global primary.

- Verify current truncation_lsn and last hardened LSN .

- Suspend data movement on the secondary replica on the secondary AG.

- Repeat 2-5.

The following screenshots record a complete repro of the scenario.

Step1 -

DMVs used in this step:

USE test

SELECT COUNT(*) AS '# of transaction logs' FROM fn_dblog(null, null)

SELECT database_id,

group_id,

replica_id,

is_local,

is_primary_replica,

truncation_lsn,

last_hardened_lsn

FROM sys.dm_hadr_database_replica_states

WHERE database_id=6 AND is_local=1

SELECT replica_id, replica_server_name

FROM sys.availability_replicas

WHERE replica_id=

(SELECT replica_id FROM sys.dm_hadr_database_replica_states WHERE database_id=6 AND is_local=1)

SELECT COUNT(*) AS '# of entries in testt1 table' FROM testt1

Results from the four replicas :

Primary replica on primary AG (global primary):

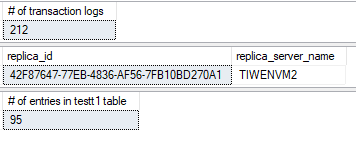

Secondary replica on primary AG:

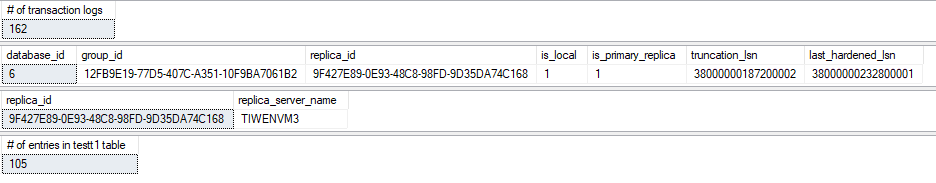

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

If all the replicas are synchronized, all the replicas should have the same number of transaction logs as well as the same truncation_lsn as in the test environment shown in the screenshots above.

Step 2 -

Execute an

INSERT

clause 10 times.

INSERT INTO testt1 (NAME, ID) VALUES ('John Smith', 1)

GO

Step 3 -

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

The size of transaction logs grows due to the

INSERT

executions. The increase of entries in the ‘test’ database also reflects that 10 rows are inserted into the table.

Step 4 -

Perform a transaction log backup on the global primary.

Step 5 -

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

Truncation_lsn of each replica should grow to the current lsn, and the number of transaction logs should shrink by the same size as the t-logs have been truncated.

Step 6 -

Suspend data movement of ‘test’ database on the secondary replica on the secondary AG.

Step 7 -

After repeating step 2 and 3, we can see that except for the secondary replica on the secondary AG (TIWENVM4), the ‘test’ database on all other three replicas are modified again and another 10 entries are inserted into the table. The database on secondary replica on the secondary AG is not updated because the data movement has been suspended.

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

The results from repeating step 4 and 5 are shown below.

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

Primary replica on secondary AG (forwarder):

Secondary replica on secondary AG:

Note that the newly generated transaction logs were truncated on the secondary replica on the primary AG, but the truncation_lsn of the primary replica on the primary didn’t change after 10 new rows were inserted into the database and executing transaction logs back up. As mentioned earlier, this is because the secondary replica on the secondary AG of the distributed AG (TIWENVM4) is not yet synchronized and thus has not successfully applied the new transaction logs generated by the

INSERT

queries.

The global primary of a distributed AG only truncates logs to the least LSN among all the replicas in the distributed AG. This logic guarantees that there’ll be no data loss when the forwarder got lost even when the secondary replica on the secondary AG has not fully synchronized with the forwarder, as long as the global primary is in a healthy state and can communicate with the secondary replica on the secondary AG properly.

To simulate the scenario mentioned above, we shut down the network of the primary replica on the secondary AG (TIWENVM3) so that it becomes unavailable before we resume the data movement on the secondary replica on the secondary AG (TIWENVM4).

After the data movement of ‘test’ database on TIWENVM4 is resumed, the secondary AG (TIWENAG2) is now in a resolving state because the current primary replica on TIWENAG2, TIWENVM3, fails to be connected. To fix this issue, a failover needs to be performed. Under the scenario when in the secondary AG of a distributed AG, the forwarder is not available, the only failover option to the secondary replica of the secondary AG is

FORCE_FAILOVER_ALLOW_DATA_LOSS

.

ALTER AVAILABILITY GROUP TIWENAG2 FORCE_FAILOVER_ALLOW_DATA_LOSS

After performing failover with

FORCE_FAILOVER_ALLOW_DATA_LOSS

on the secondary replica on the secondary AG (TIWENVM4), the database is synced even though the previous forwarder is currently unavailable, as the global primary keeps track of the unsynchronized part of transaction logs of the secondary replica on the secondary AG.

In this scenario, though the only failover option is

FORCE_FAILOVER_ALLOW_DATA_LOSS

, there’s no data loss during the failover.

One thing worth notice is that after

FORCE_FAILOVER_ALLOW_DATA_LOSS

and the previous primary replica (in this case, TIWENVM3) is brought back, data movement will not resume automatically and needs to be resumed manually. This behavior is by design and not limited to the distributed AG scenario but applies whenever failover with

FORCE_FAILOVER_ALLOW_DATA_LOSS

is performed. This is because there might be difference and even conflicts between the previous primary replica and the new primary replica, and user needs to manually choose which version to keep.

After resuming data movement on TIWENVM3, all replicas on the distributed AG are synchronized and the transaction logs get truncated to the same point.

Primary replica on primary AG (global primary):

Secondary replica on primary AG:

(New) Secondary replica on secondary AG (former forwarder):

(New) Primary replica on secondary AG (new forwarder):

It needs to be clarified that though under this scenario,

FORCE_FAILOVER_ALLOW_DATA_LOSS

in the secondary AG doesn’t cause data loss, it’s not guaranteed that there’ll be no data loss when using this option to perform failover in a distributed AG. For example, if the primary AG is lost, there might be data loss when a force failover is performed in the secondary AG. This behavior is expected, and the cases that might cause data loss are not limited to this example.

Updated Mar 24, 2019

Version 2.0SQL-Server-Team

Former Employee

Joined March 23, 2019

SQL Server Blog

Follow this blog board to get notified when there's new activity