Hi, this is Scott Johnson and I’m a Program Manager on the Windows File Server team. I’ve been at Microsoft for 17 years and I’ve seen a lot of cool technology in that time. Inside Windows Server 2012 we have included a pretty cool new feature called Data Deduplication that enables you to efficiently store, transfer and back up less data.

This is the result of an extensive collaboration with Microsoft Research (http://research.microsoft.com/en-us/news/features/deduplication-101311.aspx). After two years of development and testing we now have state-of-the-art deduplication that uses variable-size chunking and compression, and it can be applied to your primary data. The feature is designed for industry standard hardware and can run on a very small server with as little as a single CPU, one SATA drive and 4GB of memory. Data Deduplication will scale nicely as you add multiple cores and additional memory. This team has some of the smartest people I have worked with at Microsoft and we are all very excited about this release.

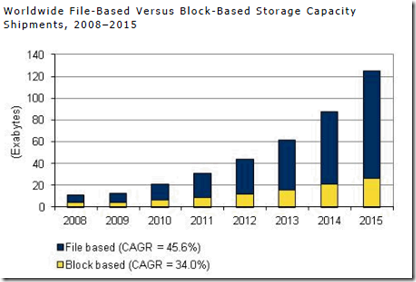

Does Deduplication Matter?

Hard disk drives are getting bigger and cheaper every year, so why would I need deduplication? Well, the problem is growth. Growth in data is exploding so much that IT departments everywhere will have some serious challenges fulfilling the demand. Check out the chart below where IDC has forecasted that we are beginning to experience massive storage growth. Can you imagine a world that consumes 90 million terabytes in one year? We are about 18 months away!

Source: IDC Worldwide File-Based Storage 2011-2015 Forecast: Foundation Solutions for Content Delivery, Archiving and Big Data, doc #231910, December 2011

Welcome to Windows Server 2012!

This new Data Deduplication feature is a fresh approach. We just submitted a paper on Primary Data Deduplication (https://www.usenix.org/conference/usenixfederatedconferencesweek/primary-data-deduplication%E2%80%94large-scale-study-and-system) to USENIX to be discussed at the upcoming Annual Technical Conference in June.

Typical Savings:

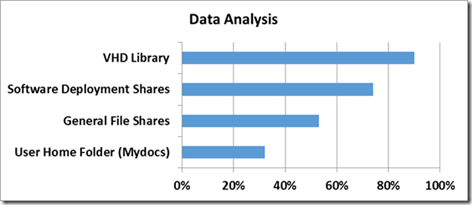

We analyzed many terabytes of real data inside Microsoft to get estimates of the savings you should expect if you turned on deduplication for different types of data. We focused on the core deployment scenarios that we support, including libraries, deployment shares, file shares and user/group shares. The Data Analysis table below shows the typical savings we were able to get from each type:

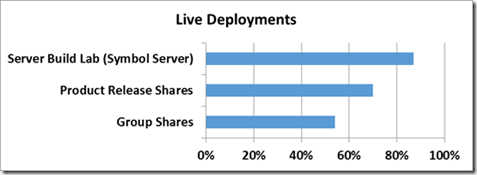

Microsoft IT has been deploying Windows Server with deduplication for the last year and they reported some actual savings numbers. These numbers validate that our analysis of typical data is pretty accurate. In the Live Deployments table below we have three very popular server workloads at Microsoft including:

- A build lab server: These are servers that build a new version of Windows every day so that we can test it. The debug symbols (http://en.wikipedia.org/wiki/Debug_symbol) they collect allow developers to investigate the exact line of code that corresponds to the machine code that a system is running. There are a lot of duplicates created since we only change a small amount of code on a given day. When teams release the same group of files under a new folder every day, there are a lot of similarities each day.

- Product release shares: There are internal servers at Microsoft that hold every product we’ve ever shipped, in every language. As you might expect, when you slice it up, 70% of the data is redundant and can be distilled down nicely.

- Group Shares: Group shares include regular file shares that a team might use for storing data, as well as environments that use Folder Redirection (http://technet.microsoft.com/en-us/library/cc778976.aspx) to seamlessly redirect the path of a folder (like a Documents folder) to a central location.

Below is a screenshot from the new Server Manager ‘Volumes’ interface on one of the build lab servers. Notice how much data we are saving on these 2TB volumes. The lab is saving over 6TB on each of these 2TB volumes and they’ve still got about 400GB free on each drive. These are some pretty fun numbers.

There is a clear return on investment that can be measured in dollars when using deduplication. The space savings are dramatic and the dollars saved can be calculated pretty easily when you pay by the gigabyte. I’ve had many people say that they want Windows Server 2012 just for this feature, because it could enable them to delay purchases of new storage arrays.
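To make that arithmetic concrete, here is a quick Python sketch that plugs in the build lab numbers quoted above (a 2TB volume, about 400GB free, about 6TB saved). The function name and the price per gigabyte are placeholders I made up for illustration; substitute whatever your storage actually costs.

```python
def savings_summary(capacity_tb, free_tb, saved_tb):
    """Return (used_tb, logical_tb, savings_rate) for a deduplicated volume."""
    used_tb = capacity_tb - free_tb        # space physically occupied on disk
    logical_tb = used_tb + saved_tb        # size the data would need without dedup
    savings_rate = saved_tb / logical_tb   # fraction of the logical data eliminated
    return used_tb, logical_tb, savings_rate


# Figures from the build lab volumes described in the post.
used, logical, rate = savings_summary(capacity_tb=2.0, free_tb=0.4, saved_tb=6.0)
print(f"used: {used:.1f} TB, logical: {logical:.1f} TB, savings rate: {rate:.0%}")

# ASSUMPTION: hypothetical price, not a number from this post.
PRICE_PER_GB = 0.50
dollars_saved = 6.0 * 1024 * PRICE_PER_GB
print(f"storage not purchased: ${dollars_saved:,.0f} per volume")
```

In other words, each of those 2TB volumes is holding roughly 7.6TB of logical data.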

Data Deduplication Characteristics:

1) Transparent and easy to use: Deduplication can be enabled (http://technet.microsoft.com/en-us/library/hh831700.aspx) on selected data volumes in a few seconds. Applications and end users will not know that the data has been transformed on the disk and when a user requests a file, it will be transparently served up right away. The file system as a whole supports all of the NTFS semantics that you would expect. Some files are not processed by deduplication, such as files encrypted using the Encrypting File System (EFS), files that are smaller than 32KB or those that have Extended Attributes (EAs). In these cases, the interaction with the files is entirely through NTFS and the deduplication filter driver does not get involved. If a file has an alternate data stream, only the primary data stream will be deduplicated and the alternate stream will be left on the disk.

2) Designed for Primary Data: The feature can be installed on your primary data volumes without interfering with the server’s primary objective. Hot data (files that are being written to) will be passed over by deduplication until the file reaches a certain age. This way you can get optimal performance for active files and great savings on the rest of the files. Files that meet the deduplication criteria are referred to as “in-policy” files.

a. Post Processing: Deduplication is not in the write-path when new files come along. New files write directly to the NTFS volume and the files are evaluated by a file groveler on a regular schedule. The background processing mode checks for files that are eligible for deduplication every hour and you can add additional schedules if you need them.

b. File Age: Deduplication has a setting called MinimumFileAgeDays that controls how old a file should be before processing the file. The default setting is 5 days. This setting is configurable by the user and can be set to “0” to process files regardless of how old they are.

c. File Type and File Location Exclusions: You can tell the system not to process files of a specific type, like PNG files that already have great compression or compressed CAB files that may not benefit from deduplication. You can also tell the system not to process a certain folder.
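The eligibility rules described so far (EFS, Extended Attributes, the 32KB minimum, MinimumFileAgeDays and the exclusions) can be sketched as a single "in-policy" check. This is only my illustration in Python, not the filter driver's actual logic: `FileInfo`, `is_in_policy` and their parameter names are hypothetical, and how the real feature measures a file's age is not something this post specifies.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath

MIN_FILE_SIZE = 32 * 1024  # files smaller than 32KB are not processed


@dataclass
class FileInfo:
    """Hypothetical record describing one file on the volume."""
    path: str
    size_bytes: int
    age_days: float
    encrypted: bool = False                 # EFS-encrypted
    has_extended_attributes: bool = False


def is_in_policy(f, minimum_file_age_days=5,
                 excluded_extensions=(), excluded_folders=()):
    """Return True if the file would be a candidate for deduplication."""
    if f.encrypted or f.has_extended_attributes:
        return False
    if f.size_bytes < MIN_FILE_SIZE:
        return False
    if f.age_days < minimum_file_age_days:  # 0 means "process regardless of age"
        return False
    path = PureWindowsPath(f.path)          # compares case-insensitively
    if path.suffix.lower().lstrip(".") in {e.lower() for e in excluded_extensions}:
        return False
    if any(PureWindowsPath(folder) == parent
           for folder in excluded_folders for parent in path.parents):
        return False
    return True


report = FileInfo(r"E:\Shares\Docs\report.docx", size_bytes=200_000, age_days=2)
print(is_in_policy(report))                           # too young for the default of 5 days
print(is_in_policy(report, minimum_file_age_days=0))  # age check effectively disabled
```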

3) Portability: A volume that is under deduplication control is an atomic unit. You can back up the volume and restore it to another server. You can rip it out of one Windows Server 2012 machine and move it to another. Everything that is required to access your data is located on the drive. All of the deduplication settings are maintained on the volume and will be picked up by the deduplication filter when the volume is mounted. The only things not retained on the volume are the schedule settings that are part of the task-scheduler engine. If you move the volume to a server that is not running the Data Deduplication feature, you will only be able to access the files that have not been deduplicated.

4) Focused on using low resources: The feature was built to automatically yield system resources to the primary server’s workload and back off until resources are available again. Most people agree that their servers have a job to do and the storage is just facilitating their data requirements.

a. The chunk store’s hash index is designed to use low resources and reduce the read/write disk IOPS so that it can scale to large datasets and deliver high insert/lookup performance. The index footprint is extremely low at about 6 bytes of RAM per chunk and it uses temporary partitioning to support very high scale.

c. Deduplication jobs will verify that there is enough memory to do the work and, if not, they will stop and try again at the next scheduled interval.

d. Administrators can schedule and run any of the deduplication jobs during off-peak hours or during idle time.

5) Sub-file chunking: Deduplication segments files into variable-size chunks (32-128 KB) using a new algorithm developed in conjunction with Microsoft Research. The chunking module splits a file into a sequence of chunks in a content-dependent manner. The system uses a sliding window hash based on the Rabin fingerprint (http://en.wikipedia.org/wiki/Rabin_fingerprint) on the data stream to identify chunk boundaries. The chunks have an average size of 64KB and they are compressed and placed into a chunk store located in a hidden folder at the root of the volume called the System Volume Information, or “SVI folder”. The normal file is replaced by a small reparse point (http://en.wikipedia.org/wiki/NTFS_reparse_point), which has a pointer to a map of all the data streams and chunks required to “rehydrate” the file and serve it up when it is requested.

Imagine that you have a file that looks something like this to NTFS:

https://msdnshared.blob.core.windows.net/media/TNBlogsFS/prod.evol.blogs.technet.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/00/47/85/metablogapi/6170.clip_image009_03F23817.jpg

And you also have another file that has some of the same chunks:

https://msdnshared.blob.core.windows.net/media/TNBlogsFS/prod.evol.blogs.technet.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/00/47/85/metablogapi/3146.clip_image011_636AF864.jpg

After being processed, the files are now reparse points with metadata and links that point to where the file data is located in the chunk-store.

https://msdnshared.blob.core.windows.net/media/TNBlogsFS/prod.evol.blogs.technet.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/00/47/85/metablogapi/2548.clip_image013_4E0D42FC.png
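If you like to see ideas in code, here is a toy Python sketch of the picture above: content-defined chunking, a chunk store keyed by hash, and a per-file map used to rehydrate the data. Please read it as an illustration under loud assumptions, not as the shipping algorithm. It swaps in a simple polynomial rolling hash instead of a real Rabin fingerprint, it scales the 32-128 KB chunk range down to 32-128 bytes so the demo runs instantly, and `ChunkStore`, `chunk_boundaries`, SHA-256 and zlib are all my own choices for the example.

```python
import hashlib
import random
import zlib

WINDOW = 16                            # bytes in the sliding window (illustrative)
BASE = 257
MOD = (1 << 31) - 1
POW_OUT = pow(BASE, WINDOW - 1, MOD)   # weight of the byte leaving the window


def chunk_boundaries(data, min_size=32, divisor=64, max_size=128):
    """Yield (start, end) offsets of content-defined chunks in data."""
    start, h = 0, 0
    for i, byte in enumerate(data):
        pos = i - start                          # offset inside the current chunk
        if pos >= WINDOW:                        # window full: drop the oldest byte
            h = (h - data[i - WINDOW] * POW_OUT) % MOD
        h = (h * BASE + byte) % MOD
        size = pos + 1
        # Cut where the window hash matches a pattern, within the size limits.
        if (size >= min_size and h % divisor == divisor - 1) or size >= max_size:
            yield start, i + 1
            start, h = i + 1, 0
    if start < len(data):
        yield start, len(data)                   # trailing partial chunk


class ChunkStore:
    """Toy chunk store: each unique chunk is compressed and kept once."""

    def __init__(self):
        self.chunks = {}                         # SHA-256 digest -> compressed bytes

    def add_file(self, data):
        """Store the file's chunks and return its map (ordered list of digests)."""
        file_map = []
        for s, e in chunk_boundaries(data):
            chunk = data[s:e]
            digest = hashlib.sha256(chunk).digest()
            if digest not in self.chunks:        # only new chunks consume space
                self.chunks[digest] = zlib.compress(chunk)
            file_map.append(digest)
        return file_map

    def rehydrate(self, file_map):
        """Rebuild the original bytes from a file map."""
        return b"".join(zlib.decompress(self.chunks[d]) for d in file_map)


# Two files that share most of their content but start differently.
rng = random.Random(0)
shared = bytes(rng.getrandbits(8) for _ in range(4000))
file_a = b"header A" + shared
file_b = b"a different, longer header B" + shared + b"tail"

store = ChunkStore()
map_a = store.add_file(file_a)
map_b = store.add_file(file_b)
shared_chunks = set(map_a) & set(map_b)
print(f"chunks referenced: {len(map_a) + len(map_b)}, chunks stored: {len(store.chunks)}")
```

Because boundaries depend on the content inside the window rather than on fixed offsets, the two files fall back into step after their different headers and end up pointing at the same stored chunks.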

6) BranchCache™: Another benefit for Windows is that the sub-file chunking and indexing engine is shared with BranchCache (http://www.microsoft.com/en-us/download/details.aspx?id=29247). When a Windows Server at the home office is running deduplication, the data chunks are already indexed and are ready to be quickly sent over the WAN if needed. This saves a ton of WAN traffic to a branch office.

What about the data access impact?

Deduplication creates fragmentation for the files that are on your disk as chunks may end up being spread apart and this causes increases in seek time as the disk heads must move around more to gather all the required data. As each file is processed, the filter driver works to keep the sequence of unique chunks together, preserving on-disk locality, so it isn’t a completely random distribution. Deduplication also has a cache to avoid going to disk for repeat chunks. The file-system has another layer of caching that is leveraged for file access. If multiple users are accessing similar files at the same time, the access pattern will enable deduplication to speed things up for all of the users.

- There are no noticeable differences for opening an Office document. Users will never know that the underlying volume is running deduplication.

- When copying a single large file, we see end-to-end copy times that can be 1.5 times what it takes on a non-deduplicated volume.

- When copying multiple large files at the same time, we have seen gains due to caching that can cause the copy time to be faster by up to 30%.

- Under our file-server load simulator (the File Server Capacity Tool, http://www.microsoft.com/en-us/download/details.aspx?id=27284) set to simulate 5000 users simultaneously accessing the system, we only see about a 10% reduction in the number of users that can be supported over SMB 3.0 (http://blogs.technet.com/b/josebda/archive/2012/04/24/updated-links-on-windows-server-2012-file-server-and-smb-3-0.aspx).

- Data can be optimized at 20-35 MB/Sec within a single job, which comes out to about 100GB/hour for a single 2TB volume using a single CPU core and 1GB of free RAM. Multiple volumes can be processed in parallel if additional CPU, memory and disk resources are available.
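The throughput numbers in that last bullet are easy to sanity-check. The short Python sketch below converts the 20-35 MB/sec range to GB/hour and estimates a first full pass over a 2TB volume. It assumes the whole volume is full of in-policy data and that the rate holds steady, which a busy server will not guarantee; the function names are mine.

```python
def gb_per_hour(mb_per_sec):
    """Convert a sustained MB/sec rate to GB/hour (1 GB = 1024 MB)."""
    return mb_per_sec * 3600 / 1024


def hours_to_optimize(volume_tb, mb_per_sec):
    """Hours for one job to process a full volume at the given rate."""
    return volume_tb * 1024 / gb_per_hour(mb_per_sec)


for rate in (20, 35):
    print(f"{rate} MB/sec -> {gb_per_hour(rate):.0f} GB/hour, "
          f"about {hours_to_optimize(2, rate):.0f} hours for a full 2TB volume")
```

The quoted "about 100GB/hour" sits in the middle of that range, so budget something like a day for the initial optimization of a full 2TB volume with a single job.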

Reliability and Risk Mitigations

Even with RAID and redundancy implemented in your system, data corruption risks exist due to various disk anomalies, controller errors, firmware bugs or even environmental factors, like radiation or disk vibrations. Deduplication raises the impact of a single chunk corruption since a popular chunk can be referenced by a large number of files. Imagine a chunk that is referenced by 1000 files is lost due to a sector error; you would instantly suffer a 1000 file loss.

- Backup Support: We have support for fully-optimized backup using the in-box Windows Server Backup tool and we have several major vendors working on adding support for optimized backup and un-optimized backup. We have a restore API (http://msdn.microsoft.com/en-us/library/windows/desktop/hh449202(v=vs.85).aspx) to enable backup applications to pull files out of an optimized backup.

- Reporting and Detection: Any time the deduplication filter notices a corruption it logs it in the event log, so it can be scrubbed. Checksum validation is done on all data and metadata when it is read and written. Deduplication will recognize when data that is being accessed has been corrupted, reducing silent corruptions.

- Redundancy: Extra copies of critical metadata are created automatically. Very popular data chunks receive entire duplicate copies whenever they are referenced 100 times. We call this area “the hotspot”, which is a collection of the most popular chunks.

- Repair: A weekly scrubbing job inspects the event log for logged corruptions and fixes the data chunks from alternate copies if they exist. There is also an optional deep scrub job available that will walk through the entire data set, looking for corruptions and trying to fix them. When using a Storage Spaces disk pool that is mirrored, deduplication will reach over to the other side of the mirror and grab the good version. Otherwise, the data will have to be recovered from a backup. Deduplication will continually scan incoming chunks it encounters looking for the ones that can be used to fix a corruption.

It slices, it dices, and it cleans your floors!

Well, the Data Deduplication feature doesn’t do everything in this version. It is only available in certain Windows Server 2012 editions and has some limitations. Deduplication was built for NTFS data volumes and it does not support boot or system drives and cannot be used with Cluster Shared Volumes (CSV). We don’t support deduplicating live VMs or running SQL databases. See http://technet.microsoft.com/en-us/library/hh831700#BKMK_Step1 on TechNet for details.

Try out the Deduplication Data Evaluation Tool

To aid in the evaluation of datasets we created a portable evaluation tool. When the feature is installed, DDPEval.exe is installed to the \Windows\System32\ directory. This tool can be copied and run on Windows 7 or later systems to determine the expected savings that you would get if deduplication were enabled on a particular volume. DDPEval.exe supports local drives and also mapped or unmapped remote shares. You can run it against a remote share on your Windows Storage Server (http://blogs.technet.com/storageserver), or an EMC / NetApp NAS and compare the savings.

Summary:

I think that this new deduplication feature in Windows Server 2012 will be very popular. It is the kind of technology that people need and I can’t wait to see it in production deployments. I would love to see your reports at the bottom of this blog of how much hard disk space and money you saved. Just copy the output of this PowerShell command:

PS> Get-DedupVolume

- 30-90%+ savings can be achieved with deduplication on most types of data. I have a 200GB drive that I keep throwing data at and now it has 1.7TB of data on it. It is easy to forget that it is a 200GB drive.

- Deduplication is easy to install and the default settings won’t let you shoot yourself in the foot.

- Deduplication works hard to detect, report and repair disk corruptions.

- You can experience faster file download times and reduced bandwidth consumption over a WAN through integration with BranchCache.

- Try the evaluation tool to see how much space you would save if you upgrade to Windows Server 2012!

Links:

Online Help: http://technet.microsoft.com/en-us/library/hh831602.aspx

PowerShell Cmdlets: http://technet.microsoft.com/en-us/library/hh848450.aspx