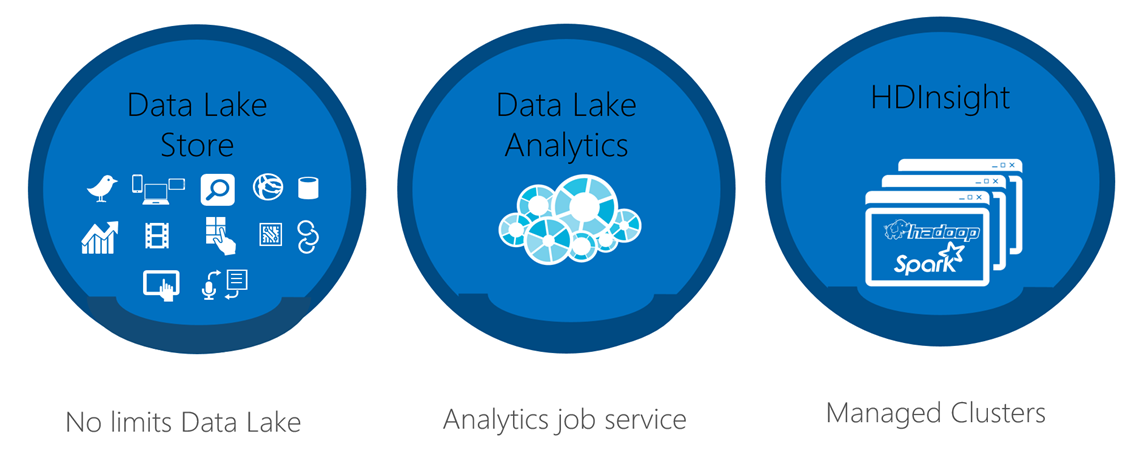

HDInsight

Reliable with an industry leading SLA

Enterprise-grade security and monitoring

Productive platform for developers and scientists

Cost effective cloud scale

Integration with leading ISV applications

Easy for administrators to manage

Resources & Hands-on Labs for teaching

https://github.com/MSFTImagine/computerscience/tree/master/Workshop/7.%20HDInsight

Cluster Types available on Azure

Hadoop

Governed by the Apache Software Foundation

Core services: MapReduce, HDFS and YARN

Other functions across: data lifecycle and governance, data workflow, security, tools, data access and operations

MapReduce

Programming framework for analysing datasets stored on HDFS

Typically MapReduce + HDFS run on the same set of nodes

Distributes computation locally to where the data is

Scales linearly as you add nodes

Composed of user-supplied Map and Reduce functions:

Map(): subdivide and conquer

Reduce(): combine and reduce cardinality
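
To make the Map/Reduce split concrete, here is a minimal single-process sketch of a word count. It is not Hadoop code: `map_words`, `reduce_counts` and `run_mapreduce` are hypothetical names chosen for illustration, and the in-memory grouping step stands in for the shuffle that a real cluster performs across nodes.

```python
from collections import defaultdict

def map_words(line):
    """Map(): subdivide one input record into (word, 1) pairs."""
    for word in line.lower().split():
        yield word, 1

def reduce_counts(word, counts):
    """Reduce(): combine all values for one key into a single total."""
    return word, sum(counts)

def run_mapreduce(records, mapper, reducer):
    # Shuffle step: group every mapped value under its key.
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    # Reduce step: one reducer call per distinct key.
    return dict(reducer(key, values) for key, values in groups.items())

lines = ["big data on azure", "big clusters process big data"]
word_counts = run_mapreduce(lines, map_words, reduce_counts)
print(word_counts)
# {'big': 3, 'data': 2, 'on': 1, 'azure': 1, 'clusters': 1, 'process': 1}
```

On a cluster, each mapper would run on the node that already holds its block of input, which is what "distributes computation locally to where the data is" refers to.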

Hive

SQL-like queries on data in HDFS

HiveQL = SQL-like language

Hive structures include well-understood database concepts such as tables, rows, columns, partitions

Compiled into MapReduce jobs that are executed on Hadoop

Supports custom serializer/deserializers for complex or irregularly structured data

ODBC drivers to integrate with Power BI, Tableau, Qlik, etc.

HBase

NoSQL database on data in HDFS

Random access and strong consistency for large amounts of unstructured and semi-structured data in a schemaless database organized by column families

Can be queried using Hive

HDInsight implementation: automatic sharding of tables, strong consistency for reads and writes, and automatic failover
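
The "schemaless, organized by column families" idea can be sketched with a toy in-memory table. This is an illustration of the data model only, not the HBase client API: the class `TinyColumnFamilyTable` and its `put`/`get` methods are hypothetical, and the sketch ignores cell versioning, sharding and persistence.

```python
from collections import defaultdict

class TinyColumnFamilyTable:
    """Toy model: column families are fixed up front, columns are not."""

    def __init__(self, families):
        self.families = set(families)
        # row_key -> {(family, qualifier): value}
        self.rows = defaultdict(dict)

    def put(self, row_key, family, qualifier, value):
        # Only the family must be declared; any qualifier is accepted.
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        self.rows[row_key][(family, qualifier)] = value

    def get(self, row_key, family=None):
        # Random access by row key, optionally restricted to one family.
        cells = self.rows.get(row_key, {})
        return {f"{f}:{q}": v for (f, q), v in cells.items()
                if family in (None, f)}

table = TinyColumnFamilyTable(["profile", "metrics"])
table.put("user#001", "profile", "name", "Ada")
table.put("user#001", "metrics", "logins", 42)
table.put("user#002", "profile", "name", "Lin")
table.put("user#002", "profile", "city", "Oslo")  # column absent on user#001

row_one = table.get("user#001")
row_two_profile = table.get("user#002", family="profile")
print(row_one)          # {'profile:name': 'Ada', 'metrics:logins': 42}
print(row_two_profile)  # {'profile:name': 'Lin', 'profile:city': 'Oslo'}
```

Rows in the same table can carry different columns, which is why the model suits semi-structured data.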

Storm

Stream analytics for near-real-time processing

Consumes millions of real-time events from a scalable event broker: Apache Kafka, Azure Event Hubs

Guarantees processing of data

Output to persistent stores, dashboards or devices

HDInsight implementation: integrates with Event Hubs, Azure Virtual Network, SQL Database, Blob storage, and DocumentDB
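
The "guarantees processing of data" bullet refers to replaying events that were not acknowledged. The sketch below shows that ack-and-replay idea in plain Python under simplifying assumptions: it is not the Storm API, `process_with_replay` and `flaky_handler` are hypothetical names, and a failure is signalled by an exception rather than by a timeout.

```python
from collections import deque

def process_with_replay(events, handler, max_attempts=3):
    """Process each event, replaying it if the handler fails."""
    pending = deque((event, 1) for event in events)
    acked, failed = [], []
    while pending:
        event, attempt = pending.popleft()
        try:
            handler(event)
            acked.append(event)          # handler succeeded: acknowledge
        except Exception:
            if attempt < max_attempts:
                pending.append((event, attempt + 1))  # queue for replay
            else:
                failed.append(event)     # give up after max_attempts
    return acked, failed

already_failed = set()

def flaky_handler(event):
    # Simulate a transient error: event "b" fails on its first attempt only.
    if event == "b" and event not in already_failed:
        already_failed.add(event)
        raise RuntimeError("transient failure")

acked, failed = process_with_replay(["a", "b", "c"], flaky_handler)
print(acked, failed)  # ['a', 'c', 'b'] []
```

Because a replayed event may be handled more than once, handlers in this style of system are usually written to be idempotent.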

Kafka

High throughput and low latency for real-time data

Stream millions of events per second

Enterprise-grade management and control

Resources & Hands-on Labs for courses

https://github.com/MSFTImagine/computerscience/tree/master/Workshop/6.%20HPC%20and%20Containers

https://github.com/Microsoft/TechnicalCommunityContent/tree/master/Big%20Data%20and%20Analytics

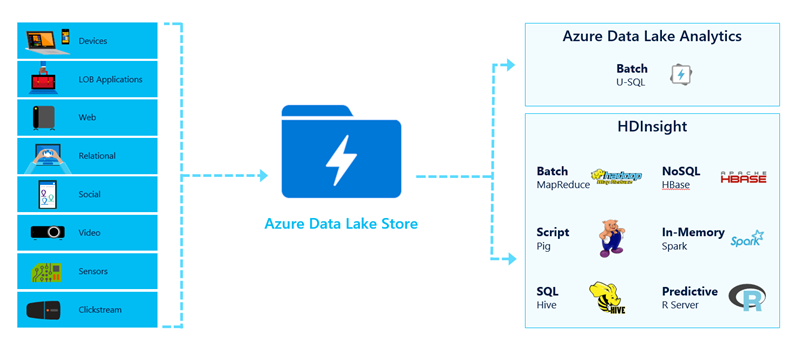

Azure Data Lake

Petabyte-size files and trillions of objects

Scalable throughput for massively parallel analytics

HDFS for the cloud

Always encrypted, role-based security & auditing

Enterprise-grade support

Data Ingress & Egress: To & From Azure Data Lake

Data can be ingested into Azure Data Lake Store from a variety of sources

Data can be exported from Azure Data Lake Store into numerous targets/sinks

Resources and Hands-on Labs

https://github.com/Microsoft/TechnicalCommunityContent/tree/master/Big%20Data%20and%20Analytics

https://azure.microsoft.com/en-us/solutions/data-lake/

Azure Data Lake Analytics

Built on Apache YARN

Scales dynamically with the turn of a dial

Pay by the query

Supports Azure AD for access control, roles, and integration with on-premises identity systems

Processes data across Azure

Built with U-SQL to unify the benefits of SQL with the power of C#

U-SQL: a simple and powerful language that’s familiar and easily extensible

Unifies the declarative nature of SQL with the expressive power of C#

Leverage existing libraries in .NET languages, R and Python

Massively parallelize code on diverse workloads (ETL, ML, image tagging, facial detection)

Resources

https://azure.microsoft.com/en-us/services/data-lake-analytics/

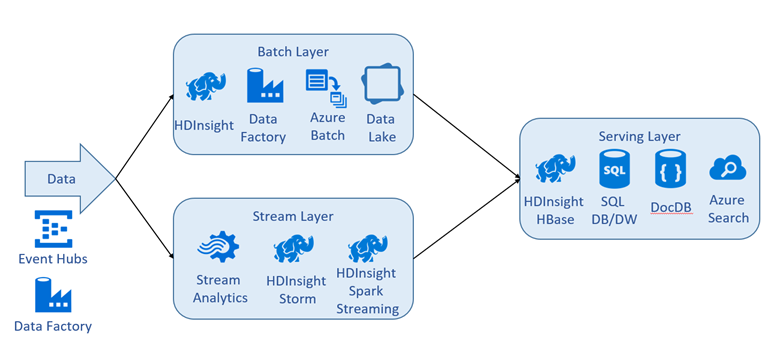

Architecture

Nathan Marz came up with the term Lambda Architecture (LA) for a generic, scalable and fault-tolerant data processing architecture, based on his experience working on distributed data processing systems at BackType and Twitter.

The LA aims to satisfy the needs for a robust system that is fault-tolerant, both against hardware failures and human mistakes, being able to serve a wide range of workloads and use cases, and in which low-latency reads and updates are required. The resulting system should be linearly scalable, and it should scale out rather than up.

Here’s what it looks like, from a high-level perspective:

http://lambda-architecture.net/
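
The Lambda Architecture is commonly described as three layers: a batch layer that recomputes views from an immutable master dataset, a speed layer that covers recent events, and a serving layer that merges both at query time. The following is a minimal in-memory sketch of that split for page-view counts. The class `PageViewLambda` and its method names are hypothetical, and the batch run here is instantaneous, whereas a real batch layer runs for a long time while the speed layer keeps absorbing new events.

```python
from collections import Counter

class PageViewLambda:
    def __init__(self):
        self.master_dataset = []        # append-only log of raw events
        self.batch_view = Counter()     # recomputed from scratch by the batch layer
        self.realtime_view = Counter()  # incremental view kept by the speed layer

    def ingest(self, page):
        # New data goes to both the master dataset and the speed layer.
        self.master_dataset.append(page)
        self.realtime_view[page] += 1

    def run_batch(self):
        # Batch layer: rebuild the view from all raw data, so a bug in an
        # earlier computation is corrected by the next full recomputation.
        self.batch_view = Counter(self.master_dataset)
        # Events now covered by the batch view leave the speed layer.
        self.realtime_view.clear()

    def query(self, page):
        # Serving layer: merge the batch and real-time views.
        return self.batch_view[page] + self.realtime_view[page]

system = PageViewLambda()
for page in ["home", "about", "home"]:
    system.ingest(page)
before_batch = system.query("home")   # answered by the speed layer alone
system.run_batch()
system.ingest("home")
after_batch = system.query("home")    # batch view plus one recent event
print(before_batch, after_batch)      # 2 3
```

Keeping the raw events immutable is what gives tolerance to human mistakes: any derived view can be thrown away and rebuilt.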

Azure Data Architecture

Resources

https://channel9.msdn.com/Search?term=Big%20Data#pubDate=year&ch9Search&lang-en=en

Microsoft
