Deploy your Azure Machine Learning prompt flow on virtually any platform

Available in Azure Machine Learning Studio, Azure AI Studio and locally on your development laptop, prompt flow is a development tool designed to streamline the entire development cycle of AI applications powered by LLMs (Large Language Models). Prompt flow makes the prompts stand front and center instead of obfuscating them or burying them deep in abstraction layers as other tools do. This approach allows developers not only to build orchestration but also evaluate and iterate over their prompts the same way they do over code in the traditional software development cycle.

After development, prompt flow allows developers to conveniently deploy their application to what Azure Machine Learning refers to as an online endpoint. Such an endpoint loads the prompt flow behind a REST API (Application Programming Interface) and executes it to respond to inference requests. In most cases, this is the easiest way to deploy the solution to production because Microsoft Azure is then responsible for making it highly available and secure. However, in some cases, you might want to take on the deployment of the prompt flow yourself. Perhaps you want more granular control than a managed service inherently provides, perhaps you already have a Kubernetes-based CI/CD (continuous integration and continuous delivery) pipeline to which you want to add your prompt flow, or perhaps you are required to execute some nodes of your prompt flow on premises for regulatory reasons.

Fortunately, prompt flow allows you to build your flow and package it as a Docker container image that you can push to any container registry and subsequently pull to run on any container-enabled platform. Let’s imagine that we are at the tail end of a sprint: we have finalized the next iteration of the prompt flow, and we wish to self-host the deployment. This blog post demonstrates the steps to get there.

Our starting point is an HR (Human Resources) benefits chatbot. The idea was to create a chat-based assistant that knows everything about the benefits offered to our employees and can answer questions quickly and accurately. To that end, we have taken the benefits brochure from Microsoft, chunked it and ingested it in Azure AI Search to allow for full text search, vector search and hybrid search. We use a retrieval augmented generation (RAG) approach: the user’s question is vectorized, and that vector is used to retrieve the most semantically relevant chunks of documents from Azure AI Search. Both the user’s question and the chunks are then sent to a large language model, in our case GPT-35-turbo, which generates the answer returned to the user.

If you would like to deploy this solution in your environment, you will find the code in this repository rom212/benefitspromptflow (github.com). Note that you would have to index your own benefits brochure in an Azure AI Search index.

I like to use prompt flow locally on my development machine with Visual Studio Code and the prompt flow extension that can be found here: Prompt flow for VS Code - Visual Studio Marketplace. Although the directed acyclic graph (DAG) that represents your flow is a YAML file, the Visual Studio Code extension gives you a visual representation of the DAG, making the orchestration of the various steps and the overall flow clear.

The prompt flow interface in Visual Studio Code and the DAG

Above, we can see that the execution starts with an input, which is the user’s initial question. This question is vectorized, then both that vector and the initial question as text are sent to a Python node that executes the hybrid search and retrieves the most relevant chunk or chunks from Azure AI Search. We then have a prompt node that prepares the input for the LLM by stuffing the chunk(s) alongside the initial question. Finally, the LLM receives this enriched prompt and returns an answer as the final output of the prompt flow. Note the prompt flow extension showing on the left-hand side. Also note that prompt flow is a rapidly evolving tool, and if you are reading this blog post months after the publishing date, you will likely get a different experience.
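To make the order of the nodes concrete, here is a minimal, self-contained sketch of the same four steps in plain Python. It is not the code from the repository: the chunk list, the bag-of-words `embed` function, the scoring in `retrieve` and the `llm` callable are all hypothetical stand-ins for the embedding model, Azure AI Search and the LLM node.

```python
import math
from collections import Counter
from typing import Callable

# Hypothetical sample chunks standing in for the indexed benefits brochure.
CHUNKS = [
    "Employees receive 15 days of paid vacation per year.",
    "The dental plan covers two cleanings per year.",
    "The gym membership is reimbursed up to a monthly limit.",
]


def embed(text: str) -> Counter:
    """Stand-in for the embedding node: a bag-of-words count vector."""
    cleaned = text.lower().replace("?", "").replace(".", "")
    return Counter(cleaned.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, vector: Counter, top_k: int = 1) -> list[str]:
    """Stand-in for the hybrid search node: vector score plus keyword overlap."""
    words = set(embed(question))

    def score(chunk: str) -> float:
        chunk_vector = embed(chunk)
        keyword = len(words & set(chunk_vector)) / len(words) if words else 0.0
        return cosine(vector, chunk_vector) + keyword

    return sorted(CHUNKS, key=score, reverse=True)[:top_k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Stand-in for the prompt node: stuff the chunks next to the question."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def run_flow(question: str, llm: Callable[[str], str]) -> str:
    """Chain the nodes in the same order as the DAG."""
    vector = embed(question)               # 1. vectorize the question
    chunks = retrieve(question, vector)    # 2. hybrid search
    prompt = build_prompt(question, chunks)  # 3. prepare the enriched prompt
    return llm(prompt)                     # 4. ask the LLM
```

Passing `lambda prompt: prompt` as `llm` returns the enriched prompt itself, which is a convenient way to inspect what the model would receive.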

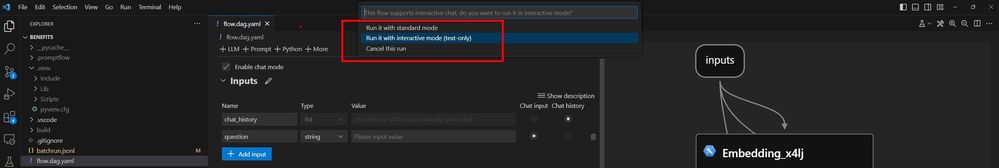

We can run the flow locally from within the Visual Studio Code experience. This lets us establish a baseline of how it is supposed to behave. We can click the play button or hit Shift+F5 to open the dialog box and run the prompt flow in interactive mode.

Running the prompt flow in interactive mode

Above, we can see that the user can ask a question and get a definite answer from the chatbot. We now have a functioning backend in dev that can power an HR copilot type of application and allow employees to ask specific questions about their benefits! Our goal for this blog post, though, is to see how we can deploy it on a production-grade computing platform, which could be virtually anywhere, even on premises. To get there, we are going to use the build functionality in the prompt flow extension for Microsoft Visual Studio Code and see how it generates a Dockerfile and the artifacts we need to build and push a container image.

With the visual editor enabled, you can see five menu options on the top navigation bar. Between the “debug” and “sort” buttons is the “build” button, which gives us the option to build our flow as a Docker image.

This prompts us to select an output directory for the artifacts, which we decide to call “build.”

Selecting the output directory

Finally, we can ask the prompt flow extension to go ahead and build by clicking the “Create Dockerfile” button. Note that at the time of writing, this did not work with the 1.6.0 version of the Visual Studio Code extension but worked well with the 1.5.0 version.

Clicking the Create Dockerfile button

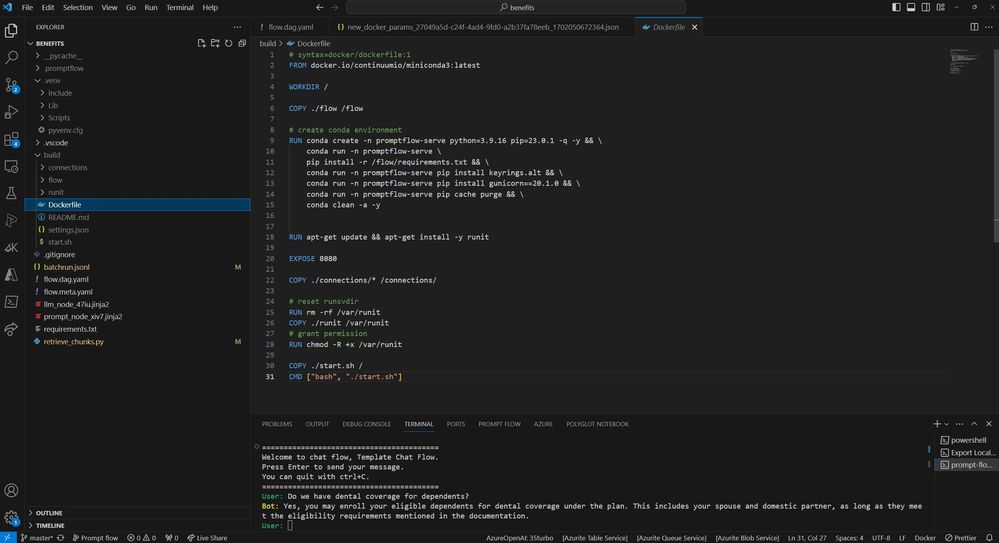

Exploring the build folder reveals the artifacts and in particular the Dockerfile which shows how the image will be built. It will build on a miniconda3 base image and instantiate a gunicorn server to serve the inference requests on port 8080.

The Dockerfile

It is worth noting that the API keys we used during development were not baked into the build. Instead, the connections to Azure AI Search and Azure OpenAI now expect to find their keys in environment variables. This allows us to keep our secrets safe and inject them dynamically at runtime.

API keys as environment variables
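Because a missing key only surfaces when the first request fails, it can help to check the environment before starting the container. The sketch below does that in Python. The two variable names are hypothetical: the real names depend on how the connections are named in your flow, so read them from the generated connection files in the build folder.

```python
import os
from typing import Iterable, Mapping

# Hypothetical names; replace them with the variables referenced in your
# generated connection files.
REQUIRED_SECRETS = (
    "AZURE_OPENAI_CONNECTION_API_KEY",
    "AZURE_SEARCH_CONNECTION_API_KEY",
)


def missing_secrets(
    env: Mapping[str, str] = os.environ,
    required: Iterable[str] = REQUIRED_SECRETS,
) -> list[str]:
    """Return the required variable names that are unset or empty in env."""
    return [name for name in required if not env.get(name)]
```

Calling `missing_secrets()` with no arguments inspects the current process environment; an empty list means every expected key is present.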

To build and run this Linux-based image, we are going to move the build folder to a Linux host. In our case, and for simplicity, we will use WSL2 (Windows Subsystem for Linux 2) locally. WSL2 allows us to run a Linux environment on our Windows machine easily, but the following steps would work similarly on any other Linux host that has Docker installed. After navigating to our build folder, we can invoke the docker build command.

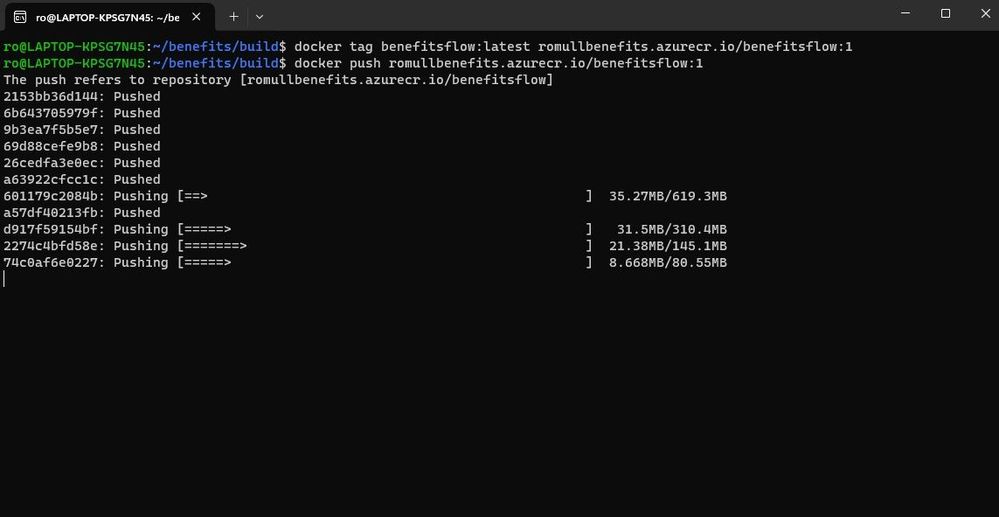

Now we can tag our image and push it to the container registry of our choice such as Docker Hub. In our case, we decided to push it to the Microsoft Azure Container Registry service.
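The build, tag and push steps can be scripted, for example from a CI job. The sketch below assembles the three standard Docker CLI commands as argument lists and runs them in order. The image name and registry host in the usage note are hypothetical, and the script assumes the Docker CLI is installed and already logged in to the target registry.

```python
import subprocess


def image_commands(context_dir: str, image: str, registry: str) -> list[list[str]]:
    """Return the docker build, tag and push commands as argument lists."""
    remote = f"{registry}/{image}"
    return [
        ["docker", "build", "-t", image, context_dir],  # build from the Dockerfile
        ["docker", "tag", image, remote],               # add the registry-qualified name
        ["docker", "push", remote],                     # upload to the registry
    ]


def run_all(commands: list[list[str]]) -> None:
    """Run each command in order and stop at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

For instance, `run_all(image_commands(".", "benefits-flow:1.0", "myregistry.azurecr.io"))`, executed from inside the build folder, would build the image and push it as `myregistry.azurecr.io/benefits-flow:1.0`.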

From that point on, it is container-based deployment as usual. We can deploy our image to a Kubernetes cluster or to any computer running Docker. And because our prompt flow makes use of connections, we must remember to pass the required secrets as environment variables to access Azure AI Search and Azure OpenAI.

Running the docker image
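As a sketch of what the run command can look like, the helper below builds a `docker run` invocation that publishes the container's port 8080 and forwards secrets from the host environment. Passing `-e NAME` without a value tells Docker to copy that variable from the calling environment, which keeps the key out of the command line. The image and variable names in the usage note are hypothetical.

```python
def run_command(image: str, secret_names: list[str], host_port: int = 8080) -> list[str]:
    """Build a docker run command that forwards the named host variables."""
    argv = ["docker", "run", "--rm", "-p", f"{host_port}:8080"]
    for name in secret_names:
        # "-e NAME" with no value copies NAME from the host environment.
        argv += ["-e", name]
    argv.append(image)
    return argv
```

With the hypothetical names used here, `run_command("benefits-flow:1.0", ["AZURE_OPENAI_CONNECTION_API_KEY"])` yields `docker run --rm -p 8080:8080 -e AZURE_OPENAI_CONNECTION_API_KEY benefits-flow:1.0`, which can be handed to `subprocess.run`.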

Our application is running, and the API is ready to take questions as input to the prompt flow. In our case, we even have a little web front end that we can use for testing purposes.
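If you prefer to test without a front end, a few lines of Python are enough to call the API. The sketch below assumes the container listens on localhost port 8080, that the serving app exposes a `/score` route, and that the flow takes a single input named `question`. All three are assumptions to check against your own flow; a chat flow may also expect a chat history input.

```python
import json
import urllib.request


def build_score_request(
    question: str, base_url: str = "http://localhost:8080"
) -> urllib.request.Request:
    """Prepare a JSON POST request for the assumed /score route."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/score",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, base_url: str = "http://localhost:8080") -> dict:
    """Send the question to the running container and return the parsed JSON."""
    request = build_score_request(question, base_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping the request construction separate from the network call makes it easy to inspect or log the payload before anything is sent.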

Testing the deployment

In this blog post, we saw some of the convenient features that prompt flow provides to developers. We also saw that although prompt flow makes it easy to deploy an orchestrated flow to a managed endpoint, it is not strongly opinionated as to where we should actually deploy it. In fact, the build capability allows you to package your application and execute it on virtually any computing platform.

I am very grateful to my esteemed colleague Andrew Ditmer for his peer review.
