Combine Semantic Kernel with Azure Machine Learning prompt flow

Context

With the rapid advent of generative AI and the multiplication of use cases came the inevitable battle of the tools for world domination. In line with its mission to empower every person, Microsoft has built a suite of tools with various levels of abstraction and control so that the applied data scientist, the casual hobbyist, and anyone in between can build generative AI applications. Two of these tools particularly appeal to enterprises and their engineering teams because they allow for rapid development, offer a high level of control, and ultimately lead to performant applications when deployed at scale. These tools are Semantic Kernel and Azure Machine Learning prompt flow. Both have been receiving their fair share of attention, and because their capabilities largely overlap, one may wonder which one is best. This is a question my teammates and I are often asked by colleagues and customers alike. Answering it is a non-trivial exercise, so this post instead aims to show that these tools are not mutually exclusive. You don’t have to choose!

Semantic Kernel is an open-source project that lets you intertwine conventional code with generative AI capabilities underpinned by LLMs (Large Language Models) such as GPT-4 or Hugging Face models. Semantic Kernel offers a broad spectrum of features, but its strength resides in the ability to create skills and dynamically orchestrate their invocation with planners. The skills themselves usually wrap a prompt containing variables. Upon invocation of the kernel with a request, the planners leverage an LLM to devise a “step-by-step” plan to fulfill the request; they then map each step to the most relevant skill they know of, along with the variables to inject. Planners then proceed to execute each step until a satisfactory response is produced. Note that depending on which version of Semantic Kernel you come across, you might see the term “plugin” used instead of “skill”. This disparity is due to an ongoing renaming effort, but for the purposes of this post the two terms can be used interchangeably.

Azure Machine Learning prompt flow is a tool that helps streamline the development of generative AI applications end to end. In prompt flow, we can build applications brick by brick by adding nodes containing native code and nodes powered by an LLM, and by connecting them with one another. The output of one node becomes the input of the downstream nodes. Prompt flow offers a convenient user interface showing a visual representation of the directed acyclic graph (DAG) and many convenient features with respect to collaboration and deployment. Prompt flow particularly shines when it comes to the evaluation phase of the development cycle. Because Microsoft is committed to the responsible AI approach and because one of its tenets is reliability, we do not want to deploy generative AI-based applications that have not been rigorously evaluated. Prompt flow offers a convenient way to run an evaluation flow and calculate metrics. This gives you a measurable sense of how grounded or accurate your prompt flow is before you deploy it. Even better, you can then iterate and continuously improve your application. If you would like to learn more about deployment options in prompt flow, you may find this other post helpful: Deploy your Azure Machine Learning prompt flow on virtually any platform (microsoft.com)

The goal of this blog post is to demonstrate how Semantic Kernel and Azure Machine Learning prompt flow can be paired up so you can get the best of both worlds. We will demonstrate this by authoring Semantic Kernel code directly in the prompt flow experience: we will create a Semantic Kernel skill, test it interactively, and ultimately measure its accuracy.

Preliminary steps

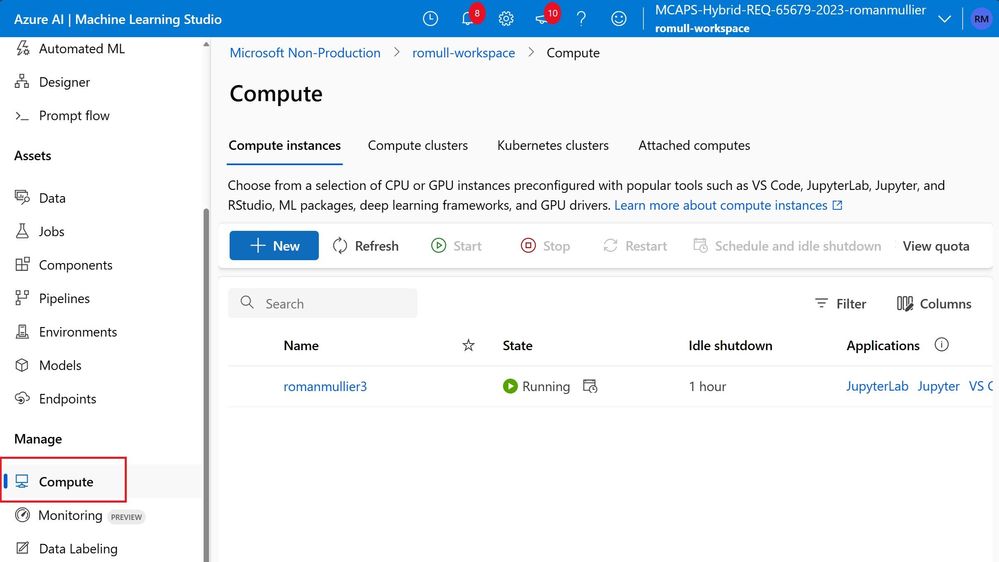

Before we can run our prompt flow, we need the proper environment, and I would like to use Azure Machine Learning Studio to set it up. There, I see that I already have some compute ready, willing, and able to serve.

Even though the default environment on this compute is preloaded with the prompt flow library, the Semantic Kernel library is not part of the image, so we need to create a custom environment. This is an easy enough task in Azure Machine Learning Studio: we just navigate to the “Environments” menu and click “Create”. To ensure that the custom environment works well with prompt flow, it needs to be built upon a specific base image: mcr.microsoft.com/azureml/promptflow/promptflow-runtime-stable. We build a new environment that we call “pfwsk”, an easy-to-pronounce short name for “prompt flow with semantic kernel”.

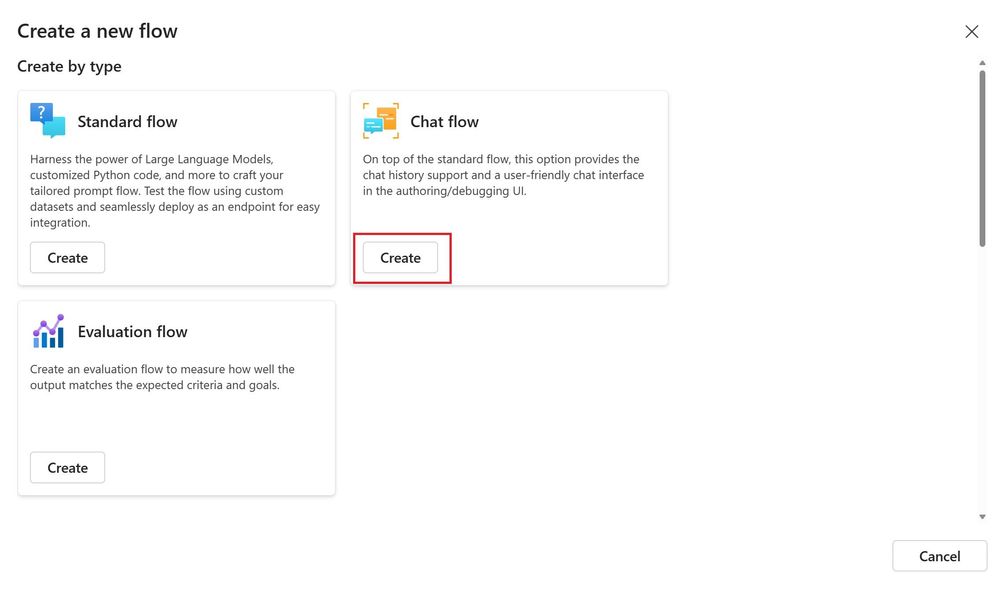

With this out of the way, we can go ahead, navigate to prompt flow, and create a new chat flow.

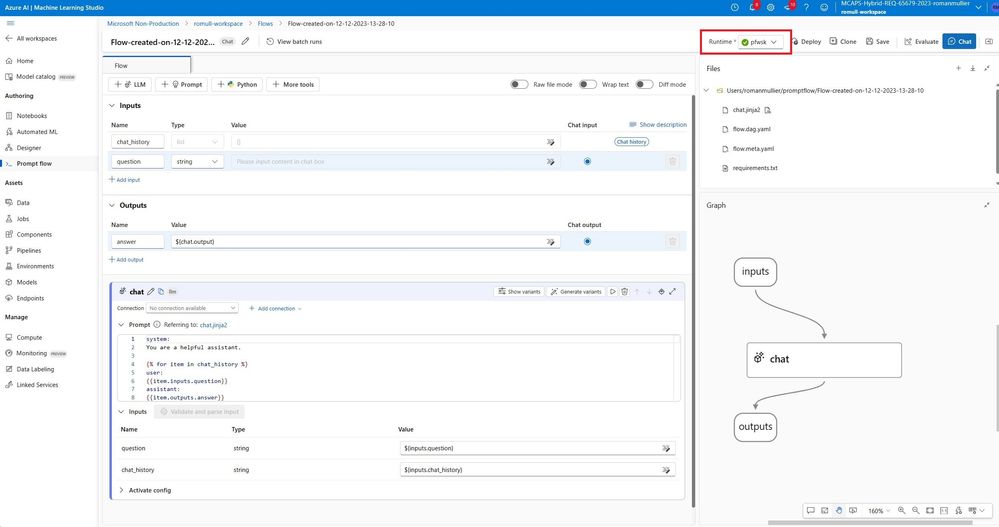

The default canvas of a chat flow consists of an input called “question”, a single LLM node, and an output called “answer”. In our case, we want to write Semantic Kernel Python code containing our custom LLM-powered skill. So later, we will get rid of the chat node and replace it with a Python node that encapsulates our entire logic, including the call to the large language model when our custom skill is invoked. Note that we selected our custom runtime “pfwsk”.

The custom skill

In Semantic Kernel, building a semantic skill consists of creating a prompt file and a config file. Let’s pretend that we want to create a spam classification skill. Given a text message, our skill will determine whether it is spam or not spam. Semantic Kernel expects an “skprompt” text file containing the prompt to be sent to the LLM as well as placeholders for the inputs. We are going to use a few-shot learning approach and author the prompt below in skprompt.txt:

Decide whether a message is spam or not from its content, and tone.

Only use one of the two categories that follow and nothing else:

Categories: spam, not spam

Examples

Message: You've won!

Category: spam

Message: Claim your gift card!

Category: spam

Message: where do we request this for our customers?

Category: not spam

Message: "{{$input}}"

Category:
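To make the role of the {{$input}} placeholder concrete, here is a minimal Python sketch that fills it in with plain string substitution. This is only an illustration: it is not Semantic Kernel's template engine, and the names PROMPT_TEMPLATE and render_prompt are hypothetical.

```python
# Illustration only: plain string substitution standing in for
# Semantic Kernel's own template rendering.
PROMPT_TEMPLATE = """Decide whether a message is spam or not from its content, and tone.
Only use one of the two categories that follow and nothing else:
Categories: spam, not spam

Examples
Message: You've won!
Category: spam
Message: Claim your gift card!
Category: spam
Message: where do we request this for our customers?
Category: not spam

Message: "{{$input}}"
Category:"""


def render_prompt(template: str, message: str) -> str:
    """Replace the {{$input}} placeholder with the message to classify."""
    return template.replace("{{$input}}", message)


rendered = render_prompt(PROMPT_TEMPLATE, "Check out our winter sale")
print(rendered)
```

The rendered text ends with an open "Category:" line, which is what nudges the model to complete it with one of the two labels shown in the examples.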

Semantic Kernel also expects a config.json file which specifies the settings to be used when invoking the LLM. I decided to use the settings below for this use case:

    {
        "schema": 1,
        "type": "completion",
        "description": "Tells you whether a given text is spam",
        "completion": {
            "max_tokens": 256,
            "temperature": 0.0,
            "top_p": 0.0,
            "presence_penalty": 0.0,
            "frequency_penalty": 0.0
        }
    }
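As a quick sanity check before uploading anything, the settings above can be parsed with the Python standard library. The sketch below is an assumption about how one might inspect the file locally; load_completion_settings is a hypothetical helper and not part of Semantic Kernel.

```python
import json

# The same settings as in config.json, inlined so the sketch is self-contained.
CONFIG_TEXT = """
{
  "schema": 1,
  "type": "completion",
  "description": "Tells you whether a given text is spam",
  "completion": {
    "max_tokens": 256,
    "temperature": 0.0,
    "top_p": 0.0,
    "presence_penalty": 0.0,
    "frequency_penalty": 0.0
  }
}
"""


def load_completion_settings(config_text: str) -> dict:
    """Parse the config and return the block of LLM invocation settings."""
    config = json.loads(config_text)
    if config.get("type") != "completion":
        raise ValueError("expected a completion-type config")
    return config["completion"]


settings = load_completion_settings(CONFIG_TEXT)
print(settings["temperature"], settings["max_tokens"])
```

A temperature of 0.0 is a common choice for classification, since we want the answer to be as repeatable as possible rather than creative.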

To allow our application to eventually have multiple skills and even multiple classification skills, we follow the Semantic Kernel best practices with our folder structure. We create a “skills” folder which contains a “ClassificationSkill” folder which contains a “Spam” folder which in turn, contains the two files created earlier. These files are also available in this repository: rom212/semantic_skill_evaluation (github.com)

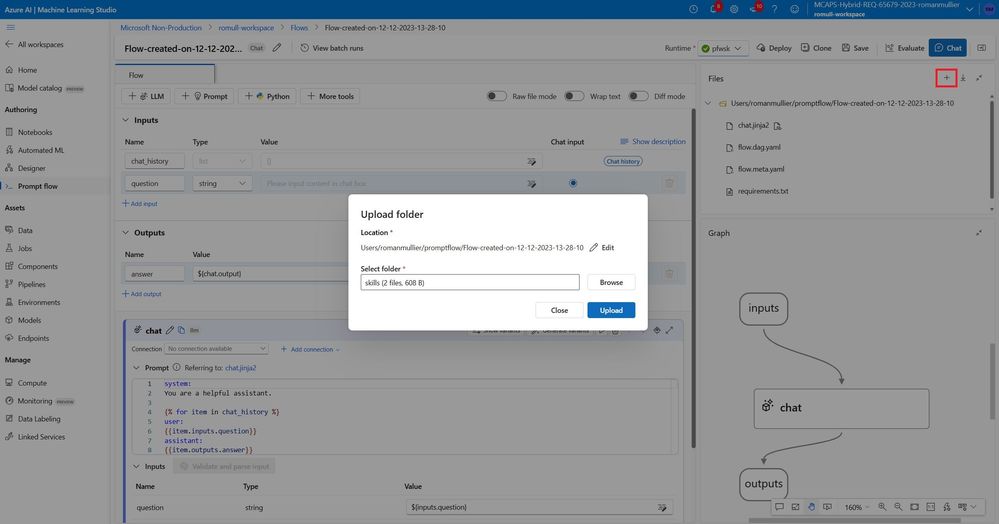
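If you prefer to script the folder layout rather than create it by hand, a sketch along these lines could do it. It writes into a temporary directory with placeholder file contents; create_skill_folder is a hypothetical helper, and only the folder and file names come from the structure described above.

```python
import tempfile
from pathlib import Path


def create_skill_folder(root: Path, prompt_text: str, config_text: str) -> Path:
    """Create skills/ClassificationSkill/Spam under root with its two files."""
    skill_dir = root / "skills" / "ClassificationSkill" / "Spam"
    skill_dir.mkdir(parents=True, exist_ok=True)
    (skill_dir / "skprompt.txt").write_text(prompt_text, encoding="utf-8")
    (skill_dir / "config.json").write_text(config_text, encoding="utf-8")
    return skill_dir


# Placeholder contents; in practice these would be the full prompt and config.
with tempfile.TemporaryDirectory() as tmp:
    create_skill_folder(
        Path(tmp),
        'Message: "{{$input}}"\nCategory:',
        '{"schema": 1, "type": "completion"}',
    )
    created = sorted(
        p.relative_to(tmp).as_posix() for p in Path(tmp).rglob("*") if p.is_file()
    )
    print(created)
```

Adding a second classification skill later would then only require a sibling folder next to "Spam".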

Back in Azure Machine Learning Studio and our prompt flow setup, we can go ahead and upload our skills folder to the project.

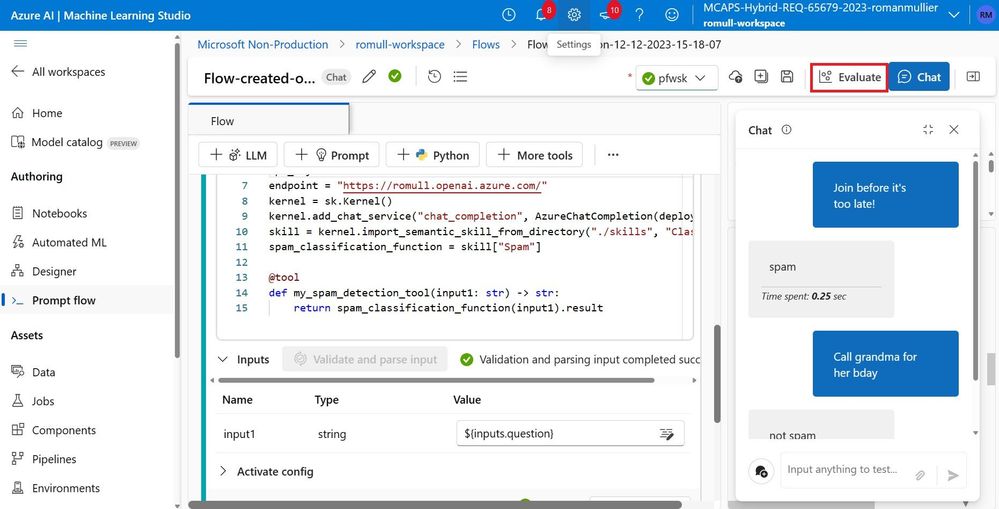

The semantic kernel code and the python node

As explained earlier, let’s replace the default LLM node with a single Python node that encapsulates our semantic skill. Our Python node declares a function with an input and leverages the spam classification semantic skill that we authored earlier. Upon invocation, it makes a call to our GPT-3.5-Turbo deployment in Azure OpenAI. We link the flow inputs and outputs to this Python node. If you wish to recreate this in your subscription, the code is available in the python_node_ydoa.py file at this repository: rom212/semantic_skill_evaluation (github.com). You will have to replace the endpoint and deployment names with your own and inject your API key.
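For orientation, the general shape of such a node might look like the sketch below. This is not the code from python_node_ydoa.py. The Semantic Kernel calls assume a pre-1.0 Python SDK that still uses the "skill" naming (they may differ or be absent in your version), and build_sk_classifier, classify, fake_classifier and the output tidying are all hypothetical. In prompt flow, the node function would additionally be registered with the tool decorator.

```python
from typing import Callable


def build_sk_classifier(endpoint: str, deployment: str, api_key: str) -> Callable[[str], str]:
    """Return a callable that runs the Spam skill.

    Assumption: a pre-1.0 semantic-kernel Python package with "skill" naming.
    """
    # Imported lazily so the rest of the sketch runs without the SDK installed.
    import semantic_kernel as sk
    from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion

    kernel = sk.Kernel()
    kernel.add_chat_service(
        "chat_completion", AzureChatCompletion(deployment, endpoint, api_key)
    )
    skills = kernel.import_semantic_skill_from_directory("./skills", "ClassificationSkill")
    spam_function = skills["Spam"]
    return lambda message: str(spam_function(message))


def classify(question: str, classifier: Callable[[str], str]) -> str:
    """Node logic: run the classifier and tidy the raw completion text."""
    return classifier(question).strip().lower()


def fake_classifier(message: str) -> str:
    """Offline stand-in for the LLM call (hypothetical, keyword-based)."""
    return " Spam\n" if "won" in message.lower() else " Not spam\n"


print(classify("You've won!!!", fake_classifier))
```

Passing the classifier in as a callable keeps the node logic testable without credentials; in the real flow you would pass the result of build_sk_classifier with your own endpoint, deployment name, and API key.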

First tests

Prompt flow makes it easy to test your application. Let’s use the built-in “Chat” feature and invoke our Semantic Kernel skill a couple of times to establish a first baseline. As it turns out, our skill is invoked properly, and the flow responds with the expected outcome for our first tests.

Evaluation

With a fully functioning flow, we are now almost ready to evaluate our semantic skill. The last missing piece is what is commonly referred to as a golden dataset. A golden dataset contains data points and their respective expected labels (e.g. spam or not spam) as established or validated by experts. In short, a golden dataset represents the ground truth that we are going to evaluate our semantic skill against.

The prompt flow evaluation feature accepts the “jsonlines” format, which is a straightforward way to load a dataset, each line being a full json document representing a single data point. For this exercise, we created the batchdata.jsonl file and produced a synthetic dataset consisting of ten data points. The batchdata.jsonl file can be found at rom212/semantic_skill_evaluation (github.com) and its content below:

{"message" : "You've won!!!" , "ground_truth" : "spam" , "chat_history": []}

{"message" : "Hey don't forget our meeting tomorrow" , "ground_truth" : "not spam" , "chat_history": []}

{"message" : "A prompt recovery to you, feel better soon" , "ground_truth" : "not spam" , "chat_history": []}

{"message" : "You qualify for our new credit cards!" , "ground_truth" : "spam" , "chat_history": []}

{"message" : "Congrats, click here to redeem your prize" , "ground_truth" : "spam" , "chat_history": []}

{"message" : "The exec briefing tomorrow will be rescheduled" , "ground_truth" : "not spam" , "chat_history": []}

{"message" : "reminder to fill out your activity report by Friday" , "ground_truth" : "not spam" , "chat_history": []}

{"message" : "F_R_E_E C_R_Y_P_T_O" , "ground_truth" : "spam" , "chat_history": []}

{"message" : "Check out our winter sale" , "ground_truth" : "spam" , "chat_history": []}

{"message" : "Don't forget to pick up the kids at school this afternoon" , "ground_truth" : "not spam" , "chat_history": []}

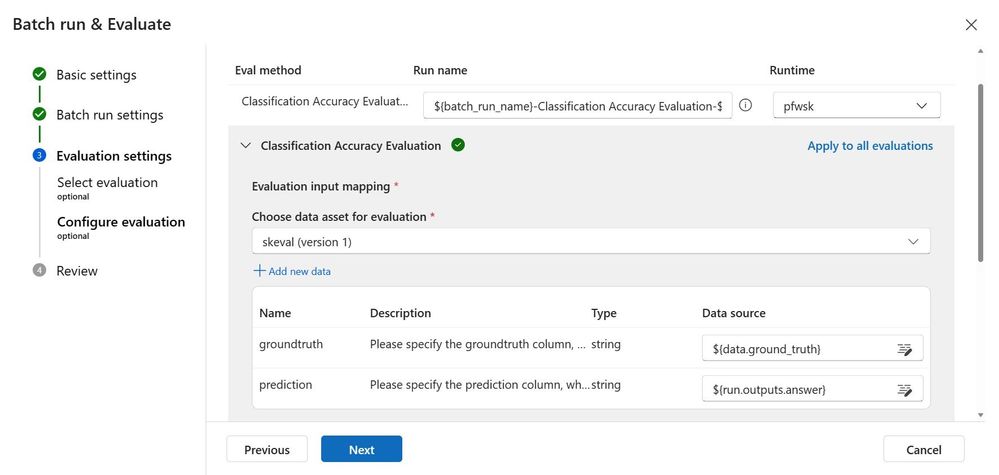
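Before uploading a golden dataset, it can be worth checking locally that every line parses and carries the expected keys. The sketch below does this with the standard library on two of the records above; read_jsonl and REQUIRED_KEYS are hypothetical names, and the check is our own addition rather than something prompt flow requires.

```python
import json

# Two records from batchdata.jsonl, inlined so the sketch is self-contained.
SAMPLE_JSONL = """\
{"message" : "You've won!!!" , "ground_truth" : "spam" , "chat_history": []}
{"message" : "Hey don't forget our meeting tomorrow" , "ground_truth" : "not spam" , "chat_history": []}
"""

REQUIRED_KEYS = {"message", "ground_truth", "chat_history"}


def read_jsonl(text: str) -> list:
    """Parse one JSON document per non-empty line and check its keys."""
    records = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines between records
        record = json.loads(line)
        missing = REQUIRED_KEYS - record.keys()
        if missing:
            raise ValueError(f"line {number} is missing keys: {sorted(missing)}")
        records.append(record)
    return records


records = read_jsonl(SAMPLE_JSONL)
print(len(records), records[0]["ground_truth"])
```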

Armed with this dataset, prompt flow can run inferences on the messages in the dataset, compare the outputs with the ground truth, and calculate an aggregate accuracy. Let’s go ahead and click “Evaluate”!

Conveniently, prompt flow lets us re-map the keys in our golden dataset to the input expected by the flow. In our case, the jsonlines objects use the key “message” whereas our flow is expecting the default “question” input.
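Conceptually, this re-mapping amounts to renaming keys before each record is handed to the flow. The sketch below shows the idea in plain Python; it is not how prompt flow implements the feature, and remap_inputs is a hypothetical helper.

```python
def remap_inputs(record: dict, mapping: dict) -> dict:
    """Build flow inputs from a dataset record.

    mapping has the form {flow_input_name: dataset_key}.
    """
    return {flow_input: record[dataset_key] for flow_input, dataset_key in mapping.items()}


record = {"message": "You've won!!!", "ground_truth": "spam", "chat_history": []}

# The flow expects "question"; the dataset provides "message".
flow_inputs = remap_inputs(record, {"question": "message", "chat_history": "chat_history"})
print(flow_inputs)
```

Note that "ground_truth" is deliberately left out of the flow inputs: it is only needed later, when predictions are compared against it.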

To cover the most common use cases out of the box, prompt flow comes with many built-in evaluation flows. It will come as no surprise that there is a built-in evaluation flow to compute classification accuracy, but note that you could always build your own.

Logically enough, the next step consists of telling prompt flow what comparison to make. We want to compare the ground truth in our golden dataset to the flow output called “answer”.

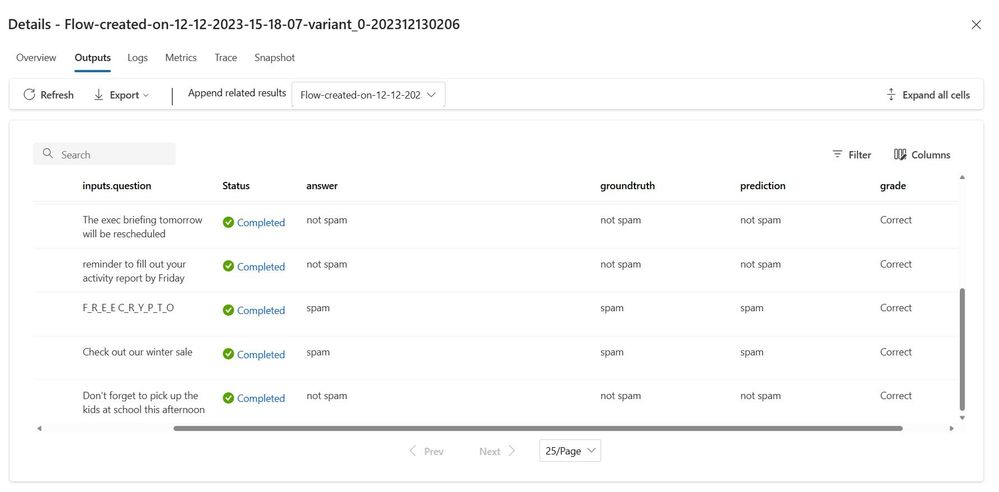

We can then submit our evaluation job! On this compute, it takes about half a minute to run, and the prompt flow UI makes the results easy to assess. At first glance, it seems that our custom semantic skill performed well on our golden dataset. We can observe that all the predictions happen to match the ground truth. It looks like we may have built a decent spam classifier semantic skill with minimal effort!

The metrics tab shows the aggregate metric and confirms that our skill scored a perfect 10/10 on this dataset, for an accuracy of 1.
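The aggregate metric itself is simple: the number of predictions that match the ground truth divided by the number of data points. Here is a minimal sketch of that calculation, assuming an exact string match after trimming whitespace (the built-in evaluation flow's matching rules may differ). The accuracy function is a hypothetical helper, and the predictions list simply mirrors the run described above, in which every prediction matched.

```python
def accuracy(predictions: list, ground_truths: list) -> float:
    """Fraction of predictions that exactly match their ground truth."""
    if len(predictions) != len(ground_truths):
        raise ValueError("predictions and ground truths must have the same length")
    if not ground_truths:
        raise ValueError("cannot compute accuracy on an empty dataset")
    matches = sum(p.strip() == g.strip() for p, g in zip(predictions, ground_truths))
    return matches / len(ground_truths)


# Ground truth labels in the order they appear in batchdata.jsonl.
ground_truths = [
    "spam", "not spam", "not spam", "spam", "spam",
    "not spam", "not spam", "spam", "spam", "not spam",
]

# Mirrors the evaluation run above, where all ten predictions matched.
predictions = list(ground_truths)
print(accuracy(predictions, ground_truths))
```

With ten data points, a single misclassification would bring the metric down to 0.9, which is a reminder that a dataset this small gives only a coarse picture.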

Conclusion

The battle of generative AI tools for world domination is not going to be a winner-takes-all type of battle. New tools will emerge, many will die, many will prosper. Some will have strengths, all will have weaknesses. But AI practitioners need not commit to one unique tool. Often, tools are not mutually exclusive; they can combine with each other to form something greater than the sum of their parts for a particular use case. In our example, we tried to highlight the fact that the specific strengths of Semantic Kernel and Azure Machine Learning prompt flow can compound, allowing us to build a new semantic skill and systematically evaluate it before eventually using it with a planner down the road.

My sincere appreciation goes to my colleague Kit Papandrew for her time on this post as peer reviewer!
