I put a presentation together quite some time ago covering various SQL Server I/O behaviors and relating them to the SQL Server I/O whitepapers I authored. I keep getting requests to post the presentation, and the information is relevant from SQL Server 7.0 through SQL Server 2008 and beyond.

Here are the raw slides and my speaker notes. For completeness, the reference materials are listed in detail on the final slides.

The goal of the presentation was to expose attendees to the many aspects of SQL Server I/O so they would better understand the system requirements and how to troubleshoot common problems.

As you can see from the list of topics, this presentation covers a broad range of SQL Server I/O aspects.

The Write-Ahead Logging (WAL) protocol in SQL Server depends on the ability to secure (harden) log records to stable media. SQL Server accomplishes this by opening the file with the FILE_FLAG_WRITE_THROUGH option in its CreateFile call.

Hardened (stable) media is any media that can survive a power failure. This could be the physical storage device or a sophisticated, battery-backed caching mechanism. As long as the I/O path reports a write as successful only once the data is on such media, SQL Server can uphold that guarantee.

SQL Server uses the WAL protocol design to achieve transaction durability.

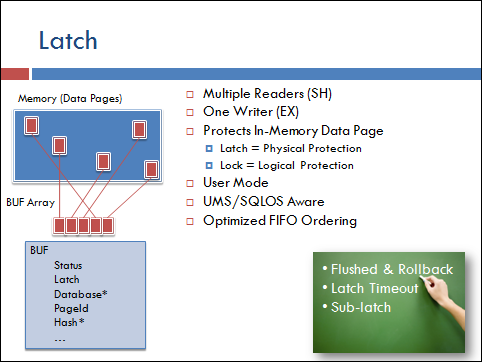

When I whiteboard this slide, I talk about commit and rollback and the impact of locks and latches. For example, a latch is only used to protect the physical integrity of a data page while it is in memory. A lock is more of a virtual concept and can protect data that is no longer, or not yet, in memory.

Take the following as an example: “update tblxxx set col1 = GetDate()” where the table has 1 billion rows.

Here is a simplified process to discuss:

Begin transaction

Fetch Page

Acquire Lock

Acquire Latch

Generate log record

Update page LSN to match that of the log record

Change Data (dirty the page)

Release Latch

Fetch Next Page

Acquire Lock

Commit Transaction Starting

FlushToLSN of Commit

Release locks

Commit Transaction Complete

The entire table won’t fit into memory, so lazy writer will write dirty pages to disk. Before doing so, lazy writer first calls the routine FlushToLSN to flush all log records up to and including the LSN stored in the page header of the dirty page. Then, and only then, does lazy writer issue the write of the data page. If a power outage occurs at this point, the log records can be used to reconstruct the page (roll back the changes).
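To make the ordering rule concrete, here is a minimal Python sketch, not SQL Server code: `Log`, `Page`, `LazyWriter`, and `update_page` are hypothetical names, LSNs are simple counters, and "disk writes" are just recorded in a list. The only thing it demonstrates is that a data page is never written until the log has been flushed through that page's LSN.

```python
from dataclasses import dataclass, field

@dataclass
class Log:
    records: list = field(default_factory=list)  # (lsn, payload) still in memory
    flushed_lsn: int = 0                          # highest LSN hardened to stable media

    def append(self, payload):
        lsn = len(self.records) + 1
        self.records.append((lsn, payload))
        return lsn

    def flush_to_lsn(self, lsn):
        # Simulated FlushToLSN: everything up to and including lsn is now hardened.
        self.flushed_lsn = max(self.flushed_lsn, lsn)

@dataclass
class Page:
    page_id: int
    page_lsn: int = 0
    dirty: bool = False

def update_page(log, page, payload):
    lsn = log.append(payload)   # generate the log record
    page.page_lsn = lsn         # page LSN matches the log record
    page.dirty = True           # change the data (dirty the page)
    return lsn

class LazyWriter:
    def __init__(self, log):
        self.log = log
        self.disk_writes = []   # (page_id, page_lsn, flushed_lsn at time of write)

    def write_page(self, page):
        # WAL rule: harden the log through the page LSN first...
        if self.log.flushed_lsn < page.page_lsn:
            self.log.flush_to_lsn(page.page_lsn)
        # ...and only then write the data page.
        self.disk_writes.append((page.page_id, page.page_lsn, self.log.flushed_lsn))
        page.dirty = False
```

With two pages updated, writing the first page forces the log only through LSN 1; the second page's log record stays in memory until something needs it hardened.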

Notice that the latch protects physical access to the in-memory data page only for the short time the physical change is being made. The lock protects the associated data until the commit or rollback takes place.

This allows lazy writer to write out and remove a dirty page while the locking structures continue to maintain transactional integrity.

The question I always ask here is: if I issue a rollback instead of a commit, would SQL Server fetch pages back in to undo the update? The answer is yes. A page could have been removed from the buffer pool (page cache) by lazy writer after the FlushToLSN took place. When the rollback occurs, the pages changed by the transaction may need to be read back into the buffer pool to undo the change.

SQL Server 2000 used the log records to populate the INSERTED and DELETED tables in a trigger. Later versions instead use the row versioning behind snapshot isolation, internally, for every trigger rather than scanning the log records. This means the version store located in TEMPDB is involved in INSERTED and DELETED table population and row version tracking.

For whatever reason, this is a confusing subject for many people. Spend time on this slide to make sure everyone understands sync vs. async well.

I like to use an example outside of the direct API calls before I dive into API behaviors. I often compare sending a package with having a phone conversation, as a send-and-response paradigm, to help explain the behavior.

If you go to the post office and send a package, the contents of the package move to the destination in one direction. Once it is sent you can’t really cancel the action, and you have to wait for the recipient to receive the package and reply to you. It is a synchronization point, a sync type of activity.

If instead you are in a phone conversation, the traffic is two-way. In fact, many of us interrupt each other with answers and information before the full message even arrives at the other end of the conversation. You can hang up the phone to cancel the transmission, or ask a customer service representative a question, get put on hold, do other things, and then get an answer. This is closer to an asynchronous operation.

The more I give this presentation, the more I think it might be better to compare sending a package with sending an e-mail. You can send the e-mail and it goes out while you do other activities (reading other e-mails and being efficient with your time). You can later come back and check for a response, the delivery status, etc…

Others have suggested the idea of handwriting a note vs. sending the note to a printer. When handwriting, you are tied up (sync) and can’t do other things. When you send the note to the printer, you can do other things (async) while the physical generation of the note takes place.

In Windows, the I/O APIs allow both sync and async requests. A sync request is a call to an API such as WriteFile, issued synchronously, that does not return control to the calling code until the operation is complete. An async request hands the work off to the operating system and associated drivers and returns control to the calling code. The calling code is free to execute other logic and later come back to see if and when the I/O completes.
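As a rough analogy only, Python's `concurrent.futures` shows the same sync vs. async shape: the sync call returns the data, while the async call returns a handle immediately, and `Future.done()` plays the role that HasOverlappedIoCompleted plays for an OVERLAPPED request. This is not the Windows API; `fake_read` is a hypothetical stand-in for a device read that finishes only when its `release` event is set.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

def fake_read(page_id, release):
    """Hypothetical device read that completes only once `release` is set."""
    release.wait()
    return f"page {page_id}"

def sync_read(page_id):
    # Sync: the caller gets control back only when the data is available.
    release = threading.Event()
    release.set()                       # this "device" is ready immediately
    return fake_read(page_id, release)

def async_read(executor, page_id, release):
    # Async: hand the request off and return a handle right away.
    return executor.submit(fake_read, page_id, release)

with ThreadPoolExecutor(max_workers=1) as executor:
    release = threading.Event()
    future = async_read(executor, 42, release)
    still_pending = not future.done()   # like HasOverlappedIoCompleted() == FALSE
    # ... the caller is free to do other work here ...
    release.set()                       # the "device" finishes the I/O
    data = future.result()              # collect the completed request
```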

SQL Server mostly uses async I/O patterns. This allows SQL Server to write or read a page and then continue to use the CPU and other resources effectively. Take the example of a large sort operation. SQL Server can use its read-ahead logic to post (async request) reads for many pages and then start processing the first page returned. This allows SQL Server to use CPU resources to sort the rows on that page while the I/O subsystem is reading in other pages at the same time, maximizing I/O bandwidth and using other resources such as CPU more effectively.
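Here is a small Python sketch of the read-ahead idea under stated assumptions: `read_page` is a hypothetical, deterministic page generator, a thread pool stands in for the I/O subsystem, and `depth` is an arbitrary number of outstanding reads. All reads are posted up front, and each page's rows are sorted as soon as that page arrives, so the CPU work overlaps the reads still in flight.

```python
import heapq
import random
from concurrent.futures import ThreadPoolExecutor, as_completed

def read_page(page_id):
    """Hypothetical page read: returns 100 row keys, deterministic per page."""
    rng = random.Random(page_id)
    return [rng.randint(0, 10_000) for _ in range(100)]

def read_ahead_sort(page_ids, depth=8):
    """Post all reads up front, then sort each page's rows as its read completes."""
    runs = []
    with ThreadPoolExecutor(max_workers=depth) as io:
        posted = [io.submit(read_page, pid) for pid in page_ids]  # "post" the reads
        for done in as_completed(posted):
            runs.append(sorted(done.result()))  # CPU work overlaps reads still in flight
    return list(heapq.merge(*runs))             # merge the sorted runs
```

Pages complete in whatever order the "device" finishes them; the final merge does not care about that order.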

If you want to know more about async processing, study the OVERLAPPED structure associated with I/O requests and the HasOverlappedIoCompleted macro.

SQL Server also exposes the pending (async) I/O requests in the sys.dm_io_pending_io_requests DMV. I specifically point out that the io_pending column is key to understanding where the I/O request is and who is responsible for it. If the value is TRUE, HasOverlappedIoCompleted returned FALSE and the operating system or driver stack has yet to complete the I/O; the io_pending_ms_ticks column indicates how long the I/O has been pending. If io_pending reports FALSE, the I/O has completed at the operating system level and SQL Server is now responsible for handling the remainder of the request.
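The interpretation of io_pending can be written down as a tiny Python helper. The rows here are hand-built dictionaries standing in for the result of querying sys.dm_io_pending_io_requests; only the io_pending and io_pending_ms_ticks column names come from the text, while the function names and the threshold are illustrative.

```python
def who_owns(row):
    """Interpret one sys.dm_io_pending_io_requests row, given as a dict."""
    if row["io_pending"]:
        # HasOverlappedIoCompleted returned FALSE: the OS/driver stack still owns the I/O.
        return "os_or_driver"
    # The OS has completed the I/O; SQL Server owns the rest of the processing.
    return "sql_server"

def slow_os_pending(rows, threshold_ms):
    """Requests still pending in the OS for at least threshold_ms, slowest first."""
    slow = [r for r in rows if r["io_pending"] and r["io_pending_ms_ticks"] >= threshold_ms]
    return sorted(slow, key=lambda r: r["io_pending_ms_ticks"], reverse=True)
```

A large io_pending_ms_ticks on a row where io_pending is FALSE points back at SQL Server (for example, a busy scheduler) rather than at the I/O subsystem.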

Using async I/O means that SQL Server will often exceed the commonly recommended disk queue length of 2. This is by design, as the read-ahead logic I just described shows. SQL Server is more concerned with the number of I/O requests and the average disk seconds per transfer than with the actual queue depth.
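One way to see why a queue length above 2 is not automatically bad is Little's law (average requests in flight = request rate × average time per request). This is standard queueing arithmetic rather than anything specific to SQL Server, and the IOPS and latency figures below are made up for illustration.

```python
def average_outstanding_ios(iops, avg_sec_per_transfer):
    """Little's law: average requests in flight = arrival rate x time in system."""
    return iops * avg_sec_per_transfer

def average_latency(queue_length, iops):
    """The same law solved for latency (seconds per transfer)."""
    return queue_length / iops

# A deep but fast queue: 2000 IOPS at 5 ms each keeps about 10 I/Os in flight.
print(average_outstanding_ios(2000, 0.005))
# A shallow but slow queue: 100 IOPS at 20 ms each keeps only about 2 in flight.
print(average_outstanding_ios(100, 0.020))
```

The first case has five times the "recommended" queue length yet a quarter of the latency, which is why the seconds-per-transfer counter is the more useful signal.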

There are a few places in SQL Server where async I/O does not make sense. For example, when writing to a tape drive, the backup has to lay down blocks in order, so the I/O request is sync, and the threads/workers doing this activity run on hidden schedulers so they don’t cause any scheduler stalls.

An advantage of async I/O is that it avoids forcing a SQL Server worker to stay in kernel mode and allows it to do other user-mode processing, like the sort activity described earlier. Thus, it reduces the number of kernel threads in wait states and allows SQL Server to work better with the operating system and the SQLOS scheduler design.

SQL Server 6.5 Service Pack 4 started using the scatter/gather I/O APIs. Prior to this, a checkpoint would first sweep the buffer pool, locate all dirty pages for the current checkpoint generation, and place them on a list sorted by page id. The older design attempted to write the pages in an order that was often close to on-disk order.

One problem that SQL Server 6.x and earlier builds had was elevator seeking. The drive(s) would often service the I/O requests closest to the drive head, so in some cases a single I/O could be stalled longer than expected. If this was a critical page in an index, it could lead to unwanted concurrency stalls while checkpoint or lazy writer executed. Another problem was the need to maintain a separate list. Yet another problem was the number of I/O requests.

Scatter/gather addresses all of these issues nicely.

First, we are able to remove the sweep and sort onto a list of dirty pages. Instead, a new routine named WriteMultiple was added to SQL Server. Whenever WriteMultiple is called, it can look in the hash table for the next ## of pages and attempt to bundle them into a larger I/O request. The diagram shows the pages dispersed in physical memory but located next to each other on disk. Without scatter/gather, each of these data pages would require a separate I/O request to write the physically dispersed pages to disk. With WriteFileGather, the pages can all be part of a single I/O request.

This design removes the need to sort. All SQL Server has to do is sweep the buffer pool from position 0 … N, locate a dirty page for the database, and call WriteMultiple, which gathers the pages next to it on disk that are also dirty and issues the write. SQL Server 2005 will gather up to 16 pages with page numbers greater than the initial page requested, and SQL Server 2008 can gather up to 32 pages before or after the requested page to make a contiguous write request.
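A Python sketch of the gather selection is below. It assumes the dirty pages are known as a set of page ids and models the SQL Server 2005 and 2008 behaviors only as a cap on pages per request (`max_pages`) plus an optional backward scan; exactly how the real limits are counted is an assumption, and `gather_run` and `sweep` are hypothetical names.

```python
def gather_run(dirty, start, max_pages, backward=False):
    """Contiguous dirty page ids around `start` that one gather write could cover."""
    if start not in dirty:
        return []
    lo = hi = start
    # Extend forward (higher page ids) while the neighbor is also dirty.
    while hi - lo + 1 < max_pages and (hi + 1) in dirty:
        hi += 1
    # Optionally extend backward (lower page ids), 2008-style.
    while backward and hi - lo + 1 < max_pages and (lo - 1) in dirty:
        lo -= 1
    return list(range(lo, hi + 1))

def sweep(buffer_pool, max_pages, backward=False):
    """buffer_pool: (page_id, is_dirty) in buffer order, not disk order.
    Returns the write requests issued, each a run of contiguous page ids."""
    dirty = {pid for pid, is_dirty in buffer_pool if is_dirty}
    requests = []
    for pid, _ in buffer_pool:
        if pid in dirty:
            run = gather_run(dirty, pid, max_pages, backward)
            requests.append(run)
            dirty.difference_update(run)   # pages in the run are now clean
    return requests
```

No sorted list is ever built: the sweep visits buffers in memory order, and each request is still contiguous on disk.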

Because of the sweep, the writes are now issued out of order, so they are no longer as prone to elevator seek issues, and they are more efficient because the transfers are larger and fewer.

ReadFileScatter is used for reading pages into the buffer pool and performs the opposite operation. There is no longer a need for a contiguous 8, 16, 32, 64, …K chunk of memory. SQL Server merely needs to locate 8K chunks of memory and set up the read request. During the read, the data is placed in disparate locations in memory. Before scatter reads, each of these pages would have required a separate I/O request.
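The POSIX readv/writev calls, exposed in Python as `os.readv` and `os.writev` (Unix only), are the closest portable analog of ReadFileScatter and WriteFileGather: one call moves several separate 8K buffers to or from one contiguous file range. This sketch only illustrates the idea; it is not how SQL Server calls the Windows APIs, and the helper names are hypothetical.

```python
import os
import tempfile

PAGE = 8192

def write_gather(fd, pages, offset):
    """One call writes several separate page buffers to one contiguous file range."""
    os.lseek(fd, offset, os.SEEK_SET)
    return os.writev(fd, pages)

def read_scatter(fd, count, offset):
    """One call reads a contiguous file range into `count` separate 8K buffers."""
    buffers = [bytearray(PAGE) for _ in range(count)]
    os.lseek(fd, offset, os.SEEK_SET)
    nbytes = os.readv(fd, buffers)
    return buffers, nbytes

fd, path = tempfile.mkstemp()
try:
    pages = [bytes([i]) * PAGE for i in range(4)]   # four pages "scattered" in memory
    written = write_gather(fd, pages, 0)             # one gather write
    buffers, nread = read_scatter(fd, 4, 0)          # one scatter read
finally:
    os.close(fd)
    os.remove(path)
```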

Some SQL Server editions (Enterprise, for example) perform additional ramp-up reads. When SQL Server is first started, each page read is expanded to 8 pages so the buffer pool is populated quickly with additional pages near the pages currently being requested. This can reduce the time required to return the buffer pool to a warm/hot state. Once the commit target is reached, the ramp-up behavior is disabled.
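A toy model of ramp-up follows. It assumes each miss reads the requested page plus the following seven (the text does not say which neighboring pages are chosen) and treats the number of cached pages as the committed size; `expand_read` and `warm_up` are hypothetical names.

```python
def expand_read(page_id, cached_pages, commit_target, ramp_factor=8):
    """Pages to read for a miss on `page_id`. Until the buffer pool reaches the
    commit target, the read is expanded (ramp-up); afterwards it is a single page."""
    if cached_pages < commit_target:
        return range(page_id, page_id + ramp_factor)
    return range(page_id, page_id + 1)

def warm_up(requests, commit_target, ramp_factor=8):
    """Replay page requests against an empty cache; return (cached pages, read count)."""
    cached, reads = set(), 0
    for pid in requests:
        if pid in cached:
            continue                              # buffer pool hit: no I/O
        cached.update(expand_read(pid, len(cached), commit_target, ramp_factor))
        reads += 1
    return cached, reads
```

Scanning 64 pages takes 8 reads while ramp-up is active and 64 reads once it is off, which is the warm-up saving the paragraph describes.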

Sector size is used to write to the log. Versions of SQL Server before SQL Server 7.0 used data-page-sized writes for the log records, and the same log page could be rewritten. This actually violates the intent of DURABILITY. For example, you have a committed transaction that has completed its FlushToLSN and released its locks, but the data pages have not been written to the data file. Another transaction issues a FlushToLSN, and the same log page is written again with the additional log records. If this write fails (bad sector or hardware failure), you may lose information about the transaction that was previously considered committed.

The SQL Server 6.x design can be faster than the SQL Server 7.0 and later design because the same location on disk may be written several times, but it is unsafe. The SQL Server 6.x design can also pack more log records for smaller transactions into the same set of sectors, whereas SQL Server 7.0 and later builds use more log (.ldf) disk space.

SQL Server 7.0 changed the logging algorithms to be sector-based. Any time the physical flush takes place, it occurs on a sector boundary. FlushToLSN attempts to pack as many active log records as possible into sector-aligned boundaries and writes in sector-size units. Each sector contains a parity bit that the log uses during crash recovery to detect which sectors were validly flushed before the crash.
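Here is a simplified Python model of a sector-based log. Assumptions: 512-byte sectors, one parity bit per sector that flips each time the log wraps, and recovery knowing the current parity. The real on-disk format differs; the sketch only shows that each flush starts on a fresh sector (so no sector is rewritten) and how a parity bit lets recovery find the end of the validly written sectors. `SectorLog` is a hypothetical name.

```python
SECTOR = 512

class SectorLog:
    """Simplified sector-based log: flushes are written in whole sectors and
    each new flush starts on a fresh sector, so no sector is rewritten."""

    def __init__(self, num_sectors):
        self.sectors = [None] * num_sectors  # each entry: (parity, 512-byte payload)
        self.next_sector = 0
        self.parity = 1                      # assumed to flip every time the log wraps
        self.pending = []                    # records appended but not yet flushed

    def append(self, record):
        self.pending.append(record)

    def flush(self):
        """Simulated FlushToLSN: pack pending records into sectors; return sectors written."""
        if not self.pending:
            return 0
        data = b"".join(self.pending)
        self.pending.clear()
        count = -(-len(data) // SECTOR)      # ceiling division
        for i in range(count):
            if self.next_sector == len(self.sectors):
                self.next_sector = 0         # wrap around the log file
                self.parity ^= 1
            chunk = data[i * SECTOR:(i + 1) * SECTOR].ljust(SECTOR, b"\0")
            self.sectors[self.next_sector] = (self.parity, chunk)
            self.next_sector += 1
        return count

    def valid_sectors(self):
        """Recovery sketch: scan from the start of the log and stop at the first
        sector whose parity does not match the current pass."""
        count = 0
        for sector in self.sectors:
            if sector is None or sector[0] != self.parity:
                break
            count += 1
        return count
```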

Hardware manufacturers typically maintain that the stable media has enough capacity to ensure a complete sector write when a power outage is encountered, so the sector size is the most stable choice.

Take the following example:

declare @i int

set @i = 1

while (@i <= 1000)

begin

insert into tblTest values (…)

set @i = @i + 1

end

SQL Server 6.x would keep rewriting the same log page over and over. SQL Server 7.0 and later builds flush each insert, because each one commits as its own autocommit transaction, so 1000 sectors are used. Many customers encountered this and needed to understand the new behavior.

To correct the issue, put groups of inserts into a single transaction. For this example, if you wrap the entire loop in a BEGIN TRANSACTION / COMMIT TRANSACTION pair, a single FlushToLSN is issued and all 1000 inserts are compacted into far fewer log sectors.
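The arithmetic behind the 1000-insert example can be sketched as follows, assuming a hypothetical 100-byte log record per insert and that every commit forces a flush starting on a fresh 512-byte sector. `log_flushes` is a hypothetical name.

```python
SECTOR = 512

def log_flushes(record_sizes, commit_every):
    """Return (flushes, sectors) when every commit flushes its pending records
    starting on a fresh sector boundary."""
    flushes = sectors = 0
    for i in range(0, len(record_sizes), commit_every):
        batch_bytes = sum(record_sizes[i:i + commit_every])
        flushes += 1
        sectors += -(-batch_bytes // SECTOR)   # round up to whole sectors
    return flushes, sectors

inserts = [100] * 1000                # hypothetical 100-byte log record per insert
print(log_flushes(inserts, 1))        # autocommit: (1000, 1000)
print(log_flushes(inserts, 100))      # commit every 100 inserts: (10, 200)
print(log_flushes(inserts, 1000))     # one transaction around the loop: (1, 196)
```

The number of flushes drops by 1000x; how many sectors are saved depends on the record size, which is why grouping commits (rather than one giant transaction) already captures most of the benefit.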

CAUTION: Wrapping transactions broadly can reduce concurrency, so control-break processing that commits in groups is usually a better design than wrapping everything in one global transaction.

Some newer drives have sectors larger than 512 bytes. This is not a problem for SQL Server, but SQL Server can prevent restoring a database between drives with different sector sizes. The reason for preventing the move is to avoid the possibility of sector rewrites.

For example, say the database is created on a drive with a sector size of 512 bytes and (if SQL Server allowed it) restored to a drive with a 4096-byte sector size. SQL Server’s .ldf metadata and log file initialization track 512-byte boundaries, so it would continue to flush log records in 512-byte sectors. This could result in sector rewrites during subsequent flushes to the 4096-byte sectors and leave the database susceptible to log record loss.
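This Python sketch counts how often a physical sector would be rewritten when the log keeps flushing in one sector size while the device uses another. It assumes every flush is rounded up to the log's sector size and starts where the previous one ended; `sector_rewrites` is a hypothetical name.

```python
def sector_rewrites(flush_sizes, log_sector, physical_sector):
    """Return how many extra times physical sectors get written.
    Each flush is rounded up to log_sector bytes and starts where the last one ended."""
    writes = {}                              # physical sector index -> times written
    pos = 0
    for size in flush_sizes:
        length = -(-size // log_sector) * log_sector
        first = pos // physical_sector
        last = (pos + length - 1) // physical_sector
        for sector in range(first, last + 1):
            writes[sector] = writes.get(sector, 0) + 1
        pos += length
    return sum(count - 1 for count in writes.values())
```

Eight small flushes tracked on 512-byte boundaries never overlap on a 512-byte device, but on a 4096-byte device they all land in the same physical sector, which is rewritten seven times.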

Note that some drives with large sector sizes will report 512 bytes for backward compatibility and do the re-writes without the system knowing about it. You should validate the physical sector size vs reported sector sizes when using these new drives.

Block size and alignment came up often in support before the changes in Windows Server 2008 that adjust the default alignment to a better boundary.

The problem is often that the partition starts after 63 hidden 512-byte sectors, so every fetch and write of a 64K SQL Server extent results in 2 disk block touches. You want to avoid rewriting a block just to handle the 64th sector, and to protect the other 63 sectors from stable media damage. You also want to avoid the performance impact of the 2-for-1 operations.
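The 2-for-1 effect is simple offset arithmetic. This sketch assumes a 64K device block (for example, a stripe unit) and compares the classic 63-sector partition offset with a 1 MB offset (the Windows Server 2008 default).

```python
SECTOR = 512
BLOCK = 64 * 1024          # assumed device block / stripe unit size
EXTENT = 64 * 1024         # SQL Server extent: 8 pages x 8K

def blocks_touched(partition_offset, io_offset, io_size, block_size=BLOCK):
    """Number of device blocks that one I/O at io_offset (within the partition) touches."""
    start = partition_offset + io_offset
    end = start + io_size - 1
    return end // block_size - start // block_size + 1

legacy = 63 * SECTOR       # 32,256 bytes: partition starts after 63 hidden sectors
aligned = 1024 * 1024      # 1 MB offset

for name, offset in (("63-sector offset", legacy), ("1 MB offset", aligned)):
    print(name, [blocks_touched(offset, n * EXTENT, EXTENT) for n in range(4)])
# 63-sector offset [2, 2, 2, 2]
# 1 MB offset [1, 1, 1, 1]
```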

Review the KB articles related to Diskpart/DiskPar and work with the hardware manufacturer to make sure the proper block alignment is achieved. You can also use the SQLIO.exe utility to help tune your I/O path for SQL Server.

Defragmentation is sometimes a good idea for SQL Server but is generally not needed. I usually see a benefit if the database shrinks and grows a lot, so it is releasing and acquiring physical sectors frequently. If the database size is fixed, the sectors are acquired one time and usually in blocks.

Whenever you defragment a volume with SQL Server files, be sure to take a SQL Server backup first and make sure the defragmentation utility is transactional. The utility must acquire new space, copy the data, and release the old space in a transactionally safe way so a power outage during defragmentation does not damage the SQL Server files.

The LATCH protects physical access to the in-memory database page. Latches are used in other areas of the server for synchronization, but for I/O they protect the physical integrity of the page.

For example, when a page is being read into the data cache, there is no way to tell how much of the page is valid for reading until the I/O is fully complete. SQL Server associates a latch with every database page held in memory. When a read of the page from the data file takes place, an exclusive (EX) latch is acquired and held until the read completes. This prevents any other access to the physical page. The PAGEIOLATCH_* wait types are used when reading and writing pages and are expected to be long page latches (I/O speed).

This is different from a lock because multiple row locks can apply to the same page, but only a single latch is used to protect its physical integrity. A PAGELATCH_* wait indicates a latch on a page that is already in memory (not in I/O), and it should be held only for the time needed to modify some physical data on the page. This is considered a short latch and allows SQL Server to maintain row-level locking while still synchronizing (one at a time) the specific physical changes on the page itself.

The latch allows multiple readers but a single writer. So once the page is in memory, it can be read (by a SELECT, for example) by hundreds of sessions at the same time, because SH (shared) acquires don’t block each other. The latch is basically FIFO, which prevents livelock scenarios from occurring.
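A minimal Python sketch of a FIFO shared/exclusive latch follows. It uses `threading.Condition`, which is kernel-backed, unlike SQL Server's user-mode latch, and the strict FIFO policy (a new SH request waits behind an earlier EX waiter) is an assumption used to show why FIFO ordering keeps a stream of readers from starving a writer. `Latch` is a hypothetical name.

```python
import threading
from collections import deque

class Latch:
    """Sketch of a page latch: many SH holders or one EX holder, granted in FIFO order."""

    def __init__(self):
        self._cond = threading.Condition()
        self._queue = deque()   # waiting requests, oldest first
        self._sh = 0            # number of current SH holders
        self._ex = False        # True while an EX holder exists

    def _grantable(self, req):
        if self._queue[0] is not req:
            return False        # strict FIFO: only the oldest waiter can be granted
        if req[0] == "EX":
            return not self._ex and self._sh == 0
        return not self._ex     # SH is compatible with other SH holders

    def acquire(self, mode):
        req = (mode, object())  # unique identity for this request
        with self._cond:
            self._queue.append(req)
            self._cond.wait_for(lambda: self._grantable(req))
            self._queue.popleft()
            if mode == "EX":
                self._ex = True
            else:
                self._sh += 1
            self._cond.notify_all()   # the next waiter may be a compatible SH

    def release(self, mode):
        with self._cond:
            if mode == "EX":
                self._ex = False
            else:
                self._sh -= 1
            self._cond.notify_all()
```

With two SH holders active, an EX request queues, and a later SH request queues behind it rather than slipping past; once the readers release, the writer runs first.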

The latch is implemented in user mode and does not involve kernel synchronization objects. Instead it is built in conjunction with SQLOS to properly yield to other workers and maximize the overall resource usage by the SQL Server.

Can you wait on yourself? Yes, it is possible to wait on yourself. That behavior was always part of the latch design, but it was only exposed starting with SQL Server 2000 SP4. In the case of a read, the worker acquires an EX latch and posts (async request) the I/O. The worker goes off and does other work and later comes back to read the data on the page that it put in motion. It attempts to acquire an SH latch on the page, and if the I/O is still pending, the original EX latch blocks it. (It is blocked on an I/O request it posted itself.) When the I/O completes, the EX latch is released and processing continues. The aspect of this to be aware of is that you don’t want large wait times for PAGEIOLATCH_* types; they indicate SQL Server is using an I/O pattern that the hardware is not responding to in a timely fashion.

Many jump to the conclusion that if the average disk seconds per transfer is > 4ms or > 10ms, there is an I/O bottleneck at the hardware. This may not be true. As discussed earlier, read-ahead can post a deep queue of I/Os. While you want the average disk seconds per transfer to be small, the PAGEIOLATCH_* wait type is a good indicator of how well the sub-system is responding to the needs of SQL Server. The virtual file statistics DMV (sys.dm_io_virtual_file_stats) is another good place to determine how well the I/O sub-system is responding to SQL Server requests.

Sub-latch is also referred to as super latch. These are only used for data cache page latches, and they are designed to reduce latch contention on hot pages. For example, if you have a lookup table that is only a few pages in size but used by hundreds of queries per second, the SH latch activity needed to protect the page is intense. When SQL Server detects high rates of SH latch activity for a sustained period, a buffer latch is PROMOTED to a sub-latch. A sub-latch partitions a single latch into an array of latch structures, one per logical CPU. In doing so, the worker (always assigned to a specific CPU) only needs to acquire an SH on the sub-latch assigned to the local scheduler. This avoids interlocked activity and cache line invalidations across all physical CPUs. Acquiring an SH sub-latch uses fewer resources and scales access to hot pages better.

The downside of a sub-latch is that when an EX latch is requested, it must be acquired on EVERY sub-latch. When a sub-latch detects a series of EX requests, it can be DEMOTED back to the single latch mechanism.
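The promotion/demotion trade-off can be modeled with simple bookkeeping. This Python sketch is try-acquire only (it never blocks), the thresholds are hypothetical rather than SQL Server's real heuristics, and shape changes happen only while the latch is free; `touched` counts latch structures modified as a rough proxy for cache-line traffic. `AdaptiveLatch` is a hypothetical name.

```python
class AdaptiveLatch:
    """Bookkeeping sketch of a latch that can be promoted to per-CPU partitions."""

    def __init__(self, cpus, promote_after=100, demote_after=3):
        self.cpus = cpus
        self.promote_after = promote_after   # hypothetical SH-streak threshold
        self.demote_after = demote_after     # hypothetical EX-streak threshold
        self.partitioned = False
        self.sh = [0] * cpus                 # SH holders per partition (slot 0 if single)
        self.ex = False
        self.sh_streak = 0
        self.ex_streak = 0
        self.touched = 0                     # latch structures modified

    def _maybe_switch(self):
        if self.ex or any(self.sh):
            return                           # only change shape while nobody holds it
        if not self.partitioned and self.sh_streak >= self.promote_after:
            self.partitioned = True          # PROMOTE to a sub-latch
        elif self.partitioned and self.ex_streak >= self.demote_after:
            self.partitioned = False         # DEMOTE to a single latch

    def _slot(self, cpu):
        return cpu if self.partitioned else 0

    def try_sh(self, cpu):
        self._maybe_switch()
        if self.ex:
            return False
        self.sh[self._slot(cpu)] += 1        # only the local partition is touched
        self.touched += 1
        self.sh_streak += 1
        self.ex_streak = 0
        return True

    def release_sh(self, cpu):
        self.sh[self._slot(cpu)] -= 1

    def try_ex(self):
        self._maybe_switch()
        if self.ex or any(self.sh):
            return False
        self.ex = True
        self.touched += self.cpus if self.partitioned else 1   # EX claims every partition
        self.ex_streak += 1
        self.sh_streak = 0
        return True

    def release_ex(self):
        self.ex = False
```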

I have touched on reading a page on previous slides and described the locking vs. latching mechanisms. Now let's walk through a page read in detail, including the audit checks.

When a worker needs to access a page, it calls routines such as BufferPool::GetPage. The GetPage routine does a hash search looking for a BUF structure that already has the page in memory. If found, the page is latched and returned to the caller. If not found, the page must be read from disk.

Here is the simplest form of reading a page. SQL Server can read pages with read ahead, ramp-up and other logic but the following is the clearest for discussion.

Step 1: A request to the memory manager for an aligned (OS Page alignment 4K or 8K) 8K page is made.

Step 2: The page is associated with a BUF structure for tracking the page

Step 3: The EX latch is acquired to protect the page

Step 4: The BUF is inserted into the hash table. In doing so all other requests for the page use the same BUF and Page and access is currently prevented by the EX latch

If the entry is already in the hash table, release the memory and use the entry that is already in the hash table.

Step 5: Set up the I/O request and post it (async I/O request).

Step 6: Attempt to acquire the requested latch type. (This will block until the page read completes.)

Step 7: Check for any error conditions that may be present for the page and raise an error if present.
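The steps above can be sketched in Python. This is an illustration under assumptions, not SQL Server's implementation: `read_fn` is a hypothetical device read, a thread pool stands in for async I/O completion, and a `threading.Event` stands in for the EX latch (unset while the read is in flight), so Steps 3 and 6 are simplified to "wait until the read completes".

```python
import threading
from concurrent.futures import ThreadPoolExecutor

PAGE_SIZE = 8192

class Buf:
    """Tracks one 8K page in memory (Steps 1-2)."""
    def __init__(self, page_id):
        self.page_id = page_id
        self.data = bytearray(PAGE_SIZE)
        # Step 3 (simplified): an unset event stands in for the EX latch
        # held while the read is in flight.
        self.io_done = threading.Event()
        self.berrcode = None

class BufferPool:
    def __init__(self, read_fn, executor):
        self.hash = {}                       # page_id -> Buf
        self.hash_lock = threading.Lock()
        self.read_fn = read_fn               # hypothetical device read: page_id -> bytes
        self.executor = executor

    def get_page(self, page_id):
        with self.hash_lock:
            buf = self.hash.get(page_id)
        if buf is None:
            new_buf = Buf(page_id)                               # Steps 1-3
            with self.hash_lock:
                buf = self.hash.setdefault(page_id, new_buf)     # Step 4
            if buf is new_buf:
                self.executor.submit(self._complete_read, buf)   # Step 5: post the read
            # else: another worker inserted first; new_buf is simply dropped
        buf.io_done.wait()                                       # Step 6
        if buf.berrcode is not None:                             # Step 7
            raise OSError(buf.berrcode)
        return buf

    def _complete_read(self, buf):
        # Completion callback: it cannot raise to the caller, so it records
        # berrcode on the BUF and then releases the "EX latch".
        try:
            data = self.read_fn(buf.page_id)
            buf.data[:len(data)] = data
        except OSError as exc:
            buf.berrcode = str(exc)
        finally:
            buf.io_done.set()
```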

Some errors result in additional activity. For example, a checksum failure results in read-retry behavior. The Exchange and SQL Server teams have found that in some instances issuing the same read again (up to 4 times) can return the correct image of the page. The SQL Server error log reflects that retries were attempted and whether they succeeded or failed. In either case, the retry messages should be taken as a sign of possible sub-system problems that need to be corrected.
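A hedged sketch of read-retry: the text says the read is reissued up to 4 times, which this sketch interprets as 4 retries after the first attempt. `read_fn` and `checksum_ok` are hypothetical callables, and the messages are illustrative, not the real error log text.

```python
def read_with_retry(read_fn, checksum_ok, page_id, max_retries=4):
    """Return (page, messages). Raises OSError if every attempt fails."""
    messages = []
    for attempt in range(max_retries + 1):
        page = read_fn(page_id)
        if checksum_ok(page):
            if attempt:
                # Success after retries is still worth logging: the sub-system is suspect.
                messages.append(f"page {page_id}: read succeeded after {attempt} retries")
            return page, messages
        messages.append(f"page {page_id}: checksum failure on attempt {attempt + 1}")
    raise OSError("; ".join(messages))
```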

SQLIOSim.exe ships with SQL Server 2008 or can be downloaded. It mimics SQL Server I/O behaviors and patterns and adds random I/O patterns to the test passes. We have done extensive testing with the utility, and it often reproduces the same I/O problem(s) logged in the SQL Server error log, independent of the SQL Server process or database files. Use it to help narrow down a reproduction on a troubled system. CAUTION: SQLIOSim can’t be used for performance testing, as it can post I/O requests at depths of 10,000 or more to make sure the sub-system and drivers don’t cause blue screens when the I/O depth is stressed. Some drivers have caused blue screens and others don’t recover well. The I/O response time is expected to be poor, but the system should recover gracefully.

When the read completes, the EX latch is not released until the audit activity takes place (823, 824, 605, and similar error condition checks).

I/O completion runs in a callback routine that can’t log an error, so an error code (berrcode) is set in the BUF structure, and the next acquire (Step 7 above) checks for the error and handles it accordingly.

•Check the number of bytes transferred

•Then check the operating system error code

•Check that the page id in the page header matches the one expected from the offset (offset / 8K). Some sub-system bugs return the wrong offset (605 error).

•If PAGE_VERIFY is enabled, check the checksum or torn bit information

•If the trace flag that enables DBCC page auditing is set, a page audit is executed

Set the berrcode accordingly and release the latch. Compatible waiters on the latch are woken to continue processing.
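The audit sequence can be sketched as a function that returns a berrcode-style string. The page layout (page id in bytes 0-3, a CRC32 of the rest in bytes 4-7) is invented for the example and is not SQL Server's page header; the trace-flag page audit is not modeled, and the names are hypothetical.

```python
import zlib
from dataclasses import dataclass

PAGE_SIZE = 8192

@dataclass
class Completion:
    offset: int              # file offset the read was issued for
    bytes_transferred: int
    os_error: int            # 0 means success
    data: bytes

def make_page(page_id, payload=b""):
    """Build a page in the invented layout: id (4 bytes), CRC32 (4 bytes), body."""
    body = payload.ljust(PAGE_SIZE - 8, b"\0")
    return page_id.to_bytes(4, "little") + zlib.crc32(body).to_bytes(4, "little") + body

def audit_read(c, checksum_enabled=True):
    """Return a berrcode-style message, or None when the page passes every check."""
    if c.bytes_transferred != PAGE_SIZE:
        return "short transfer"
    if c.os_error:
        return f"operating system error {c.os_error} (823)"
    if int.from_bytes(c.data[0:4], "little") != c.offset // PAGE_SIZE:
        return "page id does not match offset (605)"
    if checksum_enabled:
        stored = int.from_bytes(c.data[4:8], "little")
        if stored != zlib.crc32(c.data[8:]):
            return "checksum failure (824)"
    return None
```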

Revisit the PAGEIOLATCH_* vs. PAGELATCH_* wait type meanings.

Writing a page is much like reading a page. The page is already in memory and the BUF status is dirty (changed). To write a page, SQL Server always uses the WriteMultiple routine that I discussed on an earlier slide.

Lazy Write – Clock sweeping the buffer pool to maintain the free lists. A buffer is found dirty and the time of last access shows the buffer can be aged so WriteMultiple is called on the buffer.

Checkpoint – A request to checkpoint a database is enqueued or requested. This can happen for various reasons: the number of changes in the database would exceed the recovery interval, a backup is issued, a manual checkpoint is run, or an alteration requires a checkpoint. A sweep from ordinal 0 to max committed is done, locating the dirty pages associated with the specified database, and WriteMultiple is called.

Eager Writes – During some operations (BCP, non-logged blob activity, …) pages are flushed during the transactional activity as they must be on disk to complete the transaction. These are deemed eager writes and WriteMultiple is used for these writes as well.

As you may recall, WriteMultiple does not just write the requested page but attempts to build up a request for the dirty pages adjacent to it, reducing the number of I/O requests and increasing the I/O request size for better performance.

To write the page, a latch must first be acquired. In most cases this is an EX latch to prevent further changes on the page. For example, the EX latch is acquired, the checksum or torn bits are calculated, and the page is then written. The page can never change during the write or it will become corrupted. You might think an SH latch would be enough, because it already prevents an EX latch from changing the page, so why would an EX latch be required during the write and block readers? Take torn page protection (PAGE_VERIFY TORN_PAGE_DETECTION) as the example. Torn bit protection changes a bit in every sector. If the page were read in this state, it would appear to be corrupted. So to handle torn bit protection, the EX latch is acquired, the write completes, and the torn bits are removed from the in-memory copy of the page so readers see the right data. In most instances the EX latch is used, but SQL Server will use an SH latch when possible to allow readers during the write.
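This Python sketch shows why torn bits make the in-memory page look corrupt while the write is in progress, and how a partially written page is detected. Assumptions: bit 0 of the last byte of each 512-byte sector holds the torn bit, and the original bits are returned to the caller instead of being stored on the page; the function names are hypothetical.

```python
SECTOR = 512
PAGE = 8192
SECTORS = PAGE // SECTOR          # 16 sectors per page

def _torn_index(sector):
    return (sector + 1) * SECTOR - 1   # last byte of the sector (assumed location)

def apply_torn_bits(page, pattern):
    """Stamp the torn bit of every sector with `pattern` (0 or 1) before the write.
    Returns the original bits so the in-memory copy can be restored afterwards."""
    saved = []
    for s in range(SECTORS):
        i = _torn_index(s)
        saved.append(page[i] & 1)
        page[i] = (page[i] & 0xFE) | pattern
    return saved

def restore_torn_bits(page, saved):
    """After the write completes (still under the EX latch), put the real bits back."""
    for s, bit in enumerate(saved):
        i = _torn_index(s)
        page[i] = (page[i] & 0xFE) | bit

def is_torn(page_on_disk, pattern):
    """On read: if any sector carries a different pattern, the write was torn."""
    return any((page_on_disk[_torn_index(s)] & 1) != pattern for s in range(SECTORS))
```

Between `apply_torn_bits` and `restore_torn_bits` the in-memory bytes differ from the real data, which is exactly the window the EX latch keeps readers out of.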

Stalled/Stuck I/O: SQL Server 2000 SP4 added a warning that an I/O was taking too long and appears to be stuck or stalled. When an I/O request is posted (async), the time is kept with the tracking information (visible in sys.dm_io_pending_io_requests). Lazy writer checks these lists periodically, and if any I/O is still pending at the operating system level (HasOverlappedIoCompleted returns FALSE) and 15 seconds have elapsed, the warning is recorded. Each file reports its number of stalls at most once every 5 minutes to avoid flooding the log.
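A small model of the stall check follows, assuming each pending request is a (file, posted_at, os_completed) tuple with times in seconds. The 15-second threshold and the 5-minute per-file reporting interval come from the text; the class name and warning format are hypothetical.

```python
STALL_SECONDS = 15       # from the text
REPORT_INTERVAL = 300    # at most one warning per file every 5 minutes

class StallMonitor:
    def __init__(self):
        self.last_report = {}   # file -> time of its last warning

    def check(self, pending, now):
        """pending: iterable of (file, posted_at, os_completed).
        Returns a list of (file, stalled_count) warnings to log now."""
        stalled = {}
        for file, posted_at, os_completed in pending:
            # Only I/O still pending at the OS level counts as stalled.
            if not os_completed and now - posted_at >= STALL_SECONDS:
                stalled[file] = stalled.get(file, 0) + 1
        warnings = []
        for file, count in sorted(stalled.items()):
            last = self.last_report.get(file)
            if last is None or now - last >= REPORT_INTERVAL:
                self.last_report[file] = now
                warnings.append((file, count))
        return warnings
```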

Since a normal I/O request should complete in ~15ms or less, 15 seconds is far too long. For example, if an I/O request is stalled for 30 seconds and the query timeout is 30 seconds, it can cause query timeouts. If the stalled I/O request is for the log, it can cause unwanted blocking.

If you are seeing these warnings, you need to double-check the I/O sub-system and use SQLIOSim.exe to help narrow down the problem. The cause can be anything from the configured HBA queue depth or the multi-path failover detection mechanism to virus scanners or other filter drivers.

The Microsoft Platforms team can use ETW tracing facilities to help track down the source of the stalled/stuck I/O request.

In some situations the stall can result in scheduler hangs (17883, for example). The slide shows a stack from a stuck I/O request. Remember that SQL Server I/O is mostly async, so the call to WriteFile should be fast, just a hand-off. However, if a filter driver gets stuck before the I/O is considered posted at the I/O Request Packet (IRP) level, the kernel call behaves as if the I/O request were sync. This is bad because the worker posting the async I/O is stuck in kernel mode and the logical scheduler is not progressing. SQL Server detects this, issues the 17883 warning, and captures a mini-dump.

I have touched on reading a page on previous slides already and described the locks vs latching mechanisms. Now walk-through a page read in detail with audit checks and such.

When a worker needs to access a page is calls routines such as BufferPool::GetPage. The GetPage routine does a hash search looking for a BUF structure that already has the page in memory. If found the page is latched and returned to the caller. If not found the page must be read from disk.

Here is the simplest form of reading a page. SQL Server can read pages with read ahead, ramp-up and other logic but the following is the clearest for discussion.

Step 1: A request to the memory manager for an aligned (OS Page alignment 4K or 8K) 8K page is made.

Step 2: The page is associated with a BUF structure for tracking the page

Step 3: The EX latch is acquired to protect the page

Step 4: The BUF is inserted into the hash table. In doing so all other requests for the page use the same BUF and Page and access is currently prevented by the EX latch

If the entry is already in the hash table release the memory and use what is already in the hash table at this time

Step 5: Setup the I/O request and post (async I/O request) the I/O request.

Step 6: Attempt to acquire the requested latch type asked for. (This will block until the page read completes)

Step 7: Check for any error conditions that may be present for the page and raise an error if present.

Some errors result in additional activity. For example a checksum failure will result in read-retry behavior. Exchange and SQL Server have found that in some instances issuing the same read again (up to 4 times) can return the correct image of the page. The SQL Server error log will reflect that retries were attempted and successful or failed. In either case the retry messages should be taken as a sign of possible sub-system problems and corrected.

SQLIOSim.exe ships with SQL Server 2008 or can be downloaded. It mimics SQL Server I/O behavior(s) and patterns as well as adds random I/O patterns to the test passes. We have done extensive testing with the utility and it often will reproduce the same I/O problem(s) logged in the SQL Server error log independent from the SQL Server process or database files. Use it to help narrow a reproduction on a troubled system. CAUTION: SQLIOSIM can’t be used for performance testing as it can post I/O requests at depths of 10,000 or more to make sure the sub-system and drivers don’t cause blue screens when the I/O depth is stressed. Some drivers have caused blue screens and others don’t recover well. It is expected that the I/O response time will be poor but the system should recovery gracefully.

When the read completes it does not release the EX latch until audit activity takes place. (823, 824, 605 and such error condition checks).

The process of completing an I/O is a callback routine and can’t log an error so an error code (berrcode) is set in the BUF structure and the next acquire (Step 7 above) will check for the error and handle it accordingly.

•Check the number of bytes transferred

•Then the operating system error code

•Does the page in the page header match that expected from the offset (offset / 8K) - Some sub-system bugs will return the wrong offset (605 error)

•If PAGE_AUDIT is enabled check the checksum or torn bit information

•If the trace flag is enabled to perform dbcc audit a page audit is executed

Set the berrcode accordingly and release the latch. Compliant waiters of the latch are woken to continue the processing.

Revisit the PAGE_IO* vs PAGE_* latch meanings.

Writing a page is just pretty much like reading a page. The page is already in memory and the BUF status is dirty (changed). To write a page SQL Server always used the WriteMultiple that I discussed during an earlier slide.

Lazy Write – Clock sweeping the buffer pool to maintain the free lists. A buffer is found dirty and the time of last access shows the buffer can be aged so WriteMultiple is called on the buffer.

Checkpoint – A request to checkpoint a database is enqueued or requested. This can happen for various reasons (number of changes in the database would exceed recovery interval), backup is issues, manual checkpoint, an alteration requiring checkpoint. A sweep from ordinal 0 to max committed is done, locating the dirty pages associated with the specified database and WriteMultiple is called.

Eager Writes – During some operations (BCP, non-logged blob activity, …) pages are flushed during the transactional activity as they must be on disk to complete the transaction. These are deemed eager writes and WriteMultiple is used for these writes as well.

If you recall, WriteMultiple does not just write the requested page; it attempts to build a request that also includes the dirty pages adjacent to it, reducing the number of I/O requests and increasing the I/O request size for better performance.
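A minimal sketch of the coalescing idea, assuming the dirty pages are known as a set of page ids and the request is capped at a hypothetical maxPages. BuildWriteRange is an illustrative name, and growing forward before backward is an assumption, not WriteMultiple's actual logic.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <utility>

// Returns the inclusive page range [first, last] to write in a single request.
std::pair<std::uint32_t, std::uint32_t> BuildWriteRange(std::uint32_t requested,
                                                        const std::set<std::uint32_t>& dirty,
                                                        std::uint32_t maxPages) {
    assert(dirty.count(requested) == 1 && maxPages >= 1);
    std::uint32_t first = requested, last = requested;
    // Extend forward while the next page is dirty and the request is under the cap.
    while (last - first + 1 < maxPages && dirty.count(last + 1)) ++last;
    // Then extend backward under the same rule.
    while (last - first + 1 < maxPages && first > 0 && dirty.count(first - 1)) --first;
    return {first, last};
}
```

With dirty pages {3, 4, 5, 6, 9}, a request for page 4 becomes one write of pages 3 through 6 instead of four separate writes.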

To write the page a latch must first be acquired. In most cases this is an EX latch to prevent further changes to the page: the EX latch is acquired, the checksum or torn bits are calculated, and the page is then written. The page can never change during the write or it will become corrupted. You might think an SH latch would be enough, since it prevents anyone from acquiring the EX latch needed to change the page, so why hold an EX latch during the write and block readers? Take the torn PAGE_AUDIT protection as the example. Torn bit protection changes a bit in every sector, so a reader looking at the page in this state would see what appears to be a corrupted page. To handle torn bit protection, the EX latch is acquired, the write completes, and the torn bit protection is removed from the in-memory copy of the page so readers see the right data. In most instances the EX latch is used, but SQL Server will use an SH latch when possible to allow readers during the write.
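To show why readers must not see the page mid-write, here is a toy model of torn bit protection in C++. It assumes the protected bit is the low bit of the last byte in each 512-byte sector and that the original bits are kept in a side field (savedBits); the real on-page layout differs, and Page, ApplyTornBits, VerifyTornBits and RemoveTornBits are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kSector = 512;
constexpr std::size_t kSectors = 16;  // 8 KB page

struct Page {
    std::array<std::uint8_t, kSector * kSectors> bytes{};
    std::uint16_t savedBits = 0;  // original low bits, one per sector (assumed layout)
    std::uint8_t marker = 0;      // alternates 0/1 on each protected write
};

// Under the EX latch, just before the write: stamp every sector with the marker.
void ApplyTornBits(Page& p) {
    p.marker ^= 1;
    p.savedBits = 0;
    for (std::size_t s = 0; s < kSectors; ++s) {
        std::uint8_t& last = p.bytes[(s + 1) * kSector - 1];
        p.savedBits |= static_cast<std::uint16_t>((last & 1u) << s);
        last = static_cast<std::uint8_t>((last & ~1u) | p.marker);
    }
}

// Every sector must carry the same marker; a mismatch means a torn (partial) write.
bool VerifyTornBits(const Page& p) {
    for (std::size_t s = 0; s < kSectors; ++s)
        if ((p.bytes[(s + 1) * kSector - 1] & 1u) != p.marker) return false;
    return true;
}

// After the write completes: restore the real bits so readers see the right data.
void RemoveTornBits(Page& p) {
    for (std::size_t s = 0; s < kSectors; ++s) {
        std::uint8_t& last = p.bytes[(s + 1) * kSector - 1];
        last = static_cast<std::uint8_t>((last & ~1u) | ((p.savedBits >> s) & 1u));
    }
}
```

Between ApplyTornBits and RemoveTornBits the in-memory bytes are not the real page contents, which is why the write holds the EX latch in this case.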

Stalled/Stuck I/O: SQL Server 2000 SP4 added a warning that an I/O is taking too long and appears to be stuck or stalled. When an I/O request is posted (async), the post time is kept with the tracking information (sys.dm_io_pending_io_requests). The lazy writer checks these lists periodically, and if an I/O is still pending at the operating system level (FALSE == HasOverlappedIoCompleted) after 15 seconds have elapsed, the warning is recorded. Each file reports its number of stalls at most once every 5 minutes to avoid flooding the log.
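The stall check can be modeled in a few lines of C++. This is a sketch under stated assumptions: PendingIo, StallMonitor and Scan are hypothetical names, the completed flag stands in for HasOverlappedIoCompleted, and the reported count is simply the number of requests stalled at scan time rather than SQL Server's exact accounting.

```cpp
#include <cassert>
#include <chrono>
#include <map>
#include <string>
#include <utility>
#include <vector>

using Clock = std::chrono::steady_clock;

struct PendingIo {
    std::string file;
    Clock::time_point posted;  // time the async request was posted
    bool completed;            // stands in for HasOverlappedIoCompleted()
};

class StallMonitor {
public:
    // Called periodically; returns (file, stalled count) for files that should log now.
    std::vector<std::pair<std::string, int>> Scan(const std::vector<PendingIo>& pending,
                                                  Clock::time_point now) {
        using namespace std::chrono_literals;
        std::map<std::string, int> stalls;
        for (const auto& io : pending)
            if (!io.completed && now - io.posted >= 15s) ++stalls[io.file];

        std::vector<std::pair<std::string, int>> report;
        for (const auto& [file, count] : stalls) {
            auto it = lastReport_.find(file);
            // Rate limit: at most one report per file every 5 minutes.
            if (it == lastReport_.end() || now - it->second >= 5min) {
                lastReport_[file] = now;
                report.emplace_back(file, count);
            }
        }
        return report;
    }

private:
    std::map<std::string, Clock::time_point> lastReport_;
};
```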

A normal I/O request should complete in ~15ms or less, so 15 seconds is far too long. For example, if an I/O request is stalled for 30 seconds and the query timeout is 30 seconds, the query can time out. If the stalled I/O request is for the log, it can cause unwanted blocking.

If you are seeing these warnings you need to double check the I/O sub-system and use SQLIOSIM.exe to help narrow the problem. It can be anything from the configured HBA queue depth, multi-path failover detection mechanism, virus scanners or other filter drivers.

The Microsoft Platforms team can use ETW tracing facilities to help track down the source of the stalled/stuck I/O request.

In some situations a stall can result in a scheduler hang (17883), for example. The slide shows a stack from a stuck I/O request. Remember that SQL Server I/O is mostly async, so the call to WriteFile should be fast, just a hand-off. However, if a filter driver gets stuck before the I/O is considered posted at the I/O Request Packet (IRP) level, the kernel call appears as if the I/O request were sync. This is bad because the worker posting the async I/O is stuck in kernel mode and the logical scheduler is not progressing. SQL Server detects this, issues the 17883 warning and captures a mini-dump.

This is a myth that I have worked hard to dispel. Some inaccurate wording in Books Online led people to believe that SQL Server has special threads per file. This is NOT the case. When doing I/O, each worker is free to post and process I/O, so there is no thread-based, per-file I/O throttle. SQL Server will do as much I/O as necessary across any worker thread.

There are some things that change this behavior.

The first is create database. Before instant file initialization was added to SQL Server, data files were zeroed (zeros written to every byte in the file) when the file was created. To do this faster, a set of workers is used. SQL Server 2005 and 2008 still use the concept of workers aligned per volume: when you create a database, these workers create the files. Zeroing is no longer needed for data files as long as instant file initialization is enabled; if it is not, the workers zero the contents of the data files. Log files are always zeroed. So during database creation each volume (usually by drive letter) gets a worker to create the files assigned to that volume. Once the files are created, any worker can do I/O on them.

Also, the little-used I/O affinity feature creates special workers. The I/O affinity workers are assigned to specific CPUs for processing I/O requests. Whenever a standard worker would normally post an I/O request, the request is intercepted and put on a list for the I/O affinity worker. The only job of the I/O affinity worker is to process the request queues (a read and a write queue) by posting the requests (async I/O still applies) and processing their completion routines on a dedicated CPU. I/O affinity requires an extreme amount of I/O flowing on the system (enough to keep a dedicated CPU busy with just posting and completion activities). This is very rare, and I have only seen a couple of servers even approach the need for it.

When I see someone evaluating I/O affinity, I ask them to first look at the queries producing the I/O. What I find 99% of the time is that the queries need to be tuned or indexes added: SQL Server has chosen a large sort or parallel operation, so the I/O activity is heavy when it does not need to be. Not only would I/O affinity be a poor choice, but that volume of I/O is also turning over the pages in data cache and hurting overall performance.

One other issue with I/O affinity is that the log writer thread is placed on a separate scheduler. Normally the log writer is located on scheduler 0 or 1 (based on start-up). When a log write is triggered, the log writer is signaled to handle the activity, which means the log writer shares the scheduler with other workers. Since all it does is post the log I/O and handle its completion, this is lightweight, and on 99% of systems I have never seen it be an issue. Using I/O affinity can move the log writer to a dedicated scheduler, but I have not seen a system where this changed log write behavior. The critical path of a log write is the I/O, and it is 100 times slower than the CPU work needed by the log writer.

The lazy writer is responsible for keeping the free lists prepared for the system, monitoring and handling the commit targets and such.

The latest builds of SQL Server have moved to Time of Last Access (TLA) algorithms to determine which buffers to eject from cache. The older algorithms were based on a reference counting scheme and TLA has been found to be more efficient.

The lazy writer works like a clock. It starts its sweep hand at buffer position zero and ticks every time it runs. A tick is usually 16 buffers. Looking over each buffer in the tick, it finds those below the TLA threshold and removes them from data cache. If the page is clean, the buffer can simply be put on the free list. If the page is dirty, WriteMultiple is used to FlushToLSN and write the page to the database file. Once the write is complete, the buffer is placed on the free list for use.

There are routines to help the lazy writer (the routine is HelpLazyWriter). As each worker allocates memory, if conditions show the free list is too low, that worker can perform a lazy writer tick itself. This makes SQL Server very adaptable to changes in the buffer pool, as lazy write activity can be performed by dozens of workers when needed.

Once the clock hand has reached the committed level it is reset to the zero position and the tick behavior continues.
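The clock behavior described in the last three paragraphs can be modeled with a small C++ class. This is a toy under stated assumptions: Buf, LazyWriterClock and Tick are hypothetical names, a TLA is just an integer timestamp, and a counter stands in for the WriteMultiple call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Buf {
    std::uint64_t lastAccess = 0;  // time of last access (TLA)
    bool dirty = false;
    bool inUse = true;             // false once the buffer is on the free list
};

class LazyWriterClock {
public:
    explicit LazyWriterClock(std::size_t tickSize = 16) : tickSize_(tickSize) {}

    // Runs one tick over tickSize_ buffers; returns how many dirty buffers were written.
    std::size_t Tick(std::vector<Buf>& pool, std::size_t committed, std::uint64_t tlaThreshold,
                     std::vector<std::size_t>& freeList) {
        assert(committed > 0 && committed <= pool.size());
        std::size_t writes = 0;
        for (std::size_t i = 0; i < tickSize_; ++i) {
            if (hand_ >= committed) hand_ = 0;  // reached the committed level: back to zero
            Buf& b = pool[hand_];
            if (b.inUse && b.lastAccess < tlaThreshold) {
                if (b.dirty) {
                    ++writes;  // stands in for WriteMultiple (FlushToLSN, then write)
                    b.dirty = false;
                }
                b.inUse = false;
                freeList.push_back(hand_);
            }
            ++hand_;
        }
        return writes;
    }

    std::size_t Hand() const { return hand_; }

private:
    std::size_t tickSize_;
    std::size_t hand_ = 0;
};
```

Because Tick is an ordinary member call, any worker holding the object could run a tick, which is the essence of the HelpLazyWriter idea.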

On a hardware-based NUMA system (soft NUMA sees memory as a single pool and SQL Server uses a single lazy writer), each node has a lazy writer that maintains the portion of the buffer pool assigned to that node. SQL Server divides max server memory by the number of nodes, and each node is treated equally. Since the goal of NUMA memory is to keep memory access local rather than remote, a lazy writer on each node is used to maintain that goal. Keeping the free lists on the local node populated with local buffers increases performance. Queries running on a node keep their activity within that node as much of the time as possible, and they won't flood other nodes with data cache populations. For example, if you start a DBCC CHECKDB you only want the local node to service the data cache requests. You don't want every node to get populated with the pages the DBCC reads, since the other nodes may hold pages that are more fundamental for servicing queries. With a lazy writer per node the query is commonly contained within the node, and the entire data cache does not become polluted (in a sense).

The I/O stall warnings are always checked and handled by the lazy writer on node #0. With some of the RTM builds of SQL Server 2005 this can cause false warnings on other nodes if the RDTSC timers are not in sync. You can read about the RDTSC issues in my blog posts; move to later builds, which use the multimedia timer and avoid the false reports.

Because the best performance comes from using local memory on the local node, checkpoint has been enhanced. Checkpoint still sweeps over the BUF structures, but it assigns writes of dirty buffers to the lazy writers on the respective nodes. This lets checkpoint keep write activity local while scaling up by using the lazy writer worker(s) on each node to assist it.

I briefly touched on checkpoint and recovery interval on previous slides. Checkpoint is commonly triggered by the changes in a database exceeding the target recovery interval. To simplify the algorithm, each log record is estimated to take ## of ms to recover, assuming crash recovery and a cold data cache. When a log record is created, the count of records since the last checkpoint request is incremented, and when the number of log records * estimated recovery time > recovery interval, a checkpoint request for the database is enqueued.
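The trigger arithmetic is simple enough to sketch directly. In this C++ model the per-record recovery cost is left as a parameter, msPerLogRecord, because its actual value is not given here; CheckpointTrigger and OnLogRecord are hypothetical names, and the recovery interval is expressed in milliseconds for convenience.

```cpp
#include <cassert>
#include <cstdint>

struct CheckpointTrigger {
    double msPerLogRecord;      // estimated redo cost of one log record (assumed value)
    double recoveryIntervalMs;  // recovery interval expressed in ms
    std::uint64_t recordsSinceCheckpoint = 0;

    // Called as each log record is generated; returns true when a checkpoint
    // request for the database should be enqueued.
    bool OnLogRecord() {
        ++recordsSinceCheckpoint;
        if (recordsSinceCheckpoint * msPerLogRecord > recoveryIntervalMs) {
            recordsSinceCheckpoint = 0;  // count restarts from this checkpoint request
            return true;
        }
        return false;
    }
};
```

For instance, with a made-up estimate of 2 ms per record and a one-minute interval (60000 ms), the request is enqueued on the 30001st log record, the first count whose estimated recovery time exceeds the interval.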

Checkpoint can also be triggered by a backup or during shutdown of the service, for example. SQL Server does its best to have a clean database on startup (all transactions before the service shutdown were flushed to the log and data files). This avoids the need for crash recovery processing and allows the databases to come online faster.

Checkpoint has been adjusted many times over various builds to accommodate different systems and customer requests. The biggest change I have seen in checkpoint is the target mechanism. I was working with several customers where checkpoint would kick in and the I/O load would hurt overall system performance and concurrency. In SQL Server 2000 a change was prototyped and eventually shipped in the later service packs (I think it was first introduced in SP3, but I would have to check my history on this fact). Checkpoint times its I/O requests, and when latency grows larger than the latency target (~20ms) the number of outstanding checkpoint I/Os is reduced. The number of outstanding I/O (WriteMultiple) requests for checkpoint generally starts at 100. So checkpoint attempts to keep the I/O depth for its I/Os at 100 with less than 20ms response time.
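A minimal C++ sketch of the outstanding I/O target. The starting depth of 100 and the ~20ms latency target come from the text; the adjustment rule (halve on a slow write, add one on a fast write) is my own assumption for illustration, not SQL Server's formula, and CheckpointIoThrottle is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>

constexpr int kMaxOutstanding = 100;      // starting/maximum checkpoint I/O depth
constexpr double kLatencyTargetMs = 20.0; // latency target

class CheckpointIoThrottle {
public:
    // Feed the measured latency of each completed checkpoint write.
    void OnWriteCompleted(double latencyMs) {
        if (latencyMs > kLatencyTargetMs)
            target_ = std::max(1, target_ / 2);                // back off quickly (assumed)
        else
            target_ = std::min(kMaxOutstanding, target_ + 1);  // recover slowly (assumed)
    }
    // Checkpoint posts another WriteMultiple only while below the target depth.
    bool CanIssue(int outstanding) const { return outstanding < target_; }
    int Target() const { return target_; }

private:
    int target_ = kMaxOutstanding;
};
```

Starting a heavy file copy on the same drive would push latencies over 20ms and shrink Target(), which is the backing-off behavior described next.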

It is pretty neat to watch the checkpoint rate, start a heavy copy to the same drive, and watch checkpoint back down the number of outstanding requests to keep the I/O timing below the threshold. Letting the timing rise above the threshold can leave a significant page (say the topmost page in an index) EX latched for the duration of a slow write, causing PAGE_IO*_LATCH waits longer than expected. For example, this shows up as potential concurrency issues while checkpoint is running.

SQL Server 2005 and later versions expose the target timing as an option for manual checkpoint. You can issue a checkpoint on a database and specify that it should take 5 minutes, for example. The pace of the checkpoint is then adjusted to try to meet that target for the full checkpoint of the database. In the RTM release of SQL Server 2005, checkpoint did not throttle when it was ahead of pace, as long as the I/O response was acceptable. Later service packs altered the behavior, and checkpoint may throttle (sleep) to meet the target.
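One way to picture the pacing is a straight-line schedule: if the checkpoint has written more pages than the schedule calls for at this point, it sleeps until the schedule catches up. The linear schedule and the PaceDelay name are assumptions for this C++ sketch; the text does not say exactly how later service packs compute the sleep.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Returns how long checkpoint should sleep to stay on a linear schedule that
// finishes totalPages writes in targetDuration (assumed pacing model).
std::chrono::milliseconds PaceDelay(std::uint64_t pagesWritten, std::uint64_t totalPages,
                                    std::chrono::milliseconds elapsed,
                                    std::chrono::milliseconds targetDuration) {
    assert(totalPages > 0 && targetDuration.count() >= 0);
    // Time at which the schedule expects pagesWritten pages to be done.
    auto planned = std::chrono::milliseconds(
        static_cast<std::int64_t>(targetDuration.count() * pagesWritten / totalPages));
    return elapsed < planned ? planned - elapsed : std::chrono::milliseconds(0);
}
```

With a 300-second target, a checkpoint that is halfway through its pages after 100 seconds would sleep 50 seconds; one that is halfway after 200 seconds is behind and does not sleep.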

Why would I ever use the throttle mechanism? Continuous checkpoint is the answer. If you disable the recovery interval or set it to a very high value, automatic checkpoints are few and far between. If you establish a startup procedure, it can loop, issuing checkpoint with the target, and never stop. This would allow you to control database checkpointing on a continuous basis at a pace that suits your needs. NOTE: Disabling automatic checkpointing applies to ALL databases, so you must have a way to accommodate this with a startup stored procedure set or some job design. This is not recommended for general use or practice, but it is an option.

Older builds need a fix and trace flag to make lazy writer and checkpoint work better when both are running so the I/O sub-system is not flooded with requests.

Notice that the slide points out the checkpoint queue. The automatic checkpoint process works from a queue, so only one database is executing an automatic checkpoint at any given point in time. There is also a high-level, per-database latch that serializes checkpoints for the same database. If an automatic checkpoint is executing, it holds the EX latch for the database checkpoint process, and a manual checkpoint waits to acquire the latch.

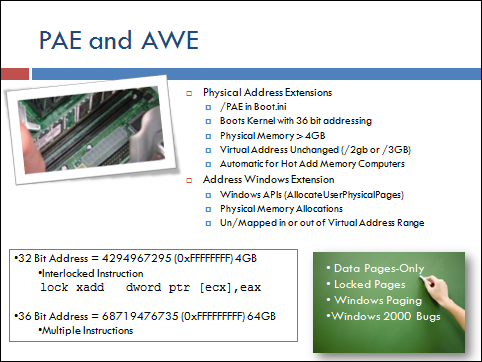

The PAE and AWE issue seems to confuse folks, and on top of that, why would it apply to an I/O conversation?

First of all you need to distinguish the PAE behavior of the operating system from the AWE API set. The boot.ini /PAE is what tells the 32 bit operating system to boot the extended kernel that handles 36 bit addressing of memory and allows access to more than 4GB of RAM. This is independent of AWE usage.

Notice that I said independent of AWE, because the AWE APIs can be used on a system that does not have /PAE enabled or on a 64 bit operating system. AWE is the ability to allocate physical RAM, using the AWE API set, that is not managed by the Windows working set, page file and other memory operations. For us old timers, you can think of it like the extended memory we used to have in DOS. The application is fully responsible for the AWE allocations. The operating system cannot trim them, page them or otherwise touch them. As you will find from various sources, these allocations do not even show up in common Task Manager output.

The key for AWE for SQL Server is that the only allocations that can be mapped and unmapped into the extended AWE address space are data pages. So using AWE on 32 bit extends only the data cache. It does not extend the procedure cache or other allocations for the SQL Server. Thus it can reduce the physical I/O requirement once data cache is populated.

To access a page that has been mapped out of the common virtual address range (2GB or 3GB), the AWE APIs are used to map/unmap the area, and access to the data is granted again.

Why would SQL Server allow AWE on 64 bit? Well, it really doesn't. The sp_configure value is a no-op and should not be present. Instead, if all the appropriate options are enabled for locked pages, the buffer pool uses the AWE API to do its allocations. This is because AWE allocates physical pages for SQL Server that the Windows memory manager can't touch for paging, working set trim and similar operations. So you can think of it as under-the-covers use of the API to provide locked page behavior.

Why not use VirtualLock? If you read the VirtualLock documentation carefully, it is only a hint to the operating system; if a working set trim is needed it is an all-or-nothing activity, and we don't want SQL Server to get a 100% working set trim.

Note that on systems that are enabled for Hot Add Memory the /PAE behavior may be automatically enabled by the operating system.

NOTE: Windows 2000 and early Windows 2003 builds had several issues with /PAE behavior, and the QFEs are needed to prevent corruption of data pages and termination of the SQL Server service.

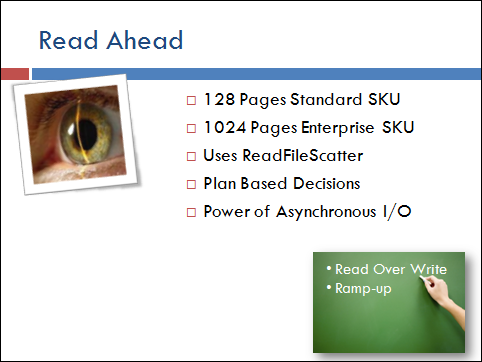

I have touched on read ahead behavior and how it drives the disk queue length > 2 and how ReadFileScatter is used to reduce the number of I/O requests and allow larger I/O transfers. I want to reiterate these facts, how the plan drives the decision and the power of async I/O.

Specifically point out that the read ahead depth for Enterprise SKUs is 1024 pages instead of 128 pages.

Mention that ramp-up is an 8-for-1 read during the initial growth of the buffer pool (until the commit target is reached for the first time) to help populate the buffer pool from a cold to a warm state faster.

Read-Over-Write: This is a behavior that many hardware manufacturers did not expect, but it is used by SQL Server and the operating system page file routines. All the manufacturers I am aware of now test for the behavior to prevent stale reads.

While doing a read-ahead, SQL Server wants to minimize the number of I/O requests. Let's say we need to read in pages 1 through 8, but we find page 5 is already hashed in the data cache. We don't want to issue one read for pages 1, 2, 3 and 4 and another for pages 6, 7 and 8. Instead we issue a single read for pages 1 through 8 and ignore page 5. The in-memory buffer for page 5 is only used during the physical read; it is not hashed (a different buffer already supports page 5). When the read completes, that buffer is put directly onto the free list and ignored.
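The read-ahead planning above can be sketched in C++: one request covers the whole range, and pages that are already hashed still receive data from the physical read but their buffers are discarded. ReadAheadPlan and PlanReadAhead are hypothetical names for illustration only.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <vector>

struct ReadAheadPlan {
    std::uint32_t firstPage;
    std::uint32_t pageCount;               // size of the single read request
    std::vector<std::uint32_t> toHash;     // buffers hashed into the data cache on completion
    std::vector<std::uint32_t> discarded;  // already cached: buffer goes straight to the free list
};

ReadAheadPlan PlanReadAhead(std::uint32_t first, std::uint32_t last,
                            const std::set<std::uint32_t>& hashed) {
    assert(first <= last);
    ReadAheadPlan plan{first, last - first + 1, {}, {}};
    for (std::uint64_t p = first; p <= last; ++p) {
        const auto page = static_cast<std::uint32_t>(p);
        if (hashed.count(page) != 0)
            plan.discarded.push_back(page);  // read over it, but never hash this copy
        else
            plan.toHash.push_back(page);
    }
    return plan;
}
```

Because the page 5 buffer is never hashed, any mix of old and new sectors it receives during a concurrent write is harmless to SQL Server, which is the point of the next paragraph.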

A read-over-write can occur if a read-ahead is taking place at the same time the hashed buffer (page 5 in our example) is being written to the data file. At this point the data returned for page 5 from the read could be part old and part new. SQL Server knows this and is going to ignore the page 5 read anyway, so it does not matter.

The problem we saw several years ago is that the hardware cache was not expecting this and would not properly invalidate its cached data with the new data. In some cases none of the old sectors for the page were removed from the hardware cache, and in others only some were.

When only some sectors are stale, we can detect checksum and torn bit failures. When the stale sectors all remain intact, the checksum or torn bit protection is valid for the previous version of the page. So extend the page 5 example and assume the write was a lazy write, so the page is removed from data cache. The next read pulls in the previous version of the page. More concretely: I just deposited $500 in my bank account and it got lost (lost write/stale read), because the next read never shows the transaction that was successfully committed.

When you extend this to replication or log shipping and restore you can get strange errors that the LSN on the page is not expected because the last change the log knows about is not seen on the page. (lost write/stale read).

The first test added to SQLIOStress (later SQLIOSim.exe) was for stale read/lost write scenarios.

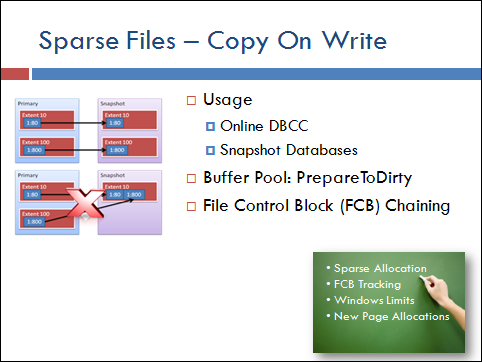

The introduction of database snapshots is nice for reporting as well as DBCC activity.

When a snapshot database is created (internally for DBCC, or externally with CREATE DATABASE ... AS SNAPSHOT OF), copy-on-write behavior is established in the buffer pool. As I discussed earlier, to dirty a page the EX latch has to be acquired, and the copy-on-write decision can be made at this central location. If the page is going to be dirtied, a check is made to see whether the page has already been copied to the snapshot(s). If not, a write to the snapshot takes place before the change to the page can be made.

This changes the behavior of a page modification. The first time a page is dirtied after the snapshot is created, an I/O must complete before the modification can proceed, in addition to acquiring the EX latch. For this simple reason, the snapshot's I/O performance needs to match that of a high speed SQL Server database file. Don't place the snapshot on a slower drive, as it has a direct impact on production throughput.

To handle snapshots, SQL Server's internal file control blocks (FCBs) are chained to the parent database. This allows a copy-on-write to know which file(s) are associated with one or more snapshots set up on the database. It also allows queries on the snapshot to find the parent file.

When a select is executed on the snapshot the pages are retrieved from the snapshot files. If the page has not been copied to the snapshot (never dirtied on the parent database) the request is sent to the parent file control block to retrieve the page from the parent database. This allows the snapshot to remain sparse as it only has to maintain pages that have been dirtied on the parent database files.
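Copy-on-write and the read fallback to the parent can be modeled together in a short C++ class. This is a toy sketch: Snapshot, BeforeParentModify and Read are hypothetical names, page images are strings for readability, and std::map stands in for both the sparse snapshot file and the parent database file.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>

class Snapshot {
public:
    // Called where the EX latch is acquired to modify a parent page.
    // Returns true if this modification needed a copy (an extra write I/O first).
    bool BeforeParentModify(std::uint32_t pageId,
                            const std::map<std::uint32_t, std::string>& parent) {
        if (copied_.count(pageId)) return false;  // already preserved in the snapshot
        copied_[pageId] = parent.at(pageId);      // write the pre-change image first
        return true;
    }

    // A SELECT against the snapshot: copied pages come from the snapshot file,
    // everything else is redirected to the parent file.
    std::string Read(std::uint32_t pageId,
                     const std::map<std::uint32_t, std::string>& parent) const {
        auto it = copied_.find(pageId);
        return it != copied_.end() ? it->second : parent.at(pageId);
    }

    // Physical (sparse) usage: only pages dirtied in the parent are stored.
    std::size_t PagesStored() const { return copied_.size(); }

private:
    std::map<std::uint32_t, std::string> copied_;
};
```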

Since the snapshot is a point-in-time operation the file sizes are fixed but marked with the sparse attribute so the physical space required is only for those pages dirtied and copied.

We have found a couple of NTFS bugs related to sparse files, so refer to the PSSSQL blog for more sparse file details and the latest patching information. There are also NTFS limitations on how large a sparse file can be, which may require you to size the database files to accommodate the sparse file activity.

New page allocations in the parent are first copied to the snapshot. Consider a page that was allocated when the snapshot was created, after which the table is truncated: the page is not copied to the snapshot, because the page itself did not change, only the allocation information. So when the page is going to be reused for a new allocation in the parent database, it must first be copied to the snapshot. While you might not expect new allocations to cause physical usage in the snapshot, they will.

DBCC used to reverse engineer the log records to fix up the fact table information that changed during the DBCC scan. It now uses an internal snapshot (a secondary stream on the data file). This gives DBCC a stable, point-in-time view of the database to build the fact tables from. It also means the volume containing the database file needs enough free space for all the pages that can be dirtied for the duration of the snapshot. If there is not enough space, DBCC must be run WITH TABLOCK to block activity while the fact tables are being built. The internal snapshot is removed when the DBCC completes. Crash recovery also removes any internal snapshots in the event that DBCC was active when a crash was encountered.

When you work with Microsoft CSS on corruption issues, the term scribbler is often used. The idea is like a child coloring outside the lines of the picture. In terms of data cache and SQL Server memory, it indicates that a component is making changes to memory that it does not own. For example:

```cpp
BYTE * pData = new BYTE[10];

delete [] pData;             // Memory can be reused for something else; we no longer own it

memcpy(pData, pBuffer, 10);  // Scribbler: just wrote to memory it does not own. Sometimes this raises
                             // an exception (usually an AV); other times it causes damage that is not
                             // seen until the real owner tries to use the memory.
```

DANGER: If this memory is a database page in cache, it could be flushed to disk with the damage, resulting in permanent corruption.

SQL Server uses the checksum behavior to help detect such a problem. When checksum PAGE_AUDIT is enabled, the constant page checks are also enabled. The lazy writer checks pages in memory (as it handles clock ticks) and recalculates the checksum for pages that have not been dirtied. If the checksum is not correct, an error is logged and the page is removed from data cache, signaling that a scribble has taken place.

If you suspect scribbling first check all 3rd party modules loaded in the address space. Many times a DLL or COM object is not thread pool safe and will be the source of the issue.

Tracking scribblers down can be difficult. This is where the latch enforcement trace flag comes into play. With the trace flag enabled, SQL Server keeps the page protected with VirtualProtect as READ_ONLY and toggles it to READ_WRITE only when a modification latch is acquired. When the modification latch is released, the protection is set back to READ_ONLY. Then if a scribbling code line attempts to write to the page, it encounters an exception that is captured by the SQL Server stack dump logic, and a mini-dump is generated showing the source of the issue. This works well for database pages but not for stolen memory, as stolen memory is always in a READ_WRITE state.

More frequent DBCC checks and the page audit trace flag can help track down data page scribblers. At times, working from a backup and the log backups can replay the issue and help track down the problem as well. SQLIOSIM.exe should always be used when corruption is at play, to help rule out the I/O sub-system's basic behaviors.

Stale Read: This has been a common problem with firmware and hardware caches that did not expect read-over-write behavior. As I discussed in the read-ahead section, this can lead to all kinds of bad behavior. Starting with SQL Server 2000 SP4, we added a hash table that checks the LSN on the page against the one in the write hash table when a page is read in and hashed into the buffer pool. This hash table is limited to the last ## of writes, but it is designed to catch a write followed by a read of that same page that returns the wrong LSN.
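A minimal C++ sketch of the stale read check, assuming the table remembers the LSN of the last N page writes. N is a constructor parameter because the real size is not given here, and RecentWriteTable, OnWrite and CheckRead are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <unordered_map>
#include <utility>

class RecentWriteTable {
public:
    explicit RecentWriteTable(std::size_t capacity) : capacity_(capacity) { assert(capacity > 0); }

    // Record a completed page write and the LSN that was written.
    void OnWrite(std::uint32_t pageId, std::uint64_t lsn) {
        order_.push_back({pageId, lsn});
        lsnByPage_[pageId] = lsn;
        if (order_.size() > capacity_) {
            auto [oldPage, oldLsn] = order_.front();  // oldest remembered write
            order_.pop_front();
            auto it = lsnByPage_.find(oldPage);
            // Forget the page only if no newer write of it is still in the window.
            if (it != lsnByPage_.end() && it->second == oldLsn) lsnByPage_.erase(it);
        }
    }

    // Called when a page is read and hashed; false means an older image came back.
    bool CheckRead(std::uint32_t pageId, std::uint64_t lsnOnPage) const {
        auto it = lsnByPage_.find(pageId);
        return it == lsnByPage_.end() || it->second == lsnOnPage;
    }

private:
    std::size_t capacity_;
    std::deque<std::pair<std::uint32_t, std::uint64_t>> order_;
    std::unordered_map<std::uint32_t, std::uint64_t> lsnByPage_;
};
```

Pages that have fallen out of the window can no longer be checked, which is the trade-off of keeping the table bounded.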

SQL Server 2008 has also extended protections to the sort buffers in tempdb to better catch scribbles and stale read behaviors using similar design concepts.

Bit Flip: You may also hear the term bit-flip used to describe the type of corruption found. This is when a value is expected to be ## but, looking at the binary representation, it is off by only one bit: a bit has been flipped from 1 to 0 or 0 to 1. This is often a scribbler scenario, but we have also seen hardware issues. For example, in one dump the ESP register was set to an ODD offset. This is not correct, so we knew there was a CPU problem on the machine. We set the affinity mask for the SQL Server schedulers to see where the error kept occurring, which helped identify the faulty CPU.

Bit flips are also common with bad reference counting. An AddRef or Release usually leads to an InterlockedIncrement or InterlockedDecrement (changing the counter by 1), and when applied through a stale object pointer these changes can look like bit flips.
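A quick way to classify a suspected bit flip is to XOR the expected and observed values and count the set bits. This small C++ helper (IsSingleBitFlip is a hypothetical name) also shows why a reference count change can masquerade as a flip: going from 4 to 5 differs in exactly one bit, while going from 7 to 8 differs in four.

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>

// True when the observed value differs from the expected value in exactly one bit.
bool IsSingleBitFlip(std::uint64_t expected, std::uint64_t observed) {
    return std::bitset<64>(expected ^ observed).count() == 1;
}
```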

New additions to extend checksum to the log as well as backup media help protect your SQL Server against corruption.

Other Blog Content

•SQL Server Urban Legends Discussed

http://blogs.msdn.com/psssql/archive/2007/02/21/sql-server-urban-legends-discussed.aspx

•How It Works: SQL Server Checkpoint (FlushCache) Outstanding I/O Target

http://blogs.msdn.com/psssql/archive/2008/04/11/how-it-works-sql-server-checkpoint-flushcache-outstanding-i-o-target.aspx

•How It Works: SQL Server Page Allocations

http://blogs.msdn.com/psssql/archive/2008/04/08/how-it-works-sql-server-page-allocations.aspx

•How It Works: Shapshot Database (Replica) Dirty Page Copy Behavior (NewPage)

http://blogs.msdn.com/psssql/archive/2008/03/24/how-it-works-shapshot-database-replica-dirty-page-copy-behavior-newpage.aspx

•How It Works: SQL Server 2005 I/O Affinity and NUMA Don't Always Mix

http://blogs.msdn.com/psssql/archive/2008/03/18/how-it-works-sql-server-2005-i-o-affinity-and-numa-don-t-always-mix.aspx

•How It Works: Debugging SQL Server Stalled or Stuck I/O Problems - Root Cause

http://blogs.msdn.com/psssql/archive/2008/03/03/how-it-works-debugging-sql-server-stalled-or-stuck-i-o-problems-root-cause.aspx

•How It Works: SQL Server 2005 Database Snapshots (Replica)

http://blogs.msdn.com/psssql/archive/2008/02/07/how-it-works-sql-server-2005-database-snapshots-replica.aspx

•How It Works: File Stream the Before and After Image of a File

http://blogs.msdn.com/psssql/archive/2008/01/15/how-it-works-file-stream-the-before-and-after-image-of-a-file.aspx

•Using SQLIOSim to Diagnose SQL Server Reported Checksum (Error 824/823) Failures

http://blogs.msdn.com/psssql/archive/2008/12/19/using-sqliosim-to-diagnose-sql-server-reported-checksum-error-824-823-failures.aspx

•How to use the SQLIOSim utility to simulate SQL Server activity on a disk subsystem

http://support.microsoft.com/kb/231619

•Should I run SQLIOSim? - An e-mail follow-up from SQL PASS 2008

http://blogs.msdn.com/psssql/archive/2008/11/24/should-i-run-sqliosim-an-e-mail-follow-up-from-sql-pass-2008.aspx

•What do I need to know about SQL Server database engine I/O?

http://blogs.msdn.com/psssql/archive/2006/11/27/what-do-i-need-to-know-about-sql-server-database-engine-i-o.aspx

•SQLIOSim is "NOT" an I/O Performance Tuning Tool

http://blogs.msdn.com/psssql/archive/2008/04/05/sqliosim-is-not-an-i-o-performance-tuning-tool.aspx

•How It Works: SQLIOSim - Running Average, Target Duration, Discarded Buffers ...

http://blogs.msdn.com/psssql/archive/2008/11/12/how-it-works-sqliosim-running-average-target-duration-discarded-buffers.aspx

•How It Works: SQLIOSim [Audit Users] and .INI Control File Sections with User Count Options

http://blogs.msdn.com/psssql/archive/2008/08/19/how-it-works-sqliosim-audit-users-and-ini-control-file-sections-with-user-count-options.aspx

•Understanding SQLIOSIM Output

http://sqlblog.com/blogs/kevin_kline/archive/2007/06/28/understanding-sqliosim-output.aspx

Additional Learning Resources

•Inside SQL Server 7.0 and Inside SQL Server 2000, …

Written by Kalen Delaney

•The Guru’s Guide to SQL Server Architecture and Internals – ISBN 0-201-70047-6

Written by Ken after he joined Microsoft SQL Server Support

Many chapters reviewed by developers and folks like myself

•SQL Server 2005 Practical Troubleshooting ISBN 0-321-44774-3 – Ken Henderson

Authors of this book were key developers or support team members

Cesar – QP developer and leader of the QP RedZone with Keithelm and Jackli

Sameert – Developer of UMS and SQLOS Scheduler

Santeriv – Developer of the lock manager

Slavao – Developer of the SOS memory managers and engine architect

Wei Xiao – Engine developer

Bart Duncan – long time SQL EE and now developer of the Microsoft Data Warehouse – performance focused

Bob Ward – SQL Server Support Senior EE

•Advanced Windows Debugging – ISBN 0-321-37446

Written by Microsoft developers – excellent resource

•Applications for Windows – Jeffrey Richter

Great details about Windows basics

Bob Dorr – Principal SQL Server Escalation Engineer