Frequently, we see our customers using table variables in their stored procedures and batches and experience performance problems.

In general, these performance problems are introduced because of large number of rows being populated into the table variable.

Table variables were introduced in SQL Server 2000 with intention to reduce recompiles. Over time, it gained popularity. Many users use to to populate large number of rows and then join with other tables.

When the batch or stored procedure containing the table variable is compiled, the number of rows of the table variable is unknown. Therefore, optimizer has to make some assumptions. It estimates very low number of rows for the table variable. This can cause inefficient plan. Most of the time, a nested loop join is used with the table variable as outer table. If large number of rows exist in the table variable, this results in inner table be executed many times.

So if you anticipate large number of rows to be populated to the table variable, you should not use it to begin with unless you don’t intend to join with other tables or views.

If you have large number of rows to be populated into the table variable, consider this solution. You can add option recompile to the statement that involves the table variable joining with other tables. By doing this, SQL Server will be able to detect number of rows at recompile because the rows have already been populated. This option is only available for SQL Server 2005 and beyond.

Additionally, you can also use temp tables which can provide better statistics.

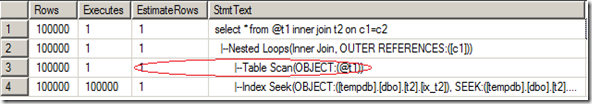

The script below demonstrate the cardinality issue and solution. I re-arranged the execution plan here. Note that the EstimateRows for @t1 is 1 row but in fact 100000 rows were populated into the table. The one that has option recompile has accurate cardinality estimate.

Execution plan without option recompile

Execution plan with option recompile

/******************************************************

1. create a permenant table t2 and insert 100,000 rows

*******************************************************/

set statistics profile off

go

use tempdb

go

if OBJECT_ID ('t2') is not null

drop table t2

go

create table t2 (c2 int)

go

create index ix_t2 on t2(c2)

go

--insert 100,000 rows into the perm table

set nocount on

begin tran

declare @i int

set @i = 0

while @i < 100000

begin

insert into t2 values (@i)

set @i = @i + 1

end

commit tran

go

--update stats

update statistics t2

go

/********************************************************

2. join permantant table with table variable

the table variable gets 100,000 rows inserted

then it is joined to t2

@t1 gets 1 rows estimate

it ends up with nested loop join

*********************************************************/

set nocount on

declare @t1 table (c1 int)

begin tran

declare @i int

set @i = 0

while @i < 100000

begin

insert into @t1 values (@i)

set @i = @i + 1

end

commit tran

set statistics profile on

select * from @t1 inner join t2 on c1=c2

go

set statistics profile off

go

/****************************************************

3. solution

use stmt level recompile

******************************************************/

declare @t1 table (c1 int)

set nocount on

begin tran

declare @i int

set @i = 0

while @i < 100000

begin

insert into @t1 values (@i)

set @i = @i + 1

end

commit tran

set statistics profile on

select * from @t1 inner join t2 on c1=c2 option (recompile)

go

set statistics profile off

go