First published on MSDN on Dec 11, 2012

This issue was puzzling until we stepped through the code and studied it in some detail. The report was “SQL Server won’t use the physical memory I expect it to use.”

Scenario: SQL Server 2008 R2, 32GB RAM - SQL only using ~22GB of total memory and won’t use anymore.

Let me try to explain what we found.

|

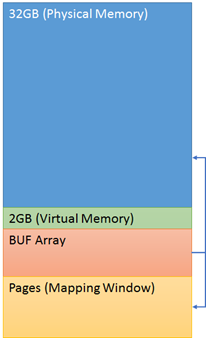

This is a high level diagram of the SQL Server process when started on a 32GB system.

The BUF array is a set of structures that point to associated 8K memory blocks. The blocks stored above the 2GB, virtual memory range, are the AWE buffers; which can only be data pages. The blocks stored in the mapping window can be data pages or stolen memory maintained by SQL Server. When SQL Server needs to access a data page stored in the AWE memory range it performs a mapping operation. Think of this like a page file swap. A block from the mapping window is exchanged with a block in the AWE range allowing SQL Server to access the page. |

| Formula Basics and Mapping Window |

The default behavior of SQL Server is to maintain a large mapping window, similar to the SQL Server 2000 design.

The formula is strait-forward enough. Take the physical memory you want to address, divide it by 8K (SQL Server data page size) to determine how many BUF structures are required to track those pages. Now take the virtual address range (2GB) and subtract overhead for thread stacks, (-g default 256MB) parameter, and images to determine the virtual address space remaining. Take the remaining virtual address space and subtract the desired size of the BUF array to determine the maximum mapping window size. Note: There are checks in the code to make sure reasonable boundaries are maintained during the calculations. The mapping window is important because too small of mapping window causes high levels of memory swaps and can reduce performance. However, setting aside to much physical memory can result in reduced performance as well. This is a delicate balancing act. Monitoring the dbcc memorystatus (Visible) value can help you understand the mapping window size. Combining this with several performance counters, including the AWE maps and unmaps/sec counters, while comparing overall application performance is a way to tune your system. |

|

SQL 2000 was exactly as I have described the formula allowing all the memory to be addressed in the AWE range.

Calculate the tracking buffers needed then size of the BUF array and mapping window. |

|

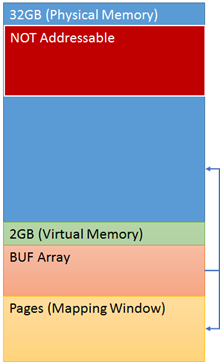

SQL 2005 and newer versions of SQL Server had to make a behavior choice.

The size of the BUF structure increased in SQL 2005 and newer versions. This means if you applied the same formula the mapping window would be smaller. (I.E. The same formula needs to use a larger BUF size so the mapping window can’t be as large.) Let me give you an example. Assume you need to track 100, 8K blocks and the overhead for the SQL 2000 BUF is 40 bytes and SQL 2005 and later it is 64 bytes ( not actual sizes, example only .) 100 * 40 = 4000 bytes 100 * 64 = 6400 bytes To track the same number of 8K buffers the BUF array has to increase by 2400 bytes and the mapping window has to shrink by 2400 bytes. The decision was made to DEFAULT to the SAME MAPPING WINDOW size as SQL 2000 and NOT increase the overall BUF array size. This means SQL Server prefers the larger mapping window vs addressing all RAM when on a 32 bit SKU using AWE memory. The example looks like the following. 100 * 40 = 4000 bytes (Same SQL 2000 BUF array size) 400 / 64 = 62 BUFs instead of 100 BUFs. The BUF array is no longer able to track 38 of the 100 blocks. Thus, you may experience the situation where SQL Server Target memory appears to be deflated on your system and won’t increase as you expect it to. |

|

SQL Server provides a startup only, trace flag (

-T836

) that indicates you wish to maximize the use of physical RAM, at the expense of a smaller mapping window.

Note: Again, test carefully because reducing the mapping window may change your performance. The trace flag tells SQL Server to calculate the BUF size based on the SQL 2005 and newer version(s) BUF overhead. This allows the additional RAM to be tracked and used at the expense of the reduced mapping window. |

As you can see it all boils down to the maintenance of the mapping window. With careful testing and attention to your application performance you can determine the best option to maximize for your deployment(s).

Bob Dorr - Principal SQL Server Escalation Engineer

Jack Li – SQL Server Escalation Engineer

Updated Jan 15, 2019

Version 2.0mssql-support

Iron Contributor

Joined January 15, 2019

SQL Server Support Blog

Follow this blog board to get notified when there's new activity