More and more of businesses are either storing or manipulating some, if not all, of their data in the cloud. We learnt from our customers that they would prefer to leverage their existing on-premises ETL investment while seeking low cost solution to access and integrate data in the cloud.

https://msdn.microsoft.com/en-US/library/mt146770.aspx enables you to easily extend your SSIS workflow to use cloud technologies powered by Microsoft Azure, such as Azure Storage, HDInsight, and Machine Learning etc.

The rest of the article lists four types of canonical scenarios using the https://msdn.microsoft.com/en-US/library/mt146770.aspx to accomplish business needs common in hybrid data integration scenarios.

Extending SSIS with Cloud Compute Capability using HDInsight

Businesses nowadays often have lot of data and log from the web site and/or web services. Processing such large amount raw data is important to extract business insight such as web page usage patterns, customer preference prediction and more. However, it does not make too much sense cost-wise to move all of this data from cloud to on-premises before processing it with SSIS. It is more efficient to process these data on the cloud upfront before moving the business insight back to on-premises data warehouse for internal reporting.

With the HDInsight Task from the https://msdn.microsoft.com/en-US/library/mt146770.aspx , you can now orchestrate and create a Hadoop jobs on http://azure.microsoft.com/en-us/services/hdinsight/ as part of the SSIS workflow to process raw data on the cloud. You can now use SSIS as your main data orchestration pipeline for both on-premises and cloud transformation activities.

Here is how you can do that:

1) Creating an on-demand cluster using https://msdn.microsoft.com/en-US/library/mt146774.aspx .

2) Uploading a bunch of Hive scripts (*.hql) to the cluster storage using https://msdn.microsoft.com/en-US/library/mt146776.aspx .

3) Invoking all the Hive scripts by wrapping an https://msdn.microsoft.com/en-US/library/mt146771.aspx in the https://msdn.microsoft.com/en-US/library/ms187670(v=sql.120).aspx .

4) Deleting the cluster using https://msdn.microsoft.com/en-US/library/mt146778.aspx at the end.

Note: Using an on-demand HDInsight cluster is a practice to save cost from http://azure.microsoft.com/en-us/pricing/details/hdinsight/ , as it is costly to keep the cluster alive but idle. The tradeoff, however, is the overhead time on creating and deleting the cluster dynamically.

Using SSIS as Data Preparation and Masking for Cloud Compute

HDInsight Task from the https://msdn.microsoft.com/en-US/library/mt146770.aspx provides a new way to manipulate and transform data in the cloud. In order to get relevant business insight from the web raw data, it is common that businesses usually run the HDInsight processing with both raw web data and company data on-premises. It is important to prepare the on-premises data in certain format before consuming by HDInsight. It is also important to mask PII or sensitive data before moving the data to the cloud for HDInsight processing.

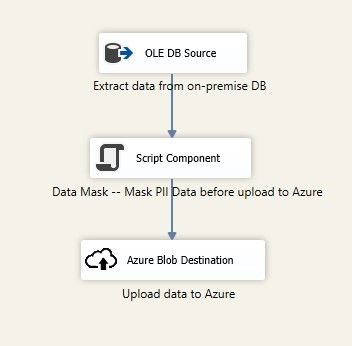

In such case, you can use the transformations in SSIS to prepare and mask the data that contains your customer information. Then, you can use https://msdn.microsoft.com/en-US/library/mt146772.aspx Blob Destination from the https://msdn.microsoft.com/en-US/library/mt146770.aspx to load the masked data into Azure Storage blob.

The SSIS package below uses a https://msdn.microsoft.com/en-us/library/ms137640(v=sql.120).aspx Component in its https://msdn.microsoft.com/en-us/library/ms140080(v=sql.120).aspx to transform the data before loading it to Azure blob.

The transformation runs following C# code snippet for each row of [CustomerName] column.

|

public override void Input0_ProcessInputRow(Input0Buffer Row) { string customerName = Row.CustomerName; Row.HashedCustomerName = MaskCustomerName(customerName); }

private string MaskCustomerName(string customerName) { //Add your data mask logic here } |

The prepared data can then be used in http://azure.microsoft.com/en-us/services/hdinsight/ for big data processing, and/or in http://azure.microsoft.com/en-us/services/machine-learning for prediction analytic.

Using SSIS to move Cloud Compute Result back to On-premises

Once you get the business insight data from the cloud compute services, such as http://azure.microsoft.com/en-in/services/stream-analytics/ , http://azure.microsoft.com/en-us/services/machine-learning and http://azure.microsoft.com/en-us/services/hdinsight/ , the next thing you would like to do is to move the business insight data back to on-premises for reporting or for additional processing.

In such case, you can use the https://msdn.microsoft.com/en-US/library/mt146775.aspx from the https://msdn.microsoft.com/en-US/library/mt146770.aspx to extract the data from Blob storage.

In the following example, the SSIS package uses a https://msdn.microsoft.com/en-US/library/ms187670(v=sql.120).aspx to run a https://msdn.microsoft.com/query/dev10.query?appId=Dev10IDEF1&l=en-US&k=k(sql11.dts.designer.dataflowtask.F1)&rd=true against each blob outputted from HDInsight Hive processing.

Inside the https://msdn.microsoft.com/en-us/library/ms140080(v=sql.120).aspx , the https://msdn.microsoft.com/en-US/library/mt146775.aspx extracts the data and passes it to a https://msdn.microsoft.com/en-us/library/ms141715(v=sql.120).aspx . The transformation helps identify Type 1 and Type 2 changes, which makes it a very useful component in SSIS to handle data upsert into data warehouse.

Data Archiving to Cloud Storage

Traditionally, businesses build data warehouse with extra secondary storage for backup and/or archival purpose, especially on transactional data that are required to be kept due to legal or audit reason. With the rapid growth of data size, cloud-based storage, such as http://azure.microsoft.com/en-in/services/storage/ , becomes popular mainly due to the benefit of cost and scalability.

The https://msdn.microsoft.com/en-US/library/mt146770.aspx helps you to easily create a SSIS package that migrates all your data into Azure blob for archiving purpose, using the https://msdn.microsoft.com/en-US/library/mt146772.aspx in the https://msdn.microsoft.com/en-us/library/ms140080(v=sql.120).aspx .

Sometimes you may just want to use Azure blob as a back-up storage, while keeping the data written into the original data store. In this case, you can slightly modify your SSIS package and add a https://msdn.microsoft.com/en-us/library/ms137701(v=sql.120).aspx to write another copy of the output data into https://msdn.microsoft.com/en-US/library/mt146772.aspx .

Summary

Building ETL data pipeline and workflow sometimes is just like building LEGO, you can build it with different patterns and possibilities. The https://msdn.microsoft.com/en-US/library/mt146770.aspx , now opens more possibilities for building different patterns of hybrid data pipeline.

Try it yourself

To build and run the scenarios by yourself, please download and install:

https://www.microsoft.com/en-us/download/details.aspx?id=42313

http://www.microsoft.com/en-us/download/details.aspx?id=47366