Part 4 of a 9-part series.

The Common Engineering Criteria (CEC) that I’ve talked about in previous posts have been instrumental in ensuring that the teams contributing to 2012 R2 met rigorous and specific criteria in areas like Manageability, Virtualization Readiness, Data Center & Enterprise Readiness, Reliability, Hardware Support, and Interoperability. The original idea behind the creation of the CEC back in the early 2000s was to drive consistency across all of the Microsoft Server workloads and applications – simplifying the experience of using multiple Microsoft solutions together, as well as driving down the total cost of owning and operating our solutions.

The benefit of the CEC is that it ensures all our enterprise solutions come ready to be deployed, operated, and managed “out-of-the-box” in the Microsoft Clouds. To get really specific, each and every workload team across Microsoft (e.g., all the roles in Windows Server, the Office Workloads, etc.) delivers the knowledge that instructs System Center how to operate that workload in the Microsoft Clouds. If you ever ask the question, “Which Cloud is best suited for running Microsoft workloads?” the answer should be clear – the Microsoft Clouds!

One of the best things about this 2012 R2 wave of products was how its unified planning and engineering milestones allowed teams from across Microsoft to work on different aspects of the release in parallel. This process brought together a genuinely amazing collection of solutions and value while leveraging the expertise of each team and each individual. This is a really important point to think about. If I were to ask who has the most knowledge and expertise in how Windows Server should be deployed and operated, the answer is simple: The Windows Server team at Microsoft. Therefore, whenever you deploy Windows Server in the Microsoft Clouds you have an enormous built-in advantage: The expertise of the entire Windows Server team. This far-reaching expertise is expressed through the Common Engineering Criteria in the form of any future updates that will become available – every one of those updates will be built, tested, and approved by the Windows Server team, aka the world’s foremost Windows Server deployment experts.

There are a lot of great surprises in these new R2 releases – things that are going to make a big impact in a majority of IT departments around the world. Over the next four weeks, the 2012 R2 series will cover the second pillar of this release: Transform the Datacenter. In these four posts (starting today) we’ll cover many of the investments we have made that better enable IT pros to transform their datacenter via a move to a cloud-computing model.

This discussion will outline the ambitious scale of the functionality and capability within the 2012 R2 products. As with any conversation about the cloud, however, there are key elements to consider as you read. Particularly, I believe it’s important in all these discussions – whether online or in person – to remember that cloud computing is a computing model, not a location. All too often when someone hears the term “cloud computing” they automatically think of a public cloud environment. Another important point to consider is that cloud computing is much more than just virtualization – it is something that involves change: Change in the tools you use (automation and management), change in processes, and a change in how your entire organization uses and consumes its IT infrastructure.

Microsoft is unique in this perspective, and it is leading the industry with its investments to deliver consistency across private, hosted, and public clouds. Over the course of these next four posts, we will cover our innovations in the infrastructure (storage, network, compute), in both on-premises and hybrid scenarios, support for open source, cloud service provider & tenant experience, and much, much more.

As I noted above, it simply makes logical sense that running the Microsoft workloads in the Microsoft Clouds will deliver the best overall solution. But what about Linux? And how well does Microsoft virtualize and manage non-Windows platforms, in particular Linux? Today we’ll address these exact questions.

Our vision regarding other operating platforms is simple: Microsoft is committed to being your cloud partner. This means end-to-end support that is versatile, flexible, and interoperable for any industry, in any environment, with any guest OS. This vision ensures we remain realistic – we know that users are going to build applications on open source operating systems, so we have built a powerful set of tools for hosting and managing them.

A great deal of the responsibility to deliver the capabilities that enable the Microsoft Clouds (private, hosted, Azure) to effectively host Linux and the associated open source applications falls on the shoulders of the Windows Server and System Center team. In today’s post Erin Chapple, a Partner Group Program Manager in the Windows Server & System Center team, will detail how building the R2 wave with an open source environment in mind has led to a suite of products that are more adaptable and more powerful than ever.

As always in this series, check out the “Next Steps” at the bottom of this post for links to a variety of engineering content with hyper-technical overviews of the concepts examined in this post.

* * *

During the planning process for a release we look at the assumptions we’re making, challenge them, and then look ahead to demarcate how our industry will be shaped by changing market conditions. While open source software has been present in the datacenter for several years, as we look at what a modern datacenter entails it is increasingly clear that enabling open source software is a key tenet in our cloud offerings.

Not only do enterprises run key workloads based on Linux and UNIX, but, in this cloud-first world, many applications leverage open source components. To provide our customers with one cloud infrastructure, one set of system management tools, and one set of paradigms to transform their datacenter with the cloud, we knew that we needed to ensure that Windows is the best platform to run Linux workloads as well as open source components. With Windows Server 2012 R2, System Center 2012 R2, and in the public cloud with Windows Azure, IT pros now have this assurance.

Whether it’s on-premises management of your datacenter, running in the Microsoft public cloud, or a hybrid of the two, you can now run and manage Windows and Microsoft applications, as well as run and manage Linux, UNIX, and open source applications – all with a consistent experience.

Windows Server: The Best Infrastructure to Run Linux Workloads

Consider this scenario: You are an infrastructure administrator for a large hosting company, or for an IT department within an enterprise organization. Your customers very likely want to host and manage complex applications that require services running on multiple Windows and Linux guest virtual machines. Today, you may be using separate hosting and management tools for the Windows and Linux environments. This means separate hypervisors, separate management tools, and separate user interfaces for your customers. You may even have separate technical staff for the two environments! This bifurcation dramatically increases complexity and costs – both for you and for your customers.

Windows Server 2012 R2 and System Center 2012 R2 offer consolidation on a single infrastructure to run and manage Windows and Linux guest virtual machines. With a single infrastructure, operations and processes are simplified considerably. For example, you no longer have to deal with the complexity of handling Windows one way and Linux another, and complex applications that have Windows and Linux components are no longer a special case that must span two infrastructures. Now you can spend more time providing great service to your customers and less time dealing with operating system differences. Your customers also get the advantage of a single, unified view of their applications and workloads, with consistent and unified reporting, resource usage, and billing.

At the core of enabling this single infrastructure is the ability to run Linux on Hyper-V. With the release of Windows Server 2012 Hyper-V, and enhanced by the updates in the 2012 R2 version, Hyper-V is at the top of its game in running Linux guests. We’re delivering this with engineering investments in Hyper-V, of course, but also in the Linux operating system.

You read that correctly – some of the work we are doing at Microsoft involves working directly with the Linux community and contributing the technology that really enables Hyper-V and Windows to be the best cloud for Linux.

Here’s how we’ve done it: Microsoft developers have built the drivers for Linux that we call the Linux Integration Services, or “LIS.” Synthetic drivers for network and disk provide performance that nearly equals the performance of bare hardware. Other drivers provide housekeeping for time synchronization, shutdown, and heartbeat. Directly in Hyper-V, we have built features to enable live backups for Linux guests, and we have exhaustively tested to ensure that Hyper-V features, like live migration (including the super performance improvements in 2012 R2), work for Linux guests just like they do for Windows guests. In total, we worked across the board to ensure Linux is at its best on Hyper-V.
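From the Hyper-V host, one way to see these housekeeping services at work is to list the integration services offered to a Linux guest along with their reported status. The following is a minimal sketch, assuming the Hyper-V PowerShell module that ships with Windows Server 2012 and later; the function name `Get-LinuxGuestIntegrationStatus` and the VM name `linux-vm01` are hypothetical and used only for illustration.

```powershell
# Sketch: report host-side integration service status for one guest.
# Assumes the Hyper-V module is installed and the session is elevated.
function Get-LinuxGuestIntegrationStatus {
    param(
        [Parameter(Mandatory = $true)]
        [string] $VMName   # name of the guest as registered in Hyper-V
    )

    # Each row is a host-side service (for example Time Synchronization,
    # Shutdown, Heartbeat) that pairs with a driver inside the guest.
    Get-VMIntegrationService -VMName $VMName |
        Select-Object VMName, Name, Enabled, PrimaryStatusDescription
}

# Example call (hypothetical VM name):
# Get-LinuxGuestIntegrationStatus -VMName 'linux-vm01' | Format-Table -AutoSize
```

The same call works unchanged for a Windows guest, which is a small illustration of the "one infrastructure" point made above.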

To ensure compliance, Microsoft has done this LIS development as a member of the Linux community. The drivers are reviewed by the community and checked into the main Linux kernel source code base. Linux distribution vendors then pull the drivers from the main Linux kernel and incorporate them into specific distributions. LIS is currently a built-in part of these distributions:

- Red Hat Enterprise Linux 5.9 and 6.4

- SUSE Linux Enterprise Server 11 SP2 and SP3

- Ubuntu Server 12.04, 12.10, and 13.04

- CentOS 5.9 and 6.4

- Oracle Linux 6.4 (Red Hat Compatible Kernel)

- Debian GNU/Linux 7.0

Updates to LIS for the 2012 R2 release tackle several key issues needed to bring Linux to the same baseline as Windows when running on Hyper-V:

- Dynamic memory: Increase Linux VM density on Hyper-V by having Hyper-V automatically add and remove physical memory for Linux guests based on the guest needs, just like for Windows.

- 2D synthetic video driver: Gives great 2D video performance for Linux guests, and solves earlier problems with duplicate mouse pointers.

- VMbus protocol updates: Linux guests have the ability to spread interrupts across multiple virtual CPUs for better performance, just like for Windows.

- Kexec: Linux guests running in Hyper-V can get crash dumps, just like on physical hardware.

These enhancements and others are described in more technical detail on the Hyper-V Virtualization blog.
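As an illustration of the dynamic memory item above, here is a hedged sketch of how an administrator might turn it on for a Linux guest from the Hyper-V host. It assumes the Hyper-V PowerShell module and a guest whose LIS version supports dynamic memory; the function name `Enable-GuestDynamicMemory`, the VM name, and the memory sizes are hypothetical values chosen for the example, not recommendations.

```powershell
# Sketch: enable dynamic memory for one guest with explicit bounds.
# Assumes the Hyper-V module is installed and the session is elevated.
function Enable-GuestDynamicMemory {
    param(
        [Parameter(Mandatory = $true)]
        [string] $VMName,
        [long] $MinimumBytes = 512MB,   # illustrative defaults only
        [long] $StartupBytes = 1GB,
        [long] $MaximumBytes = 4GB
    )

    # Hyper-V expects Minimum <= Startup <= Maximum; fail early otherwise.
    if ($MinimumBytes -gt $StartupBytes -or $StartupBytes -gt $MaximumBytes) {
        throw 'Expected MinimumBytes <= StartupBytes <= MaximumBytes.'
    }

    # Dynamic memory can only be switched on or off while the VM is off.
    if ((Get-VM -Name $VMName).State -ne 'Off') {
        Stop-VM -Name $VMName
    }

    $memoryArgs = @{
        VMName               = $VMName
        DynamicMemoryEnabled = $true
        MinimumBytes         = $MinimumBytes
        StartupBytes         = $StartupBytes
        MaximumBytes         = $MaximumBytes
    }
    Set-VMMemory @memoryArgs

    Start-VM -Name $VMName
    Get-VMMemory -VMName $VMName   # show the resulting configuration
}

# Example call (hypothetical VM name):
# Enable-GuestDynamicMemory -VMName 'linux-vm01'
```

Validating the bounds before touching the VM keeps a typo in the sizes from shutting down a running guest for nothing.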

Going forward, Microsoft will continue the cycle of enhancing the Linux Integration Services to match new Hyper-V capabilities, contributing the enhancements to the Linux kernel through the community process, and then working with distribution vendors to incorporate the latest LIS into new Linux distribution versions. As a result, IT pros can be confident in Microsoft’s commitment to offering a unified infrastructure and to helping you and your customers to reduce cost and complexity. Also keep in mind that all the work we do in Windows and Hyper-V is applicable and consistent across the Microsoft clouds: private, hosted, and Windows Azure. Because Windows Server and Hyper-V are the foundation of Windows Azure, all our investments directly apply.

Manage Heterogeneous Environments Using Standards and System Center

Consider another scenario: As an infrastructure administrator for a large hosting company, or for an IT department within an enterprise organization, you have to manage those Windows and Linux guest VMs and associated applications that are running on Hyper-V. Most likely, you also have physical computers running Windows, Linux, or UNIX that haven’t been virtualized. As with the core execution layer provided by Hyper-V, you’d like to have consistent management of these different operating systems, and consistent management of the different “hardware” – whether it be virtual or physical. You don’t want different consoles, different tools, and different processes/procedures for the different operating systems and hardware. Most importantly, you don’t want your customers to see these differences. Management represents the second major investment area of OSS enablement.

To support a single infrastructure that runs and manages Windows and Linux, we bet on standards-based management using CIM (Common Information Model) and WS-Man (Web Services for Management). At the heart of this bet is the work we are driving with the industry on the Data Center Abstraction Layer (DAL) to provide a common management abstraction for all the resources of a data center to make it simple and easy to adopt and deploy cloud computing. The DAL is not specific to one operating system; it benefits Linux cloud computing efforts every bit as much as Windows. The DAL uses the existing DMTF standards-based management stack to manage all the resources of a data center. To support the DAL, Microsoft has contributed Open Management Infrastructure (OMI) as an open source implementation of these standards along with a set of providers for managing Linux. We built OMI from the ground up to support Linux natively and provide the rich functionality, performance and scale traits needed in a Linux CIMOM.

For our customers, we wanted to make managing Linux and any CIM-based system simple to automate via PowerShell. We introduced the PowerShell CIM cmdlets in Windows Server 2012, which enable IT pros to manage CIM-based systems natively from Windows.

To learn more about these cmdlets in PowerShell you can type:

Get-Command -Module CimCmdlets
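To make this concrete, the sketch below opens a CIM session over WS-Man from Windows to a Linux host running an OMI server and asks the server to identify itself. It is a minimal example under stated assumptions, not a reference configuration: it assumes OMI is listening for HTTPS on port 5986 with a self-signed certificate, that Basic authentication is enabled, and that the server exposes the `OMI_Identify` class in the `root/omi` namespace. The function name `Get-OmiIdentity` and the host name in the example call are hypothetical.

```powershell
# Sketch: query an OMI server on Linux with the built-in CIM cmdlets.
function Get-OmiIdentity {
    param(
        [Parameter(Mandatory = $true)]
        [string] $ComputerName,
        [Parameter(Mandatory = $true)]
        [pscredential] $Credential,
        [int] $Port = 5986   # assumed HTTPS port of the OMI server
    )

    # Lab-only: skip certificate checks because a self-signed
    # certificate is assumed. Do not do this in production.
    $options = New-CimSessionOption -UseSsl -SkipCACheck -SkipCNCheck -SkipRevocationCheck

    $sessionArgs = @{
        ComputerName   = $ComputerName
        Port           = $Port
        Authentication = 'Basic'
        Credential     = $Credential
        SessionOption  = $options
        ErrorAction    = 'Stop'   # fail before the query if the session cannot be created
    }
    $session = New-CimSession @sessionArgs

    try {
        # OMI_Identify is assumed to be available in root/omi.
        Get-CimInstance -CimSession $session -Namespace 'root/omi' -ClassName 'OMI_Identify'
    }
    finally {
        Remove-CimSession -CimSession $session
    }
}

# Example call (hypothetical host name):
# Get-OmiIdentity -ComputerName 'linux-host01' -Credential (Get-Credential)
```

Because the session is plain CIM over WS-Man, the same `Get-CimInstance` call pattern applies to any CIM-based system, not only Linux.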

System Center builds upon and enhances the core management investments in the platform to deliver consistent management across Windows, Linux, and UNIX. We started on this journey several years ago, and System Center Operations Manager was the first major area of investment, offering Linux/UNIX monitoring more than four years ago. Since then, Microsoft has broadened our Linux/UNIX coverage to include Configuration Manager, Virtual Machine Manager, and now, in the System Center 2012 R2 release, Data Protection Manager.

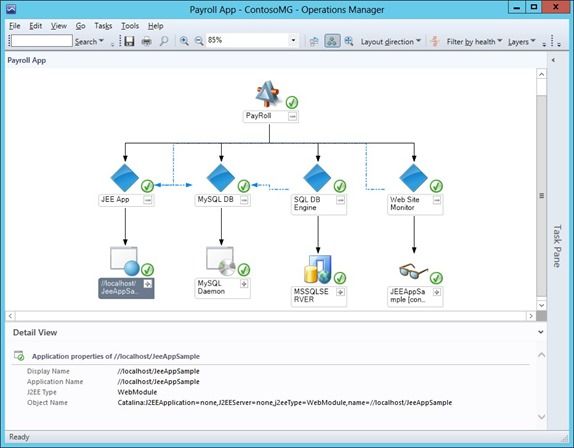

In Operations Manager, nearly all of the functionality available for Windows servers is also available for Linux and UNIX servers: Monitor OS health and performance, monitor log files, monitor line-of-business applications, monitor databases and web servers, and audit security-relevant events. Going up the software stack, Microsoft supplies management packs for Java application servers, both open source (Tomcat, JBoss) and proprietary (IBM WebSphere and Oracle WebLogic). Partners also supply management packs for other open source software such as MySQL and the Apache HTTP Server. This functionality appears in a single console, with Windows, Linux, and UNIX computers side-by-side so that you get one view of your workloads and applications, as seen here:

Similarly, core Configuration Manager functionality is available for Linux and UNIX, including hardware inventory, inventory of installed applications, the ability to distribute and install software packages, and reporting on all of these areas. ConfigMgr can install open source and proprietary software packages to Linux and UNIX in almost any format. ConfigMgr also includes anti-virus agents for all of the Linux distributions managed by Microsoft. Again, Windows, Linux, and UNIX computers appear side-by-side, with one set of concepts and paradigms as highlighted below. This means you spend less time flipping between environments and more time solving real problems.

Virtual Machine Manager is the fabric controller, and it is at the heart of a private cloud. It manages Windows guests and Linux guests running on Hyper-V, and it can personalize Linux OS instances during deployment, so that multiple Linux guests can be deployed from a single template (with each guest automatically getting a unique identity, IP address, etc. – just like for sysprep’ed Windows images). For those complex applications with Windows and Linux components, Linux can participate in VMM service templates to deploy a multi-tier service. The service template can be all Linux, or it can be a mixture of Linux and Windows tiers. Almost all of the rest of the great capabilities of VMM are agnostic to the guest OS, so live migration and placement, IP address management, network virtualization, and storage management easily work for Linux. With this level of consistency, you will rarely need to worry about whether a virtual machine is running Windows or Linux.

In System Center 2012 R2, Data Protection Manager adds the ability to back up Linux guest VMs running on Hyper-V, again giving you consistency across Windows and Linux. The Linux guest VMs can continue running live – there is no need to pause or suspend them – and DPM will get a file system consistent snapshot of the VM to back up. “File system consistent” means that the Linux file system buffers are automatically flushed via integration with the Linux Integration Services for Hyper-V. This kind of consistency is analogous to the application consistency that VSS writers provide for Windows VMs.

System Center 2012 R2 gives you a single, consistent systems management infrastructure for private clouds with Windows and Linux, or for your datacenter’s physical or virtualized infrastructure running Windows, Linux, and UNIX. Applications with Windows and Linux components can be deployed and managed from a single interface, giving you reduced complexity and reduced costs.

Open Source on Windows

In any IT environment, open source is more than just the operating system. You may be using open source components in your applications, whether you are a vendor offering Software-as-a-Service (SaaS) from the cloud, or an enterprise running open source components in your datacenter.

To provide customers with increased flexibility for running open source-based applications on Windows, Microsoft simplified the process for building, deploying and updating services that are built on Windows. This was achieved through the development of a set of tools called “CoApp” (Common Open source Application Publishing Platform), which is a package management system for Windows that is akin to the Advanced Packaging Tool (APT) on Linux.

Using CoApp, developers on Windows can easily manage the dependencies between components that make up an open source application. Developers will notice that many of the core dependencies, such as zlib and OpenSSL, are already built to run on Windows and are available immediately in the NuGet repository. Through NuGet, CoApp-built native packages can be included in Visual Studio projects in exactly the same manner as managed-code packages, making it very easy for a developer to download core libraries and create open source applications on Windows. Those of you with a developer orientation can get more details on CoApp in these videos: GoingNative - Inside NuGet for C++ and Building Native Libraries for NuGet with CoApp’s PowerShell Tools.

We’ve also done great work collaborating with the open source community to ensure specific OSS apps run on, and are optimized for, Windows. For example, consider PHP, which is a foundational component of many content management and publishing applications. Microsoft works in the PHP community to ensure that versions are available which run natively on Windows, right alongside the versions that run on Linux or UNIX. The newest version, PHP 5.5.0, has just been released for Windows on the same day that it was released for other operating systems. The Windows version includes significant performance improvements that will surprise many.

In addition to all of these improvements, the Azure gallery now includes a broad range of open source applications, giving customers ready access to install and run commonly used open source software on Azure.

Microsoft’s ongoing commitment to supporting Open Source Software has been highlighted recently in two important partnerships: First, our customers can now run Oracle software on Windows Server Hyper-V and in Windows Azure encompassing Java, Oracle Database, and Oracle WebLogic Server. This can all happen on Windows Server Hyper-V or Windows Azure in a fully supported mode. Second, a new Java development kit (JDK) will be available through a partnership with Azul Systems. This will enable customers to deploy Java applications on Windows Azure using open source Java – on Windows and Linux.

Summary

Enabling open source software is a key part of our promise to support the efforts of our customers as they continue to transform their datacenters with the cloud. This enablement is a key tenet of the scenarios we design and build our products to handle. The features and functions that enable open source software are an integral part of our products, and each element of these products is built and tested by our core engineering teams. These efforts are fully supported by Microsoft.

As you might expect for the “Enable OSS” tenet of this 2012 R2 release, key parts of our open source enablement are themselves open source. For example, the Linux Integration Services are open source in the Linux kernel, and Microsoft releases the source code for most of the agents that System Center uses on Linux and UNIX to provide management capabilities. OMI and CoApp are also open source projects, and, of course, PHP on Windows is part of the PHP open source project.

With this release Microsoft is clearly the choice for datacenter infrastructure if you require the ability to run and manage open source software alongside Windows.

* * *

This post has covered one key trend in the datacenter (the need for one infrastructure and management solution for Windows and Linux), and next week we’ll examine another critical element: The need for enterprises to act more like service providers.

A core requirement for this trend is for enterprises to deploy and operate an Infrastructure-as-a-Service. In next week’s post, we’ll examine the infrastructure and experience improvements we’ve made across Windows and System Center to enable this scenario for customers.

- Brad

NEXT STEPS:

To learn more about the topics covered in this post, check out the following articles:

- Running and Managing Linux and UNIX with Hyper-V and System Center: This Channel 9 video session provides technical details and demos of how to leverage System Center to manage Linux and UNIX systems.

- Enabling Linux Support on Windows Server 2012 R2 Hyper-V: Details of the range of new features and capabilities that have been implemented to improve the performance, operation and management of Linux on Hyper-V.

- Enabling Management of Open Source Software in System Center Using Standards: An overview of the standards-based management approach that System Center has implemented to manage open source software, with a detailed look at the management implementation in the UNIX/Linux agents for Operations Manager and Configuration Manager.

- Extending Inventory on Linux and UNIX computers in Configuration Manager: A detailed look at the new features centered on OMI that have extended Configuration Manager’s capabilities for performing inventory of Linux and UNIX systems.

- CoApp: Learn about the package management capabilities of CoApp that simplify running open source applications on Windows.

- OMI: Learn about Open Management Infrastructure, the OSS version of Windows Management Infrastructure (WMI).