Recently our SAP colleague Leslie Moser and I got involved in a support case where a company had struggled for days and weeks to keep its website up and running. When analyzing the outages, SAP Support found that the VM running SQL Server had completely hung on two occasions and had to be restarted. The website, or at least parts of it, relied on the SAP NetWeaver Java stack, which was running on top of SQL Server 2008 R2. Once we had Terminal Server access, everything looked normal at first glance. SQL Server was humming along. It had 6GB of the VM's 16GB allocated to it. Since the Java stack was running in another VM, there should have been plenty of memory for SQL Server to leverage.

When checking the performance counters:

- SQL Server:Memory Manager –> Total Server Memory

- SQL Server:Memory Manager –> Target Server Memory

we even saw that Total Server Memory was lower than Target Server Memory. This means that, according to the ‘min server memory’ and ‘max server memory’ configuration settings, SQL Server could have allocated more memory (Target Server Memory) than it actually had allocated (Total Server Memory). A first assumption would be that SQL Server didn’t need more memory due to a low workload and hence didn’t even reach the maximum it could allocate.

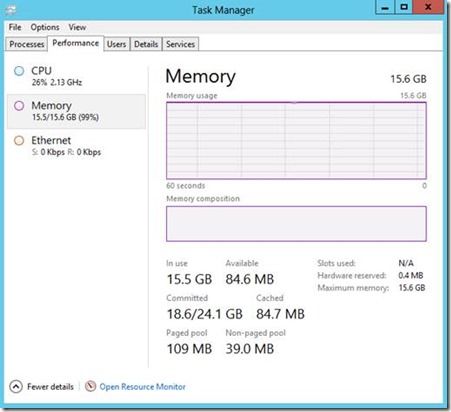

However, one thing looked suspicious: in the Performance tab of Task Manager, all 16GB of the VM's memory showed as ‘Committed’.

The Task Manager picture looked like this:

We knew that SQL Server was using less than 5GB, yet the VM's memory was completely saturated. Looking through the process list in Task Manager for other memory consumers, we also drew a blank, since the list looked like this:

Besides SQL Server, there was no real consumer of memory.

On the other hand, querying the SQL Server DMV sys.dm_os_sys_memory revealed that the OS had set its low memory signal (column system_low_memory_signal_state = 1). This means SQL Server was not holding back on allocating more memory by choice, but was under pressure from the OS to give up memory.
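The two observations (Total below Target, plus the low memory signal) can be combined into one check. The following is a minimal sketch in Python, not a tool we used in the support case. The function name `assess_memory_pressure` and the sample values are assumptions for illustration. The two query strings only show where the inputs could come from and are not executed here; running them is left to whichever SQL Server client you prefer.

```python
# Queries one could run to collect the inputs (shown for reference only).
COUNTER_QUERY = """
SELECT RTRIM(counter_name) AS counter_name, cntr_value
FROM sys.dm_os_performance_counters
WHERE object_name LIKE '%Memory Manager%'
  AND counter_name IN ('Total Server Memory (KB)', 'Target Server Memory (KB)');
"""

SIGNAL_QUERY = """
SELECT system_low_memory_signal_state, system_memory_state_desc
FROM sys.dm_os_sys_memory;
"""


def assess_memory_pressure(total_kb: int, target_kb: int,
                           low_memory_signal: bool) -> str:
    """Classify the situation from the three values discussed above.

    This is a hypothetical helper: it encodes only the reasoning of this
    post, not any official SQL Server diagnostic rule.
    """
    if total_kb >= target_kb:
        return "at target: SQL Server holds as much memory as it aims for"
    if low_memory_signal:
        # Below target AND the OS reports low memory: SQL Server is being
        # squeezed from outside rather than idling.
        return "external pressure: below target while the OS signals low memory"
    return "below target by choice: the workload has not needed more memory"


if __name__ == "__main__":
    # Hypothetical sample values: ~4.8GB in use, 6GB target, signal set.
    print(assess_memory_pressure(total_kb=5_000_000,
                                 target_kb=6_291_456,
                                 low_memory_signal=True))
```

In our case the third input was the decisive one: without the low memory signal, the same counter values would have pointed to a lightly loaded server.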

So in the end our suspicion was that the host was completely overcommitted on memory and that the virtualization layer was taking real memory away from the VM, whereas Task Manager still reflected the memory assigned at the start of the VM. We had no means to prove this theory, since this was an SAP Java stack only and no SAP ABAP stack was running where transaction ST06 would have shown this information. Fortunately Leslie recalled a blog post about RAMMap.

We were able to run the tool from the Live site without downloading it into the customer VM, and were left with a picture which roughly looked like this:

Stunningly, a substantial amount of the VM’s memory was marked as ‘Driver Locked’.

Binging the internet, Leslie found many similar cases in combination with VMware. The crux is that one can start the VM with a certain memory setting, but VMware can dynamically rebalance the guest VM's resources as needed, including reducing its memory. Windows in the guest VM then shows the memory that was taken away as ‘Committed’ in Task Manager, and the same memory shows up as ‘Driver Locked’ in RAMMap.

Essentially, the customer had over-allocated memory across the guest VMs on its host. We do not know exactly how this was done, but we have to assume that it had to do with VMware's memory ballooning (see this whitepaper for more details: http://www.vmware.com/files/pdf/perf-vsphere-memory_management.pdf; thanks to Marius Cirstian for the pointer to the paper). The customer is now analyzing the actual memory assignments versus the physical memory on the host, so that the guest VM memory allocations can be reduced to the point where overcommitment no longer occurs.

Specifically on VMware ESX, we could have found similar information within the VM with the performance counters:

- VM Memory --> Memory Active in MB

- VM Memory --> Memory Ballooned in MB

Hyper-V does not offer counters like those within the VMs.

Is this a problem with VMWare only?

Actually, NO, it isn’t. The screenshots above were not taken on the customer system, nor were they taken with VMware as the virtualization layer. They were taken with Hyper-V, in a scenario we could reconstruct within 10 minutes. In Hyper-V a similar effect can show up when one enables Dynamic Memory on one or several VMs. With Dynamic Memory you define the startup size of the VM, which is the size that will show up in Task Manager as real memory. Additionally you configure the minimum and maximum memory as shown below:

This works a bit differently than VMware, but in the end it is nothing other than a method to overcommit the memory available on the host in the hope that not all VMs require their memory at the same time. It is also a way to introduce non-deterministic behavior, as we experienced with the VMware customer we were looking at.
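To make the overcommit arithmetic concrete, here is a minimal sketch in Python. The class `VmMemoryConfig`, the function `overcommit_report` and all numbers are hypothetical and chosen for illustration only; the sketch also ignores the memory the host itself needs, so a real host would run short even earlier.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class VmMemoryConfig:
    """Dynamic memory settings of one VM (hypothetical structure)."""
    name: str
    startup_mb: int   # what the guest sees in Task Manager
    minimum_mb: int   # what the hypervisor may shrink the VM down to
    maximum_mb: int   # upper limit the VM may grow to


def overcommit_report(host_physical_mb: int,
                      vms: List[VmMemoryConfig]) -> Dict[str, object]:
    """Compare what the guests believe they have with what the host owns."""
    visible_total = sum(vm.startup_mb for vm in vms)
    minimum_total = sum(vm.minimum_mb for vm in vms)
    return {
        "guest_visible_total_mb": visible_total,
        "guaranteed_minimum_total_mb": minimum_total,
        # More memory promised to guests than physically exists on the host.
        "overcommitted": visible_total > host_physical_mb,
        # Memory that must be taken away from guests if all want their share.
        "shortfall_mb": max(0, visible_total - host_physical_mb),
    }


if __name__ == "__main__":
    # Hypothetical host with 32GB and three VMs that each show 16GB.
    vms = [VmMemoryConfig(f"vm{i}", 16384, 4096, 16384) for i in range(3)]
    for key, value in overcommit_report(32768, vms).items():
        print(f"{key}: {value}")
```

With static memory, the guest-visible total cannot exceed what the host can actually back, which is why the behavior stays deterministic.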

In all honesty, we were a bit surprised, since the SAP OSS notes make it very clear that memory should not be overcommitted.

E.g.: Note 1056052 - Windows: VMware vSphere configuration guidelines

Section 10 in this OSS note could not be clearer, starting with the statement: ‘Don’t do memory overcommit’.

Or for Hyper-V: Note 1246467 - Hyper-V Configuration Guideline with the quote: ‘SAP strongly recommends to use static memory’

How can we figure out on the Hyper-V host how much real memory is assigned to a VM?

With access to a Hyper-V host, it is easy to figure out whether a certain VM has static or dynamic memory configured: the memory settings of the VM show it directly. The remaining question is how much memory is actually assigned to a specific VM at a certain point in time.

One can see the actually assigned memory in Hyper-V Manager:

The VM above started with 16GB and still shows 16GB of memory in Task Manager, but the memory actually assigned to it differed dramatically.

The actual assignment of memory also can be monitored with the Performance Monitor counters:

- Hyper-V Dynamic Memory –> Guest Visible Memory

- Hyper-V Dynamic Memory –> Physical Memory

In case of overcommitment, with a VM running on less memory than its startup memory, the picture could look like this:

‘Guest Visible Memory’ indicates that 16GB of memory are visible within the guest OS (though some of it ends up committed or driver locked), whereas ‘Physical Memory’ shows the memory the hypervisor actually allows the VM to use. The difference between Guest Visible Memory and Physical Memory adds up to what RAMMap, executed within the VM, shows as ‘Driver Locked’ memory.
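As a worked example of that difference, the following small Python sketch estimates the expected ‘Driver Locked’ amount from the two counter values. The function name `estimate_driver_locked_mb` and the sample readings are assumptions for illustration, and both inputs are assumed to be in MB.

```python
def estimate_driver_locked_mb(guest_visible_mb: float,
                              physical_mb: float) -> float:
    """Estimate the memory the hypervisor has taken back from the guest.

    Hypothetical helper: the result is the gap between what the guest OS
    believes it has and what the hypervisor currently backs with real
    memory. It never goes below zero.
    """
    return max(0.0, guest_visible_mb - physical_mb)


if __name__ == "__main__":
    # Hypothetical readings: guest sees 16GB, hypervisor backs only 6GB.
    locked = estimate_driver_locked_mb(guest_visible_mb=16384,
                                       physical_mb=6144)
    share = locked / 16384
    print(f"estimated driver locked: {locked:.0f} MB ({share:.1%} of visible)")
```

A result of zero corresponds to the static memory case, where both counters report the same value.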

In contrast to the screenshot above, here is a screenshot of a VM with a static memory setting:

In this case one only sees one of the lines because they match exactly in value.

What did we learn, beyond the fact that one should be very careful with overcommitting memory in a virtualized environment?

Even without access to the host, and without the help of ABAP transaction ST06, one can detect from within the VM whether the host is throttling the VM's memory.

Microsoft
