First published on TECHNET on Apr 04, 2017

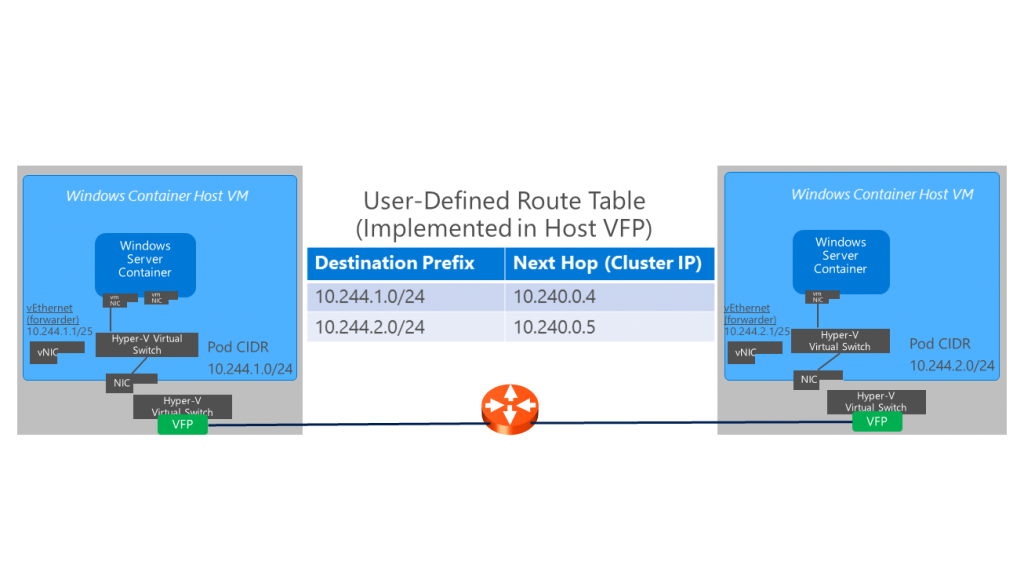

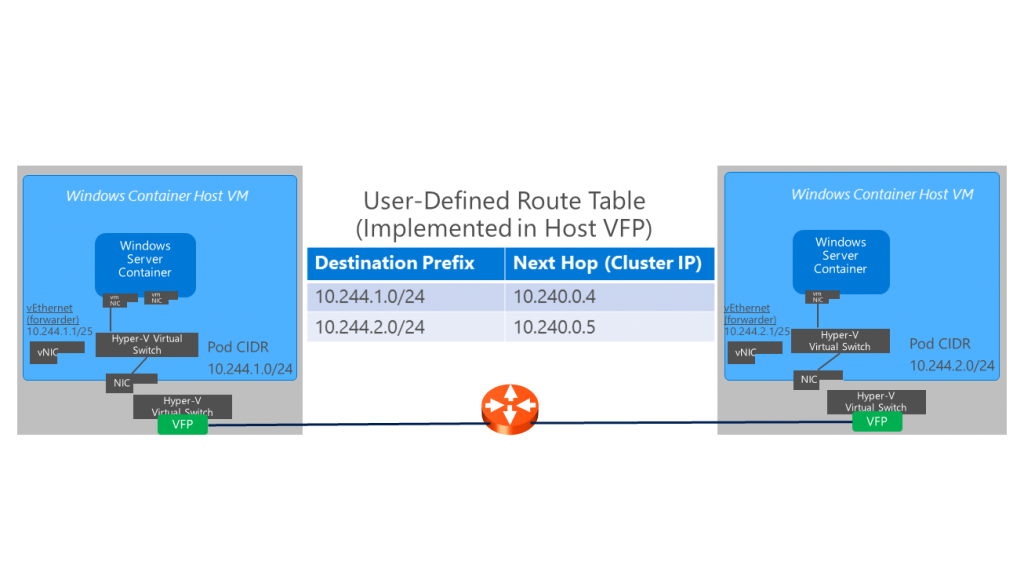

A seismic shift is happening in the way applications are developed and deployed as we move from traditional three-tier software models running in VMs to "containerized" applications and micro-services deployed across a cluster of compute resources. Networking is a critical component in any distributed system and often requires higher-level orchestration and policy management systems to control IP address management (IPAM), routing, load-balancing, network security, and other advanced network policies. The Windows networking team is swiftly adding new features (Overlay networking and Docker Swarm Mode on Windows 10) and working with the larger containers community (e.g. the Kubernetes sig-windows group) by contributing to open source code and ensuring native networking support for any orchestrator, in any deployment environment, with any network topology.

Today, I will be discussing how Kubernetes networking is implemented in Windows and managed by an extensible Host Networking Service (HNS) - which is used in both Azure Container Service (ACS) Windows worker nodes and on-premises deployments - to plumb network policy in the OS.

Note: A video recording of the 4/4 #sig-windows meetup where I describe this is posted here: https://www.youtube.com/watch?v=P-D8x2DndIA&t=6s&list=PL69nYSiGNLP2OH9InCcNkWNu2bl-gmIU4&index=1

Kubernetes Networking

Windows containers can be orchestrated using either Docker Swarm or Kubernetes to help "automate the deployment, scaling, and management of 'containerized' applications". However, the two systems use different networking models.

Kubernetes networking is built on the fundamental requirements listed here and is either agnostic to the network fabric underneath or assumes a flat Layer-2 networking space where all containers and nodes can communicate with all other containers and nodes across a cluster without using NAT (encapsulation is permitted). Windows can support these requirements using a few different networking modes exposed by HNS and working with external IPAM drivers and route configurations.

The other large difference between Docker and Kubernetes networking is the scope at which IP assignment and resource allocation occur. Docker assigns an IP address to every container, whereas Kubernetes assigns an IP address to a Pod, which represents a network namespace and can consist of multiple containers. Windows has an analogous network namespace concept called a network compartment, and a management surface is being built in Windows to allow multiple containers in a Pod to communicate with each other through localhost.
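The difference in IP scope can be sketched with a small data model. This is only an illustration: the `Pod` and `Container` classes, their fields, and the addresses are hypothetical names chosen for this example and are not part of Kubernetes, Docker, or HNS.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """A container identified by name and the port it listens on."""
    name: str
    port: int


@dataclass
class Pod:
    """A Pod owns exactly one IP; every container inside shares it."""
    name: str
    ip: str
    containers: list = field(default_factory=list)

    def endpoints(self):
        # All containers are reachable on the Pod's single IP and
        # are told apart only by port.
        return {c.name: (self.ip, c.port) for c in self.containers}


pod = Pod("web", "10.244.1.2", [Container("app", 8080), Container("sidecar", 9090)])
for name, (ip, port) in pod.endpoints().items():
    print(f"{name}: {ip}:{port}")
```

Because both containers sit behind the same address, they could reach each other over localhost, which is the behavior the network compartment work aims to enable on Windows.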

Connectivity between pods located on different nodes in a Kubernetes cluster can be accomplished either by using an overlay (e.g. VXLAN) network or without an overlay by configuring routes and IPAM on the underlying (virtual) network fabric. This network model can be realized through:

- CNI Network Plugin

- Implementing the "Routing" interface in Kubernetes code

- External configuration

The sig-windows community (led by Apprenda) did a lot of work to come up with an initial solution for getting Kubernetes networking to work on Windows. The networking teams at Microsoft are building on this work and continue to partner with the community to add support for the native Kubernetes networking model - defined by the Container Network Interface (CNI), which is different from the Container Network Model (CNM) used by Docker - and to surface policy management capabilities through HNS.

Kubernetes networking in Azure Container Service (ACS)

Azure Container Service recently announced general availability of Kubernetes. It uses a routable-VIP approach (no overlay) to networking and configures User-Defined Routes (UDR) in the Hyper-V (virtualization) host for Pod communication between Linux and Windows cluster node VMs. A /24 CIDR IP pool (routable between container host VMs) is allocated to each container host, with one IP assigned per Pod (one container).
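As a rough sketch of the per-host allocation described above, the snippet below carves one /24 pool per container host out of a larger range and hands out one address per Pod. The cluster range `10.244.0.0/16`, the node names, and the helper functions are assumptions made for illustration; they are not values or APIs used by ACS.

```python
import ipaddress


def allocate_node_pools(cluster_cidr, node_names, prefix_len=24):
    """Give each node its own /prefix_len subnet carved from cluster_cidr."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=prefix_len)
    return {name: next(subnets) for name in node_names}


def pod_ips(pool, count):
    """Return the first `count` usable host addresses in a node's pool."""
    hosts = pool.hosts()
    return [next(hosts) for _ in range(count)]


# Hypothetical cluster range and node names.
pools = allocate_node_pools("10.244.0.0/16", ["linux-node-0", "windows-node-0"])
for node, pool in pools.items():
    print(node, pool, [str(ip) for ip in pod_ips(pool, 2)])
```

A real allocator would also reserve addresses (for example, a gateway) and track releases; this sketch only shows how a /24 per host bounds the number of Pod IPs on that host.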

With the recent Azure VNet for Containers announcement, which includes support for a CNI network plugin used in Azure (pre-released here: https://github.com/Azure/azure-container-networking/releases/tag/v0.7), tenants can connect their ACS clusters (containers and hosts) directly to Azure Virtual Networks. This means that individual IPs from a tenant's Azure VNet IP space will be assigned to Kubernetes nodes and pods, potentially in the same subnet. The Windows networking team is also working to build a CNI plugin to support and extend container management through Kubernetes on Windows for on-premises deployments.

Kubernetes networking in Windows

Microsoft engineers across Windows and Azure product groups actively contributed code to the Kubernetes repo to enhance the kube-proxy (used for DNS and service load-balancing) and kubelet (for Internet access) binaries, which are installed on ACS Kubernetes Windows worker nodes. This overcame previously identified gaps so that both DNS and service load-balancing work correctly without the need for Routing and Remote Access Services (RRAS) or netsh port proxy. In this implementation, the Windows network uses Kubernetes' default kubenet plugin without a CNI plugin.

Using HNS, one transparent network and one NAT network are created on each Windows container host for inter-Pod and external communication, respectively. Two container endpoints - connected to the Service and Pod networks - are required for each Windows container that will participate in the Kubernetes service. Static routes must be added to the running Windows containers themselves, on the container endpoint attached to the service network.

In the absence of ACS-managed User-Defined Routes, Out-of-Band (OOB) configuration of these routes needs to be realized in the Cloud Service Provider network, implemented using the "routing" interface of the Kubernetes cloud provider, or connected via overlay networks. Other solutions include using the HNS overlay network driver for inter-Pod communication or using the OVS Hyper-V switch extension with OVN Controller.
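Whichever mechanism installs them, the routes in question map each host's Pod range to that host as the next hop. A minimal sketch of such a table and its lookup, assuming hypothetical Pod CIDRs and node VM addresses (none of these values come from ACS or HNS):

```python
import ipaddress

# Hypothetical route table: Pod CIDR -> next hop (the node VM's IP).
ROUTES = {
    "10.244.0.0/24": "10.240.0.4",
    "10.244.1.0/24": "10.240.0.5",
}


def next_hop(dest_ip, routes=ROUTES):
    """Return the next hop for dest_ip using longest-prefix match, or None."""
    dest = ipaddress.ip_address(dest_ip)
    matches = []
    for cidr, hop in routes.items():
        network = ipaddress.ip_network(cidr)
        if dest in network:
            matches.append((network.prefixlen, hop))
    if not matches:
        return None
    # The most specific (longest) matching prefix wins.
    return max(matches)[1]


print(next_hop("10.244.1.7"))   # traffic for a Pod on the second node
print(next_hop("192.168.1.1"))  # no Pod route matches
```

With User-Defined Routes, the fabric holds a table like this on behalf of the cluster; without them, the same mapping has to be supplied out of band, through the cloud provider's routing interface, or avoided entirely by using an overlay.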

Today, with the publicly available versions of Windows Server and client, you can deploy Kubernetes with the following restrictions:

- One container per Pod

- CNI Network Plugins are not supported

- Each container requires two container endpoints (vNICs) with IP routing manually plumbed

- Service IPs can only be associated with one Container Host and will not be load-balanced

- Policy specifications (e.g. network security) are not supported

What's Coming Next?

Windows is moving to a faster release cadence such that new platform features will be made available in a matter of months rather than years. In some circumstances, early builds can be made available to Insiders as well as to TAP customers and EEAP partners for early feature validation.

Stay tuned for new features which will be made available soon...

Summary

In this blog post, I described some of the nuances of the Kubernetes networking model and how it differs from the Docker networking model. I also talked about the code updates made by Microsoft engineering teams to the kubelet and kube-proxy binaries for Windows in open source repos to enable networking support. Finally, I ended with how Kubernetes networking is implemented in Windows today and the plans for how it will be implemented through a CNI plugin in the near future.

Published Feb 14, 2019

Version 1.0

Jason Messer

Networking Blog

The Official Blog Site of the Windows Core Networking Team at Microsoft