Update 11/11/2019: for a significant update on this subject, please see Updated: Running PowerShell cmdlets for large numbers of users in Office 365.

When PowerShell was introduced back in Exchange 2007, it was a boon to all of us Exchange administrators. It allowed us to manage large numbers of objects quickly and seamlessly, and we have come to rely on it for updating users, groups, and other sets of objects.

With Office 365, things have changed a bit. We no longer have a local Exchange server to talk to when running our cmdlets; instead, we have to communicate over the internet with a shared resource. This introduces a number of challenges for managing large numbers of objects that did not exist previously.

PowerShell Throttles

Office 365 introduces many throttles to ensure that no single tenant or user can negatively impact large swaths of users by overusing resources. In PowerShell, this shows up primarily as Micro Delays, which appear as a warning like this one:

```
WARNING: Micro delay applied. Actual delayed: 21956 msecs, Enforced: True, Capped delay: 21956 msecs, Required: False,
Additional info: .;
PolicyDN: CN=[SERVER]-B2BUpgrade-2015-01-21T03:07:53.3317375Z,CN=Global Settings,CN=Configuration,CN=company.onmicrosoft.com,CN=ConfigurationUnits,DC=FOREST,DC=prod,DC=outlook,DC=com;
Snapshot: Owner: Sid~EURPR05A003\a-ker531361849849165~PowerShell~false
BudgetType: PowerShell
ActiveRunspaces: 0/20
Balance: -1608289/2160000/-3000000
PowerShellCmdletsLeft: 384/400
ExchangeCmdletsLeft: 185/200
CmdletTimePeriod: 5
DestructiveCmdletsLeft: 120/120
DestructiveCmdletTimePeriod: 60
QueueDepth: 100
MaxRunspacesTimePeriod: 60
RunSpacesRemaining: 20/20
LastTimeFrameUpdate: 1/23/2015 10:39:08 AM
LastTimeFrameUpdateDestructiveCmdlets: 1/23/2015 10:38:48 AM
LastTimeFrameUpdateMaxRunspaces: 1/23/2015 10:38:48 AM
Locked: False
LockRemaining: 00:00:00
```

Throttles like this one in O365 can be thought of as a bucket. The service is always pouring more time into the top of the bucket, and we as administrators are drawing time out of the bottom. As long as the service is adding time faster than we are using it, we don't run into any issues.

The problem comes when we run an intensive command like Get-MobileDeviceStatistics, Set-Clutter, or Get-MailboxFolderStatistics. These take a significant amount of time to run for each user and consume a fair bit of resources doing so. In this scenario, we are pulling more out than the service is putting in, so we end up getting throttled.
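The bucket picture can be made concrete with a small simulation. This is a minimal sketch under loud assumptions: the service adds the 2,160,000 ms hourly recharge from the warning above evenly each minute, the balance has no maximum or minimum, and the function name Get-SimulatedBalance is hypothetical. It illustrates the analogy only; it is not how Office 365 actually computes budgets.

```powershell
# Hypothetical illustration of the "bucket" model; not the service's real algorithm.
# Assumes the hourly recharge is poured in evenly every minute and ignores any cap.
function Get-SimulatedBalance {
    param(
        [double]$RechargeMsPerHour = 2160000,  # recharge value read from the warning above
        [double]$UsageMsPerMinute,             # cmdlet time we consume each minute
        [int]$Minutes = 60
    )
    $balance = 0.0
    $refillPerMinute = $RechargeMsPerHour / 60     # what the service pours in per minute
    for ($m = 0; $m -lt $Minutes; $m++) {
        $balance += $refillPerMinute - $UsageMsPerMinute  # refill minus what we drain
    }
    return $balance
}

# 30 seconds of cmdlet time per minute: the bucket ends the hour with time to spare.
Get-SimulatedBalance -UsageMsPerMinute 30000   # 360000
# 50 seconds of cmdlet time per minute: the bucket runs dry and we get throttled.
Get-SimulatedBalance -UsageMsPerMinute 50000   # -840000
```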

Another way to look at it is to examine the warning and do the math to find out how much time we can spend running commands. If we examine the Micro Delay warning, we can find our recharge rate in the Balance line:

```
ActiveRunspaces: 0/20
Balance: -1608289/2160000/-3000000
PowerShellCmdletsLeft: 384/400
```

In this case, mine is 2,160,000 milliseconds: that is how many milliseconds per hour I can spend consuming resources. The value you see here will vary depending on the number of mailboxes in your tenant.

If we take our recharge rate and divide it by the number of milliseconds in one hour we get how much of each hour we can spend actively consuming resources in our session.

2,160,000 milliseconds recharge rate / 3,600,000 milliseconds per hour = 0.6

In other words, 0.6 * 60 minutes = 36 minutes of each hour.
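The same arithmetic can be scripted so it can be rerun against whatever your own warning reports. This is a minimal sketch that assumes, as the text above does, that the middle number on the Balance line is the hourly recharge in milliseconds; Get-UsableMinutesPerHour is a hypothetical name.

```powershell
# Pull the recharge value out of a Micro Delay "Balance:" line and work out how much
# of each hour can be spent running cmdlets. Assumes the middle number is the hourly
# recharge in milliseconds, as described above.
function Get-UsableMinutesPerHour {
    param([string]$BalanceLine)
    if (-not ($BalanceLine -match 'Balance:\s*(-?\d+)/(-?\d+)/(-?\d+)')) {
        throw "Unrecognized Balance line: $BalanceLine"
    }
    $rechargeMs = [double]$Matches[2]          # second number = recharge per hour
    $fraction   = $rechargeMs / 3600000        # milliseconds in one hour
    [pscustomobject]@{
        RechargeMs = $rechargeMs
        Fraction   = $fraction                 # share of each hour we can use
        Minutes    = $fraction * 60            # the same share expressed in minutes
    }
}

Get-UsableMinutesPerHour 'Balance: -1608289/2160000/-3000000'   # about 0.6, or 36 minutes
```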

When we are using the shell for daily tasks, we will never come anywhere close to this limit. It takes a bit of time for someone to type a command, and most of us aren't typing PowerShell commands as fast as we can for hours at a time.

A quick solution if you are getting Micro Delayed is to introduce a long enough pause between commands that you don't exceed your share of each hour (0.6 in the example above).

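```powershell
# $( ) makes Get-Mailbox finish before the loop starts; a remote session cannot run two pipelines at once.
$(Get-Mailbox) | ForEach-Object { Get-MobileDeviceStatistics -Mailbox $_.Identity; Start-Sleep -Milliseconds 500 }
```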
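How long should the pause be? If each command keeps the service busy for t ms and we sleep s ms afterwards, the busy share is t / (t + s); keeping that at or below the allowed share f means s ≥ t(1 − f) / f. The sketch below applies that formula. It is a simplification that treats the budget as a plain share of wall-clock time and assumes every command costs about the same; Get-RequiredSleepMs is a hypothetical name, and the per-command time would come from something like Measure-Command.

```powershell
# Work out how long to pause after each command so the busy share of the hour
# stays within the allowed percentage (60% in the example above).
# Simplification: assumes each command costs roughly the same amount of time.
function Get-RequiredSleepMs {
    param(
        [double]$CommandMs,        # average duration of one command, e.g. from Measure-Command
        [int]$AllowedPercent = 60  # recharge / one hour, as a percentage
    )
    # Busy share = CommandMs / (CommandMs + Sleep) <= AllowedPercent / 100
    # => Sleep >= CommandMs * (100 - AllowedPercent) / AllowedPercent
    [math]::Ceiling($CommandMs * (100 - $AllowedPercent) / $AllowedPercent)
}

Get-RequiredSleepMs -CommandMs 750    # 500: a 750 ms command needs about 500 ms of rest
Get-RequiredSleepMs -CommandMs 3000   # 2000
```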

Session stability

The next common issue I see is session stability. Because we are connecting over the internet, the stability of our session becomes a major concern. If we have a script running Get-InboxRule against 180,000 users for four days, the chance of the HTTPS session dropping at some point during that period is pretty high.

These session drops can be caused by any number of things:

- Firewall dropping a long running session

- O365 CAS server getting upgraded or rebooted

- Upstream network issues

We as admins have no control over most of these causes. The problem typically shows up as a PowerShell window that is prompting for your credentials when you come back to it.

Overcoming this one quickly isn't easy. We would need to monitor the status of the connection and rebuild it whenever it encountered an error. This can be done, but it takes more than a few lines of code and could be a challenge to integrate every time we needed it.
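The core of "rebuild and retry" can be sketched as a small helper. This is a minimal, hypothetical sketch (Invoke-WithReconnect is not part of Start-RobustCloudCommand.ps1 or any Microsoft module): it runs an action and, if the action throws, runs a reconnect step and tries again. In real use the reconnect step would remove the broken session with Remove-PSSession and create and import a new one with New-PSSession and Import-PSSession, and the action's cmdlets would need -ErrorAction Stop so their errors become exceptions that try/catch can see. The demo below fakes a broken session locally.

```powershell
# Hypothetical helper: run an action and, if it throws, run a reconnect step
# and try again, up to MaxAttempts times.
function Invoke-WithReconnect {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][scriptblock]$Action,
        [Parameter(Mandatory)][scriptblock]$Reconnect,
        [int]$MaxAttempts = 3,
        [int]$DelaySeconds = 5
    )
    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            return & $Action
        }
        catch {
            Write-Warning "Attempt $attempt of $MaxAttempts failed: $($_.Exception.Message)"
            if ($attempt -eq $MaxAttempts) { throw }   # give up and surface the last error
            Start-Sleep -Seconds $DelaySeconds
            & $Reconnect                                # rebuild the session before retrying
        }
    }
}

# Local demo: the fake "session" is broken on the first call and fixed by the reconnect step.
$state = @{ Connected = $false; Reconnects = 0 }
Invoke-WithReconnect -DelaySeconds 0 -Action {
    if (-not $state.Connected) { throw 'Session is broken' }
    'Command ran'
} -Reconnect {
    $state.Connected = $true
    $state.Reconnects = $state.Reconnects + 1
}
```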

Data return

Finally, we have the issue of data return. Before we can run a Set command on a large number of mailboxes, we must get an array of objects to execute against. Most of us do this with something similar to $mbx = Get-Mailbox -ResultSize Unlimited. But if we are running this against thousands of mailboxes, just the amount of data coming back from the server can take a significant amount of time.

We can easily get around this one by using two simple PowerShell tricks.

First, we use Invoke-Command to run our Get command on the remote server instead of on the local host. This means we can send our command across the wire and have the O365 server execute it for us, taking a good bit of the client out of the loop.

This is great, since it puts the work much closer to the source of the data. But it isn't a perfect solution, because we cannot run anything complex using a remote session and Invoke-Command. Instead, we must restrict ourselves to fairly straightforward cmdlets and simple filtering; anything else will result in the server returning an error and rejecting our command.

This is where our second trick, Select-Object, comes in. By coupling Invoke-Command with Select-Object, we can get our objects quickly and return only a limited amount of data.

```powershell
Invoke-Command -Session (Get-PSSession) -ScriptBlock {Get-Mailbox -ResultSize Unlimited | Select-Object -Property DisplayName,Identity,PrimarySMTPAddress}
```

This command uses the existing session to run Get-Mailbox on the server, and uses Select-Object to return only the properties I need to identify each object to operate on.

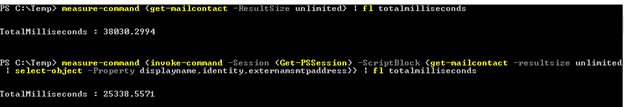

In my testing with 5,000 mail contacts, Invoke-Command was on average 35% faster and returned significantly less data for my PowerShell client to deal with.

A scripted solution

Now that we know what challenges we face, can we come up with a consistent, reusable solution to overcome them when we do have to run a cmdlet against a large collection of objects?

To that end, we have developed a highly generic wrapper script, Start-RobustCloudCommand.ps1, that takes your PowerShell command and executes it against the service in a robust manner that seeks to avoid throttles and actively deal with session issues. Couple this with Invoke-Command for quickly getting the list of objects to operate on, and we can get back most of our pre-service PowerShell functionality.

You can download the script here.

First, a key disclaimer: this script will NOT make your commands complete faster. If you are running a command that takes 5 seconds per user and you have 7,200 users to run it against, it will still take at least 10 hours to complete (5 s × 7,200 = 36,000 s). Using this wrapper script will actually slow it down a bit. What it will do is try very hard to ensure that the PowerShell command runs uninterrupted, without failure, and without admin interaction for those 10+ hours.
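A quick way to set expectations before starting a long run is to estimate the total time up front. This sketch multiplies per-object time by object count and optionally adds a fixed pause per object (the 0.5 s figure is only an example); it does not model the wrapper's own overhead, and Get-EstimatedHours is a hypothetical name.

```powershell
# Back-of-the-envelope runtime estimate, in hours:
# (seconds per object + optional pause per object) * number of objects / 3600.
function Get-EstimatedHours {
    param(
        [double]$SecondsPerObject,
        [int]$ObjectCount,
        [double]$PauseSeconds = 0   # e.g. a Start-Sleep added between commands
    )
    ($SecondsPerObject + $PauseSeconds) * $ObjectCount / 3600
}

Get-EstimatedHours -SecondsPerObject 5 -ObjectCount 7200                      # 10
Get-EstimatedHours -SecondsPerObject 5 -ObjectCount 7200 -PauseSeconds 0.5    # 11
```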

How to use the script

Using the wrapper involves three steps:

- Build the PowerShell script block you want to run.

- Collect the objects you want to run against.

- Wrap your script block and execute.

Build the PowerShell Script Block

This is pretty much the same as building any PowerShell command. I like to build and test my commands against a single user before I try to use them with Start-RobustCloudCommand.ps1.

Our first step is nice and easy: just write the PowerShell we want to run against a single user's data returned from Invoke-Command.

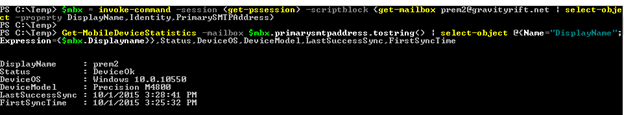

```powershell
$mbx = Invoke-Command -Session (Get-PSSession) -ScriptBlock {Get-Mailbox $mailboxstring | Select-Object -Property DisplayName,Identity,PrimarySMTPAddress}

Get-MobileDeviceStatistics -Mailbox $mbx.PrimarySmtpAddress.ToString() | Select-Object @{Name="DisplayName";Expression={$mbx.DisplayName}},Status,DeviceOS,DeviceModel,LastSuccessSync,FirstSyncTime
```

Here I populated a variable, $mbx, with a single mailbox's information, but I made sure to do it exactly the way I will when I gather the full data set. So I used Invoke-Command and Select-Object to pull only the minimum set of properties I need for running Get-MobileDeviceStatistics.

Using Invoke-Command can at times result in unexpected data types in the results. This means that when I send the resulting object into my command, I can get errors because the cmdlet is unable to deal with the data type presented. Most of these cases can be resolved by using .ToString() to convert the value into a string that the cmdlet can understand.
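The reason is that objects coming back through a remote session are deserialized copies: they keep property values and their string form, but not their original .NET type. This sketch reproduces that locally with PSSerializer instead of a real Exchange session. SmtpAddressStandIn is a hypothetical class used as a stand-in for the Exchange address type; it is not the real type Exchange returns.

```powershell
# Hypothetical stand-in for an Exchange address type, with a custom ToString().
class SmtpAddressStandIn {
    [string]$Local
    [string]$Domain
    [string] ToString() { return $this.Local + '@' + $this.Domain }
}

$original = [SmtpAddressStandIn]@{ Local = 'user'; Domain = 'contoso.com' }

# Round-trip through the same serializer format used for remoting output.
$xml  = [System.Management.Automation.PSSerializer]::Serialize($original)
$copy = [System.Management.Automation.PSSerializer]::Deserialize($xml)

$copy.PSObject.TypeNames[0]        # type name now starts with 'Deserialized.'
$copy -is [SmtpAddressStandIn]     # False: the original type is gone
$copy.ToString()                   # user@contoso.com: a plain string a cmdlet can accept
```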

Next, I ran my Get-MobileDeviceStatistics command and again used Select-Object to get just the output I wanted. I also used the ability of Select-Object (Example 4 here) to create a property on the fly, so that I could include the display name of the mailbox in my output, something that isn't in the output of Get-MobileDeviceStatistics by default.
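Calculated properties can be tried out without a tenant. In this sketch, made-up objects stand in for Get-MobileDeviceStatistics output, and the DisplayName column is filled from a separate $mbx variable, the same pattern the command above uses.

```powershell
# Made-up sample data standing in for one mailbox and its device statistics.
$mbx = [pscustomobject]@{ DisplayName = 'Test User'; PrimarySmtpAddress = 'test.user@contoso.com' }
$stats = @(
    [pscustomobject]@{ DeviceModel = 'Model A'; DeviceOS = 'OS 1'; Status = 'DeviceOk' }
    [pscustomobject]@{ DeviceModel = 'Model B'; DeviceOS = 'OS 2'; Status = 'DeviceOk' }
)

# A calculated property is a hashtable with Name and Expression keys. The expression
# can read any variable in scope (here $mbx), not only the piped object ($_).
$report = $stats | Select-Object @{Name = 'DisplayName'; Expression = { $mbx.DisplayName }}, Status, DeviceOS, DeviceModel
$report | Format-Table
```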

Now that my script is working and giving me the data I want, I just need to make a minor alteration so that it will work in the script block portion of Start-RobustCloudCommand.ps1. The script block isn't able to read the $mbx variable, because inside the script we use a local Invoke-Command -InputObject to pass each object into the command one at a time. So to read the values of these objects, we have to use the automatic variable $input.

Therefore, our command becomes the following:

```powershell
Get-MobileDeviceStatistics -Mailbox $input.PrimarySmtpAddress.ToString() | Select-Object @{Name="DisplayName";Expression={$input.DisplayName}},Status,DeviceOS,DeviceModel,LastSuccessSync,FirstSyncTime
```

The change is easy to make but critical to the script functioning.
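To see how a script block receives its object through $input, it can be run locally with Invoke-Command -InputObject and a made-up row. This sketch shows a variation on the one-liner above, not the wrapper's own code: because $input is an enumerator, it copies the object into an ordinary variable once and then refers to that variable as often as needed.

```powershell
# Made-up stand-in for one row of the CSV gathered in the next step.
$row = [pscustomobject]@{ DisplayName = 'Test User'; PrimarySmtpAddress = 'test.user@contoso.com' }

$block = {
    # $input enumerates the objects passed with -InputObject; take the first one
    # into a normal variable so it can be referenced several times.
    $user = @($input)[0]
    '{0} <{1}>' -f $user.DisplayName, $user.PrimarySmtpAddress.ToString()
}

# No -Session or -ComputerName, so the script block runs on the local machine.
Invoke-Command -ScriptBlock $block -InputObject $row   # Test User <test.user@contoso.com>
```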

Collect the objects you want to run against.

Now that we have our command that we want to run we need to gather all of the objects that we want to run it against.

Here we use what we learned above to quickly and efficiently gather the objects that we are going to operate on.

```powershell
Invoke-Command -Session (Get-PSSession) -ScriptBlock {Get-Mailbox -ResultSize Unlimited | Select-Object -Property DisplayName,Identity,PrimarySMTPAddress} | Export-Csv c:\temp\users.csv

$mbx = Import-Csv c:\temp\users.csv
```

You will notice that I exported the output to a CSV file then imported it back into a variable. When I am operating on a large collection of objects I prefer this method because it gives me a written out collection of objects that I am working with. Later, if something beyond my control goes wrong, I can use the CSV file to restart the script with the objects that I have already run against removed from the file, giving me a manual "resume" functionality.
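The manual "resume" step can also be scripted. This is a minimal sketch that assumes you can recover the addresses already processed (for example from your output CSV or log); here they are simply typed into a hypothetical $alreadyDone list, the sample users are made up, and the files go to the system temp folder rather than c:\temp.

```powershell
$folder   = [System.IO.Path]::GetTempPath()
$usersCsv = Join-Path $folder 'users.csv'

# Made-up sample data in the same shape as the export above.
@(
    [pscustomobject]@{ DisplayName = 'User One';   PrimarySmtpAddress = 'one@contoso.com' }
    [pscustomobject]@{ DisplayName = 'User Two';   PrimarySmtpAddress = 'two@contoso.com' }
    [pscustomobject]@{ DisplayName = 'User Three'; PrimarySmtpAddress = 'three@contoso.com' }
) | Export-Csv -Path $usersCsv -NoTypeInformation

# Hypothetical list of addresses the previous run already handled.
$alreadyDone = @('one@contoso.com', 'two@contoso.com')

# Keep only rows not yet processed and save them as the input for the next run.
$remaining    = Import-Csv -Path $usersCsv | Where-Object { $alreadyDone -notcontains $_.PrimarySmtpAddress }
$remainingCsv = Join-Path $folder 'users-remaining.csv'
$remaining | Export-Csv -Path $remainingCsv -NoTypeInformation

$mbx = Import-Csv -Path $remainingCsv
$mbx
```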

Wrap your script block and execute.

Finally, we put everything we have together and feed it into the wrapper script.

```powershell
$cred = Get-Credential

.\Start-RobustCloudCommand.ps1 -Agree -LogFile c:\temp\10012015.log -Recipients $mbx -ScriptBlock { Get-MobileDeviceStatistics -Mailbox $input.primarysmtpaddress.tostring() | Select-Object @{Name="DisplayName";Expression={$input.Displayname}},Status,DeviceOS,DeviceModel,LastSuccessSync,FirstSyncTime } -Credential $cred
```

Here we can see the script iterating through each of my test users, executing the command, and sending the resulting output to the screen. We can also see that the script is logging everything it does to the screen and to the log file that I specified.

Information on the screen is great for a demo, but generally you want this information in a file so you can review it later. All we have to do is add | Export-Csv c:\temp\devices.csv -Append to the end of our script block, and it will push the output of each command into our CSV. Remember that what we have created is basically a very complex foreach loop, so you need -Append; otherwise, the file will be overwritten for every user.

```powershell
.\Start-RobustCloudCommand.ps1 -Agree -LogFile c:\temp\10012015.log -Recipients $mbx -ScriptBlock { Get-MobileDeviceStatistics -Mailbox $input.primarysmtpaddress.tostring() | Select-Object @{Name="DisplayName";Expression={$input.Displayname}},Status,DeviceOS,DeviceModel,LastSuccessSync,FirstSyncTime | Export-Csv c:\temp\devices.csv -Append } -Credential $cred
```
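The effect of -Append is easy to check locally with a plain foreach loop standing in for the wrapper. In this sketch each pass writes one made-up row; with -Append all three rows survive, whereas without it each pass would replace the file and only the last user's row would remain. The file name is arbitrary and placed in the system temp folder.

```powershell
$devicesCsv = Join-Path ([System.IO.Path]::GetTempPath()) 'devices-demo.csv'
Remove-Item -Path $devicesCsv -ErrorAction SilentlyContinue   # start from an empty file

# Each pass stands in for one user's command inside the wrapper's loop.
foreach ($name in 'User One', 'User Two', 'User Three') {
    [pscustomobject]@{ DisplayName = $name; DeviceModel = 'Model A' } |
        Export-Csv -Path $devicesCsv -Append -NoTypeInformation
}

(Import-Csv -Path $devicesCsv).Count   # 3: one row per user
```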

Conclusion

This solution is designed for customers who have to run cmdlets against large numbers of users in the service (>5,000) and are running into issues. It is a bit complex to get working exactly how you want, but it has been designed to be highly generic so that it can handle most anything you throw at it.

Hopefully, folks with large user sets will be able to start using this to make changes across their tenant or run reports more easily.

Matthew Byrd