The SUITE spot of imaging

First published on MSDN on Mar 29, 2014

There are many ways to deliver images to systems. Some groups still choose to allow technicians to manually build each machine. Most leverage some type of automation such as using the ADK tools directly or leveraging something like Ghost or MDT. If the imaging target is a virtual machine then VM templates are often used. Another very common tool for imaging is the Operating System Deployment (OSD) feature of System Center Configuration Manager (ConfigMgr). With all of these imaging options, which is best? Is it possible to choose just one or should there be different solutions used depending on the scenario? What about physical vs. virtual systems? What about workstations vs. servers? What about the needs of one department vs. the needs of another?

While there are many tools that will help you toward the end goal of building a new system, my argument in this article is that if you own ConfigMgr, then regardless of physical, virtual, workstation, server or departmental needs, OSD should be the centerpiece of your imaging solution. I can hear some of you saying, “But wait, what about the Microsoft Deployment Toolkit (MDT)? We use it for imaging and we also have ConfigMgr.” Let me be clear: MDT is a great tool, and in the absence of OSD it should be THE choice and centerpiece for imaging. When OSD is present, though, it makes more sense to let OSD be the primary driver of imaging and to use MDT to augment the capabilities of OSD. Why should OSD be the primary driver? Think of all of the other capabilities ConfigMgr brings, such as granular scheduling, reporting, bandwidth control, status updates, maintenance windows and more. In addition, most organizations that own ConfigMgr have already done significant work to configure packages, applications and other settings. Why recreate those components in another system or sacrifice some of the goodness built into ConfigMgr? Ultimately, both OSD and MDT are capable of handling any imaging scenario quite effectively, so whatever the scenario, one of these tools will meet your imaging needs.

The concepts of thick vs. thin imaging come into focus because of the rich capabilities of OSD and MDT to deliver ‘on the fly’ customization during image deployment. You will commonly hear arguments for both approaches. In brief, thick imaging describes the traditional approach of configuring a base image with everything the target system might need and deploying that fully configured image. This approach leads to the need to manage a library of images, which is a manual and potentially error-prone process. Thin imaging is the concept of maintaining the most basic of base images, even as basic as using the install.wim file directly from the OS media, and customizing that image on the fly during the imaging process. The thin imaging approach is where OSD and MDT really shine. Thin imaging allows organizations to get out of the business of image management and instead be in the business of managing the imaging process. This approach has many advantages, including removing human error during imaging, having the imaging process fully documented in the task sequence, moving from managing the image to managing the task sequence, change control and more. Then there is the hybrid approach, where some items are baked into the image and other items are delivered ‘on the fly’ during image deployment. This approach is not uncommon in organizations today, and either OSD or MDT can handle it well. The challenge, though, is that the hybrid model requires an organization to keep one ‘foot’ in the ‘pool’ of thick imaging and the other ‘foot’ in the ‘pool’ of thin imaging. While the hybrid model has perceived benefits, I would encourage you to evaluate the gains you would make by going fully thin instead of trying to do both! OK, enough about that.

I mentioned earlier that OSD should be the centerpiece of imaging whether physical or virtual. Really? Aren’t there tools specific to VMware or Hyper-V that allow deploying ‘images’ (templates in a virtual world) to virtual systems? Yes. It is common practice to deploy templates to create virtual machines. VMware has its template solution and Virtual Machine Manager (VMM) has its own toolset. Whether you are leveraging VMware, Hyper-V or both for your virtual solution, VMM is a fantastic tool and I would encourage you to take a look at it. VMM provides seamless management of both environments and supports Citrix as well! The capability of VMM to manage VMware, Hyper-V and Citrix environments is important to understand because the example scenario I will discuss shortly, while focused on Hyper-V, is equally applicable to VMware or Citrix environments. The tool and processes I will demonstrate simply leverage the native VMM commands, which can be brought to bear on any of the three supported environments. OK, if these tools can manage their own imaging through templates, why do we need to leverage OSD? Good question. A question back to you: what type of image deployment experience do you get when deploying templates to your virtual systems? Thick or thin? The answer is mostly thick. Leveraging templates puts you right back in the place of maintaining an image library instead of managing the imaging process. And templates aren’t nearly as robust an imaging option as OSD or even MDT. In addition, think about the work that has already been invested in ConfigMgr in terms of building packages, applications, software updates and maybe even building out OSD. Why duplicate the effort?

I can hear you saying, “OK, Steve, assuming for a minute I’m considering allowing OSD to be the centerpiece of imaging, how do I pull it all together? We don’t want our technicians having to figure out the virtual vs. physical differences or toolsets.” Great, I’m glad you asked. The title of this article is ‘The SUITE spot of imaging’. System Center is a SUITE of products that can be leveraged together to achieve some really cool results. In the remainder of this article I will show a concept of how you could leverage ConfigMgr OSD, VMM and System Center Orchestrator (SCORCH) to provide a robust imaging solution that effectively meets the needs of physical, virtual, server, workstation and other builds while keeping the process very simple for the technician or even the end user. Let’s get started.

NOTE: The example shown here is intended to illustrate conceptually how you might approach building a unified imaging solution. The example works effectively but doesn’t cover all of the potential needs for imaging. The example shows bare metal imaging but could be modified fairly easily to also drive non-bare metal imaging. You will need to extend the example or build your own from scratch to address your specific needs. Either way, the time invested will pay dividends for your imaging efficiency.

We need a few components to make all of this work. I’ll list them first and then show each of them in use in the example that follows.

· A task sequence to drive the imaging process. This is built in the OSD environment.

· An image to deploy. This is derived either by capturing a base image or simply deploying the install.wim from whatever source media is of interest for the deployment.

· A blank virtual machine template loaded with the ConfigMgr boot media. WAIT, you said you need a template!!!! You just argued against templates! Very true, hang with me!

· An Orchestrator runbook that will unify the imaging process.

OK, let’s get started linking this all up.

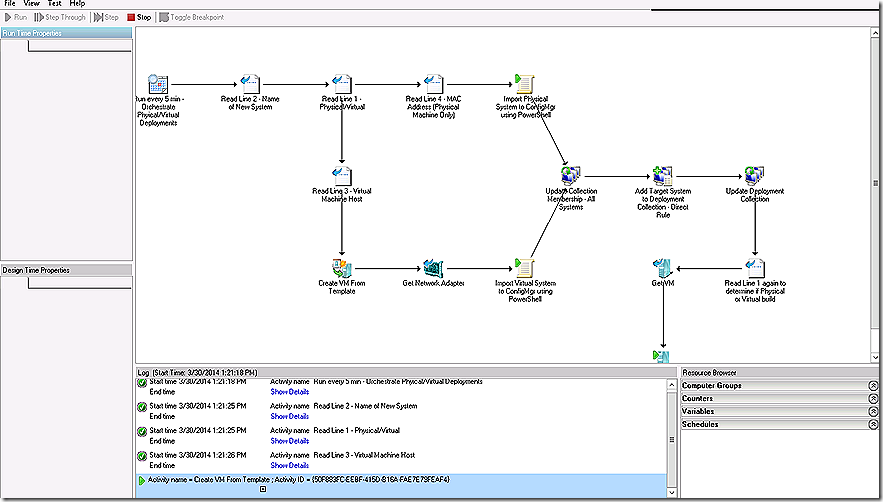

In this scenario we are looking to build a solution that will handle imaging of machines, whether virtual or physical and whether server or workstation. The lead component that drives this solution is SCORCH. The runbook we will use in this demo is below.

Note: If you haven’t worked with SCORCH you are missing out on a world of possible ways to streamline operations – and not just imaging or ConfigMgr related – in your environment!

How does this runbook work? First, notice that all but 2 steps leverage available Orchestrator integration pack components. Leveraging integration pack components is an easy way to get started with Orchestrator, but you aren’t limited to them. Much of the flexibility and real power of Orchestrator comes from its ability to run scripts, including VBScript, PowerShell, JavaScript and more. Another great thing about scripts in SCORCH is the ability to break them into succinct logical components that are easier to visualize than hundreds of lines of code (though you can do that too).

For our imaging example, consider the scenario where we have an input source that a technician can use to provide the details of imaging. The technician never needs to touch the ConfigMgr or VMM consoles, just provide imaging information. In this example I’m leveraging a text file for simplicity. In production you will likely want a more robust solution such as a web page, SharePoint list, Excel spreadsheet or whatever else meets your needs. This input source serves as a template in which the technician provides whatever detail is needed. In this example, that detail is whether the system is physical or virtual, the new name of the system, the name of the proposed VM host (if virtual) and the MAC address of the new system (if physical). In production you might want to add detail such as available packages and applications that could be installed, time zone information, department detail or more. In a previous article I worked through how to build a web page to drive the imaging process, which might be of interest. It is available here. SCORCH wasn’t available at the time or I would have leveraged it instead of the scripting you see there.

OK, so how does this runbook work? Let’s go step by step.

1 – Step 1 is used to determine the run behavior of the runbook. In this case I’m using the ‘Monitor Date/Time’ Scheduling object. For ongoing production the runbook would run every 5 minutes. The idea on the schedule is that the runbook will monitor a location – such as a web page, SharePoint site, Excel spreadsheet or other location to determine if there is any imaging work to be done. As mentioned, in my simple case imaging will be controlled by a text file.
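
To make the polling idea concrete, here is a minimal PowerShell sketch of the check a scheduled run might perform against the text file. It assumes that ‘work to do’ simply means the file exists and has at least one non-blank line; the function name Test-ImagingRequestPending is hypothetical and is not part of any integration pack.

```powershell
# Hypothetical helper: does the monitored request file contain work?
# Assumption: "pending" means the file exists and has a non-blank line.
function Test-ImagingRequestPending {
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )

    # No file means nothing to do on this 5-minute cycle.
    if (-not (Test-Path -LiteralPath $Path -PathType Leaf)) {
        return $false
    }

    # Keep only lines that contain something other than whitespace.
    $lines = @(Get-Content -LiteralPath $Path |
        Where-Object { -not [string]::IsNullOrWhiteSpace($_) })

    return $lines.Count -gt 0
}
```

A production version would also mark or archive a request once it has been processed so that the next cycle does not pick it up again.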

The text file I’m using to drive the imaging process is below.

```text
Virtual
TestSCORCHVirt
labsrv64-6.contoso.com
1C:3B:E5:F1:F1:38
```

· The first line of the text file will either read physical or virtual. In this example a virtual machine is being created.

· The second line of the text file specifies the name of the system being built and applies regardless of whether building a physical or virtual system.

· The third line only applies if creating a virtual system. If creating a physical system the line needs to be included to ensure proper spacing but can be blank or contain any text of interest as it will not be read during the process.

· The fourth line only applies if building a physical machine and does not need to be populated if building a virtual system. The MAC address is used to import the physical machine into the ConfigMgr database. Note that it would also be possible to identify a system by its SMBIOS GUID if preferred, but the runbook would need a couple of minor adjustments for this to work.
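
The runbook reads these lines one at a time with the ‘Read Line’ object. To show the same rules in one place, here is a PowerShell sketch that turns the four lines into a single object and checks each branch’s required field. The function name and error messages are hypothetical; the demo runbook does not validate the file this way.

```powershell
# Hypothetical parser for the four-line request file:
#   line 1 = Physical or Virtual, line 2 = computer name,
#   line 3 = VM host (virtual only), line 4 = MAC address (physical only).
function ConvertFrom-ImagingRequest {
    param([string[]]$Lines)

    # Pad so that missing trailing lines read as empty strings.
    $padded = @($Lines) + @('', '', '', '')
    $type   = "$($padded[0])".Trim()
    $name   = "$($padded[1])".Trim()
    $vmHost = "$($padded[2])".Trim()
    $mac    = "$($padded[3])".Trim()

    if ($type -notin @('Physical', 'Virtual')) {
        throw "Line 1 must be 'Physical' or 'Virtual', got '$type'."
    }
    if ($name -eq '') {
        throw 'Line 2 (computer name) is required.'
    }

    # Each branch validates only the line it actually reads.
    if ($type -eq 'Virtual' -and $vmHost -eq '') {
        throw 'Line 3 (VM host) is required for a virtual build.'
    }
    if ($type -eq 'Physical' -and $mac -notmatch '^([0-9A-F]{2}:){5}[0-9A-F]{2}$') {
        throw "Line 4 must be a colon-separated MAC address, got '$mac'."
    }

    [pscustomobject]@{
        Type         = $type
        ComputerName = $name
        VMHost       = if ($type -eq 'Virtual') { $vmHost } else { $null }
        MacAddress   = if ($type -eq 'Physical') { $mac } else { $null }
    }
}
```

For example, `ConvertFrom-ImagingRequest (Get-Content .\request.txt)` (with a hypothetical request.txt) would return an object whose Type property selects the physical or virtual branch.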

2 – Step 2 is used to read the input text file. In my case I leverage the ‘Read Line’ Text File Management object. This step is very flexible as you don’t have to read the lines in order – you can choose what you need when you need it. Here we read line 2 which gives us the proposed name of the system to be imaged.

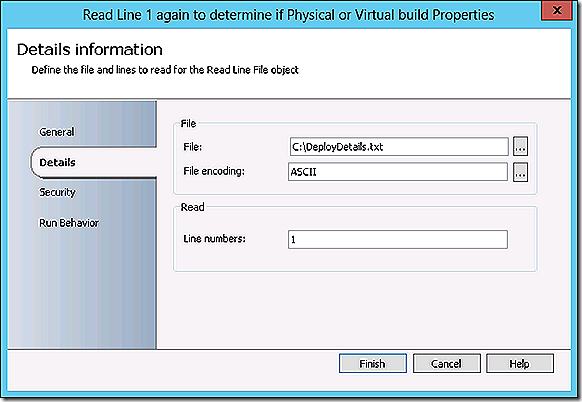

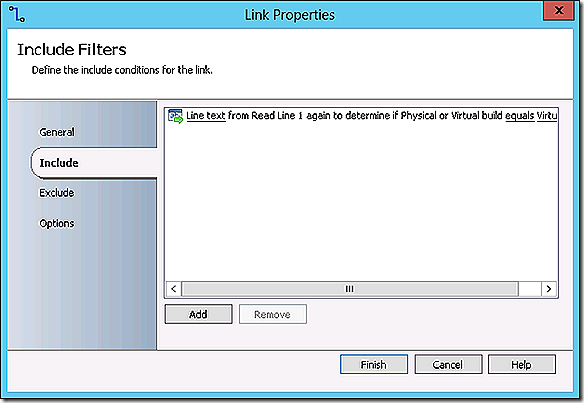

3 – Step 3 also leverages the ‘Read Line’ object and this time reads line 1. Remember that line 1 is where we specify whether we are building a physical or a virtual machine. I’m sure you have noted the arrows describing the flow of the runbook. These arrows need a bit of explanation. The arrows are great to indicate the flow of processing but they are more than just that! If you double-click an arrow it is possible to specify logical flow conditions. Here we have two options – physical or virtual. The conditions for flow direction are specified as follows.

If we are building a virtual machine we follow the arrow pointing down.

If we are building a physical machine we follow the arrow pointing to the right.

In our test case we are building a virtual machine – but let’s follow the steps for a physical machine for a bit.

8 – Step 8 takes place after step 3, which is our decision step about whether we are building a physical or a virtual system. Now that we know we are building a physical system we will again leverage the ‘Read Line’ object to obtain the MAC address of the physical system to be built.

9 – When we are building a bare metal physical machine we need to ensure it is imported into the ConfigMgr database or leverage unknown computer support. Some additional error checking here would be appropriate to determine if the import is truly necessary – it could be the system is already in the database and, therefore, known.
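
The additional error checking suggested above could look something like the following sketch. It assumes you have already gathered existing device records (for example, from a ConfigMgr query) as objects that expose Name and MacAddress properties; those property names are illustrative rather than a ConfigMgr schema, and Test-ImportRequired is a hypothetical helper.

```powershell
# Hypothetical check: is an import needed, or is the machine already known?
# $ExistingDevices: objects with Name and MacAddress properties (assumed shape).
function Test-ImportRequired {
    param(
        [Parameter(Mandatory)] [string]$ComputerName,
        [string]$MacAddress,
        [object[]]$ExistingDevices = @()
    )

    foreach ($device in $ExistingDevices) {
        # A matching name or MAC address means the record already exists.
        if ($device.Name -eq $ComputerName) { return $false }
        if ($MacAddress -and $device.MacAddress -eq $MacAddress) { return $false }
    }
    return $true
}
```

Returning a Boolean keeps the decision easy to wire into a link condition, so the import step only runs when the result is true.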

Since the provided ConfigMgr integration pack has no object for importing a machine into the database (although this step is available through third-party integration packs), we will leverage the ‘Run .NET script’ object and supply PowerShell commands.

The full text of the PowerShell commands is below. Notice the seeming gibberish embedded in the PowerShell script. It appears because we are leveraging Published Data that previous steps have stored in the SCORCH Data Bus. From the snip in the window above you will see that the ComputerName option is specified from Published Data, as is the MAC address. Accessing Published Data is simple: place your cursor where needed, right-click and select Subscribe, which lets you leverage Published Data from the current or previous steps as well as available variable data. Simply put, Published Data is information that previously executed steps of the runbook have published to the Data Bus. Variable data is information the runbook may need to get started, supplied either automatically by whatever process initiates the runbook (such as the MDT ‘execute runbook’ task sequence step) or manually by a user initiating the runbook. Not all runbooks need to leverage published data or variables, but many do.

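```powershell
# Orchestrator replaces each Published Data token before this script runs.
# The script block runs in a separate powershell.exe process, a common
# workaround because the ConfigMgr module typically needs a newer PowerShell
# version than the one hosting the Run .NET Script activity.
$PSE = Powershell {
    # SMS_ADMIN_UI_PATH ends in \i386; dropping those 5 characters gives the
    # console bin folder that holds ConfigurationManager.psd1.
    Import-Module ($Env:SMS_ADMIN_UI_PATH.Substring(0, $Env:SMS_ADMIN_UI_PATH.Length - 5) + '\ConfigurationManager.psd1')

    # Switch to the site drive (site code CAS in this lab).
    Set-Location CAS:

    # Import the machine by name and MAC address into the target collection.
    Import-CMComputerInformation -ComputerName "\`d.T.~Ed/{2F098AF5-596D-4A72-9489-6901DAA13634}.LineText\`d.T.~Ed/" -MacAddress "\`d.T.~Ed/{D367ADF3-1542-4305-A6BE-E223511E22F7}.LineText\`d.T.~Ed/" -CollectionName "SCORCHVMPhysicalDemo"
}
```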
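
Conceptually, Orchestrator swaps each subscription token for the published value before the script executes. Each token wraps a GUID (which appears to identify the publishing activity) and a property name such as LineText. The sketch below imitates that substitution with a hashtable of sample values, so you can see what the script looks like by the time PowerShell runs it. Expand-PublishedDataToken is hypothetical and says nothing about how Orchestrator is implemented internally.

```powershell
# Hypothetical illustration of Published Data substitution.
# Keys of $PublishedData look like '<GUID>.<Property>', e.g. '...}.LineText'.
function Expand-PublishedDataToken {
    param(
        [Parameter(Mandatory)] [string]$ScriptText,
        [Parameter(Mandatory)] [hashtable]$PublishedData
    )

    # Token shape: \`d.T.~Ed/{GUID}.Property\`d.T.~Ed/ (property may contain spaces).
    $pattern = '\\`d\.T\.~Ed/\{([0-9A-Fa-f-]+)\}\.(.+?)\\`d\.T\.~Ed/'

    $evaluator = [System.Text.RegularExpressions.MatchEvaluator] {
        param($m)
        $key = '{0}.{1}' -f $m.Groups[1].Value, $m.Groups[2].Value
        if (-not $PublishedData.ContainsKey($key)) {
            throw "No published data for '$key'."
        }
        [string]$PublishedData[$key]
    }

    return [regex]::Replace($ScriptText, $pattern, $evaluator)
}
```

Failing loudly on an unknown key mirrors the practical rule that a subscription must point at data an earlier step actually published.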

The next step after import is to adjust collection membership to include the newly imported machine. Those steps are common between physical and virtual imaging so we will continue discussion of those steps after we catch up with the virtual machine imaging branch.

OK, so now back to the virtual machine.

4 – Step 4 is the first stop in the flow of specifically building a virtual machine. We start down the virtual branch and again leverage the ‘Read Line’ action to pull in the name of the system that will serve as the host for the virtual machine being built.

5 – We are building a virtual machine, so in this step we leverage the ‘Create VM from Template’ object that is available in the Virtual Machine Manager integration pack. In this step we specify the name of the connection that has been set up to allow access to the Virtual Machine Manager system. We also specify the other required properties, including the Destination, VM Name and Source Template. The Destination and VM Name are provided by reading the text file and storing the data in the Data Bus, which is accessible by subscribing to Published Data.

At this point I can hear you saying, “Wait! In the introduction to this article you mentioned that VM templates are essentially thick imaging and thick imaging wasn’t the best approach! So why are you using VM templates?” OK, good question. Deploying a template is the easiest way to stage a new virtual system. To leave the imaging itself to ConfigMgr OSD, the template is blank, with just the settings required to get the virtual system up and running. You could either stage the template manually and initiate imaging manually, or you could leverage automation to stage and prepare the VM for imaging. Here we are choosing automation. Let’s take a look at the template.

Opening up the Virtual Machine Manager environment (remember, VMM fully supports managing VMware, Hyper-V or Citrix environments), we navigate to the Virtual Machine Manager library and select properties of our template, BareMetal-Large. The BareMetal-Large template is a blank virtual machine with all of the settings needed for the VM loaded as part of the template. The system also has a 60 GB blank hard disk. Note that the DVD drive holds a ConfigMgr 2012 R2 OSD boot ISO file.

This template is what our ‘Create VM from Template’ step in the Orchestrator runbook will move out to the VM host system.

6 – In step 6 we have finished deploying the template and now we need to start work on getting the newly staged system imported into the ConfigMgr database in preparation for imaging. To do this we will leverage the ‘Get Network Adapter’ object from the Virtual Machine Manager Integration pack. All we need to specify in this step is the name of the virtual machine which is already in the Data bus from reading the text file earlier.

7 – Now that the information about the network adapter for our specific virtual machine is stored in the Data bus, we can pull out any number of details. All we care about here is the MAC address. Similar to the import PowerShell step we saw earlier, here we simply subscribe to the published data from step 6 and pick out the MAC address.

The full script is the same as the earlier one; the only difference is that the MAC address now comes from the Published Data of step 6 instead of from the text file.

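```powershell
# Same as the physical import; only the -MacAddress token differs, pointing
# at the MAC Address property published by the Get Network Adapter step (step 6).
$PSE = Powershell {
    # Load the ConfigMgr module from the console's bin folder.
    Import-Module ($Env:SMS_ADMIN_UI_PATH.Substring(0, $Env:SMS_ADMIN_UI_PATH.Length - 5) + '\ConfigurationManager.psd1')

    # Switch to the site drive (site code CAS in this lab).
    Set-Location CAS:

    # Import the VM by name and by the MAC address VMM reported for its adapter.
    Import-CMComputerInformation -ComputerName "\`d.T.~Ed/{2F098AF5-596D-4A72-9489-6901DAA13634}.LineText\`d.T.~Ed/" -MacAddress "\`d.T.~Ed/{2A525C66-ADB9-488E-B669-86D543AC33FA}.MAC Address\`d.T.~Ed/" -CollectionName "SCORCHVMPhysicalDemo"
}
```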
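
The VMM adapter data and the technician’s input file are not guaranteed to format MAC addresses identically, so a defensive runbook might normalize the value before the import. Here is a sketch that assumes the colon-separated, upper-case form used in the input file is the one you want to pass on. Rejecting the all-zero address is an extra assumption, guarding against an adapter that has not yet been given a real address; ConvertTo-ColonMacAddress is a hypothetical name.

```powershell
# Hypothetical helper: normalize a MAC address to XX:XX:XX:XX:XX:XX.
# Accepts colon, hyphen or dot separators, or none at all.
function ConvertTo-ColonMacAddress {
    param([Parameter(Mandatory)] [string]$MacAddress)

    if ($MacAddress -notmatch '^[0-9A-Fa-f:.\-]+$') {
        throw "'$MacAddress' contains characters that are not hex digits or separators."
    }

    # Drop the separators and upper-case the remaining hex digits.
    $hex = ($MacAddress -replace '[:.\-]', '').ToUpperInvariant()
    if ($hex.Length -ne 12) {
        throw "'$MacAddress' does not contain exactly 12 hex digits."
    }

    # Assumption: an all-zero address means no usable MAC has been assigned.
    if ($hex -eq '000000000000') {
        throw 'All-zero MAC address; the adapter has no usable address yet.'
    }

    # Re-insert a colon between each pair of digits.
    (0..5 | ForEach-Object { $hex.Substring($_ * 2, 2) }) -join ':'
}
```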

At this point all of the specialized work of setting up imaging for a physical or a virtual machine is complete and we can unify the runbook workflow again. This begins in step 10.

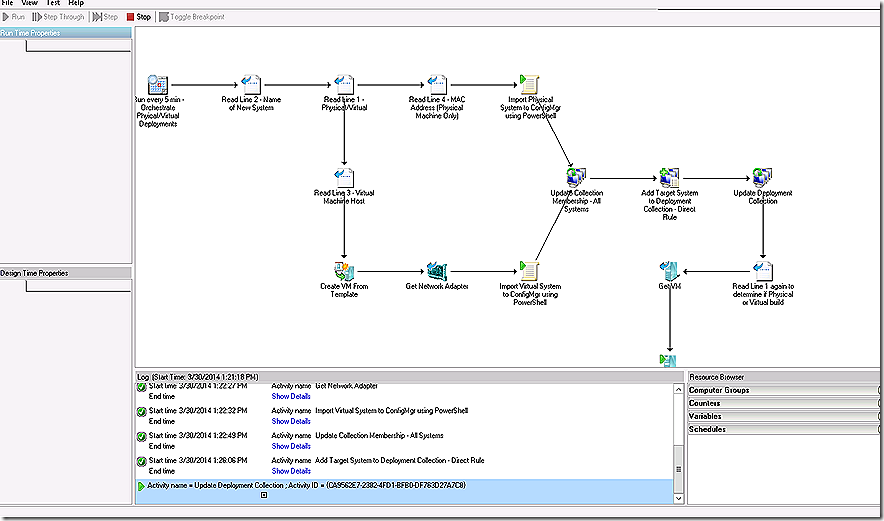

10 – With our machine now imported, whether physical or virtual, it’s time to update and refresh the All Systems collection. This update and refresh will result in the imported machine being made a member of the All Systems collection. Since our collection used as a target for image deployment is limited to the All Systems collection we must ensure All Systems is fully updated before we can proceed to add our direct membership rule to the targeting collection.

The Update Collection Membership integration pack item requires you to identify the collection to update. The Collection Value Type field allows specifying the collection by either Name or ID. The Wait for Refresh Completion option is either True or False. In my case I have set it to True, which means the runbook waits until the collection update is complete before proceeding. This is important because the next couple of steps require membership in the All Systems collection to be up to date.
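
Setting Wait for Refresh Completion to True amounts to ‘poll until done, then continue’. If you ever need the same behavior inside a script step, a generic poll-with-timeout loop is a reasonable pattern. This sketch is hypothetical: it takes the condition as a script block (for example, ‘the new machine now shows up in All Systems’) and makes no ConfigMgr calls itself. The 600-second timeout and 15-second interval are arbitrary defaults.

```powershell
# Hypothetical poll-with-timeout helper.
# Returns $true as soon as $Condition is true, or $false on timeout.
function Wait-Condition {
    param(
        [Parameter(Mandatory)] [scriptblock]$Condition,
        [int]$TimeoutSeconds = 600,
        [int]$IntervalSeconds = 15
    )

    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while ($true) {
        # Check first so an already-satisfied condition returns immediately.
        if (& $Condition) { return $true }
        if ((Get-Date) -ge $deadline) { return $false }
        Start-Sleep -Seconds $IntervalSeconds
    }
}
```

Returning $false instead of throwing on timeout lets the runbook route that outcome down its own link, for example to a notification step.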

Note: In a hierarchy this step may take several minutes. Notice that the connection is to the CAS. This means that for the collection update to complete, a collection update request must be sent through replication to the child primary site (which is where the collection evaluator runs), the update takes place on the child primary site, and replication then sends the updated collection membership back to the CAS.

It would be very easy to specify a direct connection to the primary instead of the CAS. If doing so, all ConfigMgr processes in the runbook would likely need to run against the primary. In production, leveraging the primary is recommended and saves some of the time spent on replication. Where multiple primary sites are present, though, some minimal additional logic would be needed to specify which primary to use for a given run of the runbook.
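
That additional logic could be as small as a lookup table. In this sketch, a hypothetical Location value from the request selects the primary site code, falling back to the CAS used in the demo; the Location field and the site codes P01 and P02 are placeholders. The ConfigMgr PowerShell module exposes each site as a drive named after its site code, which is why the import scripts switch to the CAS: drive before running.

```powershell
# Hypothetical site selection for hierarchies with several primary sites.
# Location values and site codes below are placeholders, not real sites.
function Resolve-CMSiteDrive {
    param(
        [string]$Location,
        [hashtable]$SiteMap = @{ 'East' = 'P01'; 'West' = 'P02' },
        [string]$DefaultSiteCode = 'CAS'
    )

    # Fall back to the default site when the location is missing or unknown.
    $siteCode = $DefaultSiteCode
    if ($Location -and $SiteMap.ContainsKey($Location)) {
        $siteCode = $SiteMap[$Location]
    }

    # Site drives are named after the site code, e.g. "P01:".
    return "$($siteCode):"
}
```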

11 – With the membership of the All Systems collection updated the imported system can now be added to the collection targeted by our task sequence. The Add Collection Rule integration pack item can be used for this.

The collection name for adding the direct membership rule is SCORCHVMPhysicalDemo, specified by Name; the Collection Value Type option would also allow specifying it by Collection ID if preferred. The Rule Name field specifies the name of the direct membership rule being added. Note that the first portion of the line is fixed text that stays the same for each addition, while the last portion derives its value from Published Data, in this case the machine name read earlier from the text file. The Rule Type can be Direct, as in this case, or Query, Include or Exclude. The Rule Definition specifies the criteria for the rule being added. Here, simply leveraging the name from the Published Data makes sense, but several other criteria could be specified. Available criteria include Resource IDs, Resource Names, Query ID, Query String, Collection IDs and Collection Names.
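
To make those fields concrete, here is a sketch that models the inputs of the Add Collection Rule step as one object so they can be checked before the runbook calls ConfigMgr. The field names mirror the settings described above; the function name is hypothetical, and the ‘SCORCH-’ rule-name prefix is a placeholder because the demo’s actual fixed text is not shown.

```powershell
# Hypothetical model of the Add Collection Rule step's inputs.
# ValidateSet restricts values to the options the step offers.
function New-CollectionRuleRequest {
    param(
        [Parameter(Mandatory)] [string]$CollectionName,
        [Parameter(Mandatory)] [string]$ComputerName,
        [ValidateSet('Name', 'ID')] [string]$CollectionValueType = 'Name',
        [ValidateSet('Direct', 'Query', 'Include', 'Exclude')] [string]$RuleType = 'Direct',
        [string]$RuleNamePrefix = 'SCORCH-'
    )

    [pscustomobject]@{
        Collection          = $CollectionName
        CollectionValueType = $CollectionValueType
        # Fixed text first, then the per-machine part from Published Data.
        RuleName            = $RuleNamePrefix + $ComputerName
        RuleType            = $RuleType
        # For a direct rule the demo uses the machine name as the definition.
        RuleDefinition      = $ComputerName
    }
}
```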

12 – With the All Systems collection updated and the direct membership rule added step 12 now simply forces an update on the collection that will be used for targeting the task sequence. The only difference from the previous update step is the collection name being updated.

Once the update completes, the added system is ready for imaging. All that remains is for the system to be switched on and booted into Windows PE. For a physical system, someone powers on the system and either PXE boots it or supplies boot media. For a virtual system, the runbook powers it on. Since the virtual machine already has the boot media as part of the template and the boot media is enabled for unattended installation, imaging starts immediately without manual assistance.

13 – If the system being built is a virtual machine then the extra step of turning it on needs to be handled. For simplicity this step simply again reads the input file to determine if this is a physical or virtual system and, if virtual, continues with subsequent steps which will power it on.

14 – Processing continues here only if the system being imaged is a virtual machine. This step leverages the Get VM Properties integration pack object to get the VM just staged by name. The Name definition is pulled from the data bus based on inputs read earlier.

15 – Get VM Properties (step 14) pulls a full set of details about the specified VM, including the unique VM ID property, which the Start VM step needs. Step 15 runs Start VM, referencing that VM ID from the data bus as populated by the previous step.
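
As a recap of the flow, the sketch below lists which numbered steps execute for each branch, following the walkthrough above. It is only a summary in code form (the function name is hypothetical), but it makes the shared middle section, steps 10 to 13, easy to see.

```powershell
# Hypothetical summary of the demo runbook's step order per branch.
function Get-RunbookStepRoute {
    param(
        [Parameter(Mandatory)]
        [ValidateSet('Physical', 'Virtual')]
        [string]$Type
    )

    $start  = @(1, 2, 3)         # schedule, read name, read Physical/Virtual
    $shared = @(10, 11, 12, 13)  # update All Systems, add rule, update target, re-check type

    if ($Type -eq 'Virtual') {
        # Read host, create VM from template, get adapter, import; then get VM and start it.
        return $start + @(4, 5, 6, 7) + $shared + @(14, 15)
    }
    # Read the MAC address, then import the physical machine.
    return $start + @(8, 9) + $shared
}
```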

At this stage the physical or virtual system being imaged has started up and, because the system in question is a part of the collection targeted by the task sequence deployment, will begin to image. So what specifically is in the task sequence? Nothing special – just a basic image deployment but with a twist at the end.

This is just a simple task sequence that will either deploy Windows 8 or Server 2012 and then provide customization of each based on variables associated with the collection. This is shown simply to illustrate that a single task sequence could be leveraged to handle deployment of different Operating Systems including customization of those Operating Systems based simply on variable input. And, the sample runbook could be easily extended to handle and work with the needed variables supplied as part of the input file, SharePoint site, etc.

Note: There are some caveats to allowing multiple Operating Systems to be imaged by a single task sequence, such as the extra download effort (depending on settings) to ensure both images are present before the task sequence begins running. I won’t go into those caveats in detail here.

The task sequence runs and, at the very end, has the highlighted step to initiate cleanup of the system if it is a virtual machine. In this case the external utility SCOJobRunner, a free download, is used to call back into Orchestrator and start a runbook that handles the cleanup. SCOJobRunner is very useful in some scenarios, such as imaging Linux as described in my previous blog post here. In most cases, though, and as mentioned earlier, the preferred way to call an Orchestrator runbook from a task sequence is the MDT integration’s Execute Runbook step as shown.

However the runbook is called, it’s important that the step has a condition so that it only triggers if the virtual machine registry key is populated.
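
The post does not name the registry key, so treat the following as an assumption: on a Hyper-V guest with integration services, Windows publishes values such as VirtualMachineName under HKLM\SOFTWARE\Microsoft\Virtual Machine\Guest\Parameters, which makes that key a natural candidate. The sketch shows the equivalent check in PowerShell; in the task sequence itself you would more likely express it as a registry setting condition on the step. VMware or Citrix guests would need a different test, and the function name is hypothetical.

```powershell
# Hypothetical "am I a VM?" check for the cleanup step's condition.
# Assumption: Hyper-V guest with integration services; the path is a
# parameter so the logic can be exercised without a real VM.
function Test-IsVirtualMachineGuest {
    param(
        [string]$RegistryPath = 'HKLM:\SOFTWARE\Microsoft\Virtual Machine\Guest\Parameters'
    )

    # Test-Path returns $false (rather than failing) when the key is absent.
    return [bool](Test-Path -LiteralPath $RegistryPath)
}
```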

This leads the discussion back to Orchestrator, where a very simple cleanup runbook shuts down the virtual machine. This cleanup runbook isn’t practical in production, but it does illustrate the interplay that can be achieved between task sequencing and SCORCH. Even in the simple example shown here, Orchestrator starts the process, invokes Virtual Machine Manager and ConfigMgr along the way, and initiates the process that starts the task sequence; the task sequence runs and then calls SCORCH again to finish up some processing. This also answers a long-standing question: is it possible to have one task sequence call another? No, and yes. A task sequence cannot call another task sequence, but with SCORCH a task sequence can call a runbook. So, in effect, one task sequence can now call another ‘task sequence’, since a runbook is effectively a task sequence.

All of this is really cool, so now it’s time to see the runbook in action. The runbook is initiated using the Runbook Tester component of SCORCH.

The VM template is deployed to the VM host specified in the input file.

Checking the VM host, we can see the system being created, but it is powered off because it is still being deployed.

With the VM template deployed, the All Systems collection is updated, the direct membership rule is added to the target collection and the target collection is updated.

The runbook proceeds to ‘power on’ the virtual system.

The virtual system boots from the included boot media, finds the required task sequence deployment and begins to work through it.

And voila! The image deployment is underway, totally hands off, and it leverages all of the work already done preparing the OSD environment for physical imaging. Using this approach we take full advantage of the capabilities of ConfigMgr and bring consistency to virtual and physical image management! The demo runbook is attached for reference.
