Building Microservices with AKS and VSTS – Part 3

Published

Feb 12 2019 03:55 PM

First published on MSDN on Apr 10, 2018

We're still working the microservice game. When we left things last time we had a service running inside a managed Kubernetes (AKS) cluster, but no apparent way to browse to it, which is not a happy state for the end user.

I suggest skimming parts 1 & 2 if you haven't done so before:

https://blogs.msdn.microsoft.com/azuredev/2018/03/27/building-microservices-with-aks-and-vsts-pa...

https://blogs.msdn.microsoft.com/azuredev/2018/04/03/building-microservices-with-aks-and-vsts-pa...

Clearly there are a couple of things we need to fix for our microservice to become a regular web page:

- We need an external IP address accessible over the Internet.

- We would like to assign a DNS URL to the IP address.

- We should assign a certificate to our service for encrypting the traffic.

My opinion is that when architects get glossy-eyed waxing lyrical about microservices they tend to omit minor details like these. I'm not saying this is impossible to fix, but developers usually don't have to deal with this when deploying classic monoliths, and mentally this means more "boxes" to keep track of. It may also lead to a discussion of its own about whether this is something the developers can be trusted to handle themselves, or if it should be handled by operations.

In a larger organization where the cluster is already up and running, and you the developer are just handed instructions on how to get going, it may indeed already have been taken care of. But for the context of this series you're running a one-man shop and need to do things by yourself :) (Those of you who have worked in, or still work in, big corp know that there are still days where it feels like you're on your own, so this should feel realistic.)

While I have run into network admins who have enough public IPv4 addresses to supply an entire town, the most common pattern is to have a limited number of external facing addresses and port openings exposed, and have all traffic to the back-end flow through some sort of aggregator. (I use the term "aggregator" because it could be any combination of firewalls, load balancers, and routers.) Kubernetes is no different in this sense. You can assign a public IP for each service, as long as your cluster provider doesn't limit you, but you probably don't have a good design if you set it up like that.

But how do we go about making this work?

Referring to the yaml we created in the previous post we had this definition as part of it (just an extract):

[code language="csharp"]

apiVersion: v1
kind: Service
metadata:
  name: api-playground
spec:
  ports:
  - port: 80
  selector:
    app: api-playground

[/code]

By adding type: LoadBalancer to the spec you instruct Kubernetes that you want a proper IP address assigned:

[code language="csharp"]

apiVersion: v1
kind: Service
metadata:
  name: api-playground
spec:
  type: LoadBalancer
  ports:
  - port: 80
  selector:
    app: api-playground

[/code]

Note that it is specific to the cluster implementation if this is a "true" external IP, or just external to the cluster (which could still be from a private address space). In managed Kubernetes Azure provides addresses from the public address space.
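As a minimal sketch of the change above, the snippet below builds the same Service definition as a Python dictionary and optionally adds type: LoadBalancer. The helper name build_service and its expose parameter are made up for illustration; only the field names come from the yaml shown above. Since kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied as-is.

```python
import json


def build_service(name: str, port: int = 80, expose: bool = False) -> dict:
    """Return a Service manifest; expose=True adds type: LoadBalancer."""
    spec = {
        "ports": [{"port": port}],
        "selector": {"app": name},
    }
    if expose:
        # Ask the cluster provider to assign an externally reachable IP address.
        spec["type"] = "LoadBalancer"
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": spec,
    }


internal = build_service("api-playground")
external = build_service("api-playground", expose=True)

print(json.dumps(external, indent=2))
```

The only difference between the two manifests is the single type field, which is why it is easy to try out and easy to remove again.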

If you want to test this out immediately you can do so as an exercise of your own, but I will skip it for now since it really is just an intermediary step. Instead, we want to implement the pattern I referred to earlier, where the traffic goes through a middleman. For instance, we want the internal IP addresses to be able to change as services are updated, redeployed and so on, while the external IP stays fairly static. Correspondingly, keeping the external DNS records synchronized is easier when the IP addresses don't change too often. While not a microservice concern per se, troubleshooting DNS caching can be less fun than you would think.

There are a number of options available for providing this feature, and comparing them is out of scope for an introductory walkthrough. To make things easy, and fairly standardized, we will use nginx to serve this purpose. In Kubernetes parlance this is referred to as an "ingress controller", as it does exactly that :)

Before setting up nginx there are some additional things to get a handle on. The external IP address will be assigned and handled automatically by Azure. But as already stated you usually want DNS to be what drives your internet surfing.

This is a point where you need to decide how much lab vs production you want for this walkthrough. AKS comes with the option to use the default Azure DNS namespace, so you can configure myAKScluster.westeurope.cloudapp.azure.com to point to whatever external IP address you have at the time. This is great, and you can happily use that for both http and https testing. However, you can't really tell end-users to browse to such addresses, meaning you either need to provide them with a shorter link that redirects their browser, use a CNAME mapping www.contoso.com to longurl.azure.com (provided you don't need https), or use other "tricks" that act as workarounds. (HTTPS will break when the certificate isn't issued to the CNAME.) Or you can properly set up your own DNS namespace for use in the AKS cluster.

I know what would be the right answer for production, but if you don't have a test domain available you can go with the default Azure.com namespace.

Configuring azure.com DNS records

To configure the DNS name you need to set it on the "nodepool VM":

The option to configure DNS will only be available once a public IP has been assigned, which we elected to not do yet. (Well, if you tested it on your own you're good on this.) When DNS has been made available you can configure the record like this:

So, I need to configure the ingress to be publicly exposed before I configure the name it will be available through? Well, yes, it is sort of a chicken-and-egg problem I suppose, but that is the way it is.

Regardless of that: since I was able to take a screenshot it's obviously there somewhere, but where do I find this in the portal, you ask? It's not an option when opening the resource group we created for AKS? Well, no, and that is another fine point of the cluster creation process. AKS creates a separate resource group, named something like MC_csharpFiddleRG_csharpFiddleAKS_westeurope, where you can locate most of the actual resources. (The "Virtual Machine" resource type is where you configure DNS.) This is one of those "managed" things you don't really need to care about, but which it makes sense to be aware of.
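If you would rather work out the name of that extra resource group than hunt for it, the example name above suggests a simple pattern. The sketch below assumes the default naming of MC_, the resource group, the cluster name and the region joined by underscores; the function name node_resource_group is hypothetical, and a cluster created with a custom node resource group name would not follow this pattern.

```python
def node_resource_group(resource_group: str, cluster: str, location: str) -> str:
    """Compose the assumed default name of the resource group AKS creates for the nodes."""
    return f"MC_{resource_group}_{cluster}_{location}"


name = node_resource_group("csharpFiddleRG", "csharpFiddleAKS", "westeurope")
print(name)
```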

Fair enough, I have purchased a domain name earlier, but what do you mean by "test domain"? Well, you see, many DNS providers are fairly static experiences. When you need to add or edit a DNS record you log in to a control panel and do it manually. After all, since you haven't been changing DNS records multiple times per day so far, it hasn't mattered that it is a manual task. In the microservice world you might not want this dependency. You need to be able to do this programmatically and dynamically. In other words, your DNS provider needs to offer an API in addition to the web interface. Not all DNS providers do.

Luckily there is an Azure service for this - aptly named "Azure DNS" that I will be using :)

I will not cover how to point your existing DNS zone to Azure, but clearly this is not something you want to do with your current production DNS zone without fully considering the consequences, so that's why I advise you to use a "test" domain. If you want to delegate DNS (test or production alike) more info can be found here:

https://docs.microsoft.com/en-us/azure/dns/dns-delegate-domain-azure-dns

Neither AKS nor Kubernetes requires you to host your DNS in Azure, but the range of DNS providers supported out of the box is somewhat limited, so if you are not using one of the big names like Azure DNS, AWS Route53, etc., it will take extra effort to make it work for your specific provider.

Adding DNS registration to your cluster

In the microservice spirit we will add a separate service for handling DNS. We will use a component called ExternalDNS:

https://github.com/kubernetes-incubator/external-dns/blob/master/docs/tutorials/azure.md

The name of this service lends itself well to sending us on a minor detour. The service is called "ExternalDNS", and I have been referring to external records as well. Do we not need DNS internally or inside the cluster? Well, this is one of those things you expect the orchestration "machine" to handle for you. Since the internal IP addresses can change I can't have the www container hardcoded to hit the api container at 10.0.0.10, but my code can understand things like making HTTP requests towards http://api provided this resolves to an IP address. When discussing microservices on an implementation-neutral level we usually bake this into the term "service discovery". Where we are now this is handled by the "AKS Magic Black Box", and as far as you are concerned it just works™. It is however entirely possible to handle this differently as well, but I will avoid these details to keep the abstraction clean for now. (Don't worry, we will have some more details on this as well in due time.)

Getting back to the external part of name resolution there are instructions on the setup on the page linked to above, so I will borrow that, and clarify the following:

- You need to take the route with creating a service principal, and

- You need to build this azure.json file yourself.

After logging in with az login, and if necessary setting the active subscription, you might already have some of these ids handy. If not, run az account list and take note of the following:

- tenantId

- id => this is the subscription id.

If you already have created a DNS resource group in the Azure Portal you need the id for this:

az group show --name myCsharpFiddleDNSrg

It is in the format /subscriptions/subscription-guid/resourceGroups/myCsharpFiddleDNSrg so you can figure it out yourself, but it's probably easier to do a copy & paste.

Of course, if you don't have a DNS resource group, you can create that as well through the CLI:

az group create --name myCsharpFiddleDNSrg --location westeurope

In either case - create the service principal, and assign necessary rights:

az ad sp create-for-rbac --role="Contributor"
--scopes="/subscriptions/subscription-guid/resourceGroups/myCsharpFiddleDNSrg"
-n AKSExternalDnsServicePrincipal

(I have broken it over multiple lines for readability, but apply it like one continuous line.)

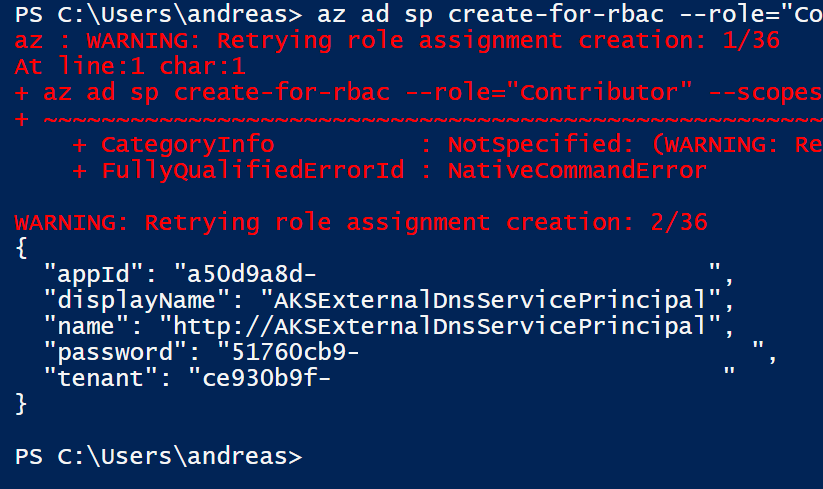

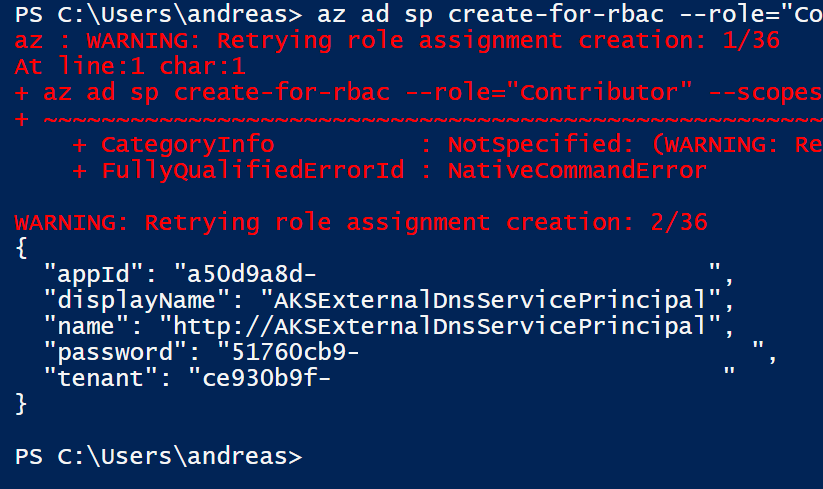

For some reason it doesn't always succeed on the first attempt, and I see a couple of retries before my command goes through:

What you want from this output is the appId and the password, which we will use in our azure.json. The file should now be complete in this format (create the file and fill in your values):

[code language="csharp"]

{
  "tenantId": "tenant-guid",
  "subscriptionId": "subscription-guid",
  "aadClientId": "appId",
  "aadClientSecret": "password",
  "resourceGroup": "/subscriptions/subscription-guid/resourceGroups/myCsharpFiddleDNSrg"
}

[/code]

Make sure you get the key names right - I messed that up my first time :)
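Since a mistyped key name is easy to miss, here is a small sketch that assembles the file content and checks the key names against the five shown above. The function names build_azure_config and check_keys are made up for this example, and the values are the same placeholders as in the sample; this is one way to catch a casing slip, not something ExternalDNS itself provides.

```python
import json

# The key names from the azure.json sample above.
REQUIRED_KEYS = {
    "tenantId",
    "subscriptionId",
    "aadClientId",
    "aadClientSecret",
    "resourceGroup",
}


def build_azure_config(tenant_id, subscription_id, app_id, password, resource_group):
    """Assemble the azure.json content in the format shown above."""
    return {
        "tenantId": tenant_id,
        "subscriptionId": subscription_id,
        "aadClientId": app_id,
        "aadClientSecret": password,
        "resourceGroup": f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}",
    }


def check_keys(config: dict) -> list:
    """Return a list of problems: missing or unexpected key names."""
    present = set(config)
    problems = [f"missing: {key}" for key in sorted(REQUIRED_KEYS - present)]
    problems += [f"unexpected: {key}" for key in sorted(present - REQUIRED_KEYS)]
    return problems


config = build_azure_config(
    "tenant-guid", "subscription-guid", "appId", "password", "myCsharpFiddleDNSrg"
)

# Simulate a typical casing slip in one key name.
typo = dict(config)
typo["aadClientID"] = typo.pop("aadClientId")

print(json.dumps(config, indent=2))
print(check_keys(config))
print(check_keys(typo))
```

Writing the result with json.dump to azure.json would give you the file used in the next step.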

Before we forget - let's create the actual DNS zone as well:

az network dns zone create -g myCsharpFiddleDNSrg -n contoso.com

Contoso.com is taken, but you can actually grab any name you like. Do keep in mind that while it will work within the Azure sphere, it will not work across the general Internet until you log in to your registrar and point the necessary records to the Azure nameservers. (This is what prevents random hijacks of other people's domain names.)

Alright, with azure.json created we can create the secret needed for ExternalDNS to work.

Run the following command:

kubectl create secret generic azure-config-file --from-file=azure.json

And now we can deploy ExternalDNS. Create a new file, externaldns.yaml , and copy-paste from the sample with RBAC:

[code language="csharp"]

apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: external-dns
rules:
- apiGroups: [""]
  resources: ["services"]
  verbs: ["get","watch","list"]
- apiGroups: ["extensions"]
  resources: ["ingresses"]
  verbs: ["get","watch","list"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
- kind: ServiceAccount
  name: external-dns
  namespace: default
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: external-dns
spec:
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns
      containers:
      - name: external-dns
        image: registry.opensource.zalan.do/teapot/external-dns:v0.4.8
        args:
        - --source=service
        - --domain-filter=example.com # (optional) limit to only example.com domains; change to match the zone created above.
        - --provider=azure
        - --azure-resource-group=externaldns # (optional) use the DNS zones from the tutorial's resource group
        volumeMounts:
        - name: azure-config-file
          mountPath: /etc/kubernetes
          readOnly: true
      volumes:
      - name: azure-config-file
        secret:
          secretName: azure-config-file

[/code]

You need to change --domain-filter to the domain zone you chose (contoso.com), as well as --azure-resource-group (myCsharpFiddleDNSrg) but the rest should be good.
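To make the two substitutions explicit, the sketch below generates the args list with your own values filled in. The flags are the four from the manifest above; the helper name external_dns_args is hypothetical, and the printed lines are meant to be pasted under args: in externaldns.yaml.

```python
def external_dns_args(domain: str, resource_group: str) -> list:
    """Return the ExternalDNS container arguments with the two values substituted."""
    return [
        "--source=service",
        f"--domain-filter={domain}",  # the DNS zone you created
        "--provider=azure",
        f"--azure-resource-group={resource_group}",  # the resource group holding the zone
    ]


args = external_dns_args("contoso.com", "myCsharpFiddleDNSrg")
for arg in args:
    print(f"- {arg}")
```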

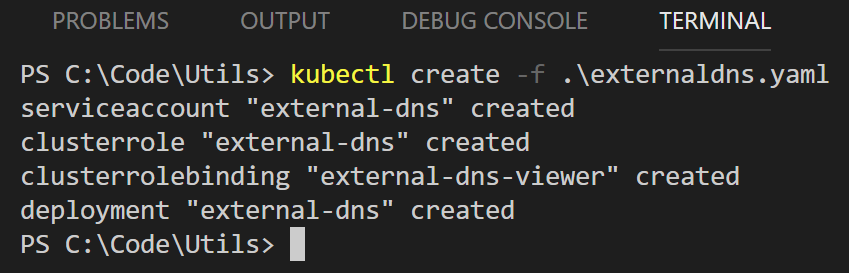

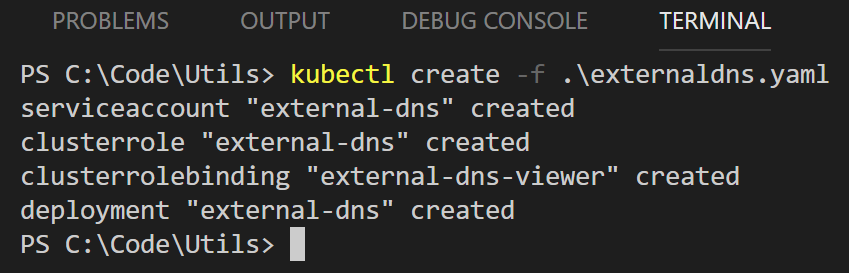

Deploy with kubectl create -f externaldns.yaml

Whew, that's a lot of steps just to get DNS working. Good thing we don't have to do that on a daily basis. What we have deployed now though is the "engine" for registering DNS records dynamically; we don't actually have any records created yet as we still have not deployed an ingress controller.

There's just one more thing we want to do before we expose the service on the Internet.

Adding SSL/TLS certificates

Wouldn't it be nice if we had certificates automatically correctly configured for https? Yes, it would. And fortunately there is a component for that too.

We will use a component called Kube-Lego for this. This can be installed through Helm (also broken up for readability):

helm install stable/kube-lego \
  --set config.LEGO_EMAIL=user@contoso.com \
  --set config.LEGO_URL=https://acme-v01.api.letsencrypt.org/directory

Replace LEGO_EMAIL with your email address.

If you get an error about not being able to download or install the chart, it might be that you didn't properly run helm init and helm repo update.

You might notice that it says that Kube-Lego is deprecated, and to use cert-manager instead. You might also notice that we refer to v1 of the Let's Encrypt API even though v2 was released not so long ago. (Ok, maybe not everyone is that into Let's Encrypt, but in case you are one of those, I am aware of this.) Yes, this TLS component is being deprecated, and it will not support v2 either. For now it is sufficient though.

Why not move to the newer bits? Quoting https://github.com/jetstack/cert-manager :

This project is not yet ready to be a component in a critical production stack, however it is at a point where it offers comparable features to other projects in the space. If you have a non-critical piece of infrastructure, or are feeling brave, please do try cert-manager and report your experience here in the issue section.

So in the meantime we will stick with Kube-Lego, but if you want to migrate the folks at Jetstack have a guide for that:

https://github.com/jetstack/cert-manager/blob/master/docs/user-guides/migrating-from-kube-lego.m...

Installing nginx

After getting the TLS pieces in place I think we're ready to install the ingress controller now.

helm install stable/nginx-ingress

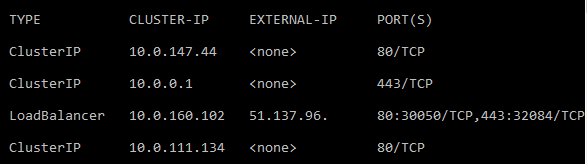

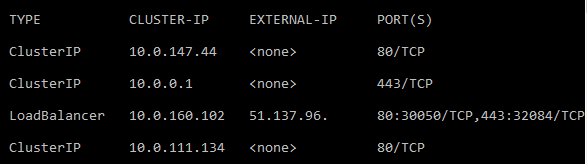

As you can see this triggers creation of several objects. You will notice that it says "pending" for external IP initially. Wait a minute or two and run kubectl get svc , and you will notice that you have an external IP listed:

Redeploying services/containers should not require modifying the nginx controller, and this means the external interface stays pretty much like it is.
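If you want to script the wait for the external IP rather than re-running the command by hand, the address can be read from the JSON form of the service (kubectl get svc <name> -o json). The sketch below only shows the parsing step; the function name external_ip is made up, and the two sample documents are trimmed-down, invented stand-ins for real output, with an address taken from the documentation-only range.

```python
import json


def external_ip(service: dict):
    """Return the external IP of a Service object, or None while it is still pending."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        if entry.get("ip"):
            return entry["ip"]
    return None


# Invented sample data: a service that is still pending, and one that has been assigned an IP.
pending = json.loads('{"status": {"loadBalancer": {}}}')
ready = json.loads('{"status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}')

print(external_ip(pending))
print(external_ip(ready))
```

A loop that calls kubectl, feeds the output to this function and sleeps while the result is None would cover the "wait a minute or two" part.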

Alright, so how do we link this to DNS? The initial thought is perhaps that the microservice container is responsible for this, but this is handled by the ingress controller as well. (The container needs to know its internal DNS name, but not the external one.) This is done by adding to the configuration of nginx. But we deployed using helm, so we don't have a yaml file locally for this, and we will perform this task with a different method. Even though we did not write a yaml file, nginx does have a config behind the scenes. Remember the Kubernetes dashboard we used back in part 1?

Locate the nginx-controller service. (Names assigned automagically so you will probably not have the same name as my service.)

Hit the three dots to get a context menu and select View/edit YAML.

Add the following annotation to the YAML:

[code language="csharp"]

"annotations": {
  "external-dns.alpha.kubernetes.io/hostname": "csharpfiddle.westeurope.cloudapp.azure.com"
}

[/code]

Replace with the DNS name of your choice. You can use either contoso.com (your own domain name) or cloudapp.azure.com (supplied by Microsoft) here.

It goes in the metadata section like this:

Make sure the spacing, indentation levels, and JSON are valid before hitting Update.
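If you are unsure where the annotation ends up, or whether the result is still valid JSON, the edit can be rehearsed outside the dashboard. The sketch below applies the same annotation to a stripped-down service object; the function name add_hostname and the service name nginx-controller-example are invented for the example, and a real service will have many more fields.

```python
import json

HOSTNAME_ANNOTATION = "external-dns.alpha.kubernetes.io/hostname"


def add_hostname(service: dict, hostname: str) -> dict:
    """Return a copy of the Service with the ExternalDNS hostname annotation set."""
    # Round-tripping through JSON gives a deep copy and fails early on invalid content.
    updated = json.loads(json.dumps(service))
    annotations = updated.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[HOSTNAME_ANNOTATION] = hostname
    return updated


service = {"kind": "Service", "metadata": {"name": "nginx-controller-example"}}
edited = add_hostname(service, "csharpfiddle.westeurope.cloudapp.azure.com")

print(json.dumps(edited, indent=2))
```

The printed document shows annotations sitting inside metadata, next to name, which is the placement the dashboard edit needs.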

An nginx configuration can be verified like this:

You will notice that this appears as an "empty" cluster - this illustrates how nginx should be responding regardless of whether we have something listening on the backend or not. (The 404 here indicating there is nothing on the backend.)

You can use https as well, but we're using a self-signed certificate so you will get a warning:

We have the ingress controller in place, so what follows is defining an ingress entity. It would look similar to this:

api-playground-ingress.yaml

[code language="csharp"]

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: api-playground-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
    kubernetes.io/tls-acme: 'true'
spec:
  tls:
  - hosts:
    - csharpfiddle.westeurope.cloudapp.azure.com
    secretName: tls-secret
  rules:
  - host: csharpfiddle.westeurope.cloudapp.azure.com
    http:
      paths:
      - path: /
        backend:
          serviceName: api-playground
          servicePort: 80

[/code]

The tls host attribute defines which name we want for our certificate. The rules host attribute defines the DNS name we want to listen on. These two should normally match each other. (The exception would be wildcard certificates, but since that requires v2 Let's Encrypt we can't support that anyways.)
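Because the two host attributes are typed separately, it is easy for them to drift apart. The following sketch compares them for an ingress definition loaded as a dictionary; the function name host_mismatches is hypothetical, the structure mirrors the yaml above, and wildcard certificates are deliberately not handled.

```python
def host_mismatches(ingress: dict) -> dict:
    """Compare the hosts under spec.tls with the hosts under spec.rules."""
    spec = ingress.get("spec", {})
    tls_hosts = {host for entry in spec.get("tls", []) for host in entry.get("hosts", [])}
    rule_hosts = {rule["host"] for rule in spec.get("rules", []) if "host" in rule}
    return {
        "rules_without_certificate": sorted(rule_hosts - tls_hosts),
        "certificates_without_rule": sorted(tls_hosts - rule_hosts),
    }


ingress = {
    "spec": {
        "tls": [
            {
                "hosts": ["csharpfiddle.westeurope.cloudapp.azure.com"],
                "secretName": "tls-secret",
            }
        ],
        "rules": [{"host": "csharpfiddle.westeurope.cloudapp.azure.com"}],
    }
}

# A variant where the rule listens on a name the certificate does not cover.
drifted = {
    "spec": {
        "tls": ingress["spec"]["tls"],
        "rules": [{"host": "www.contoso.com"}],
    }
}

print(host_mismatches(ingress))
print(host_mismatches(drifted))
```

Two empty lists mean the certificate name and the listening name agree, which is the normal case described above.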

The backend specifies the name (internal DNS) of the service nginx should route the traffic to (match it to the name you used when defining the microservice). You will also notice that we're routing to port 80 internally, so the http traffic inside the cluster is unencrypted. It is possible to run with encryption internally as well, but that requires additional work - since this traffic does not route across the Internet we are entirely ok with this at this stage in our experiment.

Apply the ingress config: kubectl create -f api-playground-ingress.yaml

If we head back to the browser, and refresh, the certificate should be good now:

Which also means that if everything went well we are finally where we have a microservice-based web app running in an AKS cluster.

Give yourself a pat on the back for what you have achieved so far.

But isn't it weird that we were able to assign a certificate to an azure.com DNS URL that we don't own?

And doesn't it still feel like we're missing something in the DevOps story - do we need to do all this yaml file stuff on the command line all the time?

Guess we need to return next week to wrap up a couple of loose ends :)

Authored by Andreas Helland

We're still working the microservice game. When we left things last time we had a service running inside a managed Kubernetes (AKS) cluster, but no apparent way to browse to it. Which is not the end-user happy state.

I suggest skimming parts 1 & 2 if you haven't done so before:

https://blogs.msdn.microsoft.com/azuredev/2018/03/27/building-microservices-with-aks-and-vsts-pa...

https://blogs.msdn.microsoft.com/azuredev/2018/04/03/building-microservices-with-aks-and-vsts-pa...

Clearly there are a couple of things we need to fix for our microservice to become a regular web page:

- We need an external IP address accessible over the Internet.

- We would like to assign a DNS URL to the IP address.

- We should assign a certificate to our service for encrypting the traffic.

My opinion is that when the architect's eyes become glossy waxing about microservices they kind of omit minor details like these. I'm not saying this is impossible to fix, but developers usually don't have to deal with this when deploying classic monoliths, and mentally this means more "boxes" to keep track of. It may also lead to a discussion of its own whether this is something the developers can be trusted to handle themselves, or if this should be handled by operations.

In a larger organization where the cluster is already up and running, and you the developer are just handed instructions on how to get going, it may indeed already have been taken care of. But for the context of this series you're running a one-man shop and need to do things by yourself :) (For those of you who have worked in/still work in big corp you know that there's still days where it feels like you're on your own, so this should feel realistic.)

While I have run into network admins who have enough public IPv4 addresses to supply an entire town, the most common pattern is to have a limited number of external facing addresses and port openings exposed, and have all traffic to the back-end flow through some sort of aggregator. (I use the term "aggregator" because it could be any combination of firewalls, load balancers, and routers.) Kubernetes is no different in this sense. You can assign a public IP for each service, as long as your cluster provider doesn't limit you, but you probably don't have a good design if you set it up like that.

But how do we go about making this work?

Referring to the yaml we created in the previous post we had this definition as part of it (just an extract):

[code language="csharp"]

apiVersion: v1

kind: Service

metadata:

name: api-playground

spec:

ports:

- port: 80

selector:

app: api-playground

[/code]

By adding type: LoadBalancer to the spec you instruct Kubernetes that you want a proper IP address assigned:

[code language="csharp"]

apiVersion: v1

kind: Service

metadata:

name: api-playground

spec:

type: LoadBalancer

ports:

- port: 80

selector:

app: api-playground

[/code]

Note that it is specific to the cluster implementation if this is a "true" external IP, or just external to the cluster (which could still be from a private address space). In managed Kubernetes Azure provides addresses from the public address space.

If you want to test this out immediately you can do so as an exercise of your own, but I will skip it for now since it really is just an intermediary step. We want to implement a pattern where the traffic goes through a middleman instead like I referred to in the previous paragraph. For instance we want to be able to have the internal IP addresses change as services are updated/redeployed/etc. and still have the external IP be fairly static. Correspondingly keeping the external DNS records synchronized are easier when the IP addresses don't change too often. While not a microservice concern per se troubleshooting DNS caching can be less fun than you would think.

There are a number of options available for providing this feature, and comparing these are out of scope for an introductory walkthrough. To make things easy, and fairly standardized, we will use nginx to serve this purpose. In Kubernetes parlance this is referred to as an "ingress controller", as it does exactly that :)

Before setting up nginx there are some additional things to get a handle on. The external IP address will be assigned and handled automatically by Azure. But as already stated you usually want DNS to be what drives your internet surfing.

This is a point where you need to make a decision on how much lab vs production you choose for this walkthrough. AKS comes with the option to use the default Azure DNS namespace, so you can configure myAKScluster.westeurope.cloudapp.azure.com to point to whatever external IP address you have at the time. This is great, and you can happily use that for both http and https testing. However you can't really tell end-users to browse to such addresses. Meaning you either need to provide them with a shorter link that redirects their browser, use a CNAME mapping www.contoso.com to longurl.azure.com (provided you don't need https), or other "tricks" that act as workarounds. (HTTPS will break when the certificate isn't issued to the CNAME.) Or you can properly setup your own DNS namespace for use in the AKS cluster.

I know what would be the right answer for production, but if you don't have a test domain available you can go with the default Azure.com namespace.

Configuring azure.com DNS records

To configure the DNS name you need to set it on the "nodepool VM":

The option to configure DNS will only be available once a public IP has been assigned, which we elected to not do yet. (Well, if you tested it on your own you're good on this.) When DNS has been made available you can configure the record like this:

So, I need to configure the ingress to be publically exposed before I configure the name it will be available through? Well, yes, sort of chicken and egg problem I suppose, but that is the way it is.

Regardless of that - being able to do a screenshot it's obviously there somewhere, buy where am I finding this in the portal you ask? This is not an option when opening the resource group we created for AKS? Well, no, another fine point of the cluster creation process. AKS creates a separate resource group, named something like MC_csharpFiddleRG_csharpFiddleAKS_westeurope , where you can locate most of the actual resources. (The "Virtual Machine" resource type is where you configure DNS.) This is one of those "managed" things you don't really need to care about, but which it makes sense to be aware of.

Fair enough, I have purchased a domain name earlier, but what do you mean by "test domain"? Well, you see, many DNS providers are fairly static experiences. When you need to add or edit a DNS record you login to a control panel and do it manually. After all, since you haven't been changing DNS records multiple times pr day so far it doesn't matter if it is a manual task. In the microservice world you might not want this dependency. You need to be able to do this programmatically and dynamically. In other words, your DNS provider needs to offer an API in addition to the web interface. Not all DNS providers do.

Luckily there is an Azure service for this - aptly named "Azure DNS" that I will be using :)

I will not cover how to point your existing DNS zone to Azure, but clearly this is not something you want to do with your current production DNS zone without fully considering the consequences, so that's why I advise you to use a "test" domain. If you want to delegate DNS (test or production alike) more info can be found here:

https://docs.microsoft.com/en-us/azure/dns/dns-delegate-domain-azure-dns

AKS or Kubernetes doesn't require you to host your DNS in Azure, but the range of DNS providers supported out of the box are somewhat limited so if you not using the big names like Azure DNS, AWS Route53, etc. there would be an extra effort to make it work for your specific provider if they aren't on the list.

Adding DNS registration to your cluster

In the microservice spirit we will add a separate service for handling DNS. We will use a component called ExternalDNS:

https://github.com/kubernetes-incubator/external-dns/blob/master/docs/tutorials/azure.md

The name of this service lends itself well to sending us on a minor detour. The service is called "ExternalDNS", and I have been referring to external records as well. Do we not need DNS internally or inside the cluster? Well, this is one of those things you expect the orchestration "machine" to handle for you. Since the internal IP addresses can change I can't have the www container hardcoded to hit the api container at 10.0.0.10, but my code can understand things like making HTTP requests towards http://api provided this resolves to an IP address. When discussing microservices on an implementation-neutral level we usually bake this into the term "service discovery". Where we are now this is handled by the "AKS Magic Black Box", and as far as you are concerned it just works™. It is however entirely possible to handle this differently as well, but I will avoid these details to keep the abstraction clean for now. (Don't worry, we will have some more details on this as well in due time.)

Getting back to the external part of name resolution there are instructions on the setup on the page linked to above, so I will borrow that, and clarify the following:

- You need to take the route with creating a service principal, and

- You need to build this azure.json file yourself.

By logging in with az login , and if necessary setting the active subscription, you might already have some of these ids handy, but in case not do an az account list and take note of the following:

- tenantId

- Id => this is the subscription id.

If you already have created a DNS resource group in the Azure Portal you need the id for this:

az group show --name myCsharpFiddleDNSrg

It is in the format /subscriptions/subscription-guid/resourceGroups/myCsharpFiddleDNSrg so you can figure it out yourself, but it's probably easier to do a copy & paste.

Of course, if you don't have a DNS resource group, you can create that as well through the CLI:

az group create --name myCSharpFiddleDNSrg

In either case - create the service principal, and assign necessary rights:

az ad sp create-for-rbac --role="Contributor"

--scopes="/subscriptions/subscription-guid/resourceGroups/myCsharpFiddleDNSrg"

-n AKSExternalDnsServicePrincipal

(I have broken it over multiple lines for readability, but apply it like one continuous line.)

For some reason it doesn't always succeed on the first attempt, and I see a couple of retries before my command goes through:

What you want from this output is the appid and the password, which we will use in our azure.json. This should now be complete in this format (create file and fill in):

[code language="csharp"]

{

"tenantId": "tenant-guid",

"subscriptionId": "subscription-guid",

"aadClientId": "appId",

"aadClientSecret": "password",

"resourceGroup": "/subscriptions/subscription-guid/resourceGroups/myCsharpFiddleDNSrg"

}

[/code]

Make sure you get the key names right - I messed up that my first time :)

Before we forget - let's create the actual DNS zone as well:

az network dns zone create -g myCsharpFiddleDNSrg -n contoso.com

Contoso.com is taken, but you can actually grab any name you like. Do keep in mind, that while it will work within the Azure sphere it will not work across the general Internet until you login to your registrar and point the necessary records to the Azure nameservers. (Preventing you from random hijacks of other people's domain names.)

Alright, with azure.json created we can create the secret needed for ExternalDNS to work.

Run the following command:

kubectl create secret generic azure-config-file --from-file=azure.json

And now we can deploy ExternalDNS. Create a new file, externaldns.yaml , and copy-paste from the sample with RBAC:

[code language="csharp"]

apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: external-dns
rules:
- apiGroups: [""]
  resources: ["services"]
  verbs: ["get","watch","list"]
- apiGroups: ["extensions"]
  resources: ["ingresses"]
  verbs: ["get","watch","list"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
- kind: ServiceAccount
  name: external-dns
  namespace: default
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: external-dns
spec:
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns
      containers:
      - name: external-dns
        image: registry.opensource.zalan.do/teapot/external-dns:v0.4.8
        args:
        - --source=service
        - --domain-filter=example.com # (optional) limit to only example.com domains; change to match the zone created above.
        - --provider=azure
        - --azure-resource-group=externaldns # (optional) use the DNS zones from the tutorial's resource group
        volumeMounts:
        - name: azure-config-file
          mountPath: /etc/kubernetes
          readOnly: true
      volumes:
      - name: azure-config-file
        secret:
          secretName: azure-config-file

[/code]

You need to change --domain-filter to the domain zone you chose (contoso.com), as well as --azure-resource-group (myCsharpFiddleDNSrg) but the rest should be good.
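If you end up doing this for more than one zone, the two substitutions can be sketched as a small Python helper. The function name is hypothetical, and the args list is copied from the container args in the sample rather than read from the YAML file:

```python
def customize_args(args, domain, resource_group):
    """Return a copy of the container args with the two values swapped in."""
    overrides = {
        "--domain-filter": domain,
        "--azure-resource-group": resource_group,
    }
    result = []
    for arg in args:
        flag, sep, _old_value = arg.partition("=")
        if sep and flag in overrides:
            result.append(f"{flag}={overrides[flag]}")
        else:
            result.append(arg)
    return result


# Container args as they appear in the sample, before editing.
sample_args = [
    "--source=service",
    "--domain-filter=example.com",
    "--provider=azure",
    "--azure-resource-group=externaldns",
]
for line in customize_args(sample_args, "contoso.com", "myCsharpFiddleDNSrg"):
    print(line)
```

For a one-off deployment, editing the two lines by hand is just as good.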

Deploy with kubectl create -f externaldns.yaml

Whew, that's a lot of steps just to get DNS working. Good thing we don't have to do that on a daily basis. What we have deployed now though is the "engine" for registering DNS records dynamically; we don't actually have any records created yet as we still have not deployed an ingress controller.

There's just one more thing we want to do before we expose the service on the Internet.

Adding SSL/TLS certificates

Wouldn't it be nice if we had certificates automatically correctly configured for https? Yes, it would. And fortunately there is a component for that too.

We will use a component called Kube-Lego for this. This can be installed through Helm (also broken up for readability):

helm install stable/kube-lego \
--set config.LEGO_EMAIL=user@contoso.com \
--set config.LEGO_URL=https://acme-v01.api.letsencrypt.org/directory

Replace LEGO_EMAIL with your email address.

If you get an error about not being able to download/install it might be that you didn't properly run helm init and helm repo update .

You might notice that it says that Kube-Lego is deprecated, and to use cert-manager instead. You might also notice that we refer to v1 of the Let's Encrypt API even though v2 was released not so long ago. (Ok, maybe not everyone is that into Let's Encrypt, but in case you are one of those, I am aware of this.) Yes, this TLS component is being deprecated, and it will not support v2 either. For now it is sufficient though.

Why not move to the newer bits? Quoting https://github.com/jetstack/cert-manager :

This project is not yet ready to be a component in a critical production stack, however it is at a point where it offers comparable features to other projects in the space. If you have a non-critical piece of infrastructure, or are feeling brave, please do try cert-manager and report your experience here in the issue section.

So in the meantime we will stick with Kube-Lego, but if you want to migrate the folks at Jetstack have a guide for that:

https://github.com/jetstack/cert-manager/blob/master/docs/user-guides/migrating-from-kube-lego.m...

Installing nginx

After getting the TLS pieces in place I think we're ready to install the ingress controller now.

helm install stable/nginx-ingress

As you can see this triggers creation of several objects. You will notice that it says "pending" for external IP initially. Wait a minute or two and run kubectl get svc , and you will notice that you have an external IP listed:
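If you want to script the wait rather than re-running the command by hand, one option is to ask for JSON output (kubectl get svc with -o json) and look at status.loadBalancer.ingress on the service. Below is a minimal Python sketch of the parsing half only, assuming the JSON for a single service has already been loaded into a dict. The function name and sample values are mine, and 203.0.113.10 is a documentation address, not a real one:

```python
def external_ip(service):
    """Return the first load balancer IP of a Service, or None while pending."""
    status = service.get("status", {})
    ingress = status.get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        if entry.get("ip"):
            return entry["ip"]
    return None


# While the IP is pending, the loadBalancer section is empty.
pending = {"status": {"loadBalancer": {}}}
# Once assigned, the IP shows up in the ingress list.
ready = {"status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}

print(external_ip(pending))
print(external_ip(ready))
```

A polling loop around this would simply retry until the function stops returning None.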

Redeploying services/containers should not require modifying the nginx controller, and this means the external interface stays pretty much like it is.

Alright, so how do we link this to DNS? The initial thought is perhaps that the microservice container is responsible for this, but this is handled by the ingress controller as well. (The container needs to know its internal DNS name, but not the external one.) This is done by adding to the configuration of nginx. But we deployed using helm , so we don't have a yaml file locally for this, and we will perform this task with a different method. Even though we did not write a yaml file, nginx does have a config behind the scenes. Remember the Kubernetes dashboard we used back in part 1?

Locate the nginx-controller service. (Names are assigned automagically, so you will probably not have the same name as my service.)

Hit the three dots to get a context menu and select View/edit YAML .

Add the following annotation to the YAML:

[code language="csharp"]

"annotations": {
  "external-dns.alpha.kubernetes.io/hostname": "csharpfiddle.westeurope.cloudapp.azure.com"
}

[/code]

Replace with the DNS name of your choice. You can use either contoso.com (your own domain name) or cloudapp.azure.com (supplied by Microsoft) here.

It goes in the metadata section like this:

Make sure the spacing and indentation levels are right and the JSON is valid before hitting Update .
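To make the placement concrete, here is a rough Python sketch that puts the annotation under metadata in a service definition. The service dict is a hypothetical, heavily trimmed stand-in (including its name) for what the dashboard shows, and the helper name is my own:

```python
import copy
import json

HOSTNAME_ANNOTATION = "external-dns.alpha.kubernetes.io/hostname"


def with_hostname(service, hostname):
    """Return a copy of the Service with the ExternalDNS hostname annotation set."""
    updated = copy.deepcopy(service)
    metadata = updated.setdefault("metadata", {})
    # Reuse existing annotations if there are any, otherwise start a new dict.
    annotations = metadata.get("annotations") or {}
    annotations[HOSTNAME_ANNOTATION] = hostname
    metadata["annotations"] = annotations
    return updated


# Hypothetical, trimmed Service; the real one has many more fields.
service = {
    "kind": "Service",
    "metadata": {"name": "example-nginx-ingress-controller", "namespace": "default"},
    "spec": {"type": "LoadBalancer"},
}
updated = with_hostname(service, "csharpfiddle.westeurope.cloudapp.azure.com")
print(json.dumps(updated, indent=2))
```

The printed JSON shows annotations sitting next to name and namespace inside metadata, which is where the dashboard editor expects it.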

An nginx configuration can be verified like this:

You will notice that this appears as an "empty" cluster - this illustrates how nginx should be responding regardless of whether we have something listening on the backend or not. (The 404 here indicating there is nothing on the backend.)

You can use https as well, but we're using a self-signed certificate so you will get a warning:

We have the ingress controller in place, so what follows is defining an ingress entity. It would look similar to this:

api-playground-ingress.yaml

[code language="csharp"]

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: api-playground-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
    kubernetes.io/tls-acme: 'true'
spec:
  tls:
  - hosts:
    - csharpfiddle.westeurope.cloudapp.azure.com
    secretName: tls-secret
  rules:
  - host: csharpfiddle.westeurope.cloudapp.azure.com
    http:
      paths:
      - path: /
        backend:
          serviceName: api-playground
          servicePort: 80

[/code]

The tls host attribute defines which name we want for our certificate. The rules host attribute defines the DNS name we want to listen on. These two should normally match each other. (The exception would be wildcard certificates, but since that requires v2 Let's Encrypt we can't support that anyways.)
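A quick way to catch a mismatch between the two host attributes is to compare them before applying the file. The following Python sketch assumes the ingress definition has already been loaded into a dict (for example with a YAML parser); the function name is my own and it does not handle wildcard names:

```python
def host_mismatches(ingress):
    """Return (rule hosts without a certificate, certificate hosts without a rule)."""
    spec = ingress.get("spec", {})
    tls_hosts = {h for entry in spec.get("tls", []) for h in entry.get("hosts", [])}
    rule_hosts = {r["host"] for r in spec.get("rules", []) if "host" in r}
    return sorted(rule_hosts - tls_hosts), sorted(tls_hosts - rule_hosts)


# Trimmed dict version of the ingress definition above.
ingress = {
    "spec": {
        "tls": [
            {
                "hosts": ["csharpfiddle.westeurope.cloudapp.azure.com"],
                "secretName": "tls-secret",
            }
        ],
        "rules": [{"host": "csharpfiddle.westeurope.cloudapp.azure.com"}],
    }
}
print(host_mismatches(ingress))
```

Two empty lists mean every host we listen on is also named in the certificate request, and vice versa.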

The backend specifies the name (internal DNS) of the service nginx should route the traffic to (match it to the name you used when defining the microservice). You will also notice that we're routing to port 80 internally, so the http traffic inside the cluster is unencrypted. It is possible to run with encryption internally as well, but that requires additional work - since this traffic does not route across the Internet we are entirely ok with this at this stage in our experiment.

Apply the ingress config: kubectl create -f api-playground-ingress.yaml

If we head back to the browser, and refresh, the certificate should be good now:

Which also means that, if everything went well, we have finally reached the point where we have a microservice-based web app running in an AKS cluster.

Give yourself a pat on the back for what you have achieved so far.

But isn't it weird that we were able to assign a certificate to an azure.com DNS URL that we don't own?

And doesn't it still feel like we're missing something in the DevOps story - do we need to do all this yaml file stuff on the command line all the time?

Guess we need to return next week to wrap up a couple of loose ends :)
