First published on TechNet on Apr 14, 2016

Hi all, Herbert Mauerer here. In this post we’re back to talk about the built-in AD Diagnostics Data collector set available for Active Directory Performance (ADPERF) issues and how to ensure a useful report is generated when your DCs are under heavy load.

Why are my domain controllers so busy you ask? Consider this: Active Directory stands in the center of the Identity Management for many customers. It stores the configuration information for many critical line of business applications. It houses certificate templates, is used to distribute group policy and is the account database among many other things. All sorts of network-based services use Active Directory for authentication and other services.

As mentioned there are many applications which store their configuration in Active Directory, including the details of the user context relative to the application, plus objects specifically created for the use of these applications.

There are also applications that use Active Directory as a store to synchronize directory data. There are products like Forefront Identity Manager (and now Microsoft Identity Manager ) where synchronizing data is the only purpose. I will not discuss whether these applications are meta-directories or virtual directories, or what class our Office 365 DirSync belongs to…

One way or the other, the volume and complexity of Active Directory queries has a constant trend of increasing, and there is no end in sight.

So what are my Domain Controllers doing all day?

We get this questions a lot from our customers. It often seems as if the AD Admins are the last to know what kind of load is put onto the domain controllers by scripts, applications and synchronization engines. And they are not made aware of even significant application changes.

But even small changes can have a drastic effect on the DC performance. DCs are resilient, but even the strongest warrior may fall against an overwhelming force. Think along the lines of "death by a thousand cuts". Consider applications or scripts that run non-optimized or excessive queries on many, many clients during or right after logon and it will feel like a distributed DoS. In this scenario, the domain controller may get bogged down due to the enormous workload issued by the clients. This is one of the classic scenarios when it comes to Domain Controller performance problems.

What resources exist today to help you troubleshoot AD Performance scenarios?

We have already discussed the overall topic in this blog , and today many customer requests start with the complaint that the response times are bad and the LSASS CPU time is high. There also is a blog post specifically on the toolset we've had since Windows Server 2008. We also updated and brought back the Server Performance Advisor toolset. This toolset is now more targeted at trend analysis and base-lining. If a video is more your style, Justin Turner revealed our troubleshooting process at Ignite .

The reports generated by this data collection are hugely useful for understanding what is burdening the Domain Controllers. There are fewer cases where DCs are responding slowly, but there is no significant utilization seen. We released a blog on that scenario and also gave you a simple method to troubleshoot long-running LDAP queries at our sister site . So what's new with this post?

The AD Diagnostic Data Collector set report "report.html" is missing or compile time is very slow

In recent months, we have seen an increasing number of customers with incomplete Data Collector Set reports. Most of the time, the “report.html” file is missing:

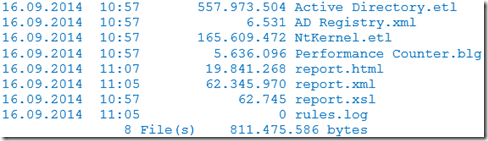

This is a folder where the creation of the report.html file was successful:

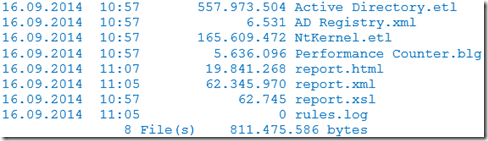

This folder has exceeded the limits for reporting:

Notice the report.html file is missing in the second folder example. Also take note that the ETL and BLG files are bigger. What’s the reason for this?

The Data Collector Set report generation process uncovered:

I worked with a customer recently with a pretty well-equipped Domain Controller (24 server-class CPUs, 256 GB RAM). The customer was kind enough to run a few tests for various report sizes, and found the following metrics:

If you have lower-spec and busier machines, you shouldn't expect the same results as this example (On a lower spec machine with a 3 GB ETL file, the report.html file would likely fail to compile within the 6-hour window).

What a bummer, how do you get Performance Logging done then?

Fortunately, there are a number of parameters for a Data Collector Set that come to the rescue. Before you can use any of them you first need one of the more custom Data Collector Sets. You can play with a variety of settings, based on the purpose of the collection.

In Performance Monitor you can create a custom set on the "User Defined" folder by right-clicking it, to bring up the New -> Data Collector Set option in the context menu:

This launches a wizard that prompts you for a number of parameters for the new set.

The first thing it wants is a name for the new set:

The next step is to select a template. It may be one of the built-in templates or one exported from another computer as an XML file you select through the “Browse” button. In our case, we want to create a clone of “Active Directory Diagnostics”:

The next step is optional, and it’s specifies the storage location for the reports. You may want to select a volume with more space or lower IO load than the default volume:

There is one more page in the wizard, but there is no reason to make any more changes here. You can click “Finish” on this page.

The default settings are fine for an idle DC, but if you find your ETL files are too large, your reports are not generated, or it takes too long to process the data, you will likely want to make the following configuration changes.

For a real "Big Data Collector Set" we first want to make important changes to the storage strategy of the set that are available in the “Data Manager” log:

The most relevant settings are “Resource Policy” and “Maximum Root Path Size”. I recommend starting with the settings as shown below:

Notice, I've changed the Resource policy from "Delete largest" to "Delete oldest". I've also increased the Maximum root path size from 1024 to 2048 MB. You can run some reports to learn what the best size settings are for you. You might very well end up using 10 GB or more for your reports.

The second crucial parameter for your custom sets is the run interval for the data collection. It is five minutes by default. You can adjust that in the properties of the collector in the “Stop Condition” tab. In many cases shortening the data collection is a viable step if you see continuous high load:

You should avoid going shorter than two minutes, as this is the maximum LDAP query duration by default. (If you have LDAP queries that reach this threshold, they would not show up in a report that is less than two minutes in length.) In fact, I would suggest the minimum interval be set to three minutes.

One very attractive option is automatically restarting the data collection if a certain size of data collection is exceeded. You need to use common sense when you look at the multiple reports, e.g. the ratio of long-running queries is then shown in the logs. But it is definitely better than no report.

If you expect to exceed the 1 GB limit often, you certainly should adjust the total size of collections (Maximum root path size) in the “Data Manager”.

So how do I know how big the collection is while running it?

You can take a look at the folder of the data collection in Explorer, but you will notice it is pretty lazy updating it with the current size of the collection:

Explorer only updates the folder if you are doing something with the files. It sounds strange, but attempting to delete a file will trigger an update:

Now that makes more sense…

If you see the log is growing beyond your expectations, you can manually stop it before the stop condition hits the threshold you have configured:

Of course, you can also start and stop the reporting from a command line using the logman instructions in this post .

Room for improvement

We are aware there is room for improvement to get bigger data sets reported in a shorter time. The good news is that much of these special configuration changes won’t be needed once your DCs are running on Windows Server 2016. We will talk about that in a future post.

Thanks for reading.

Herbert

Hi all, Herbert Mauerer here. In this post we’re back to talk about the built-in AD Diagnostics Data Collector Set for Active Directory Performance (ADPERF) issues, and how to make sure a useful report is generated when your DCs are under heavy load.

Why are my domain controllers so busy, you ask? Consider this: Active Directory sits at the center of identity management for many customers. It stores the configuration information for many critical line-of-business applications. It houses certificate templates, distributes Group Policy, and serves as the account database, among many other things. All sorts of network-based services use Active Directory for authentication and other services.

As mentioned, many applications store their configuration in Active Directory, including the details of the user context relative to the application, plus objects created specifically for use by these applications.

There are also applications that use Active Directory as a store to synchronize directory data. For products like Forefront Identity Manager (and now Microsoft Identity Manager), synchronizing data is the only purpose. I will not discuss whether these applications are meta-directories or virtual directories, or what class our Office 365 DirSync belongs to…

One way or the other, the volume and complexity of Active Directory queries keep increasing, and there is no end in sight.

So what are my Domain Controllers doing all day?

We get this question a lot from our customers. It often seems as if the AD admins are the last to know what kind of load scripts, applications and synchronization engines put on the domain controllers. Often they are not made aware of even significant application changes.

But even small changes can have a drastic effect on the DC performance. DCs are resilient, but even the strongest warrior may fall against an overwhelming force. Think along the lines of "death by a thousand cuts". Consider applications or scripts that run non-optimized or excessive queries on many, many clients during or right after logon and it will feel like a distributed DoS. In this scenario, the domain controller may get bogged down due to the enormous workload issued by the clients. This is one of the classic scenarios when it comes to Domain Controller performance problems.

What resources exist today to help you troubleshoot AD Performance scenarios?

We have already discussed the overall topic in this blog, and today many customer requests start with the complaint that response times are bad and LSASS CPU time is high. There is also a blog post specifically on the toolset we've had since Windows Server 2008. We also updated and brought back the Server Performance Advisor toolset, which is now targeted more at trend analysis and baselining. If a video is more your style, Justin Turner revealed our troubleshooting process at Ignite.

The reports generated by this data collection are hugely useful for understanding what is burdening the Domain Controllers. Less common are cases where DCs respond slowly although no significant utilization is seen. We released a blog on that scenario and also gave you a simple method to troubleshoot long-running LDAP queries at our sister site. So what's new with this post?

The AD Diagnostic Data Collector set report "report.html" is missing or compile time is very slow

In recent months, we have seen an increasing number of customers with incomplete Data Collector Set reports. Most of the time, the “report.html” file is missing:

This is a folder where the creation of the report.html file was successful:

This folder has exceeded the limits for reporting:

Notice the report.html file is missing in the second folder example. Also take note that the ETL and BLG files are bigger. What’s the reason for this?

The Data Collector Set report generation process uncovered:

- When the data collection ends, the process “tracerpt.exe” is launched to create a report for the folder where the data was collected.

- “tracerpt.exe” runs with “below normal” priority, so it does not get full CPU attention, especially if LSASS is busy as well.

- “tracerpt.exe” runs with one worker thread only, so it cannot take advantage of more than one CPU core.

- “tracerpt.exe” accumulates RAM usage as it runs.

- “tracerpt.exe” has six hours to complete a report. If it is not done within this time, report generation is terminated.

- With its default settings, the system AD data collector deletes the biggest data set first once the 1 GB limit is exceeded. The biggest single file in the reports is typically “Active Directory.etl”. The report.html file will not get created if this file does not exist.

I worked with a customer recently with a pretty well-equipped Domain Controller (24 server-class CPUs, 256 GB RAM). The customer was kind enough to run a few tests for various report sizes, and found the following metrics:

- Until the time-out of six hours is hit, “tracerpt.exe” consumes up to 12 GB of RAM.

- During this time, one CPU core was 100% busy. If a DC is in a high-load condition, you may want to increase the base priority of “tracerpt.exe” to get the report to complete. This comes at the expense of CPU time, potentially impacting the server's actual purpose and, in turn, its clients.

- The biggest data set that could be completed within the six hours had an “Active Directory.etl” of 3 GB.

If you have lower-spec and busier machines, you shouldn't expect the same results as in this example. On a lower-spec machine with a 3 GB ETL file, the report.html file would likely fail to compile within the six-hour window.
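As a rough illustration of the priority change mentioned above, here is a minimal Python sketch that builds (and can optionally run) a PowerShell one-liner setting the priority class of a running “tracerpt.exe”. This is not taken from the original post: the helper names are hypothetical, and the approach assumes PowerShell is available and that you run it elevated on the DC itself. Treat it as a sketch, and weigh the impact on LSASS before using anything like it in production.

```python
import subprocess

# Priority class names accepted by the .NET Process.PriorityClass property.
VALID_PRIORITIES = {
    "Idle", "BelowNormal", "Normal", "AboveNormal", "High", "RealTime",
}


def build_priority_command(priority="Normal", process_name="tracerpt"):
    """Return the PowerShell command line that sets the priority class
    of every running process with the given name (hypothetical helper)."""
    if priority not in VALID_PRIORITIES:
        raise ValueError(f"unknown priority class: {priority}")
    script = (
        f"Get-Process -Name {process_name} | "
        f"ForEach-Object {{ $_.PriorityClass = '{priority}' }}"
    )
    return ["powershell.exe", "-NoProfile", "-Command", script]


def raise_tracerpt_priority(priority="Normal"):
    """Run the command. Only meaningful on Windows while tracerpt.exe
    is running; raises CalledProcessError if PowerShell reports failure."""
    return subprocess.run(build_priority_command(priority), check=True)
```

Calling `build_priority_command()` only returns the command list, so you can inspect it before deciding to run `raise_tracerpt_priority()`.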

What a bummer, how do you get Performance Logging done then?

Fortunately, there are a number of parameters for a Data Collector Set that come to the rescue. Before you can use any of them, you first need to create a custom Data Collector Set. You can then play with a variety of settings, based on the purpose of the collection.

In Performance Monitor you can create a custom set on the "User Defined" folder by right-clicking it, to bring up the New -> Data Collector Set option in the context menu:

This launches a wizard that prompts you for a number of parameters for the new set.

The first thing it wants is a name for the new set:

The next step is to select a template. It may be one of the built-in templates or one exported from another computer as an XML file you select through the “Browse” button. In our case, we want to create a clone of “Active Directory Diagnostics”:

The next step is optional, and it specifies the storage location for the reports. You may want to select a volume with more space or lower IO load than the default volume:

There is one more page in the wizard, but there is no reason to make any more changes here. You can click “Finish” on this page.

The default settings are fine for an idle DC, but if you find your ETL files are too large, your reports are not generated, or it takes too long to process the data, you will likely want to make the following configuration changes.

For a real "Big Data Collector Set" we first want to make important changes to the storage strategy of the set. These are available in the “Data Manager” dialog:

The most relevant settings are “Resource Policy” and “Maximum Root Path Size”. I recommend starting with the settings as shown below:

Notice, I've changed the Resource policy from "Delete largest" to "Delete oldest". I've also increased the Maximum root path size from 1024 to 2048 MB. You can run some reports to learn what the best size settings are for you. You might very well end up using 10 GB or more for your reports.
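To see why the resource policy matters, here is a toy Python model of the two policies. It is only a sketch under simplifying assumptions: it treats each collection run as one data set with a single size, and it does not claim to mirror how Windows actually implements the Data Manager. The run names and sizes are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    name: str      # folder name of one collection run (hypothetical)
    size_mb: int   # total size of that run's folder
    age_rank: int  # 0 = oldest run, higher = newer


def apply_resource_policy(data_sets, max_root_mb, policy):
    """Toy model: delete data sets until the total fits under max_root_mb.

    policy is "largest" or "oldest". Returns (kept_names, deleted_names).
    """
    if policy == "largest":
        ordered = sorted(data_sets, key=lambda d: -d.size_mb)
    elif policy == "oldest":
        ordered = sorted(data_sets, key=lambda d: d.age_rank)
    else:
        raise ValueError(f"unknown policy: {policy}")

    deleted = []
    # Remove candidates in policy order until the limit is satisfied.
    while ordered and sum(d.size_mb for d in ordered) > max_root_mb:
        deleted.append(ordered.pop(0).name)

    kept = sorted(ordered, key=lambda d: d.age_rank)
    return [d.name for d in kept], deleted


runs = [
    DataSet("run-1", 300, 0),
    DataSet("run-2", 400, 1),
    DataSet("run-3", 900, 2),  # newest run, captured during the load peak
]
```

In this model, `apply_resource_policy(runs, 1024, "largest")` deletes `run-3`, the very run you wanted to analyze, while `"oldest"` with the same limit keeps `run-3` and deletes the two older runs. With the limit raised to 2048 MB, nothing is deleted at all.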

The second crucial parameter for your custom sets is the run interval for the data collection. It is five minutes by default. You can adjust that in the properties of the collector in the “Stop Condition” tab. In many cases shortening the data collection is a viable step if you see continuous high load:

You should avoid going shorter than two minutes, as this is the maximum LDAP query duration by default. (If you have LDAP queries that reach this threshold, they would not show up in a report that is less than two minutes in length.) In fact, I would suggest the minimum interval be set to three minutes.
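The interval guidance above can be captured in a few lines of Python, for example as a sanity check in a deployment script. The function name and the returned labels are hypothetical; the thresholds are the ones stated in the text (two-minute default maximum LDAP query duration, three-minute suggested minimum, five-minute default run).

```python
MAX_LDAP_QUERY_SECONDS = 120    # default maximum LDAP query duration
SUGGESTED_MIN_SECONDS = 180     # three-minute minimum suggested in the text
DEFAULT_DURATION_SECONDS = 300  # default five-minute collection run


def check_duration(seconds):
    """Classify a planned collection duration against the guidance."""
    if seconds < MAX_LDAP_QUERY_SECONDS:
        # Queries that hit the two-minute limit would not show up.
        return "too short"
    if seconds < SUGGESTED_MIN_SECONDS:
        return "below suggested minimum"
    return "ok"
```

For instance, `check_duration(90)` returns `"too short"`, `check_duration(150)` returns `"below suggested minimum"`, and the default of 300 seconds returns `"ok"`.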

One very attractive option is to restart the data collection automatically once it exceeds a certain size. You then need to use common sense when you read the resulting multiple reports, e.g. when judging the ratio of long-running queries shown in the logs. But it is definitely better than no report.

If you expect to exceed the 1 GB limit often, you certainly should adjust the total size of collections (Maximum root path size) in the “Data Manager”.

So how do I know how big the collection is while running it?

You can take a look at the folder of the data collection in Explorer, but you will notice it is pretty lazy updating it with the current size of the collection:

Explorer only updates the folder if you are doing something with the files. It sounds strange, but attempting to delete a file will trigger an update:

Now that makes more sense…
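If you would rather not poke at files in Explorer, a small script can add up the file sizes for you. The following Python sketch walks a collection folder and sums the size of every file it can read. The function names are hypothetical, and it is an assumption (not something the original post states) that querying each file individually returns a more current size than Explorer's cached folder view.

```python
import os


def folder_size_bytes(path):
    """Sum the sizes of all files below path, skipping unreadable ones."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            try:
                total += os.path.getsize(os.path.join(root, name))
            except OSError:
                # File vanished or is inaccessible; ignore it.
                pass
    return total


def format_mb(size_bytes):
    """Render a byte count as megabytes with one decimal place."""
    return f"{size_bytes / (1024 * 1024):.1f} MB"
```

You could call `format_mb(folder_size_bytes(r"<path to your collection folder>"))` in a loop while the collection runs, and stop the set once the value approaches the size you know your DC can still process.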

If you see the log is growing beyond your expectations, you can manually stop it before the stop condition hits the threshold you have configured:

Of course, you can also start and stop the reporting from a command line using the logman instructions in this post.
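For scripted runs, the basic `logman start` and `logman stop` verbs can be wrapped in Python. This is a minimal sketch and not necessarily the same commands as in the linked post; the helper names and the set name "Big AD Data Collector Set" are hypothetical placeholders for whatever you named your custom set.

```python
import subprocess


def build_logman_command(action, set_name):
    """Return the logman command line to start or stop a collector set."""
    if action not in ("start", "stop"):
        raise ValueError(f"unsupported action: {action}")
    return ["logman", action, set_name]


def run_logman(action, set_name):
    """Run logman on the local machine (Windows only, elevated prompt)."""
    return subprocess.run(build_logman_command(action, set_name), check=True)


# Hypothetical name of the custom set created in the wizard.
CUSTOM_SET = "Big AD Data Collector Set"
```

`run_logman("start", CUSTOM_SET)` starts the collection and `run_logman("stop", CUSTOM_SET)` ends it early, which is the scripted equivalent of stopping the set manually in Performance Monitor.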

Room for improvement

We are aware there is room for improvement to get bigger data sets reported in a shorter time. The good news is that many of these special configuration changes won’t be needed once your DCs are running on Windows Server 2016. We will talk about that in a future post.

Thanks for reading.

Herbert

Updated Apr 04, 2019
