Hey all,

If you are interested in an “incrementally expandable” Virtual Desktop Infrastructure (VDI) pool architecture and want to hear about some benchmarking results we just completed, you are in the right place!

Recently we partnered with Dell to build a 2000-seat VDI deployment (all pooled virtual desktops) at our Enterprise Engineering Center (EEC) in Redmond. The deployment is based on a reference architecture we developed jointly that allows easy, incremental expansion of capacity with minimal impact on the shared storage or the storage network. The lab environment was fully isolated to minimize interference from the corporate network, and the network backbone was built on 2x10 Gb infrastructure.

The key idea of this architecture is that VDI compute and storage are local to each host. Think of each host as a VDI pod: to host more users, you simply add more pods. The main advantage of this design is that highly available (HA) storage is needed only for user documents and settings, and such infrastructure typically already exists in an enterprise. If it doesn't, it can be built largely independently of the VDI. To complete the picture, some shared storage might be required depending on how you prefer to configure HA for back-end services such as SQL, although the VDI-specific services provide application-level HA and can work either way.

Following is a high-level overview of our deployment.

In the preceding diagram, note that the capacity of this deployment is primarily a function of the number of VDI hosts. You can grow capacity simply by adding hosts, without upgrading the management infrastructure; bear in mind, though, that you may also need to increase storage for user documents and settings (the same is true of traditional desktops with roaming profiles or redirected folders). Another benefit of this architecture is that the design of storage for user documents and settings is decoupled from the VDI design, which makes it especially well suited to pooled virtual desktops.

So with that quick intro, let’s jump into the details and results!

Results

We deployed a Windows 8 (x86) virtual machine with Office 2013 and optimized the virtual machines for the VDI workload. Each virtual machine had a single vCPU and started with about 800 MB of RAM. We also enabled Hyper-V Dynamic Memory for better memory utilization, which is especially helpful for VDI workloads. The R720 hosts (dual-socket Xeon E5-2690 @ 2.90 GHz) each had 256 GB of RAM.

We built a Login VSI (v3.7) benchmarking infrastructure to run the VSI Medium workload (http://www.loginvsi.com/) against this deployment. All launchers were virtual machines on a pair of Dell 910 Hyper-V servers; we used about 100 launchers, with 20 connections per launcher.

Although we could have pushed each host to maximum CPU, we chose to keep them at about 80% and run 150 virtual machines per host. This better approximates a real-world deployment, which needs some headroom for unexpected surges. I'd like to write another blog post describing a single host's behavior when CPU is pushed to the maximum, but that's a side note.
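Because each host is a self-contained pod, sizing largely reduces to dividing the seat count by the per-host density. The sketch below assumes a purely linear model at the 150 virtual machines per host used in this test, and it ignores limits in the management tier and in user-document storage. The function names are hypothetical, chosen for illustration.

```python
import math

# Density used in this benchmark: 150 pooled VMs per VDI host,
# which kept host CPU at roughly 75-80%.
SEATS_PER_HOST = 150


def hosts_needed(seats: int, seats_per_host: int = SEATS_PER_HOST) -> int:
    """VDI hosts (pods) required for `seats` pooled desktops, rounded up."""
    return math.ceil(seats / seats_per_host)


def seats_supported(hosts: int, seats_per_host: int = SEATS_PER_HOST) -> int:
    """Pooled-desktop capacity of `hosts` identical VDI hosts."""
    return hosts * seats_per_host


print(hosts_needed(2000))    # 14 hosts
print(seats_supported(14))   # 2100 seats of capacity
print(hosts_needed(3000))    # 20 hosts to grow to 3000 seats
```

At 150 seats per host, 2000 seats round up to 14 hosts, which matches the host count in this deployment.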

Following are the VSI benchmarking results for our 2000-seat pooled virtual desktop deployment; the VSI maximum was not reached.

Logons were spread over one hour (2000 logons in 60 minutes), and each virtual machine started running the VSI Medium workload within about 15 seconds of logon.
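To put the one-hour logon window in perspective, the sketch below computes the logon pacing it implies. It assumes logons are evenly spaced in time and evenly spread across launchers and hosts; Login VSI's actual launch scheduling may differ, and the names are illustrative.

```python
# Simple model: logons are evenly spaced across the logon window.
TOTAL_SESSIONS = 2000
LOGON_WINDOW_S = 60 * 60                              # one-hour logon window
LAUNCHERS = 100
SESSIONS_PER_LAUNCHER = TOTAL_SESSIONS // LAUNCHERS   # 20
VMS_PER_HOST = 150                                    # assumption: even spread over hosts


def logon_interval_s(sessions: int, window_s: float) -> float:
    """Seconds between consecutive logons when they are evenly spaced."""
    return window_s / sessions


global_interval = logon_interval_s(TOTAL_SESSIONS, LOGON_WINDOW_S)
per_launcher_interval = logon_interval_s(SESSIONS_PER_LAUNCHER, LOGON_WINDOW_S)
per_host_logons_per_min = VMS_PER_HOST / (LOGON_WINDOW_S / 60)

print(f"{global_interval:.1f} s between logons across the deployment")    # 1.8
print(f"{per_launcher_interval:.0f} s between logons on each launcher")  # 180
print(f"{per_host_logons_per_min:.1f} logons per minute on each host")   # 2.5
```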

The load on the VDI management infrastructure was also low in this 2000-seat run, which is why such a deployment can easily grow by adding more hosts.

Network load

In the LAN environment we set up (2x10 Gb), the RDP load on the network averaged about 400 kbps per pooled virtual desktop running the VSI Medium workload, so user traffic for this 2000-seat deployment would be about 800 Mbps. This is well below the capacity of the network infrastructure we deployed.
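The 800 Mbps figure is simply the per-desktop average multiplied by the seat count. The sketch below repeats that arithmetic and compares it with the backbone, assuming decimal units and that both 10 Gb links carry user traffic (for example, when teamed); both assumptions are labeled in the code.

```python
PER_SESSION_KBPS = 400    # measured average RDP load per desktop (VSI Medium)
SESSIONS = 2000
LINK_GBPS = 10
LINKS = 2                 # assumption: both 10 Gb links carry user traffic


def aggregate_mbps(per_session_kbps: float, sessions: int) -> float:
    """Total user traffic in Mbps (decimal units: 1 Mbps = 1000 kbps)."""
    return per_session_kbps * sessions / 1000


total = aggregate_mbps(PER_SESSION_KBPS, SESSIONS)
capacity = LINK_GBPS * LINKS * 1000          # backbone capacity in Mbps
print(f"{total:.0f} Mbps of user traffic")                 # 800 Mbps
print(f"{total / capacity:.0%} of the 2x10 Gb backbone")   # 4%
```

Even if only a single 10 Gb link were available for user traffic, the same load would be about 8% of it.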

SQL load

The following chart shows the CPU and IO load on the SQL virtual machine.

As some of you may know, SQL is a key part of the high availability (HA) RD Connection Broker model in Windows Server 2012: the HA RD Connection Broker servers use SQL to store deployment settings (for more info, please see the following post). Customers can choose among several SQL HA models to build end-to-end HA brokering.

Please note that the load on the SQL virtual machine is very small: about 3% CPU and very little I/O. This means that we can easily host more virtual machines or handle faster logons.

SQL configuration

- 4 vCPUs, 8192 MB RAM (~6 GB free, only ~2 GB used)

- 2000 connections in one hour

- SQL virtual machine running on R720s

Load on the HA RD Connection Broker server

Similarly, the CPU load on the RD Connection Broker server is ~2%, which means that we have plenty of CPU to handle user logons at a faster rate.

RD Connection Broker configuration

- 2 vCPUs, 8192 MB RAM (~6 GB free, only ~2 GB used)

- Broker virtual machines running on R720s

Following is the CPU and storage load on one of the 14 VDI hosts.

As you can see, the CPU consumption is about 75% with 150 virtual machines running the VSI medium workload.

The local storage on each host consists of ten 300 GB 15K RPM 6 Gbps SAS disks configured as RAID 1+0, which easily handles the necessary IOPS.
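For readers sizing a similar array, the sketch below shows one common way to estimate RAID 1+0 capacity and IOPS. The 200 IOPS per 15K spindle is an assumed rule-of-thumb value, not a measurement from this test; it was chosen because it reproduces the 2000 read / 1000 write IOPS estimate used later in this post. Function names are hypothetical.

```python
DISKS = 10
DISK_GB = 300
IOPS_PER_DISK = 200        # assumption: common rule of thumb for one 15K SAS spindle
RAID10_WRITE_PENALTY = 2   # each logical write goes to both disks of a mirrored pair


def raid10_usable_gb(disks: int, disk_gb: int) -> int:
    """Mirroring halves raw capacity."""
    return disks * disk_gb // 2


def raid10_iops(disks: int, iops_per_disk: int,
                write_penalty: int = RAID10_WRITE_PENALTY) -> tuple[int, int]:
    """Rough (read IOPS, write IOPS) for a RAID 1+0 array.

    Reads can be served by any spindle; each logical write costs
    `write_penalty` physical writes.
    """
    raw = disks * iops_per_disk
    return raw, raw // write_penalty


print(raid10_usable_gb(DISKS, DISK_GB))   # 1500 GB usable
print(raid10_iops(DISKS, IOPS_PER_DISK))  # (2000, 1000)
```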

For a tighter logon period, we recommend replacing one or two of the spindles with a small SSD to hold the virtual desktop template, because the I/O load during a shorter logon cycle can exceed the I/O capacity of the local spinning disks.

It is hard to say exactly where the limit lies, but a good rule of thumb is to estimate the I/O capacity of the 10-disk array at about 2000 read IOPS and 1000 write IOPS. Because the I/O load from 150 virtual machines over an hour-long logon period is about 1743 IOPS, the same workload compressed into about 30 minutes would generate about 3500 IOPS, exceeding the I/O capability of the local array.
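The 3500 IOPS estimate follows from scaling the measured one-hour load inversely with the logon window. The sketch below makes that assumption explicit: it treats the total logon I/O as fixed and compares the result against the 2000 read IOPS rule of thumb. That is the most optimistic ceiling, since any write share lowers the effective capacity. Names are illustrative.

```python
BASELINE_IOPS = 1743        # measured: 150 VMs, one-hour logon window
BASELINE_WINDOW_MIN = 60
ARRAY_READ_IOPS = 2000      # rule-of-thumb read capacity of the 10-disk array


def required_iops(window_min: float) -> float:
    """Assumption: the same total I/O is squeezed into a shorter window,
    so the IOPS rate scales inversely with the window length."""
    return BASELINE_IOPS * BASELINE_WINDOW_MIN / window_min


for window in (60, 30):
    iops = required_iops(window)
    # 2000 read IOPS is the most optimistic ceiling; exceeding it means
    # the array is overloaded whatever the read/write mix.
    verdict = "exceeds" if iops > ARRAY_READ_IOPS else "within"
    print(f"{window} min logon window: ~{iops:.0f} IOPS ({verdict} the read ceiling)")
```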

A 250-GB SSD for the virtual desktop template should easily provide the additional performance necessary for faster logons under a heavier workload.

And finally, let’s take a look at the memory consumption of a single virtual machine.

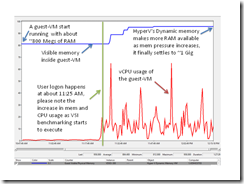

In the chart above, we see that a guest VM initially idles at about 800 MB of RAM, with flat CPU usage. At about 11:25 AM (marked by the vertical green line), a user logon completes, and moments later the VSI workload starts running. Once the benchmark starts, CPU usage rises and so does memory usage: Hyper-V Dynamic Memory provides additional RAM, and the guest RAM finally settles at about 1 GB. This pattern repeats for all virtual machines on a VDI host, where about 150 GB of memory is consumed by the 150 virtual machines at a cumulative CPU usage of about 75%. That leaves plenty of headroom for CPU spikes and for real-world workloads that demand more memory, since each server has 256 GB of RAM (remember, though, that a portion of the 256 GB is reserved for the services running in the parent partition).
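The memory headroom argument can be written as a one-line budget. The sketch below uses the observed ~1 GB steady state per guest; the parent-partition reserve is not stated in this post, so it is left as a caller-supplied parameter, and the function name is hypothetical.

```python
HOST_RAM_GB = 256
VMS_PER_HOST = 150
STEADY_STATE_GB_PER_VM = 1.0   # observed: guests settle at about 1 GB under VSI Medium


def memory_headroom_gb(parent_reserve_gb: float,
                       host_ram_gb: float = HOST_RAM_GB,
                       vms: int = VMS_PER_HOST,
                       gb_per_vm: float = STEADY_STATE_GB_PER_VM) -> float:
    """RAM left for guest growth after subtracting the guests'
    steady-state usage and the parent-partition reserve."""
    return host_ram_gb - parent_reserve_gb - vms * gb_per_vm


# The parent-partition reserve is not given here; 0 shows the upper
# bound, and any real reserve is subtracted from it.
print(memory_headroom_gb(parent_reserve_gb=0))   # 106.0
```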

This post should help customers who are looking to set up a modular, scalable VDI solution by providing deeper insight into some of the key performance and resource requirements for planning large-scale deployments.

Ara Bernardi

RDS Team