Azure Governance and Management Blog

Azure Budgets and Azure OpenAI Cost Management

Azure OpenAI is a cloud-based service that gives you access to large-scale generative AI models, such as GPT-4 and DALL-E, for applications involving language, code, reasoning, and image generation. Azure OpenAI Service provides enterprise-grade security and reliability for your AI workloads.

Azure OpenAI Pricing Overview

For context, Azure OpenAI Service pricing is based on a pay-as-you-go consumption model, which means you pay only for what you use. The price per unit depends on the type and size of the model you choose, and on the number of tokens used in the prompt and the response.

Tokens are the units of measurement used to charge for the API. Each request consumes a number of tokens that depends on the model, the input length, and the output length. For example, if you use the DaVinci model to generate a 100-token completion from a 50-token prompt, you are charged for 150 tokens in total. For some models, such as GPT-4, prompt and completion tokens are billed at different rates. Tokens are not the same as words or characters; they are pieces of text that the models use to process language. For example, the word “hamburger” is split into three tokens, “ham”, “bur”, and “ger”, while a short, common word like “pear” is a single token. The more tokens you use, the higher your bill, which leads to the request described below.
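As a rough illustration of the arithmetic, the Python sketch below estimates the charge for a single request. It assumes rates quoted per 1,000 tokens, and the rate values are placeholders, not real prices; check the Azure OpenAI pricing page for your model and region. `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  prompt_rate_per_1k: float, completion_rate_per_1k: float) -> float:
    """Estimate the charge for one request, with rates quoted per 1,000 tokens."""
    prompt_cost = prompt_tokens / 1000 * prompt_rate_per_1k
    completion_cost = completion_tokens / 1000 * completion_rate_per_1k
    return prompt_cost + completion_cost


# Hypothetical rates for illustration only; real prices vary by model and region.
PROMPT_RATE = 0.02      # currency units per 1K prompt tokens (assumed)
COMPLETION_RATE = 0.02  # currency units per 1K completion tokens (assumed)

# The example from the text: a 50-token prompt and a 100-token completion,
# i.e. 150 billable tokens. With a single flat rate the split does not matter.
print(f"Estimated cost: {estimate_cost(50, 100, PROMPT_RATE, COMPLETION_RATE):.4f}")

# With different prompt and completion rates, the split does matter.
print(f"Estimated cost: {estimate_cost(50, 100, 0.03, 0.06):.4f}")
```

Because cost scales linearly with tokens, long prompts (for example, large system messages or retrieved context) add to the bill on every call, not only long completions.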

Problem Statement

Many customers adopting Azure OpenAI (AOAI) want to control their costs by setting budgets and taking action based on them. One specific, frequently repeated request is to automatically put a hard stop on calls to the AOAI service once a certain budget threshold is crossed.

Possible Solution

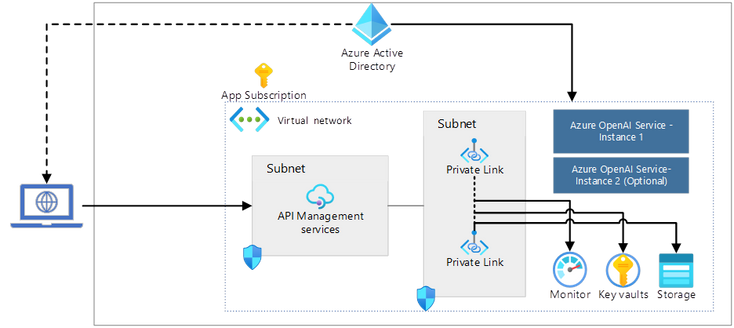

AOAI is an API-based service, so unlike some other Azure services, it has no Start/Stop option. There is, however, a workaround: add an Azure API Management (APIM) layer in front of AOAI. Here is my perspective on how to do this.

Optionally, you can also route requests through your usual enterprise path in your hub, such as a firewall (FW) or Application Gateway.

This approach has multiple advantages, cost control (which we will discuss shortly) being only one of them:

- Provide a gate between the application and the AOAI instance to inspect and act on requests and responses.

- Enforce Azure AD authentication.

- Provide enhanced logging for security and for tracking token usage through the service.

- Lastly, use policies to restrict usage once a certain cost threshold has been crossed.
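For the token-tracking point, Azure OpenAI completion responses include a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`. The Python sketch below assumes you can capture response bodies (for example, in your logging pipeline) and shows how those counts could be tallied per caller; `TokenLedger`, `extract_usage`, and the caller IDs are hypothetical.

```python
import json
from collections import defaultdict


def extract_usage(response_body: str) -> dict:
    """Read the token counts from the 'usage' object of a completion response."""
    usage = json.loads(response_body).get("usage", {})
    return {key: usage.get(key, 0)
            for key in ("prompt_tokens", "completion_tokens", "total_tokens")}


class TokenLedger:
    """Hypothetical in-memory running total of tokens per calling application."""

    def __init__(self) -> None:
        self.totals = defaultdict(int)

    def record(self, caller_id: str, response_body: str) -> int:
        """Add one response's total_tokens to the caller's tally; return the new total."""
        self.totals[caller_id] += extract_usage(response_body)["total_tokens"]
        return self.totals[caller_id]


# A trimmed response body containing only the fields used here.
sample = json.dumps({"usage": {"prompt_tokens": 50,
                               "completion_tokens": 100,
                               "total_tokens": 150}})
ledger = TokenLedger()
ledger.record("app-a", sample)
print(dict(ledger.totals))
```

In a real deployment this tally would live in durable storage (such as your log workspace) rather than in memory.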

The approach I have taken here is to create an inbound processing policy that uses the ip-filter policy with an empty list of allowed IP addresses. This denies every call to the service, because no requestor's IP address is in the list. Here is what the basic inbound processing policy with ip-filter looks like:

```xml
<policies>
    <inbound>
        <base />
        <ip-filter action="allow" />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```
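To see why an empty allow-list blocks everything, here is a small Python model of the `action="allow"` semantics: a request passes only if its IP matches a listed address or range. It only mimics the logic for illustration; APIM evaluates the real policy, and `ip_filter_allows` and its entry format are hypothetical.

```python
import ipaddress
from typing import Iterable, Tuple, Union

# An allow-list entry: a single address, or an inclusive (from, to) range.
Entry = Union[str, Tuple[str, str]]


def ip_filter_allows(client_ip: str, allowed: Iterable[Entry]) -> bool:
    """Model of an action="allow" ip-filter: only listed IPs get through."""
    ip = ipaddress.ip_address(client_ip)
    for entry in allowed:
        if isinstance(entry, tuple):
            low, high = (ipaddress.ip_address(x) for x in entry)
            # Only compare addresses of the same IP version.
            if low.version == ip.version == high.version and low <= ip <= high:
                return True
        elif ip == ipaddress.ip_address(entry):
            return True
    # No entry matched, so the request is rejected.
    return False


# With an empty allow-list, as in the policy above, nothing matches.
for caller in ("10.0.0.4", "203.0.113.7", "2001:db8::1"):
    print(caller, ip_filter_allows(caller, []))
```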

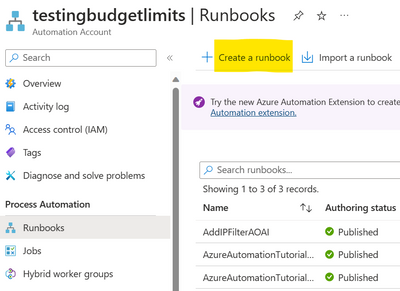

Let us start by creating the Azure Automation runbook that applies the above policy at the relevant scope under inbound processing. Policies can be scoped at the tenant level, product level, API level, or even at the operation level within each API. In this example, we apply it at the API level.

Here is the code for the runbook:

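```powershell
# Authenticate with the Automation account's system-assigned managed identity
try
{
    "Logging in to Azure..."
    Connect-AzAccount -Identity
}
catch {
    Write-Error -Message $_.Exception
    throw $_.Exception
}

# Inbound ip-filter with no allowed addresses, so every call is rejected
$newPolicy = '<policies>
    <inbound>
        <base />
        <ip-filter action="allow" />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>'

# Replace the placeholders with your resource group and APIM instance names
$apimContext = New-AzApiManagementContext -ResourceGroupName "<<RG-NAME>>" -ServiceName "<<APIM-INSTANCE-NAME>>"

# Apply the policy at API scope; this replaces the API's existing policy document
Set-AzApiManagementPolicy -Context $apimContext -ApiId "<<API-ID>>" -Policy $newPolicy
```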

Note that the runbook uses the system-assigned managed identity of the Automation account to authenticate, and that identity must be authorized to change the APIM API policy. Grant the Automation account the relevant RBAC permissions, otherwise the operation fails. For an API-scope policy like this one, the minimum permission needed is 'Microsoft.ApiManagement/service/apis/policies/write' ('Microsoft.ApiManagement/service/apis/operations/policies/write' applies if you set the policy at operation scope).

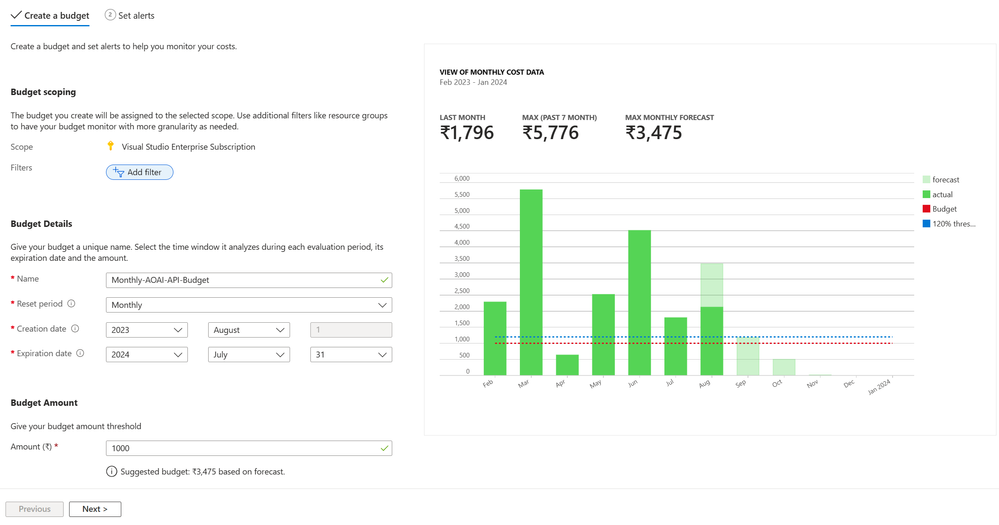

Once you have created and published the runbook, go to Cost Management > Budgets and create a budget at the scope you require, such as a management group, subscription, or resource group. You can add filters to narrow the budget further, for example to the Azure OpenAI resource itself.

On the next page, set the conditions that trigger the alert. Alerts can be based on either actual or forecasted cost. In the example below, the alert is triggered when actual cost reaches 120% of the budget amount.
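The alert condition boils down to comparing a cost figure against a percentage of the budget. The Python sketch below is a simplified model of that check, assuming "reaches" means greater than or equal to; Azure evaluates budgets on its side, and `AlertCondition` and `alert_fires` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class AlertCondition:
    """Hypothetical model of one budget alert condition."""
    threshold_percent: float  # e.g. 120 means 120% of the budget amount
    basis: str                # "actual" or "forecasted"


def alert_fires(budget_amount: float, actual_cost: float,
                forecasted_cost: float, condition: AlertCondition) -> bool:
    """Return True when the chosen cost basis reaches the threshold."""
    if condition.basis == "actual":
        cost = actual_cost
    elif condition.basis == "forecasted":
        cost = forecasted_cost
    else:
        raise ValueError(f"unknown basis: {condition.basis!r}")
    return cost >= budget_amount * condition.threshold_percent / 100


# The condition from the text: actual cost at 120% of the budget.
condition = AlertCondition(threshold_percent=120, basis="actual")
print(alert_fires(1000, actual_cost=1150, forecasted_cost=1400, condition=condition))
print(alert_fires(1000, actual_cost=1200, forecasted_cost=1400, condition=condition))
```

A forecasted condition can fire well before actual spend gets there, which makes it an option if you want the block applied earlier.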

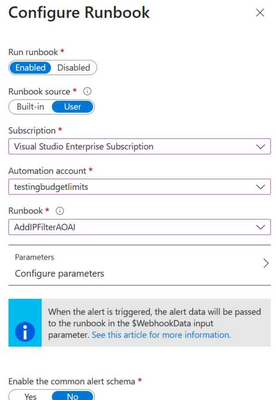

Next, create an action group that calls the runbook when this alert is triggered. You can either create it separately or select the Manage action group link.

Create a new action group and go to the Actions section.

Select Automation Runbook as the action type, then choose the runbook created in the previous steps.

Give the action a name, add tags as necessary, and save the action group. Then select the Create budget link at the top of the page (if you navigated here from the Budgets page).

Select the action group you created and add an email recipient so that you receive an email when this budget alert is triggered.

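And that is it! The billing data is refreshed every 24 hours, so when the budget alert is triggered, an email is sent and the runbook adds the API policy that restricts access to the service. Because of this refresh interval, enforcement is not real-time: spend can continue to accrue past the threshold until the next evaluation.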

Optionally, you can set up another runbook on a schedule to reset the inbound policy on the 1st of every month (or the first day of your billing period).
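A reset runbook would call Set-AzApiManagementPolicy the same way as above, but pass a policy without the ip-filter. As a sketch of how the two policy documents relate, the Python below builds both from one hypothetical helper, `build_policy`. It assumes the API has no other API-level policies; if it does, the reset policy must keep them, since setting the policy replaces the whole document.

```python
import xml.etree.ElementTree as ET

SECTIONS = ("inbound", "backend", "outbound", "on-error")


def build_policy(blocked: bool) -> str:
    """Build a base-only APIM policy; when blocked, add an empty allow-list ip-filter."""
    root = ET.Element("policies")
    for name in SECTIONS:
        section = ET.SubElement(root, name)
        ET.SubElement(section, "base")
        if name == "inbound" and blocked:
            # No <address> children, so no caller matches the allow-list.
            ET.SubElement(section, "ip-filter", {"action": "allow"})
    return ET.tostring(root, encoding="unicode")


block_policy = build_policy(blocked=True)   # what the budget runbook applies
reset_policy = build_policy(blocked=False)  # what a monthly reset runbook would apply
print(reset_policy)
```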

One important caveat: lock down access to the Azure OpenAI service with private endpoints and the service firewall so that only the APIM instance can reach it. Otherwise, this solution will not work, because callers can bypass APIM, call the service directly, and make the budget policy redundant.

In conclusion, Azure OpenAI Service is a powerful and flexible way to use state-of-the-art AI models for a wide range of applications. However, its cost can be significant and depends on the type, size, and usage of the models. It is therefore important to plan and optimize your AI workloads to control costs and avoid unnecessary expenses.
