Educator Developer Blog

Building AI Agent Applications Series - Understanding AI Agents

Do you know what AI agents are, and how to apply them in different scenarios? For building AI agents, Microsoft released the open-source framework Autogen. But how does it relate to Semantic Kernel and Prompt flow? I hope this series answers these questions and gives everyone a clear understanding of AI agents and of how to combine Autogen, Semantic Kernel, and Prompt flow to build intelligent applications.

People have applied artificial intelligence in many industries and application scenarios. With the emergence of LLMs, we have moved from traditional chatbots built on predefined processes plus semantic matching to Copilot applications that interact with LLMs through natural language. Over the past year or so, attention has focused mainly on the foundational theory of LLMs; in 2024 we should move on to applying LLMs in real scenarios. Papers, application frameworks, and practices from large companies now support building LLM applications. So what is the final form of so-called artificial intelligence applications? Examples that come to mind are GitHub Copilot for programming assistance, Microsoft 365 Copilot for office scenarios, and Microsoft Copilot in Windows or Bing. However, Copilot applications rely heavily on people to guide or correct them through prompts, so they are not yet fully intelligent applications. Attempts at fully intelligent systems began in the 1980s, and AI agents are the current best practice for achieving them.

An agent interacts with the environment it operates in: it receives instructions or data from the application scenario and decides how to respond to them in order to achieve its final goal. Intelligent agents not only have human-like reasoning capabilities but can also simulate human behavior. They can be simple systems based on business processes, or as complex as machine learning models. Agents make decisions using pre-established rules or models trained through machine learning/deep learning, and sometimes require external control or supervision.
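
To make the perceive-decide-act cycle concrete, here is a minimal sketch of the simplest kind of agent described above: one that follows pre-established rules rather than an LLM. The thermostat scenario, the 22 °C goal, and every name in the code are hypothetical; a real agent would perceive through sensors or APIs and might use a trained model to decide.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical scenario: a rule-based thermostat agent whose goal is 22 °C.
const double Goal = 22.0;
double roomTemperature = 18.0;          // state of the environment
var actionLog = new List<string>();     // every action the agent took

// Perceive: read data from the environment.
double Perceive() => roomTemperature;

// Decide: pre-established rules map an observation to an action.
string Decide(double observed) =>
    observed < Goal - 0.5 ? "heat" :
    observed > Goal + 0.5 ? "cool" :
    "idle";

// Act: the chosen action changes the environment.
void Act(string action)
{
    if (action == "heat") roomTemperature += 1.0;
    else if (action == "cool") roomTemperature -= 1.0;
    actionLog.Add(action);
}

// Loop until the goal is reached or the step budget runs out.
for (int step = 0; step < 20; step++)
{
    string action = Decide(Perceive());
    Act(action);
    if (action == "idle") break;        // goal reached, nothing left to do
}

Console.WriteLine($"Final temperature: {roomTemperature} °C after {actionLog.Count} actions");
```

Swapping `Decide` for a call to a trained model or an LLM turns the same loop into a learning-based agent; the surrounding cycle stays the same.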

Characteristics of an AI agent:

- Planning: breaks a task into steps and reasons through it with a chain of thought. LLMs greatly enhance an agent's planning ability and let it understand tasks more accurately.

- Memory: remembers behavior and parts of its logic, stores experiences, and can reflect on itself.

- Tool chain: capabilities such as code execution, search, and computation, which give the agent a strong ability to take action.

- Perception: perceives and obtains information from its environment, such as images, sounds, and temperature, which provides better conditions for execution.
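
The following sketch shows how planning, memory, and a tool chain fit together in one agent loop (perception is left out). It is a hypothetical illustration, not a Semantic Kernel or Autogen API: the `tools` entries are toy stand-ins, and `Plan` simply parses a `tool: input; tool: input` string where a real agent would ask an LLM to produce the plan.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Tool chain: hypothetical named tools the agent can call.
var tools = new Dictionary<string, Func<string, string>>
{
    ["search"] = query => $"results for '{query}'",   // stand-in for a web or document search
    ["compute"] = expr =>                              // toy calculator: only handles "a*b"
    {
        string[] operands = expr.Split('*');
        return (int.Parse(operands[0]) * int.Parse(operands[1])).ToString();
    },
};

// Memory: every step the agent has taken, kept for later steps and self-reflection.
var memory = new List<(string Tool, string Input, string Output)>();

// Planning: split a task of the form "tool: input; tool: input" into ordered steps.
List<(string Tool, string Input)> Plan(string task) =>
    task.Split(';', StringSplitOptions.RemoveEmptyEntries)
        .Select(step => step.Split(':', 2))
        .Select(parts => (parts[0].Trim(), parts[1].Trim()))
        .ToList();

foreach (var (tool, input) in Plan("search: AI agents; compute: 6*7"))
{
    string output = tools[tool](input);   // act through the tool chain
    memory.Add((tool, input, output));    // store the experience
}

// Review memory, the raw material for self-reflection.
foreach (var (tool, input, output) in memory)
    Console.WriteLine($"{tool}({input}) -> {output}");
```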

Technical support for realizing intelligent agents

There is already considerable practical experience in building LLM applications.

There are many frameworks for implementing intelligent agents; the previously mentioned Semantic Kernel and Autogen can both be used. OpenAI has also added the Assistants API to enhance models' capabilities as agents; it currently provides code interpreter, retrieval, and function calling. The Assistants API for Azure OpenAI Service is also coming soon, which should give agent applications plenty of intelligence.
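
Function calling works roughly like this: the application describes its functions to the model, the model replies with the name of a function and a JSON string of arguments, and the application runs that function and returns the result to the model. The sketch below covers only the application-side dispatch step. The function names (`get_weather`, `add`) and the hard-coded model output are assumptions for illustration; no OpenAI SDK is called.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical local functions the application exposes to the model.
var functions = new Dictionary<string, Func<JsonElement, string>>
{
    ["get_weather"] = args => $"Sunny in {args.GetProperty("city").GetString()}",  // fake weather data
    ["add"] = args => (args.GetProperty("a").GetInt32() + args.GetProperty("b").GetInt32()).ToString(),
};

// Dispatch a function-call request: find the function by name and pass it the parsed JSON arguments.
string Dispatch(string functionCallJson)
{
    using JsonDocument call = JsonDocument.Parse(functionCallJson);
    string name = call.RootElement.GetProperty("name").GetString() ?? "";
    string argumentsJson = call.RootElement.GetProperty("arguments").GetString() ?? "{}";
    using JsonDocument arguments = JsonDocument.Parse(argumentsJson);
    return functions.TryGetValue(name, out var function)
        ? function(arguments.RootElement)
        : $"error: unknown function '{name}'";
}

// A hard-coded stand-in for a model's function-call output (arguments arrive as a JSON string).
string modelOutput = JsonSerializer.Serialize(new
{
    name = "add",
    arguments = JsonSerializer.Serialize(new { a = 2, b = 3 })
});

Console.WriteLine(Dispatch(modelOutput));   // this result would be sent back to the model
```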

Many people focus on application-layer frameworks and often compare Semantic Kernel and Autogen. After all, both come from Microsoft and both offer good task or plan orchestration capabilities, so many people feel the two overlap considerably.

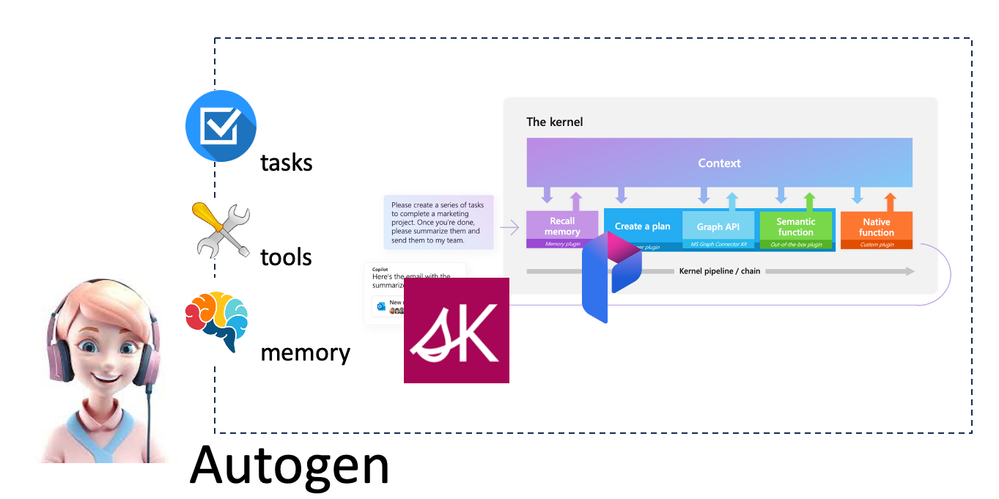

Semantic Kernel vs Autogen

Semantic Kernel focuses on effectively breaking an individual task into steps within Copilot applications, which is the appeal of the Semantic Kernel Planner API. Autogen, on the other hand, focuses more on building agents: it divides a goal into tasks and assigns them to different agents, and each agent executes its assigned tasks on its own or by interacting with other agents. Behind each agent's task there can be a pipeline of orchestrated tasks, an extension method for solving a problem, or skills triggered by corresponding prompts, all of which can be organized with Semantic Kernel plugins. When we need stable task output, we can also add Prompt flow to evaluate the output.
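
As a rough illustration of the Autogen-style division of labor described above, the sketch below assigns each task of a goal to an agent by role and passes each agent's output to the next. It is not the Autogen API: the roles, tasks, and lambda "agents" are hypothetical stand-ins for LLM-backed agents.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical role-based agents: (assigned task, result so far) -> new result.
// Each lambda stands in for an LLM-backed agent; none of this is the Autogen API.
var agents = new Dictionary<string, Func<string, string, string>>
{
    ["planner"] = (assignedTask, previous) => $"plan for '{assignedTask}'",
    ["coder"] = (assignedTask, previous) => $"code based on [{previous}]",
    ["reviewer"] = (assignedTask, previous) => $"review of [{previous}]",
};

// The goal divided into tasks, each assigned to an agent by role.
var assignments = new List<(string Role, string Task)>
{
    ("planner", "build a console app"),
    ("coder", "write Program.cs"),
    ("reviewer", "check the code"),
};

string latest = "";
var transcript = new List<string>();
foreach (var (role, task) in assignments)
{
    latest = agents[role](task, latest);   // each agent does its task, building on earlier output
    transcript.Add($"{role}: {latest}");
}

transcript.ForEach(Console.WriteLine);
```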

Using Semantic Kernel to implement AI agents

Semantic Kernel has added agent support in its Experimental library. It introduces `AgentBuilder`, which works with the Assistants API to configure the agent's "brain". The agent's planning, memory, and tools are defined using different plugins.

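```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Experimental.Agents;

// The agent's skills and tool chain, packaged as a Semantic Kernel plugin
// (YourPlugin is a placeholder for your own plugin class).
KernelPlugin yourPlugin = KernelPluginFactory.CreateFromType<YourPlugin>();

var yourAgent = await new AgentBuilder()
    .WithOpenAIChatCompletion("your-model-id", "your-openai-key")  // a model that supports the Assistants API
    .WithInstructions("Your agent instructions")
    //.FromTemplate(EmbeddedResource.Read("Your agent YAML"))        // alternative: instructions from a template
    .WithName("Your agent name")
    .WithDescription("Your agent description")
    .WithPlugin(yourPlugin)
    .BuildAsync();
```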

Notes

- `WithOpenAIChatCompletion` requires an OpenAI or Azure OpenAI Service model that supports the Assistants API (Azure OpenAI Service support is soon to be released). Currently supported OpenAI models are GPT-3.5 and GPT-4 models.

- `WithInstructions` gives the agent clear task instructions and tells it how to carry them out. This is effectively a process definition, so describe it clearly; otherwise accuracy will drop.

- `FromTemplate` can be used instead to describe the task instructions with a template.

- `WithName` sets the agent's name, which is required and makes calls to the agent clearer.

- `WithPlugin` adds the skills and tool chains the agent uses to complete tasks; these correspond to Semantic Kernel plugins.

Let's take a simple scenario: we want agents to build a .NET console application, compile it, and run it, with the whole process completed by agents. This scenario needs two agents: one that generates the .NET CLI script and one that runs it. In Semantic Kernel, we use different plugins to define the required planning, memory, and tools. The following is the relevant structure diagram.

You can get the sample code from the Semantic Kernel CookBook:

https://github.com/microsoft/SemanticKernelCookBook/tree/main/workshop/dotNET/workshop3/dotNETAgent
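
Independent of the CookBook code, the two-agent scenario can be sketched in plain C# as a hand-off between a script generator and a script runner. This is a hypothetical, simplified version: `GenerateScript` returns a fixed script instead of calling an LLM, and `ExecuteScript` only performs a dry run with a simple guardrail rather than starting processes.

```csharp
using System;
using System.Collections.Generic;

// Agent 1 (hypothetical stand-in for an LLM-backed agent): generates a .NET CLI script.
List<string> GenerateScript(string projectName) => new List<string>
{
    $"dotnet new console -o {projectName}",
    $"dotnet build {projectName}",
    $"dotnet run --project {projectName}",
};

// Agent 2: runs the script. This sketch only does a dry run: it refuses any line that is
// not a dotnet command and records the commands it would have executed.
(bool Accepted, List<string> Ran) ExecuteScript(List<string> commands)
{
    var ran = new List<string>();
    foreach (string command in commands)
    {
        if (!command.StartsWith("dotnet ")) return (false, ran);   // guardrail on generated code
        ran.Add(command);   // a real executor would start a process here
    }
    return (true, ran);
}

// The two agents interact: the output of the first is the input of the second.
var (accepted, ranCommands) = ExecuteScript(GenerateScript("HelloAgent"));
Console.WriteLine(accepted ? "Script accepted" : "Script rejected");
ranCommands.ForEach(Console.WriteLine);
```

The guardrail matters because the executing agent runs code it did not write; checking generated commands before running them is a cheap first line of defense.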

Application scenarios of AI agents

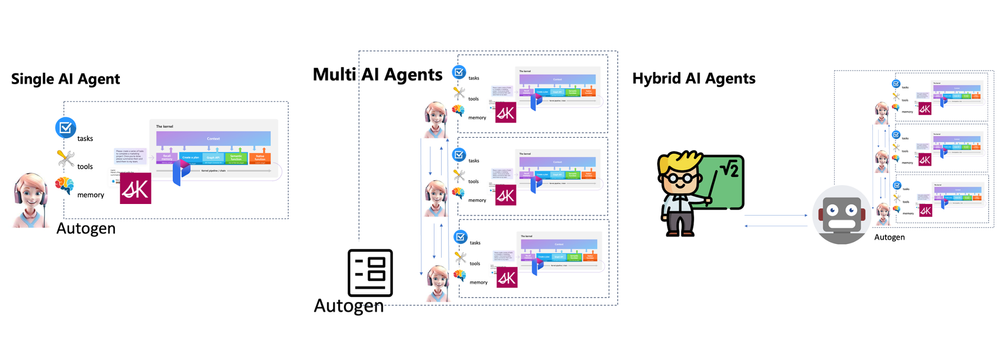

AI agents are an important scenario for LLM applications, and building agent applications will be an important technical field in 2024. There are currently three main forms of agents: single AI agents, multi-AI agents, and hybrid AI agents.

Single AI Agent

A single agent completes work in a specific task scenario. For example, the workspace agent in GitHub Copilot Chat completes specific programming tasks based on user needs. Using the capabilities of LLMs, a single agent can perform different actions depending on the task, such as requirements analysis, reading a project, and generating code. Single agents can also be used in smart homes and autonomous driving.

Multi-AI agents

Multiple AI agents interact with one another to complete the work. The Semantic Kernel agent implementation above is an example: the agent that generates the script interacts with the agent that executes it. Multi-agent scenarios are very helpful for highly collaborative work, such as software development, intelligent manufacturing, and enterprise management.
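
Multi-agent collaboration is often organized as a conversation in which agents take turns replying to the latest message until a termination condition is met. The sketch below shows that turn-taking loop with two hypothetical agents, a writer and a reviewer, whose replies are hard-coded rules; the `APPROVED` keyword and the turn cap are assumptions of this sketch, not features of a specific framework.

```csharp
using System;
using System.Collections.Generic;

// Two hypothetical agents; each reads the latest message and replies. Real agents would call an LLM.
var participants = new List<(string Name, Func<string, string> Reply)>
{
    ("Writer", last => last.Contains("fix") ? "Fixed version of the script" : "First draft of the script"),
    ("Reviewer", last => last.Contains("Fixed") ? "APPROVED" : "Please fix the error handling"),
};

var conversation = new List<string> { "User: build and run a console app" };
const int MaxTurns = 10;   // safety cap so the agents cannot loop forever

for (int turn = 0; turn < MaxTurns; turn++)
{
    var (name, reply) = participants[turn % participants.Count];   // round-robin speaker selection
    string message = reply(conversation[^1]);                       // respond to the latest message
    conversation.Add($"{name}: {message}");
    if (message == "APPROVED") break;                               // termination condition
}

conversation.ForEach(Console.WriteLine);
```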

Hybrid AI Agent

Humans and agents interact and make decisions together in the same environment. For example, professional fields such as smart healthcare and smart cities can use hybrid intelligence to complete complex professional work.
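
In a hybrid setup, one common pattern is a human approval gate: the agent proposes actions, and only those a person approves are carried out. The smart-city proposals and the rule standing in for the human operator below are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical actions proposed by an agent in a smart-city scenario.
var proposals = new List<string>
{
    "adjust traffic light timing on Main St",
    "close bridge for maintenance",
    "send congestion alert to drivers",
};

// The human decision point. In a real system this would be a review UI or an approval queue;
// here a simple rule stands in for the human operator (who rejects closures).
Func<string, bool> humanApproves = action => !action.StartsWith("close");

var approved = new List<string>();
var rejected = new List<string>();
foreach (string proposal in proposals)
{
    if (humanApproves(proposal)) approved.Add(proposal);   // the agent acts only after sign-off
    else rejected.Add(proposal);                            // the human keeps the final decision
}

Console.WriteLine($"Approved: {string.Join("; ", approved)}");
Console.WriteLine($"Rejected: {string.Join("; ", rejected)}");
```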

At present, applications of intelligent agents are still at a very early stage, and many enterprises and individual developers are still exploring. Taking the first step is critical, so I hope you will experiment more, and I also hope everyone will use Azure OpenAI Service to build more agent applications.

Resources

- Microsoft Semantic Kernel https://github.com/microsoft/semantic-kernel

- Microsoft Autogen https://github.com/microsoft/autogen

- Microsoft Semantic Kernel CookBook https://github.com/microsoft/SemanticKernelCookBook

- Pursuit of "wicked smartness" in VS Code https://code.visualstudio.com/blogs/2023/11/13/vscode-copilot-smarter