Model Benchmarks in Azure AI Studio

Overview

Azure AI Studio is a versatile, user-friendly platform for developers, data scientists, and machine learning experts. It offers a suite of tools and services that supports the journey from concept through evaluation to deployment, and it gives teams a single space in which to experiment with AI solutions, work with their data, and collaborate. Generative AI (GenAI) applications built in Azure AI Studio use large language models (LLMs) to generate responses to input prompts. The quality of the generated text depends on many factors, one of which is the performance of the LLM itself. An LLM's performance is assessed by how well it performs on a range of downstream tasks.

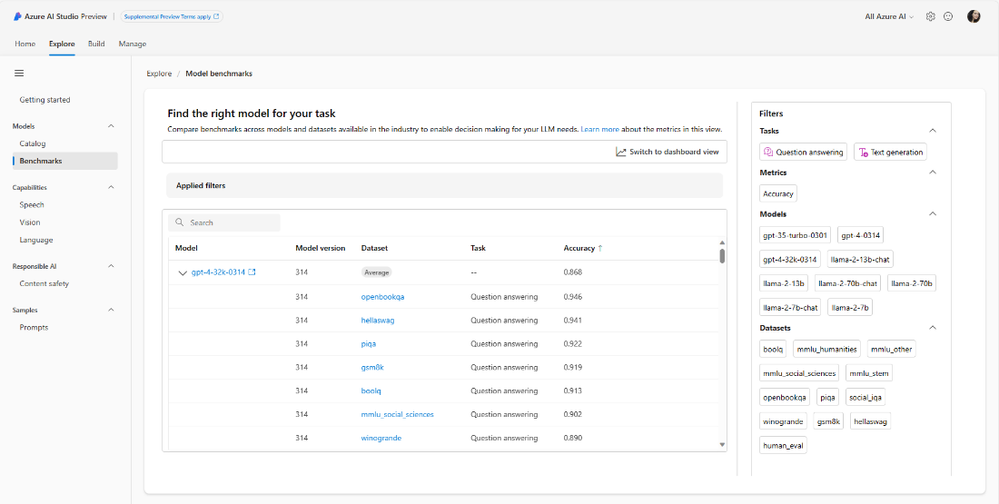

We are excited to announce the Public Preview release of “model benchmarks”. Model benchmarks in Azure AI Studio give users a way to review and compare the performance of various AI models. The platform provides LLM quality metrics for OpenAI models, such as gpt-4, gpt-4-32k, and gpt-35-turbo, and for Llama 2 models, such as Llama-2-7b. The published metrics simplify model selection and let users choose a model for their task with more confidence.

Why use Model Benchmarks?

Model benchmarks let users compare these models on metrics such as accuracy. This comparison supports informed, data-driven decisions when selecting the right model for a specific task, helping ensure that AI solutions are built on the best-performing option.

Previously, evaluating model quality could require significant time and resources. With the prebuilt metrics in model benchmarks, users can quickly identify the most suitable model for their project, reducing development time and infrastructure costs. Because benchmark comparisons are available in the same Azure AI Studio environment where users build, train, and deploy their AI solutions, the workflow is more efficient and team members can collaborate more easily.

Azure AI Studio's model benchmarks help developers and machine learning experts select the right AI model for their projects. By comparing models such as Llama-2-7b, gpt-4, gpt-4-32k, and gpt-35-turbo side by side, users can make informed decisions, optimize performance, and improve their overall AI development experience.

How do we calculate a benchmark?

To generate our benchmarks, we use three components:

- A base model, available in the Azure AI model catalog

- A publicly available dataset

- A metric used to score the model's responses

The benchmark results published in the model benchmarks experience come from public datasets that are commonly used for language model evaluation. In most cases, the data is hosted in GitHub repositories maintained by the creators or curators of the data. Azure AI evaluation pipelines download the data from its original source, extract a prompt from each example (row), generate model responses, and then compute the relevant accuracy metrics. In the model benchmarks experience, you can view details about each dataset we use directly within the tool.
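The pipeline described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the Azure AI evaluation code: the `Benchmark` class, the `extract_prompt` and `run_benchmark` helpers, the `question`/`answer` row schema, and the toy model are all assumptions, and the data is assumed to be already downloaded. It simply ties together the three components listed earlier (a model, a dataset, and a metric).

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# Hypothetical stand-ins: a "model" maps prompt text to generated text, and a
# "metric" scores one generated response against the reference answer.
Model = Callable[[str], str]
Metric = Callable[[str, str], float]


@dataclass
class Benchmark:
    """The three components of a benchmark: a model, a dataset, and a metric."""
    model: Model
    dataset: list[dict]  # rows assumed to be already downloaded from the source
    metric: Metric


def extract_prompt(row: dict) -> str:
    # Assumed row schema: each example has "question" and "answer" fields.
    return f"Question: {row['question']}\nAnswer:"


def exact_match(response: str, answer: str) -> float:
    # 1.0 only if the generated text equals the reference answer exactly.
    return 1.0 if response == answer else 0.0


def run_benchmark(bench: Benchmark) -> float:
    """Prompt the model on every row, score each response, and average."""
    scores = []
    for row in bench.dataset:
        response = bench.model(extract_prompt(row))
        scores.append(bench.metric(response, row["answer"]))
    return mean(scores)


rows = [
    {"question": "What is 2 + 2?", "answer": "4"},
    {"question": "What is the capital of France?", "answer": "Paris"},
]


def toy_model(prompt: str) -> str:
    # A placeholder "model" that always answers "4".
    return "4"


accuracy = run_benchmark(Benchmark(toy_model, rows, exact_match))
print(accuracy)  # 0.5
```

Keeping the model and metric as plain callables makes it easy to swap in a different model or scoring function without touching the loop.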

Prompt construction follows best practices for each dataset, usually those set out in the paper that introduced the dataset and in common industry practice. In most cases, each prompt contains several examples of complete questions and answers, or “shots,” to prime the model for the task. The evaluation pipelines create shots by sampling questions and answers from a portion of the data that is held out from evaluation.
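A rough sketch of few-shot prompt construction follows. It assumes a simple `question`/`answer` row schema, a "Question: ... Answer:" template, and that shots are sampled afresh for each evaluated example from the held-out pool; the helper names (`split_shots`, `format_example`, `build_prompt`) are hypothetical, and real templates differ from dataset to dataset.

```python
import random


def split_shots(rows: list[dict], n_shot_pool: int, seed: int = 0):
    """Hold out a pool of examples to draw shots from; the rest is evaluated."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_shot_pool], shuffled[n_shot_pool:]


def format_example(row: dict, include_answer: bool = True) -> str:
    # Assumed template; each dataset's paper defines its own format.
    text = f"Question: {row['question']}\nAnswer:"
    if include_answer:
        text += f" {row['answer']}"
    return text


def build_prompt(row: dict, shot_pool: list[dict], k: int, rng: random.Random) -> str:
    """Prepend k solved examples ("shots") to the unanswered question."""
    shots = rng.sample(shot_pool, k)
    parts = [format_example(shot) for shot in shots]
    parts.append(format_example(row, include_answer=False))
    return "\n\n".join(parts)


rows = [{"question": f"What is {i} + {i}?", "answer": str(2 * i)} for i in range(10)]
shot_pool, eval_rows = split_shots(rows, n_shot_pool=4)
prompt = build_prompt(eval_rows[0], shot_pool, k=2, rng=random.Random(1))
print(prompt)
```

Because shots come only from the held-out pool, no evaluated question ever appears with its answer inside a prompt.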

What scores do we calculate?

In the model benchmarks experience, we calculate accuracy scores, which are presented at both the dataset (or task) level and the model level. At the dataset level, the score is the average value of an accuracy metric computed over all examples in the dataset. The accuracy metric is exact match in all cases except the HumanEval dataset, which uses pass@1. Exact match compares the model-generated text with the correct answer from the dataset, reporting one if the generated text matches the answer exactly and zero otherwise. Pass@1 measures the proportion of model solutions that pass a set of unit tests in a code generation task. At the model level, the accuracy score is the average of the model's dataset-level accuracies.
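The scoring and aggregation steps can be illustrated as follows. This sketch assumes one generated sample per problem for pass@1 and represents each generated solution as an already-compiled Python callable with (arguments, expected result) test pairs; all names and data are hypothetical. A real code-generation harness would execute untrusted model output in a sandbox rather than in-process.

```python
from statistics import mean
from typing import Callable


def exact_match(generated: str, reference: str) -> float:
    """1.0 if the generated text matches the reference exactly, else 0.0."""
    return 1.0 if generated == reference else 0.0


def passes_unit_tests(solution: Callable, tests: list[tuple[tuple, object]]) -> bool:
    """True only if the solution returns the expected value on every test."""
    try:
        return all(solution(*args) == expected for args, expected in tests)
    except Exception:
        return False  # a crash counts as a failure


def dataset_accuracy(scores: list[float]) -> float:
    # Dataset level: average the per-example metric over all examples.
    return mean(scores)


def model_accuracy(dataset_scores: dict[str, float]) -> float:
    # Model level: average the dataset-level accuracies.
    return mean(list(dataset_scores.values()))


# Exact match on a toy QA dataset: three of four answers match exactly.
qa_scores = [
    exact_match("Paris", "Paris"),
    exact_match("paris", "Paris"),  # case differs, so this scores 0
    exact_match("4", "4"),
    exact_match("5", "5"),
]

# pass@1 with one generated solution per problem.
problems = [
    (lambda a, b: a + b, [((1, 2), 3), ((0, 0), 0)]),  # correct
    (lambda a, b: a - b, [((1, 2), 3)]),               # wrong answer
    (lambda x: 1 / x, [((0,), 0)]),                    # raises an error
    (lambda s: s[::-1], [(("abc",), "cba")]),          # correct
]
code_scores = [float(passes_unit_tests(f, tests)) for f, tests in problems]

per_dataset = {
    "toy_qa": dataset_accuracy(qa_scores),      # 0.75
    "toy_code": dataset_accuracy(code_scores),  # 0.5
}
print(per_dataset, model_accuracy(per_dataset))  # overall 0.625
```

Note that the model-level score is an unweighted average of dataset-level scores, so a small dataset counts as much as a large one.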

Get Started Today

Model Benchmarks in Azure AI Studio documentation

Introduction to Azure AI Studio (learn module)

Sign up for Private Preview access to the latest features in AzureML: aka.ms/azureMLinsiders
