Introducing NVIDIA Nemotron-3 8B LLMs on the Model Catalog

We are excited to announce that we are expanding our partnership with NVIDIA to bring the best of NVIDIA AI software to Azure. This includes a new family of large language models (LLMs) called NVIDIA Nemotron-3 8B, the Triton TensorRT-LLM server for inference, and the NeMo framework for training. The NVIDIA Nemotron-3 8B family of models joins a growing list of LLMs in the AI Studio model catalog. AI Studio enables Generative AI developers to build LLM applications by offering access to hundreds of models in the model catalog and a comprehensive set of tools for prompt engineering, fine-tuning, evaluation, retrieval-augmented generation (RAG), and more.

The NVIDIA Nemotron-3 8B family includes a pre-trained model, multiple variants of the chat model, and a question-answering model, all built on NVIDIA NeMo. NeMo is a framework to build, customize, and deploy generative AI models; it offers built-in parallelism across GPUs for distributed training and supports RLHF, p-tuning, prompt learning, and more for customizing models. Models trained with NeMo can be served with Triton Inference Server using the TensorRT-LLM backend, which can generate GPU-specific optimizations to achieve a multi-fold increase in inference performance.

“Our partnership with NVIDIA represents a significant enhancement to Azure AI, particularly with the addition of the Nemotron-3 8B models to our model catalog,” said John Montgomery, Corporate Vice President, Azure AI Platform at Microsoft. “This integration not only expands our range of models but also assures our enterprise customers of immediate access to cutting-edge generative AI solutions that are ready for production environments.”

NVIDIA Nemotron-3 8B Models

Nemotron-3-8B Base Model: This is a foundational model with 8 billion parameters. It enables customization, including parameter-efficient fine-tuning and continuous pre-training for domain-adapted LLMs.

Nemotron-3-8B Chat Models: These models target LLM-powered chatbot interactions. Designed for global enterprises, they are proficient in 53 languages and trained on 37 programming languages. There are three chat model versions:

- Nemotron-3-8B-Chat-SFT: A building block for instruction-tuning custom models or applying user-defined alignment, such as RLHF or SteerLM.

- Nemotron-3-8B-Chat-RLHF: Built from the SFT model; it achieves the highest MT-Bench score within the 8B category for chat quality.

- Nemotron-3-8B-Chat-SteerLM: Offers flexibility for customizing and training LLMs at inference time, allowing users to define attributes on the fly.

Nemotron-3-8B-Question-and-Answer (QA) Model: A question-and-answer model that has been fine-tuned on a large amount of data focused on that use case.

The Nemotron-3 8B models are curated by Microsoft in the ‘nvidia-ai’ Azure Machine Learning (AzureML) registry and show up in the model catalog under the NVIDIA Collection [Fig 1]. Explore the model card to learn more about the model architecture, use cases, and limitations.

Fig 1. Discover Nemotron-3 models in Azure AI Model catalog

High performance inference with Managed Online Endpoints in Azure AI and Azure ML Studio

The model catalog in AI Studio makes it easy to discover and deploy the Nemotron-3 8B models along with the Triton TensorRT-LLM inference containers on Azure.

Filter the list of models by the newly added NVIDIA collection and select one of the models. You can review the model card and code samples, then deploy the model to Online Endpoints using the deployment wizard. The Triton TensorRT-LLM container is curated as an AzureML environment in the ‘nvidia-ai’ registry and passed as the default environment in the deployment flow.

Once the deployment is complete, you can use the Triton Client library to score the models.
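As a rough illustration of what a scoring request can look like, the sketch below builds the JSON form of an inference request as defined by the KServe v2 inference protocol, which Triton's HTTP endpoint implements. It uses only the Python standard library rather than the Triton Client library itself, and it does not send anything. The tensor names `text_input`, `max_tokens`, and `text_output`, the `INT32` datatype, and the model name `ensemble` are assumptions for illustration; check them against the configuration of the model you actually deployed.

```python
import json
from typing import Any, Dict


def build_infer_request(prompt: str, max_tokens: int = 64) -> Dict[str, Any]:
    """Build a KServe v2 style JSON inference request for a text prompt.

    Tensor names and datatypes here are assumed, not taken from the model card.
    """
    return {
        "inputs": [
            # String tensors use the BYTES datatype in the v2 protocol.
            {"name": "text_input", "shape": [1, 1], "datatype": "BYTES", "data": [prompt]},
            {"name": "max_tokens", "shape": [1, 1], "datatype": "INT32", "data": [max_tokens]},
        ],
        "outputs": [{"name": "text_output"}],
    }


def infer_path(model_name: str) -> str:
    """Return the v2 protocol inference path for a given model name."""
    return f"/v2/models/{model_name}/infer"


if __name__ == "__main__":
    # "ensemble" is a hypothetical model name used only for this example.
    body = json.dumps(build_infer_request("What is Azure AI Studio?"), indent=2)
    print(infer_path("ensemble"))
    print(body)
```

To score a live deployment, this body would be sent as an HTTP POST to the endpoint's scoring URL with the endpoint's authentication header; both values come from the deployment itself and are not shown here.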

Fig 2. Deploy Nemotron-3 models in Azure AI Model Catalog

Prompt-tune Nemotron-3 Base Model in AzureML Studio

You can tune the parameters of the Nemotron-3-8B-Base-4k model to perform well on a specific domain using prompt-tuning (P-tuning). This is supported for the text-generation task and curated for our users as an AzureML component. There are two ways to perform P-tuning on the Nemotron-3 base model in AzureML Studio today: a code-first approach that uses the notebook samples in the model card, and a no-code approach that uses the drag-and-drop experience in AzureML pipelines.

The NeMo P-tuning components are available in the ‘nvidia-ai’ registry. You can build your custom P-tuning pipelines as shown in Fig 3, configure input parameters such as learning rate and max_steps in the P-tuning component, and submit the pipeline job, which outputs the P-tuned model weights.
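To make the role of parameters such as learning rate and max_steps more concrete, here is a toy sketch of the general idea behind P-tuning: a small set of continuous "virtual token" vectors is trained while the base model weights stay frozen. This is not the NeMo component's code. The frozen model is a stand-in (a fixed weight vector applied to the mean input vector), all names are hypothetical, and the prompt vectors are optimized directly, whereas P-tuning implementations typically produce them with a small prompt-encoder network.

```python
from typing import List

Vector = List[float]

# Stand-in for the frozen base model; these weights are never updated.
FROZEN_WEIGHTS: Vector = [0.5, -0.25, 1.0]


def forward(sequence: List[Vector]) -> float:
    """Toy frozen model: dot product of the weights with the mean input vector."""
    dim = len(FROZEN_WEIGHTS)
    mean = [sum(vec[i] for vec in sequence) / len(sequence) for i in range(dim)]
    return sum(w * m for w, m in zip(FROZEN_WEIGHTS, mean))


def train_virtual_prompt(
    token_embeddings: List[Vector],
    target: float,
    num_virtual_tokens: int = 2,
    learning_rate: float = 0.5,
    max_steps: int = 50,
) -> List[Vector]:
    """Learn virtual-token vectors that steer the frozen model toward `target`."""
    virtual_prompt = [[0.0] * len(FROZEN_WEIGHTS) for _ in range(num_virtual_tokens)]
    seq_len = num_virtual_tokens + len(token_embeddings)
    for _ in range(max_steps):
        # The virtual tokens are prepended to the ordinary token embeddings.
        error = forward(virtual_prompt + token_embeddings) - target
        # Gradient of the squared error with respect to each virtual vector.
        grad = [2.0 * error * w / seq_len for w in FROZEN_WEIGHTS]
        virtual_prompt = [
            [v - learning_rate * g for v, g in zip(vec, grad)]
            for vec in virtual_prompt
        ]
    return virtual_prompt


if __name__ == "__main__":
    tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    prompt = train_virtual_prompt(tokens, target=1.0)
    print("output with learned prompt:", forward(prompt + tokens))
```

Only the virtual prompt vectors change during training, which is why the output of such a job is a small set of P-tuned weights rather than a full copy of the model.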

Fig 3. Author custom pipelines to P-tune Nemotron-3-8B-Base-4k using AzureML Designer

Fig 4. Sample P-tuning and Evaluation pipeline for Text-Generation task

Evaluate Nemotron-3 Base Model

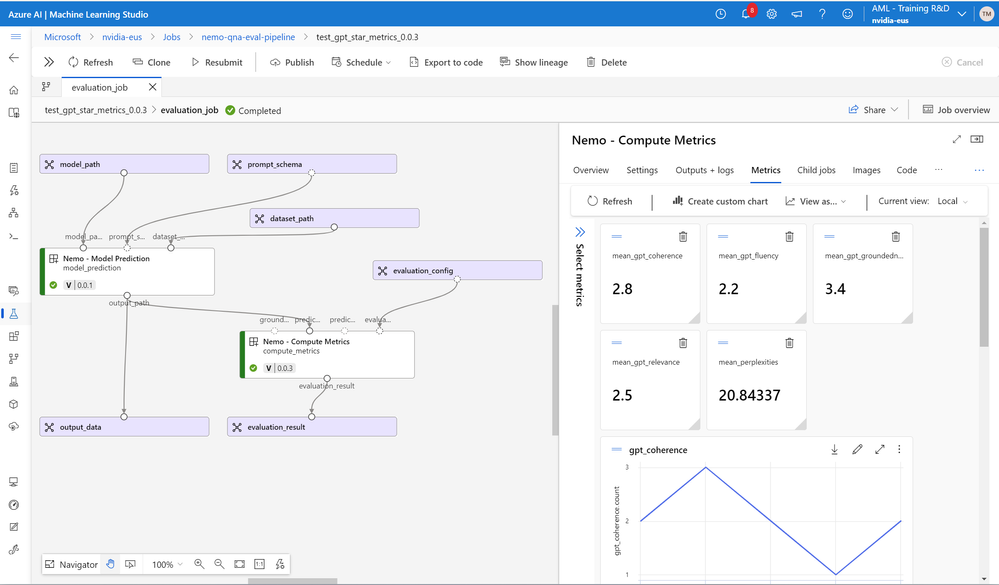

The Nemotron-3-8B-Base-4k model can be evaluated on a set of tasks such as text generation, text classification, translation, and question answering. AzureML offers curated evaluation pipelines that run batch inference on the test data to generate predictions, then use those predictions to compute task-based performance metrics such as perplexity and GPT evaluation metrics (Coherence, Fluency, Groundedness, and Relevance).
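Perplexity, one of the metrics named above, is conventionally defined as the exponential of the average negative log-likelihood per token. The short Python sketch below shows that formula only; the function name and the input format (one natural-log probability per token) are assumptions for illustration and do not reflect the interface of the AzureML evaluation component.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Return exp of the mean negative natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("at least one token log-probability is required")
    mean_negative_log_likelihood = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_negative_log_likelihood)


if __name__ == "__main__":
    # A model that assigns probability 0.25 to every token has perplexity
    # of approximately 4, i.e. it is as uncertain as a 4-way uniform choice.
    print(perplexity([math.log(0.25)] * 4))
```

Lower values indicate that the model assigns higher probability to the reference text.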

The curated components for evaluation are available in the 'nvidia-ai' registry for users to consume while authoring pipelines in AzureML Designer, with an experience similar to Fig 3. The sample notebooks for evaluation are available on the model card.

Fig 5. Out-of-the-box evaluation component for QA task

Note about the license

Users are responsible for complying with the terms of the NVIDIA AI Product Agreement when using Nemotron-3 models.

Conclusion

“We are excited to team with Microsoft to bring NVIDIA AI Enterprise, NeMo, and our Nemotron-3 8B to Azure AI,” said Manuvir Das, VP, Enterprise Computing, NVIDIA. “This gives enterprise developers the easiest path to train and tune LLMs and deploy them at scale on Azure cloud.”

We want Azure AI to be the best platform for Generative AI developers. The integration with NVIDIA AI Enterprise software advances our goal to integrate with the best models and tools for Generative AI development and enables you to build your own delightful LLM applications on Azure.
