An Enterprise Design for Azure Machine Learning - An Architect's Viewpoint

1. Problem Statement

Many organisations want to create an enterprise data science capability. The goals can include unlocking value from their data assets, reducing cost, and driving customer engagement. While many organisations have an initial capability, challenges can arise while "scaling and industrializing" that capability into an enterprise service. This Point-of-View (PoV) provides an opinionated design for a "fit for purpose" enterprise data science platform, delivered with Azure Machine Learning. It covers infrastructure, identity, data and functionality, aligned to an MLOps delivery framework.

2. Use Case Definition

The following use case is used to help define the scope of this PoV design.

This design:

- Is for a fictitious mid-level enterprise, wanting to mature its data science function. The key goal is to stand up an enterprise-ready platform that can support 20 different projects, executing data science work packages.

  - The initial work package is a basic statistical model for consumption by internal resources.

- Is for a solution that MUST deliver the following capabilities:

  - Support of an MLOps function

  - Enablement of innovation work

- Has the following key Non Functional Requirements (NFRs) in order of importance:

1. Security

2. Cost Optimisation

3. System Governance

4. Supportability

Azure Machine Learning (AML) was selected due to the firm’s existing Microsoft footprint, skills and capabilities, the desire to lower the toil/risk from integration and interoperability, and the ease of scaling delivery when utilizing Microsoft tooling.

3. The Key Design Decisions (KDD)

Decision - Private endpoints (where available) are used to secure all egress/ingress of data.

Rationale - Securing the service is the highest priority NFR. Private endpoints (PE) provide the highest level of security for data transfer.

Impact

- Solution endpoints are only available to defined Azure services. They are non-addressable by anything else.

- Increased solution Operating Expense (OPEX).

- Increased initial configuration work for routing setup.

Implications

- Endpoints that do not offer PE compatibility need to be either blocked, or otherwise hardened.

- New services, for example preview or beta versions, may be rejected based upon their lack of PE support (or similar controls).

Considerations - Unsecured endpoints with Application native controls – discounted based upon security NFRs.

Decision - Removing the Network jump boxes from the design.

Rationale - The Network jump boxes are excluded from the design, based upon cost and the acceptable security baseline already provided.

Impact - Decreased solution OPEX and complexity.

Implications - Users interact with the platform either via the portal, IDEs or command prompt/CLI.

Considerations - Implement Azure Bastion – discounted due to cost.

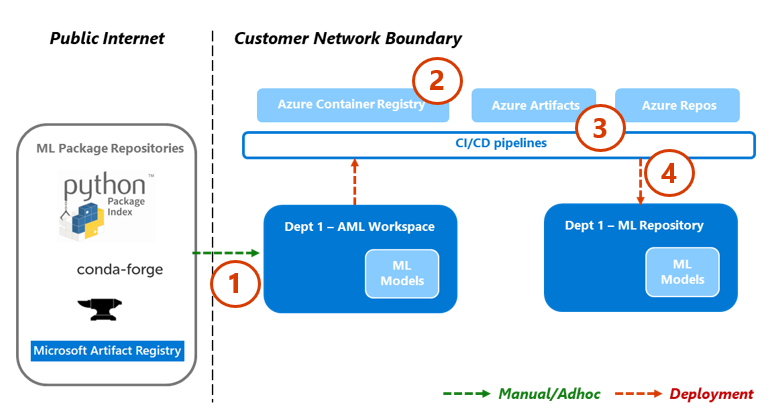

Decision

The following ML repositories are safe listed for the Platform, enabling data scientists to self-serve ML packages and libraries:

- PyPI (The Python Package Index)

- conda-forge

- Microsoft Artifact Registry

Outside of this, access to the public internet from the platform is blocked.

Rationale

- This decision balances the requirement for specialized ML packages and libraries as a prerequisite to delivering value, while protecting the platform from the risks of open-source codebases.

- Elements of AML, such as environment builds, require specific ML packages which by default access these repositories. Blocking this access, yet still enabling these processes, incurs significant technical debt and ongoing toil to maintain.

Impact

- The Platform network denies any access to the public internet, aside from the safe listed sites.

- An exception process is required for ML packages/libraries/binaries that are not available in the safe listed repositories.

- A full security scanning process is required for all developed containerised models on upload, pre-deployment, with error trapping and full audit trails.

Implications

- The Platform network acts as a security control, limiting the blast radius from any package issue.

- This design acts as an attractive force for standardisation, encouraging the use of the languages supported by these safe listed repositories, i.e., Python.

- Project Leads have an extended Responsible, Accountable, Consulted, and Informed (RACI), covering package management and usage within their workspace.

Considerations

- Fully Private Repository Approach – discounted due to the complexity and toil introduced from seeding and maintaining the repository, the bespoke configuration updates required, and the lag introduced into the MLOps processes.

- Complete self-serve model with open public internet access – discounted due to security risks introduced.

- Web Application Firewall (WAF) based package inspection – discounted due to OPEX cost, ML language gaps and configuration complexity.
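As an illustration of the safe listing decision above, the following minimal sketch shows the kind of egress check the outbound rules would need to encode. The hostnames are assumptions chosen for illustration (the design names the repositories, not their endpoints), and a real deployment would express this as firewall or managed network outbound rules rather than Python.

```python
from urllib.parse import urlparse

# Hypothetical hostnames for the three safe listed repositories.
# These are illustrative assumptions; confirm the actual endpoints
# (including any CDN hosts) before building network rules.
SAFE_LISTED_HOSTS = {
    "pypi.org",
    "files.pythonhosted.org",
    "conda.anaconda.org",
    "mcr.microsoft.com",
}


def is_egress_allowed(url: str) -> bool:
    """Return True only when the URL targets a safe listed host over HTTPS."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    # Exact host match, so look-alike domains such as
    # "pypi.org.example.com" are denied.
    return parsed.scheme == "https" and host in SAFE_LISTED_HOSTS


if __name__ == "__main__":
    for candidate in (
        "https://pypi.org/simple/numpy/",
        "https://example.com/some-package.whl",
    ):
        verdict = "allow" if is_egress_allowed(candidate) else "deny"
        print(f"{verdict}: {candidate}")
```

Anything that falls outside the set is denied by default, which is where the exception process described under Impact would take over.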

Decision - Aligning the platform design with an organisation's data classification under business ownership.

Rationale - A key benefit of an enterprise approach is to drive reuse and economies of scale, while reducing complexity and duplication. Appropriate data access control is the main challenge to this approach. Aligning the platform design with data classification should provide the most balanced approach.

Impact

- The Platform has multiple implementations of AML and related components.

- Some levels of data duplication across implementations.

- Enterprise and department based AML implementations host multiple projects and will therefore be long-standing implementations.

- Project resources have access to other projects’ models/codebases within the same implementation.

Implications - Business data owner accepts the uplifted RACI of AML implementation decision making.

Considerations

- Single enterprise implementation using AML project segregation – discounted based upon the lack of fine grain data access controls.

- Each Project/model gets a separate AML implementation – discounted based upon complexity and cost.

Callout - If AML security is uplifted to provide fine grain data, metadata, and configuration controls, this decision should be reviewed.

Decision - In-built Azure Role Based Access Controls (RBAC) roles should be used. Custom roles should only be used on an exception basis, and proactively managed out.

Rationale - Custom RBAC roles require the customer to adopt a Product Owner role. The toil and cost across the full lifecycle doesn’t provide enough Return On Investment (ROI) to justify the commitment.

Impact

- Identity and Access Management (IAM) design is simplified.

- Operational overhead, expense, and risk are reduced.

Implications - Automation/compute processes may be introduced to provide a further layer of abstraction and control.

Considerations - Custom Roles – discounted, due to the toil and cost across the full lifecycle.

Callout - Microsoft is committed to consistently improving the security posture of its services and products.
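To make the "exception basis" rule concrete, here is a minimal sketch of an audit that flags role assignments relying on custom roles. The `RoleAssignment` structure, the sample principals, and the custom role name are hypothetical; the `role_type` values are assumed to mirror the built-in versus custom distinction that Azure role definitions expose, and no Azure API is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleAssignment:
    principal: str
    role_name: str
    role_type: str  # assumed values: "BuiltInRole" or "CustomRole"


def find_custom_role_assignments(assignments):
    """Return the assignments that use a custom role and so need an exception."""
    return [a for a in assignments if a.role_type == "CustomRole"]


if __name__ == "__main__":
    # Hypothetical export of role assignments for one AML implementation.
    sample = [
        RoleAssignment("ds-team-a", "AzureML Data Scientist", "BuiltInRole"),
        RoleAssignment("stakeholders-a", "Reader", "BuiltInRole"),
        RoleAssignment("legacy-batch", "ml-notebook-runner", "CustomRole"),
    ]
    for assignment in find_custom_role_assignments(sample):
        print(f"exception required: {assignment.principal} -> {assignment.role_name}")
```

A report like this could feed the process that proactively manages custom roles out.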

Decision - The design balances security against cost and use case need.

Rationale - To provide an acceptable ROI, this platform must support a data science capability at reasonable cost.

Impact - While the platform delivers an enterprise security baseline, component and control configuration beyond this level will be balanced against cost, functionality and ways of working impact.

Implications - Premium services that could provide the strongest security posture, such as confidential computing, may be discounted based upon cost.

Callout - Every customer is urged to review this KDD within their own specific constraints, and use case context.

Decision - This platform design aligns with a "Zero Trust" security model.

Rationale - "Zero Trust" offers the highest level of security protection, as it removes implicit trust from services, components or people when interacting with the platform. Securing the service is a top priority NFR.

Impact - All elements of the platform are secured using identities within a consistent RBAC framework, governed via central policies.

Implications - No implicit trust is granted to any interactions behind the secure network perimeter. All interactions and connectivity are enabled via explicit RBAC and policies.

Considerations - Secure Network only – discounted, as "Zero Trust" is additive to a secure network.

Callout - This approach aligns with Microsoft guidance for secure design.

Decision - The Platform uses Microsoft managed keys.

Rationale - Customer Managed Keys (CMK) introduce toil, cost, and risk into the management and administration of the platform, while only delivering a marginal uplift in security (depending on the maturity of the management process within the customer).

Impact - Acceptance of the Microsoft process and standards for managing keys, certificates, and secrets.

Considerations - CMK – discounted due to the toil, cost and risk from this process.

Callout - For projects using the highest classification of data, CMK may be considered for the highest level of security. But this project implementation would be treated as an exception under this design.

Decision - The preference for component selection is (in order):

1. Azure native.

2. Azure first party.

3. Available via the Azure marketplace.

4. Other.

Rationale - This service selection preference provides the strongest support for platform integration, interoperability, and a consistent security baseline.

Impact - All Azure native services and components are preferred.

Implications - Components and services may be discounted based upon their Azure status.

Callout - Azure products and services evolve quickly, with feature hardening and uplift driven by customer feedback. Meaningful gaps are often quickly addressed.
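The selection preference above is effectively a sort order. A minimal sketch, assuming hypothetical category labels and candidate names (neither comes from the design itself):

```python
# Category labels are illustrative stand-ins for the four preference tiers.
PREFERENCE_ORDER = [
    "azure-native",
    "azure-first-party",
    "azure-marketplace",
    "other",
]


def rank_candidates(candidates):
    """Order candidate components by the design's selection preference.

    sorted() is stable, so candidates in the same tier keep their input order.
    """
    return sorted(candidates, key=lambda c: PREFERENCE_ORDER.index(c["category"]))


if __name__ == "__main__":
    # Hypothetical shortlist for a single capability.
    shortlist = [
        {"name": "vendor-tool", "category": "other"},
        {"name": "marketplace-offer", "category": "azure-marketplace"},
        {"name": "native-service", "category": "azure-native"},
    ]
    for candidate in rank_candidates(shortlist):
        print(candidate["category"], candidate["name"])
```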

Decision - There is a strong preference for PaaS service selection.

Rationale - This decision reflects a cloud native design, reducing toil and risk from non-value add activities.

Impact

- PaaS services are selected above others.

- Security design shifts from network and boundary controls to identity.

Implications

- Above the line OPEX costs may appear higher than normal.

- Customers must accept the reduction in control, flexibility, and custom extension to services.

Callout - True Total-Cost-of-Ownership (TCO) often contains a large factor of "hidden costs", due to management and administration tasks carried out by a customer's resource pool. PaaS reduces this, at a cost.

Decision - Where appropriate, solutions reuse the AI services provided by Microsoft, rather than build bespoke/custom AI services.

Rationale - Microsoft is better placed to support the full Software Development Lifecycle (SDLC) of these services, reducing toil and risk for non-value add activities, along with lifecycle TCO.

Impact

- The Platform network design must support the API interoperability required by Microsoft’s AI services.

- Individual solutions need API interoperability.

- Uplift in the support RACI for Data Science leads across the evolution of AI services, particularly for the currently used suite.

Implications - Individual solutions could have one-to-many Microsoft AI services as subcomponents of that solution.

Considerations - Bring-Your-Own/Custom solutions – discounted due to the RACI of the product ownership role across the full lifecycle.

Decision - The Staging and QA test environments remain standing, as a shared asset across the platform. To reduce cost, these environments should be stripped back, removing all model elements when not being used in a testing cycle.

Rationale - This approach enables greater reuse and speed-to-market, and reduces complexity for setup and service interoperability.

Impact - There must be an automated process to deploy, test, and strip back individual models, while leaving the testing workspaces and shared elements in place.

Implications

- Only compute processes have access to these test environments.

- An automated test harness is required for Staging and QA.

Considerations

- Environments torn down post use – discounted based upon the orchestration and implementation complexity introduced.

- Each Project or model gets a separate Testing environment – discounted based upon cost.
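The automated deploy, test, and strip back cycle described under Impact can be sketched as below. The `deploy`, `run_tests`, and `strip_back` callables are hypothetical placeholders for whatever the CI/CD tooling provides; the point of the sketch is only that strip back runs regardless of the test outcome.

```python
def run_test_cycle(model_name, deploy, run_tests, strip_back):
    """Deploy a model into the standing test workspace, test it, then strip back.

    The workspace and shared elements are assumed to stay in place; only the
    model elements are removed.
    """
    try:
        deploy(model_name)
        return run_tests(model_name)
    finally:
        # Runs whether deployment or testing succeeds, fails, or raises, so no
        # model elements are left consuming cost between testing cycles.
        strip_back(model_name)


if __name__ == "__main__":
    events = []
    passed = run_test_cycle(
        "example-model",
        deploy=lambda m: events.append(f"deploy:{m}"),
        run_tests=lambda m: events.append(f"test:{m}") or True,
        strip_back=lambda m: events.append(f"strip:{m}"),
    )
    print(passed, events)
```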

Decision - Development is able to access production models, configuration, metadata and model data.

Rationale - Production data and code are a required input into the uplift and enhancement of an existing model.

Impact

- This access is temporary/elevated access to specific production assets controlled under an RBAC design.

- Copies of production data will be created in the development data store.

Implications

- The Platform requires a "fit for purpose" data/asset catalog that is available to data scientists, providing transparency of current models and their performance.

- Data scientists must have access to logging and monitoring details of productionised models to inform iteration cycles.

Considerations

- Open Development access - discounted due to security risks.

- Development in Production – discounted due to the requirements of changing or updating elements, along with ensuring compute and traffic segregation.

Callout - Development activities often require the creation of test data, boundary use cases, skewing of values, etc. Synthetic test data can materially impact the quality/consistency of a model's outputs.

Decision - The Staging and QA test environments are implemented in production, using production assets.

Rationale - A “fit for purpose” testing baseline, especially for model iteration, requires current production datasets.

Impact

- Testing components have access to production data.

- Reduction in toil for testing phases, greater accuracy in outcomes.

Implications - RBAC & Identity implementation that clearly separates testing and productionised components.

Considerations

- Segregated Testing Environments – discounted based upon requirement for production data and the implementation cost and complexity introduced.

- Each Project/model gets a separate Testing implementation – discounted based upon cost.

Callout - Synthetic test data can materially impact the quality/consistency of a model's outputs.

Decision - Where there is reuse and value, tasks should be automated.

Rationale - Automation increases service reliability, scale, compliance and security, and reduces cost and risk, while enabling a greater focus on value-add tasks.

Impact - Automation tooling covering the SDLC is required to support the platform.

Implications - Resource capacity should be allocated to the maintenance and uplift of automation across the platform.

Considerations - Automate everything, upfront – discounted due to lag and lack of direct business value delivered from this work.

Decision - This platform design implements an MLOps framework to support the full SDLC of ML models.

Rationale - An MLOps framework creates more efficient, less costly workflows and increases scalability, collaboration, and model output quality, while reducing risk and errors.

Impact - MLOps specific tooling is required to support the platform.

Implications - Resource capacity should be allocated to the maintenance and uplift of MLOps capabilities and functions.

Considerations

- Implement DevOps - discounted due to specific requirements of ML lifecycle and the gaps such an approach would introduce.

- Implement in a later phase – discounted due to the technical debt created.

Decision - The Platform uses Zone-redundant storage for its data storage by default.

Rationale - This balances service resiliency with OPEX cost, and the low likelihood of a full regional outage.

Impact

- Data is replicated across each availability zone within the hosting region.

- The Platform service will be impacted by a full outage in the hosting region.

Implications - In the case of a full regional outage, the platform would be dependent upon Microsoft service recovery, as a prerequisite to its own service recovery.

Considerations

- Lower levels of redundancy – discounted due to the service resiliency requirements.

- Higher levels of redundancy – discounted due to cost and the low risk of region failure.

Callout - Microsoft hasn’t had a full regional outage (as at March 2024), although there are rare instances of key services going down, effectively taking down regions.

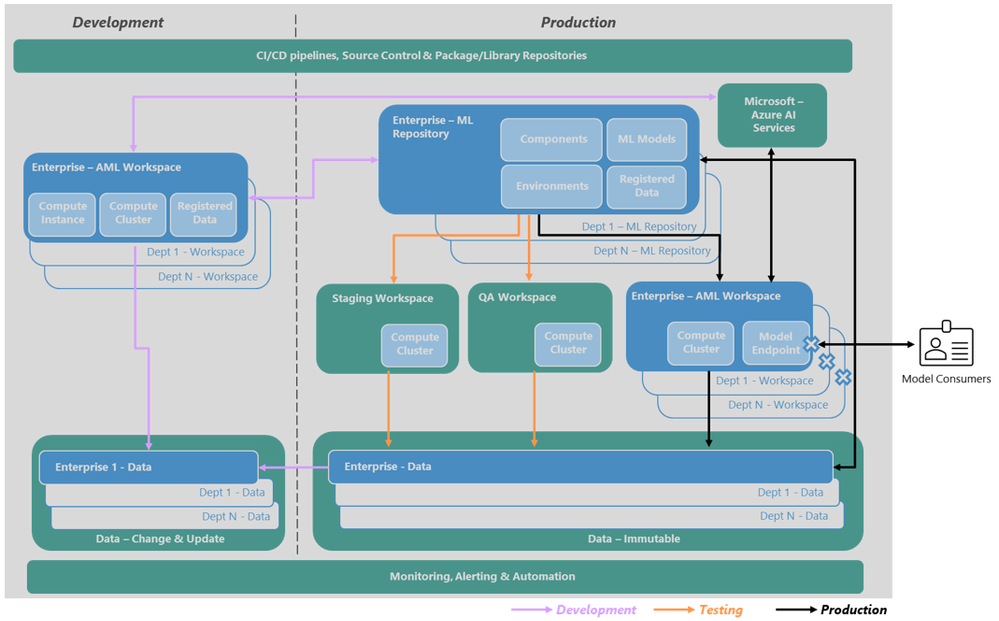

4. Design Overview

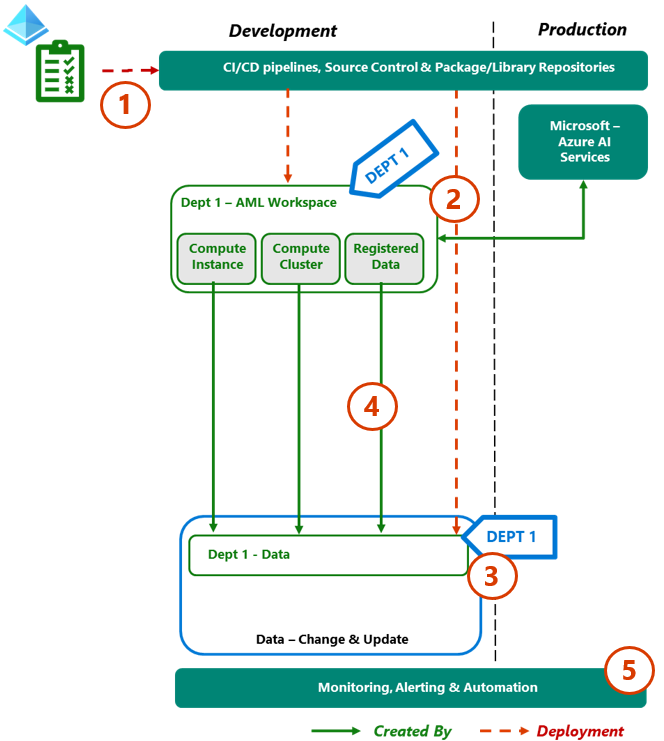

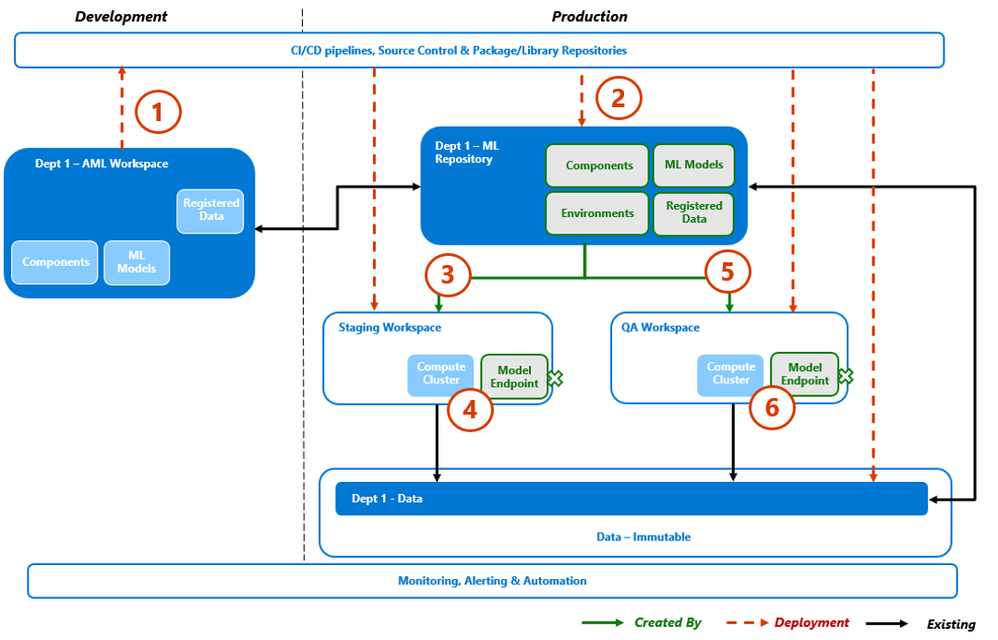

At a high level, the enterprise AML platform logical design can be illustrated as:

- This AML design covers the complete ML model lifecycle, enabled by an MLOps framework.

- The platform is split across two environments, reflecting data access security requirements while enabling the iteration of existing productionized models.

- The design contains:

  - Shared assets/components which all workspaces use, irrespective of data classification scope, that is, monitoring, deployment, testing workspaces, etc.

  - Foundation elements, which represent the enterprise implementation, intending to support many ML projects that have a data classification of general or lower.

  - Dept/Project Assets, which are either departments or individual solutions, reflecting the in-scope data classification, separated from the enterprise implementation.

- Microsoft’s cloud-based AI services and APIs are available as a shared enterprise service. This approach simplifies the process of adding AI features and reduces cost and risk across the full product lifecycle compared with custom solutions.

- Both Enterprise and department implementations support one-to-many individual projects, therefore having a lifecycle outside of the individual projects. This design requires an uplifted RACI with nominated business owners to manage/administer each implementation, ensuring tasks like project/data clean up, etc. are appropriately executed.

- For audit and iteration purposes, production generated data is immutable/append only. To enable development activities like test data creation, edge cases, etc. the development workspaces are able to create & update data as required.

Building on top of the Foundational elements, the following ML specific components would be added:

ML Services:

- Azure Machine Learning (AML): Central hub for the machine learning experiments, datasets, and models, supporting the full ML model lifecycle.

- Azure ML Registry: organization-wide repository of machine learning assets such as models, environments, and components.

- Azure AI Services: out-of-the-box and customizable ML APIs, and models. These include services to support natural language processing for conversations, search, monitoring, translation, speech, vision, and decision-making.

- AI Services includes the Cognitive Services suite of ML services.

Supporting Services:

- Azure Container Registry: store container images for ML model deployment.

- Azure Key Vault: store and manage encryption keys and secrets securely.

- Azure Storage Account: storing raw data, test/training datasets, etc. as required by the MLOps processes.

- Application Insights: an extension of Azure Monitor which provides targeted application performance monitoring.

Important:

Some Foundational elements would be extended to support the ML components, i.e. Azure Policies would be extended to cover ML component specific governance, ML components would be configured to ship logs to Azure Monitor – Log Analytics workspaces etc.

4.3. Design Assumptions and Constraints

- Each data source in scope has a clear Business owner who accepts the uplifted RACI of AML workspace decision maker.

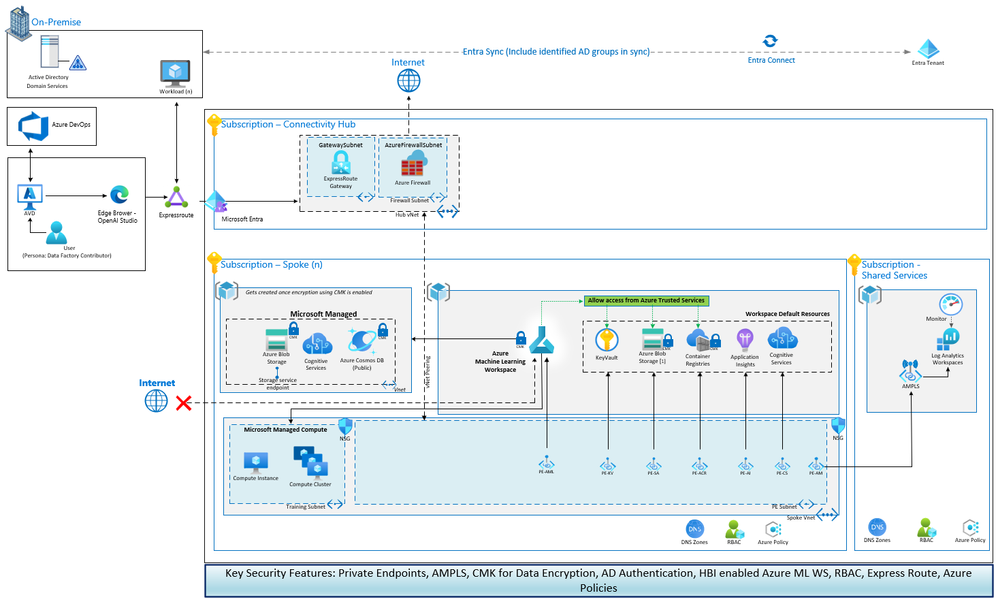

- The existing Azure enterprise platform contains enterprise shared components that this design can reuse. This includes ExpressRoute for on-prem connectivity, Microsoft Entra ID for access and authentication, Sentinel for SIEM and SOAR, Defender for threat protection, etc.

- There's a "fit for purpose" Data governance application and process in-place, providing transparency, classification, and structure to data available across the eco-system.

- There's a "fit for purpose" CI/CD automation application, such as Azure DevOps, and infrastructure monitoring application, such as Azure Monitor, in-place.

- The Data Scientists need access to a deployed model's full lineage to enable the future uplift iterations.

- The three safe listed ML package repositories contain ~80% of all required ML packages, libraries, and binaries needed across an enterprise ML capability. Therefore, the other 20% can be sourced using an exception process without introducing unacceptable lag, toil, or expense.

- Azure Machine Learning has been selected as the ML application.

- This design is generalized and doesn’t reflect any specific regulatory standard or ML model use case. Customers should consider their own requirements and context when considering this PoV.

The following anti-patterns should be avoided when applying this design:

- "Copy & Paste" of this design without considering individual context or requirements.

- Set & Forget implementations – Cloud services must be proactively monitored and managed.

- Not proactively managing cost.

- Not covering the full MLOps Lifecycle, including retirement of models.

- Business stakeholders who don’t understand or accept the Operating Model RACI.

- Embedding ML Project Leads without the time/priority to complete the additional tasks under the Operating Model RACI, that is, the break and fix support for the production model.

- Implementation of custom elements without understanding the full lifecycle and responsibilities of the "Product Ownership" role.

5. Networking

Azure Machine Learning (AML) provides network security features, such as virtual network integration, network isolation, and private endpoints. These measures help secure communication channels and limit access to ML resources within a trusted network environment.

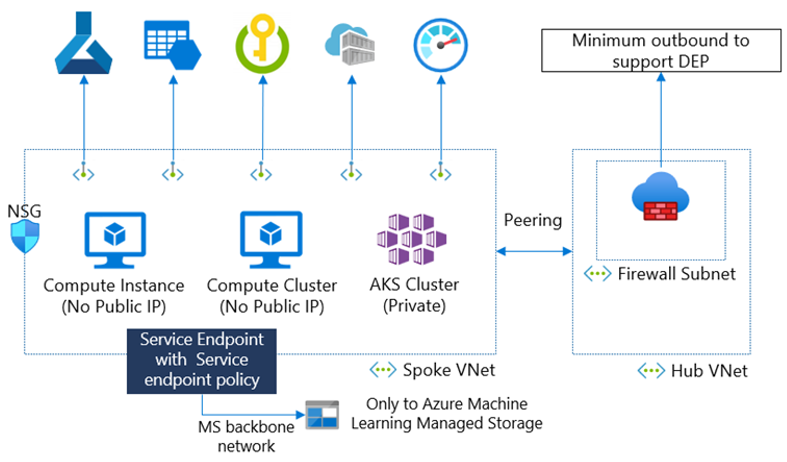

At a high level, this design can be illustrated as:

The key features of this network design are:

- Private and Isolated Services with Virtual Network (VNet) Integration: VNet Integration of an AML workspace is required to bring the workspace onto the Azure Backbone. VNet Injection can be implemented via Private link and Private endpoints that secure AML resources and restrict all access within a private network. VNet integration is also used to isolate AML resources and control all inbound/outbound traffic.

- An individual AML workspace uses multiple resources, such as Key Vault, Storage accounts, etc. This implementation requires the extension of the private endpoint configuration, securing all dependent resources to ensure a complete, unified secure design.

- Fine Grain Control via Network Security Groups (NSGs): NSGs are firewall-like constructs that define and enforce inbound and outbound network traffic rules at the subnet or network interface level. NSGs provide granular control over network traffic, enabling you to permit or deny specific protocols, ports, and IP addresses.

- Uplifted Boundary Protection via Distributed Denial of Service (DDoS) Protection: Enabling Azure DDoS Protection Standard safeguards against DDoS attacks.

- Private Connectivity with Express Route (ER): ExpressRoute provides a private and dedicated network connection between your on-premises network and Azure ML and other resources in Azure. It improves data transfer performance, enhances security, and ensures compliance with data privacy regulations.

The complete network architecture can be illustrated as:

Important:

ML-assisted data labeling doesn't support default storage accounts that are secured behind a virtual network. You must use a non-default storage account for ML-assisted data labeling. The non-default storage account can be secured behind the virtual network.

5.1. ML Package Approach

The Problem Statement:

- Data scientists often require large numbers of highly specialized packages, libraries or binaries as “building blocks” for ML solutions.

- Many of these packages are community developed, iterate with fast-paced development cycles, and require "Subject Matter Expert" (SME) knowledge to understand and use.

- Traditional approaches to software management for this requirement often result in expensive, toil-filled processes, which act as a bottleneck on the delivery of value.

Context:

There are industry standard package repositories, typically aligned to a programming language, that serve the ML community for most requirements.

Approach:

Safelist three industry standard ML package repositories, allowing self-serve from individual AML workspaces. Then, use an automated testing process during the deployment to scan the resulting solution containers. Failures would elegantly exit the deployment process and remove the container.

Process Flow

- Data scientists working within a specific AML workspace with network configuration applied, can self-serve ML packages on-demand from the safe listed repositories.

- An exception process is required for everything else, using the Private Storage pattern, seeded/maintained via a centralized function.

- AML delivers ML solutions as Docker containers. As these solutions are developed, they are uploaded to the Azure Container Registry (ACR). Defender for Containers would be used for the vulnerability scanning process.

- Solution deployment occurs via a CI/CD process. Defender for DevOps is used across the stack to provide security posture management and threat protection.

- Only if the solution container passes each of the security processes will it be deployed. Failure will result in the deployment elegantly exiting with error notifications, full audit trails and the solution container being discarded.
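The gating logic in the process flow above can be sketched as follows. This is an illustration under stated assumptions: the check names, the `deploy` and `discard` callables, and the `GateResult` structure are hypothetical stand-ins for the results that Defender for Containers and Defender for DevOps would supply, and no Defender API is called.

```python
from dataclasses import dataclass, field


@dataclass
class GateResult:
    deployed: bool
    audit_trail: list = field(default_factory=list)


def deployment_gate(image, checks, deploy, discard):
    """Run each security check in order and deploy only if every check passes.

    On the first failure the container is discarded and the gate exits with an
    audit trail, rather than raising part-way through the pipeline.
    """
    trail = []
    for name, check in checks:
        passed = check(image)
        trail.append(f"{name}: {'pass' if passed else 'fail'}")
        if not passed:
            discard(image)
            trail.append(f"discarded {image}")
            return GateResult(False, trail)
    deploy(image)
    trail.append(f"deployed {image}")
    return GateResult(True, trail)


if __name__ == "__main__":
    # Hypothetical checks: the second one fails, so nothing is deployed.
    result = deployment_gate(
        "example-solution:1",
        checks=[
            ("vulnerability-scan", lambda image: True),
            ("posture-check", lambda image: False),
        ],
        deploy=lambda image: None,
        discard=lambda image: None,
    )
    print(result.deployed)
    print("\n".join(result.audit_trail))
```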

5.2. MVP Monitoring

The suggested MVP monitoring for this design is:

Description - Data drift tracks changes in the distribution of a model's input data by comparing it to the model's training data or recent past production data.

Environment - Production.

Implementation - AML – Model Monitoring.

Notes - Data drift refactoring requires recent production datasets and outputs, to be available for comparison.
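To illustrate what comparing an input distribution against a baseline involves, here is a minimal sketch using the Population Stability Index (PSI). PSI is a swapped-in, commonly used drift statistic chosen for illustration; it is not claimed to be the metric AML Model Monitoring computes. The bin count and the smoothing value `eps` are assumptions.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Compare two samples of one numeric feature; 0 means identical bin shares."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        total = len(values)
        # eps avoids log(0) for empty bins.
        return [max(c / total, eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


if __name__ == "__main__":
    training = [float(v) for v in range(100)]
    shifted = [v + 50.0 for v in training]
    print(population_stability_index(training, training))
    print(population_stability_index(training, shifted))
```

Any alerting threshold on a statistic like this would need calibrating against the recent production datasets that the note above calls for.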

Description - Several model serving endpoint metrics to indicate quality and performance.

Environment - All.

Implementation - Azure Monitor AML metrics.

Notes - This table has the supporting information to identify the AML workspace, deployment etc.

Description - Prediction drift tracks changes in the distribution of a model's prediction outputs by comparing it to validation or test labeled data or recent past production data.

Environment - Production.

Implementation - Azure Monitor AML metrics.

Notes - Prediction drift refactoring requires recent production datasets and outputs, to be available for comparison.

Description - Count of the Client Requests to the model endpoint.

Environment - Production.

Implementation;

- Machine Learning Services - OnlineEndpoints.

- Count of RequestsPerMinute.

Notes;

- Acceptable thresholds could be aligned to t-shirt sizing or anomalies (acknowledging the need to establish a baseline).

- When a model is no longer being used, it should be retired from production.

Description - Throttling Delays in request and response in data transfer.

Environment - Production.

Implementation;

- [AMLOnlineEndpointTrafficLog](/azure/machine-learning/monitor-resource-reference?view=azureml-api-2#amlonlineendpointtrafficlog-table-preview).

- Sum of RequestThrottlingDelayMs.

- ResponseThrottlingDelayMs.

Notes - Acceptable thresholds should be aligned to the service's "Service Level Agreement" (SLA) and the solution's non-functional requirements (NFRs).

Description - Response Code - Errors generated.

Environment - Production.

Implementation;

- [AMLOnlineEndpointTrafficLog](/azure/machine-learning/monitor-resource-reference?view=azureml-api-2#amlonlineendpointtrafficlog-table-preview).

- Count of XRequestId by ModelStatusCode.

- Count of XRequestId by ModelStatusCode & ModelStatusReason.

Notes - All HTTP response codes in the 400 & 500 range would be classified as an error.
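The error classification rule above can be sketched as follows. The `ModelStatusCode` and `ModelStatusReason` field names come from the implementation notes; the sample records are hypothetical stand-ins for rows exported from the traffic log.

```python
from collections import Counter


def is_error(status_code: int) -> bool:
    """Treat every HTTP response code in the 400 and 500 range as an error."""
    return 400 <= status_code <= 599


def error_counts(records):
    """Count error responses grouped by (status code, status reason)."""
    return Counter(
        (r["ModelStatusCode"], r.get("ModelStatusReason", ""))
        for r in records
        if is_error(r["ModelStatusCode"])
    )


if __name__ == "__main__":
    # Hypothetical rows; real rows would come from the endpoint traffic log.
    rows = [
        {"ModelStatusCode": 200, "ModelStatusReason": "OK"},
        {"ModelStatusCode": 429, "ModelStatusReason": "Too Many Requests"},
        {"ModelStatusCode": 500, "ModelStatusReason": "Internal Server Error"},
        {"ModelStatusCode": 429, "ModelStatusReason": "Too Many Requests"},
    ]
    for (code, reason), count in error_counts(rows).items():
        print(code, reason, count)
```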

Description - When monthly Operating Expenses (OPEX), based on usage or cost, reach or exceed a predefined amount.

Environment - All.

Implementation - Azure – Budget Alerts.

Notes;

- Budget thresholds should be set based upon the initial NFRs and cost estimates.

- Multiple threshold tiers should be used, ensuring stakeholders get appropriate warning before the budget is exceeded.

- Consistent budget alerts could also be a trigger for refactoring to support greater demand.
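The multiple threshold tiers described above can be sketched as below. The tier fractions (50%, 80%, 100%) are illustrative assumptions rather than recommended values, and in practice the evaluation would be configured in Azure budget alerts rather than run as code.

```python
def breached_tiers(spend, budget, tiers=(0.5, 0.8, 1.0)):
    """Return the threshold tiers (as fractions of budget) that spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    return [t for t in sorted(tiers) if spend >= t * budget]


if __name__ == "__main__":
    # Hypothetical month-to-date spend against a monthly budget.
    for tier in breached_tiers(spend=850, budget=1000):
        print(f"alert: {tier:.0%} of budget reached")
```

Lower tiers give stakeholders warning before the budget is exceeded, which is the intent of the second note.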

Description - When an AML workspace no longer appears to have active use.

Environment - Development.

Implementation;

- Azure Monitor AML metrics;

- Machine Learning Services - Workspaces - count of Active Cores over a period.

Notes;

- Active Cores should equal zero with aggregation of count.

- Date thresholds should be aligned to the project schedule.
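The idle workspace check can be sketched as below. The input is assumed to be a hypothetical mapping of day to Active Cores count, exported from the metric named above; days with no data point are treated as zero, which is itself an assumption to validate against how the metric reports.

```python
from datetime import date, timedelta


def is_workspace_idle(daily_active_cores, as_of, idle_days):
    """True when no active cores were recorded in the trailing window.

    The window covers `idle_days` days ending on (and including) `as_of`.
    """
    day = as_of - timedelta(days=idle_days - 1)
    while day <= as_of:
        # Missing days are treated as zero active cores (assumption).
        if daily_active_cores.get(day, 0) > 0:
            return False
        day += timedelta(days=1)
    return True


if __name__ == "__main__":
    usage = {date(2024, 3, 1): 4}  # hypothetical: last activity on 1 March
    print(is_workspace_idle(usage, as_of=date(2024, 3, 31), idle_days=14))
    print(is_workspace_idle(usage, as_of=date(2024, 3, 31), idle_days=31))
```

The `idle_days` value is where the project schedule alignment mentioned in the notes would be applied.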

Description - Ensuring the appropriate security controls and baseline are implemented and not deviated from.

Environment - All.

Implementation;

- Azure – Policies.

- Including the “Audit usage of custom RBAC roles”.

Notes;

- The full listing of available in-built policies is available for AML.

- Other components/services used in this design should also have their specific in-built policies reviewed and implemented where appropriate.

Description - Ensuring the appropriate standards and guardrails are adhered to.

Environment - Azure & CI/CD.

Implementation;

- Azure – DevOps Pipelines.

- PSRule for Azure.

- Enterprise Policy As Code (EPAC) (azure.github.io).

Notes;

- PSRule provides a testing framework for Azure Infrastructure as Code (IaC).

- EPAC can be used in CI/CD based systems to deploy Policies, Policy Sets, Assignments, Policy Exemptions and Role Assignments.

- Microsoft guidance is available in the Azure guidance for AML regulatory compliance.

Description - Automated security scanning is executed as part of the automated integration and deployment processes.

Environment - CI/CD.

Implementation - Azure – Defender For DevOps.

Notes - This process can be extended with 3rd party security testing modules from the Azure marketplace.

Description - Targeted security monitoring of any AML endpoint.

Environment - All.

Implementation - Azure – Defender For APIs.

Description - A development model appearing to provide a regular service that should be productionised.

Environment - Development.

Implementation;

- Azure Monitor AML metrics.

- AMLOnlineEndpointTrafficLog - count of XMSClientRequestId over a month.

Notes - Date thresholds should be aligned to the project schedule.

Important:

Several of the implementations are in Preview (as at March 2024). Please refer to the Preview Terms Of Use for greater detail.

6. Security

6.1. SDLC Access Patterns

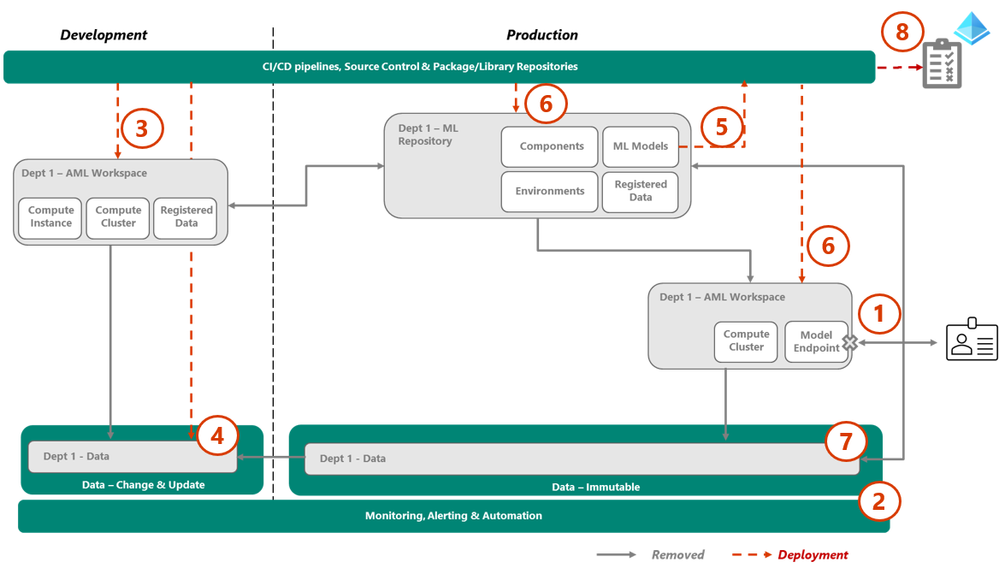

The Access patterns required to support the full Software Development Lifecycle (SDLC) can be illustrated as:

- All platform access patterns reflect a Zero Trust design approach, utilizing Role-Based Access Controls (RBAC).

- System/compute access to components/services is based upon identity, with Managed identities being preferred due to the strong security posture.

- Individuals access to components/services and data are based upon their Microsoft Entra ID identity, and its Azure – RBAC group membership.

- The design will use Azure defined RBAC roles over custom roles due to the reduced operating toil and risk of keeping custom roles up-to-date. - Access patterns can be aligned to the SDLC phase;

- Development - the access required to support the analysis, development, and initial testing of ML models. The access required by a new implementation is a subset of the access requirement for the iteration of an existing solution.

- Testing - temporary access required to support the deployment and Quality Assurance (QA) model testing as a prerequisite to production release.

- Production – the access required by a productionized ML model, released via an AML endpoint to downstream consumers.

- Azure AI Services APIs are available to development processes as part of the enterprise shared service. Once the model is productionized, a workspace specific implementation of that API is used.

- Depending on the individual solution's non functional requirements (NFRs), serving a model via an AML endpoint may not be appropriate. Other services, such as App Service or Azure Kubernetes Service (AKS), could be considered, but these requirements are out of scope for this PoV.

6.2. Identity RBAC – Personas

This design considers the following personas to inform the identity-based RBAC group design:

Description - The people doing the various ML and data science activities across the SDLC for a project. This role's responsibilities include break and fix activities for the ML models, packages, and data, which sit outside of platform support expertise.

Type - Person.

Project Specific - Yes.

Notes - Involves data exploration and preprocessing to model training, evaluation, and deployment, to solve complex business problems and generate insight.

Description - The people doing the data analyst tasks required as an input to data science activities.

Type - Person.

Project Specific - Yes.

Notes - This role involves working with data, performing analysis, and supporting model development and deployment activities.

Description - The compute process used in Staging & QA testing.

Type - Process.

Project Specific - Yes.

Notes - This role provides functional segregation from the CI/CD processes.

Description - Business stakeholders attached to the project.

Type - Person.

Project Specific - Yes.

Notes - This role is read-only for the AML workspace components in development.

Description - The Data Science lead in a project administration role for the AML workspace.

Type - Person.

Project Specific - Yes.

Notes - This role would also have break/fix responsibility for the ML models and packages used.

Description - The Business stakeholders responsible for the AML workspace based upon data ownership.

Type - Person.

Project Specific - Yes.

Notes - This role is read-only for the AML workspace configuration and components in development. Production coverage will be provided by the data governance application.

Description - The Technical support staff responsible for break/fix activities across the platform. This role would cover infrastructure, services, etc., but not the ML models, packages or data. These elements remain under the Data Scientist/ML Engineer role's responsibility.

Type - Person.

Project Specific - No.

Notes - While the role group is permanent, membership is only transient, based upon a Privileged Identity Management (PIM) process for time boxed, evaluated access.

Description - The End consumers of the ML Model. This role could be a downstream process or an individual.

Type - Person and Process.

Project Specific - Yes.

Description - The compute processes that release or roll back changes across the platform environments.

Type - Process.

Project Specific - No.

Description - The managed identities used by an AML workspace to interact with other parts of Azure.

Type - Process.

Project Specific - No.

Notes - This persona represents the various services that make up an AML implementation and interact with other parts of the platform, such as the development workspace connecting with the development data store.

Description - The compute processes which monitor & alert based upon platform activities.

Type - Process.

Project Specific - No.

Description - The compute process that scans the ML project and datastores for data governance.

Type - Process.

Project Specific - No.
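
The platform support persona above keeps a permanent role group whose membership is transient and time boxed. The sketch below models that idea in plain Python: an activation is valid only between its approval time and the end of its agreed duration. It does not call Privileged Identity Management or any Azure API; the class, field, and member names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Activation:
    """A time-boxed grant of membership in a permanent role group (illustrative)."""
    member: str
    approved_at: datetime
    duration: timedelta

    def is_active(self, at: datetime) -> bool:
        # Membership holds from approval until the time box expires.
        return self.approved_at <= at < self.approved_at + self.duration


def active_members(activations, at):
    """Return the members whose time-boxed access is valid at the given moment."""
    return sorted({a.member for a in activations if a.is_active(at)})


# Hypothetical activations for a platform support group.
grants = [
    Activation("support-engineer-1", datetime(2024, 3, 1, 9, 0), timedelta(hours=4)),
    Activation("support-engineer-2", datetime(2024, 3, 1, 12, 0), timedelta(hours=2)),
]
print(active_members(grants, datetime(2024, 3, 1, 12, 30)))
print(active_members(grants, datetime(2024, 3, 1, 15, 0)))
```

The group itself never disappears in this model; only the set of currently valid activations changes over time.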

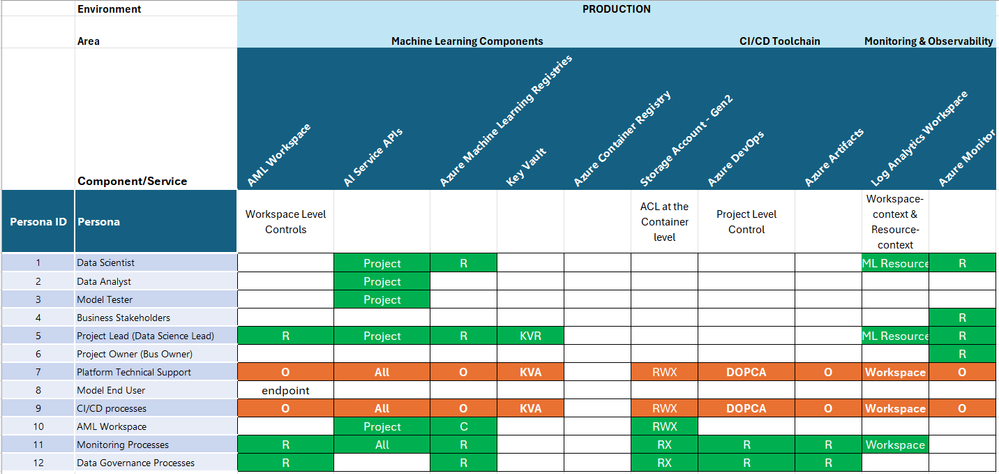

6.3. Identity RBAC – Control Plane

The Control plane is used to manage the resource-level objects within a subscription.

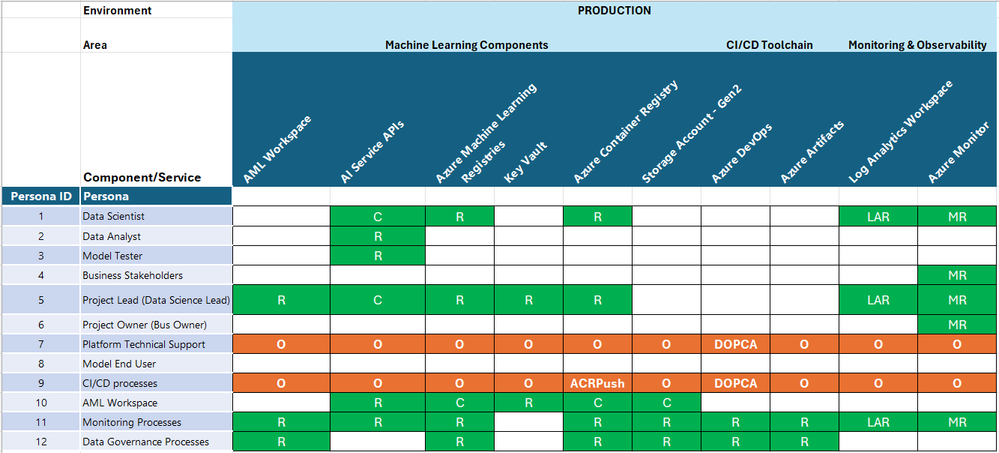

The persona-based identity RBAC design for the control plane for each environment can be described as:

Production:

Cell Colour = Access Period Granted. Green = Life of Project, Orange = Temporary, Just-in-time.

Development:

Cell Colour = Access Period Granted. Green = Life of Project, Orange = Temporary, Just-in-time.

Key:

Standard Roles

R = Reader.

C = Contributor.

O = Owner.

Component Specific Roles

ADS = Azure Machine Learning Data Scientist.

ACO = Azure Machine Learning Compute Operator.

ARU = Azure Machine Learning Registry User.

ACRPush = Azure Container Registry Push.

DOPA = DevOps Project Administrators.

DOPCA = DevOps Project Collection Administrators.

LAR = Log Analytics Reader.

LAC = Log Analytics Contributor.

MR = Monitoring Reader.

MC = Monitoring Contributor.
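
When the access matrix is turned into automation, the key above can be held as a simple lookup. The sketch below is one possible shape, assuming matrix cells are written as comma-separated abbreviations such as "R, ADS"; that cell format and the function name are assumptions, while the abbreviations and role names are taken from the key.

```python
# Abbreviations and role names as listed in the key for the control plane matrix.
ROLE_KEY = {
    "R": "Reader",
    "C": "Contributor",
    "O": "Owner",
    "ADS": "Azure Machine Learning Data Scientist",
    "ACO": "Azure Machine Learning Compute Operator",
    "ARU": "Azure Machine Learning Registry User",
    "ACRPush": "Azure Container Registry Push",
    "DOPA": "DevOps Project Administrators",
    "DOPCA": "DevOps Project Collection Administrators",
    "LAR": "Log Analytics Reader",
    "LAC": "Log Analytics Contributor",
    "MR": "Monitoring Reader",
    "MC": "Monitoring Contributor",
}


def expand_roles(cell: str) -> list[str]:
    """Expand a matrix cell such as 'R, ADS' into full role names."""
    names = []
    for abbr in cell.split(","):
        abbr = abbr.strip()
        if abbr:
            # An unknown abbreviation raises KeyError, surfacing typos early.
            names.append(ROLE_KEY[abbr])
    return names


print(expand_roles("R, ADS"))
```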

Important:

Once a model has been productionized using one or more Azure AI Services APIs, service specific built-in roles should be implemented into that project.

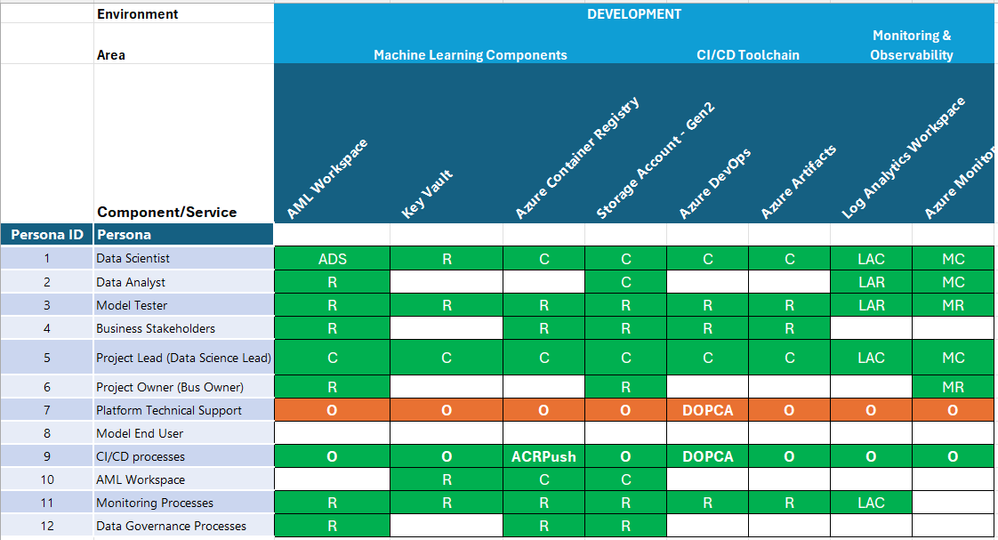

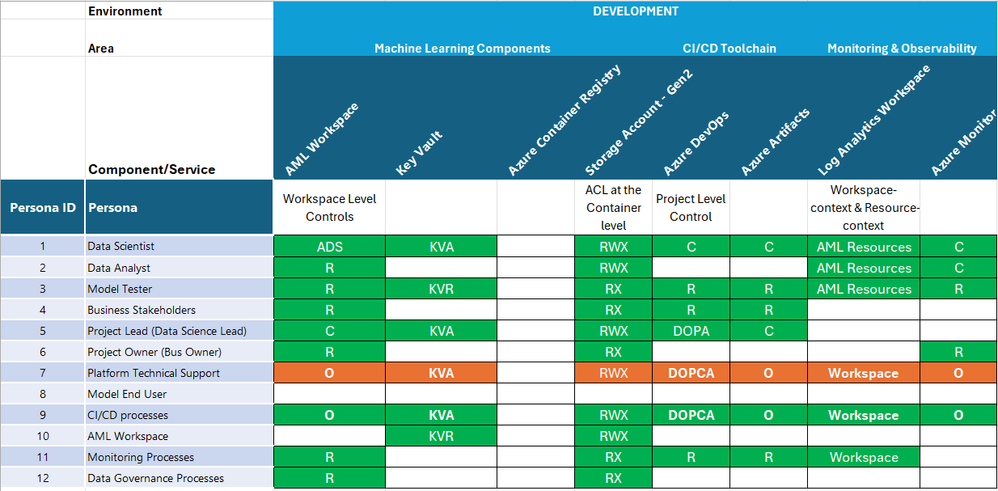

6.4. Identity RBAC – Data/Model Plane

The Data plane is used to manage the capabilities exposed by a resource.

The persona-based identity RBAC design for the data plane for each environment can be described as:

Production:

Cell Colour = Access Period Granted. Green = Life of Project, Orange = Temporary, Just-in-time.

Development:

Cell Colour = Access Period Granted. Green = Life of Project, Orange = Temporary, Just-in-time.

Key:

Standard Roles

R = Reader.

C = Contributor.

O = Owner.

Component Specific Roles

ADS = Azure Machine Learning Data Scientist.

ACO = Azure Machine Learning Compute Operator.

ARU = Azure Machine Learning Registry User.

ACRPush = Azure Container Registry Push.

DOPA = DevOps Project Administrators.

DOPCA = DevOps Project Collection Administrators.

KVA = Key Vault Administrator.

Important:

- Data plane controls are additive to the Control plane, i.e. they build on top of them.

- The Data plane controls vary depending on the specific AI Service selected. It is recommended to take the most restrictive scope, matched with the most appropriate built-in role available for the role/task requirements.
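
The two points above can be sketched in a few lines: data plane roles are combined with control plane roles rather than replacing them, and an assignment is placed at the narrowest scope that still covers the target resource. The persona name, role abbreviations in the example, and resource IDs are placeholders, and the helper functions are illustrative rather than part of any Azure SDK.

```python
def effective_roles(control_plane: dict, data_plane: dict, persona: str) -> set:
    """Data plane roles are additive: the result is the union of both planes."""
    return set(control_plane.get(persona, ())) | set(data_plane.get(persona, ()))


def covers(scope: str, resource_id: str) -> bool:
    """True when the scope is the resource itself or one of its ancestors."""
    s = scope.rstrip("/").lower()
    r = resource_id.rstrip("/").lower()
    return r == s or r.startswith(s + "/")


def narrowest_scope(scopes, resource_id):
    """Pick the most restrictive candidate scope that still covers the resource."""
    candidates = [s for s in scopes if covers(s, resource_id)]
    if not candidates:
        raise ValueError("no candidate scope covers the resource")
    # A deeper path (more segments) means a narrower scope.
    return max(candidates, key=lambda s: len(s.rstrip("/").split("/")))


# Placeholder identifiers for illustration only.
SUB = "/subscriptions/0000"
RG = SUB + "/resourceGroups/rg-dev"
STORE = RG + "/providers/Microsoft.Storage/storageAccounts/stdev"

print(effective_roles({"data_scientist": {"R"}}, {"data_scientist": {"ADS"}}, "data_scientist"))
print(narrowest_scope([SUB, RG], STORE))
```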

7. SDLC Flow

This section describes the full Software Development Lifecycle (SDLC) for a departmental ML model.

- A Departmental ML model development describes the most detailed work-through of the process. An Enterprise process is a simplified version of this process.

7.1. Step 1 – Create Development

For a new development, the first step is to create the development environment with the various AML components required and develop the initial version of the ML model.

Prerequisites

- Assess the in-scope data, validating the reuse of the Enterprise or an existing Department implementation.

- Confirm the Project Lead and Business owner roles, and validate understanding/acceptance of the Responsible, Accountable, Consulted, and Informed (RACI).

Process Flow

- Create the Department related development Entra ID groups as described in the identity RBAC section.

- From deployment templates, create the AML workspace and data storage components in the development environment, linking together with compute identities.

- This deployment includes linking to the production instance of the enterprise shared Microsoft AI services API suite.

- Tagging of components/services is key to driving policies, monitoring, and cost attribution.

- From deployment templates, create the AML data store in the development environment.

- This approach enables the workspace users to create, alter, or update data as required to support the development process.

- Enable the Workspace compute managed identities to access the data storage.

- Update the Monitoring and Alerting rules for a new department workspace in the development environment, as per the MVP Monitoring section.

From this baseline, the project team is able to start ML development activities.
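
Because tagging drives policies, monitoring, and cost attribution, a deployment pipeline could check tags before the workspace is handed over. The sketch below is a minimal example of such a check; the required tag names and the resource records are assumptions chosen for illustration, not a prescribed tagging standard.

```python
# Hypothetical tag names; the real set would come from the organisation's tagging policy.
REQUIRED_TAGS = ("environment", "department", "cost_centre")


def missing_tags(resources, required=REQUIRED_TAGS):
    """Map each non-compliant resource name to the tags it lacks or leaves empty."""
    report = {}
    for res in resources:
        tags = res.get("tags") or {}
        absent = [t for t in required if not tags.get(t)]
        if absent:
            report[res["name"]] = absent
    return report


# Hypothetical deployment output for a new department development environment.
deployed = [
    {"name": "aml-dept-dev", "tags": {"environment": "dev", "department": "risk", "cost_centre": "cc-01"}},
    {"name": "st-dept-dev", "tags": {"environment": "dev"}},
    {"name": "kv-dept-dev"},
]
print(missing_tags(deployed))
```

A non-empty report could be used to fail the deployment step, so that untagged components never reach the project team.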

Important:

This worked example is for a new Dept setup, but the same process would apply for the initial Enterprise or individual project setups.

7.2. Step 2 – Push to testing

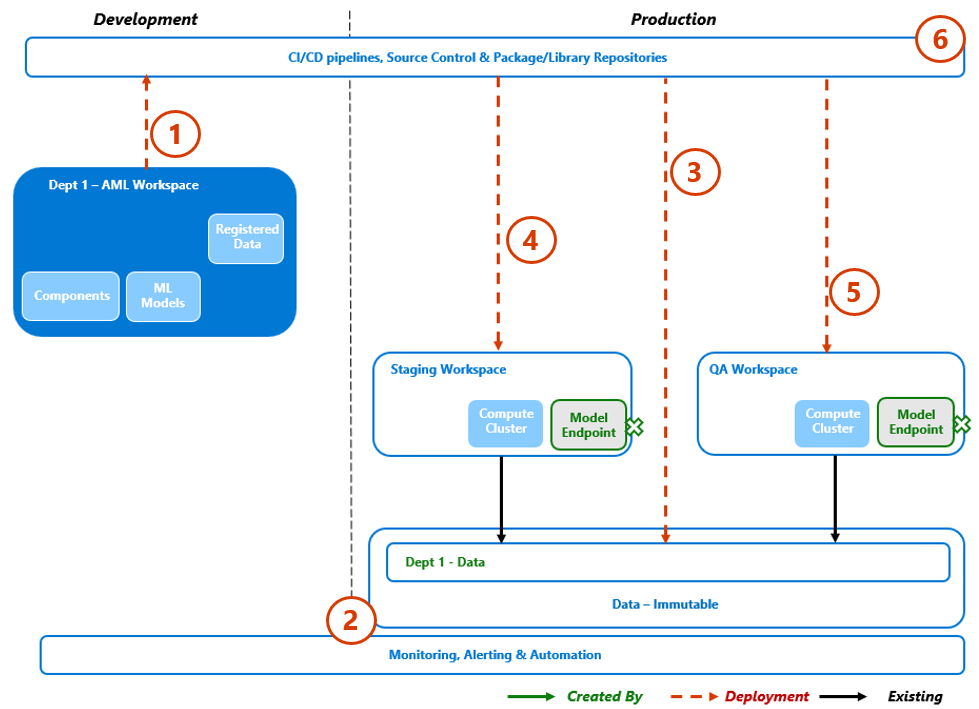

Once the model completes the initial testing phases, it should progress through Quality Assurance (QA) testing. If testing fails, this process would exit and alert the project team. The new model elements would be removed, any access enabled would be backed out, and the process logs made available for troubleshooting.

Process Flow

- Using the CI/CD framework, the model, configuration and metadata are "pulled" into the deployment pipeline.

- Update the Monitoring and Alerting rules for a new model entering the testing phase, as per the MVP Monitoring section.

- Model data (if necessary) is populated into the production data to support the testing tasks.

- The Shared Staging workspace is updated for the new model deployment and components are stood-up to complete model/manifest deployment testing.

- Once staging has completed, the Shared QA workspace is updated for the new model deployment. QA Components are stood-up to complete integration, performance & volume, and security testing, etc.

- This phase of testing will be extended over time as individual projects extend the QA testing harness.

- All audit & testing logs are made available back to the project team.
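
The staged flow above, together with its failure behaviour, can be sketched as a small orchestration function: stages run in order, and the first failure stops the run, cleans up what was deployed, and returns the logs to the project. The stage names and check functions are hypothetical stand-ins for real Staging and QA test suites, and this is not a CI/CD tool's API.

```python
def run_testing(stages, model):
    """Run stages in order; on the first failure, clean up and report back."""
    logs = []
    touched = []
    for name, check in stages:
        touched.append(name)
        passed = check(model)
        logs.append(f"{name}: {'passed' if passed else 'failed'}")
        if not passed:
            # Failure path: remove new model elements and back out access,
            # most recent workspace first, then alert the project.
            for workspace in reversed(touched):
                logs.append(f"{workspace}: model elements removed, access backed out")
            logs.append("alert: project team notified")
            return False, logs
    return True, logs


# Hypothetical checks: Staging passes, QA fails on a size limit.
stages = [
    ("staging", lambda m: "manifest" in m),
    ("qa", lambda m: m.get("size_mb", 0) <= 100),
]
ok, logs = run_testing(stages, {"manifest": "v1", "size_mb": 250})
print(ok)
for line in logs:
    print(line)
```

Returning the logs on both paths reflects the requirement that all audit and testing logs are made available back to the project team.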

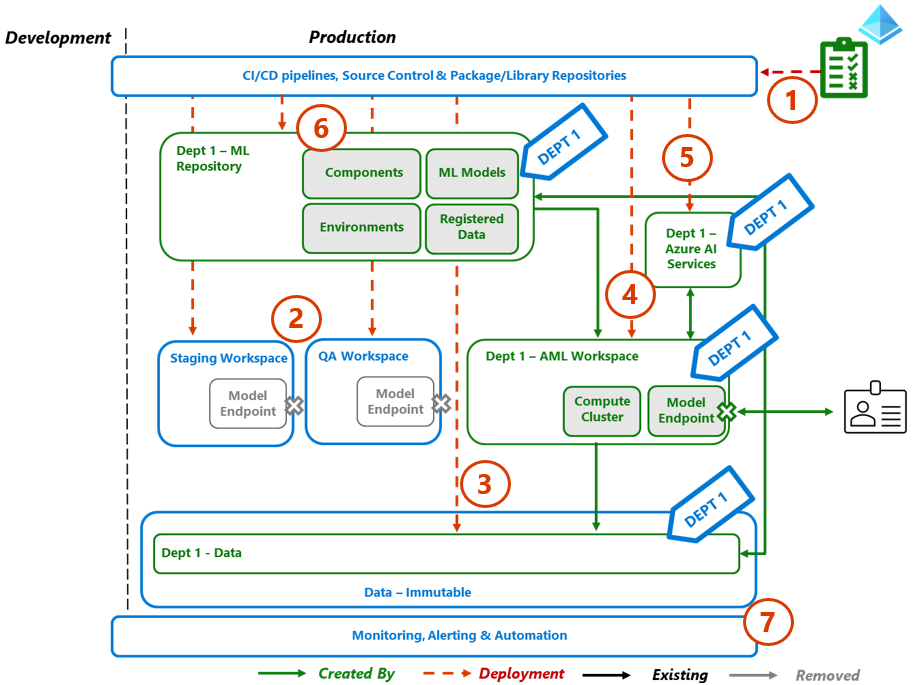

7.3. Step 3 – Release to Production

Once the model completes QA testing, it would be deployed into production. Process failure would trigger a support incident, in addition to the failure attributes as described for Step 2.

Process Flow

- Create the Department related production Entra ID groups as described in the identity RBAC section.

- Remove the model and data components from the Staging and QA workspaces.

- From deployment templates, create the department specific data store in the production immutable data store, tagged appropriately.

- From deployment templates, create the department specific AML production workspace, using its managed identities to connect to the data store.

- From deployment templates, link to the department specific Azure AI Services (for the subset of required services) via the AML production workspace's managed identity.

- From deployment templates, create the department ML repository in production, using its managed identity to connect to the AML workspace and the data store.

- Deploy the production workspace endpoint, enabling downstream processes or individuals to access/interact with the model.

- Depending upon the desired Service Level Agreement (SLA) or Non Functional Requirements (NFRs), other model serving methods may be appropriate, such as Web Apps or Azure Kubernetes Service (AKS). These options aren’t covered in this PoV.

- Update the Monitoring and Alerting rules for a new model entering production, as per the MVP Monitoring section.

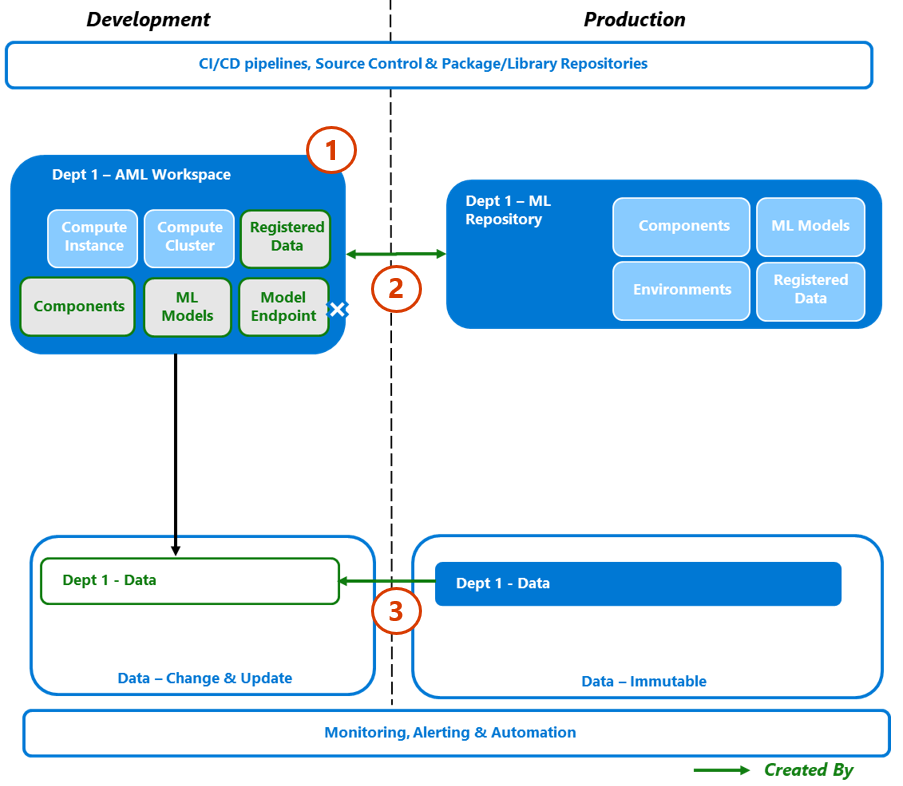

7.4. Step 4 – Iteration

A Productionized model requires iterative development to ensure it remains "fit for purpose", addressing either data or prediction drift. This iterative process is the next step in the model development lifecycle.

Prerequisites

- Signals from the production model process demonstrating a degradation in performance, output, cost, etc.
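
One common way to turn "data drift" into a signal is the Population Stability Index (PSI), which compares how a feature is distributed across bins at training time and in production. The source does not prescribe a drift metric, so PSI is used here purely as an example; the bin counts and the alert threshold are assumptions.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    score = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor each proportion at eps so empty bins do not cause log(0).
        e_pct = max(expected / expected_total, eps)
        a_pct = max(actual / actual_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


# Hypothetical bin counts for one feature: training baseline vs. recent production data.
baseline = [50, 50]
recent = [90, 10]
DRIFT_THRESHOLD = 0.25  # assumed alert level, to be tuned per model

psi = population_stability_index(baseline, recent)
print(round(psi, 3), psi > DRIFT_THRESHOLD)
```

A score above the chosen threshold would be one of the signals that starts the iteration process; cost and output quality signals would need their own checks.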

Process Flow

- Depending on timing, the development workspace may have been removed from the environment. If so, a new development deployment would be required following the Step 1 process.

- From the Production ML repository, copy across the current version model, configuration, metadata, logs, etc. providing the baseline to work from and inform the iteration refactoring activities.

- From the Immutable production data store, copy across the existing model’s datasets and register them for use in the deployment workspace.

- Depending on the scope/context of the work, this connection may be a one-off or required periodically to get the latest updates.

- The replication of data enables developers and testers to create or update data as required, without impacting the "golden record".

- Other non-ML datasets would be ingested at this stage, if needed.

From this baseline, the project team is able to start the refactoring activities.

7.5. Step 5 – Iteration Testing

As before, once the model iteration completes initial testing, it should progress through quality assurance testing. This process follows Step 2, with the addition of the ML Repository component.

Process Flow

- Using the CI/CD framework, the model, configuration and metadata are "pulled" into the deployment pipeline.

- The Department ML Repository is populated for the iterative deployment.

- The Enterprise Staging workspace is updated for the iterative deployment, with the ML repository compute managed identity given the access required.

- Staging Components are stood-up to complete model/manifest deployment testing.

- The Enterprise QA workspace is updated for the iterative deployment, with the ML repository compute managed identity given the access required.

- QA Components are stood-up to complete integration, Performance & volume, and security testing.

Once completed, the iterative model would be versioned and then released to production, following the process from Step 3.

7.6. Step 6 – Retirement

When a model has been replaced, deprecated, or no longer delivers business value, it should be removed from the environment. For completeness, the process of removing a department installation is described here. An individual Model retirement would be a subset of these steps.

Process Flow

- Using the CI/CD framework, shut down the model serving endpoint, effectively taking the model offline.

- This approach "smoke tests" the removal, uncovering any hidden dependent solutions or processes.

- Remove the Department/model specific monitoring and alerting rules, so that false positives aren’t raised during this process.

- Remove the Department development workspace and related identities.

- Remove the Department development data store and data.

- Upload copies of the model, configuration, and metadata to an archive, if necessary.

- Remove the Department ML repository, production workspace components and related identities.

- Remove the Department production data store. Data could also be transitioned to a cooler service tier, if long term retention is a requirement.

- Remove the Department specific Entra ID groups and identities.

9. Contributors

Principal authors:

- Scott Mckinnon | Cloud Solution Architect

- Nicholas Moore | Cloud Solution Architect

- Darren Turchiarelli | Cloud Solution Architect

Other contributors:

- Leo Kozhushnik | Cloud Solution Architect
