Apache Spark on HDInsight on AKS - Submit Spark Job

Introduction

HDInsight on AKS is built on a containerized architecture. It brings enhancements for the Spark workload and adds two new open-source workloads, Trino and Flink. HDInsight on AKS delivers managed infrastructure, security, and monitoring so that teams can spend their time building applications without needing to worry about the other components of their stack.

This blog lists the options for submitting Spark jobs to Spark on HDInsight on AKS. There are three ways to submit Spark jobs to an HDInsight on AKS cluster:

- Interactive way using Jupyter and Zeppelin

- spark-submit from SSH nodes

- Livy API (Application Programming Interfaces)

Interactive Spark Job Using Jupyter and Zeppelin

Jupyter and Zeppelin notebooks are part of the Spark cluster on HDInsight on AKS. Notebooks let you interact with your data, combine code with markdown text, and do simple visualizations. You can start an interactive session to work with the Spark cluster.

This is a good option for data science needs where the enterprise allows you to create such notebooks in the production environment to test out various scenarios.

Coming Soon: Multi-user support is in progress; please provide your feedback.

Spark-Submit Command

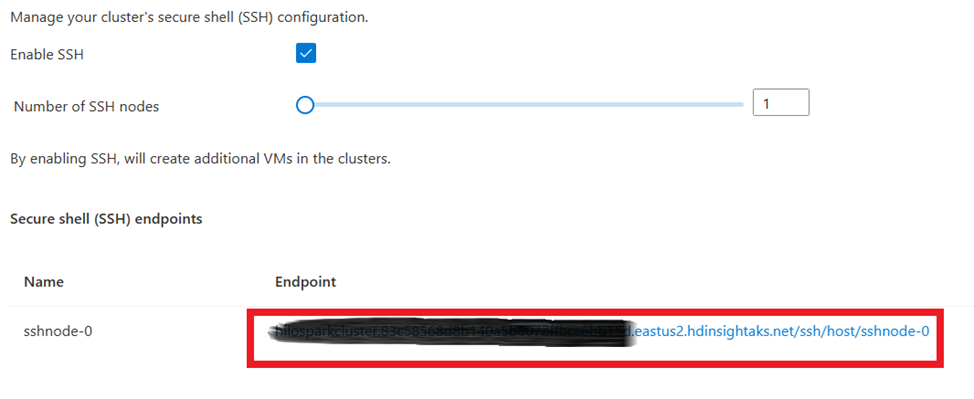

The HDInsight on AKS Spark cluster lets you create SSH pods, which become part of the cluster. Users can run multiple concurrent web SSH sessions. All authorized users of the cluster share these SSH pods.

HDInsight on AKS uses a modern security architecture: all storage access goes through MSI (Managed Service Identity). The default storage container is the default location for cluster logs and other application needs.

Enterprises often want to use the spark-submit command to submit their Spark application (Python file or JAR file) to the HDInsight on AKS cluster. These application binaries can be stored in the primary storage account attached to the cluster.

If you would like to use an alternative storage account for these application binaries, you must authorize the cluster to read from it. Assign the “Storage Blob Data Reader” role on the alternative storage account to the user-assigned MSI that was used to create the cluster. This allows the cluster to access the JAR file for execution.

To test the complete flow, you can download the Apache Spark example JAR file from the git location. In the portal, click “Secure shell (SSH)” in the cluster user interface, and then click the endpoint for the SSH node.

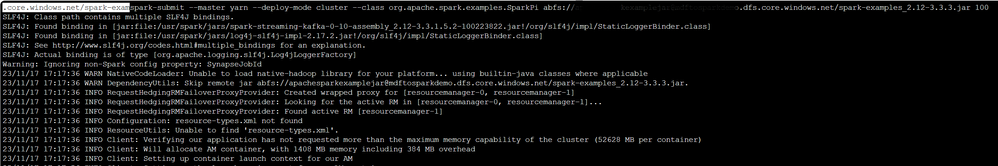

Once you log in to the SSH node, submit the job to the cluster with the following spark-submit command:

spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi abfs://<<containername>>@<<storageaccount>>.dfs.core.windows.net/<<filesystem>>/spark-examples_2.12-3.3.3.jar 100
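If the submission is driven from a script on the SSH node rather than typed by hand, the same command can be assembled as an argument list. The following is a minimal sketch; the helper names `build_spark_submit` and `run_spark_submit` are hypothetical, and the storage URI keeps the placeholders from the command above.

```python
import shlex
import subprocess


def build_spark_submit(jar_uri, main_class, app_args, master="yarn", deploy_mode="cluster"):
    """Return the spark-submit argument list for a JAR application."""
    return [
        "spark-submit",
        "--master", master,
        "--deploy-mode", deploy_mode,
        "--class", main_class,
        jar_uri,
        *[str(arg) for arg in app_args],
    ]


def run_spark_submit(cmd):
    """Run the command; raises CalledProcessError on a non-zero exit code."""
    subprocess.run(cmd, check=True)


# Placeholder URI copied from the command above; replace the <<...>> parts.
JAR_URI = (
    "abfs://<<containername>>@<<storageaccount>>.dfs.core.windows.net/"
    "<<filesystem>>/spark-examples_2.12-3.3.3.jar"
)

cmd = build_spark_submit(JAR_URI, "org.apache.spark.examples.SparkPi", [100])
print(shlex.join(cmd))  # inspect the shell-quoted command before running it

# On the SSH node, uncomment to actually submit the job:
# run_spark_submit(cmd)
```

Passing the arguments as a list avoids shell-quoting problems when application arguments contain spaces or special characters.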

The output will be as follows:

Livy Batch Job API

You can access the Spark cluster's Livy APIs (Application Programming Interfaces) from a client (command line, Java/Python application, Airflow, etc.) using the OAuth 2.0 client credentials flow. A service principal defined in Microsoft Entra ID (formerly Azure Active Directory) acts as the client, and authentication and authorization policies are enforced against it in the HDInsight on AKS Spark cluster.

Perform the following steps to access the Livy REST (Representational State Transfer) APIs:

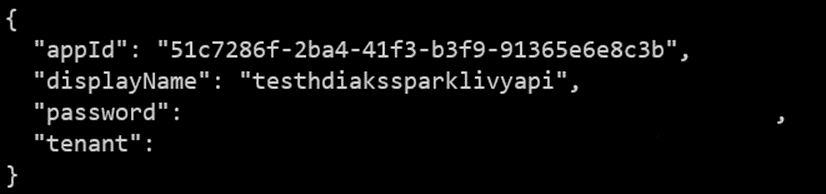

- Create a Microsoft Entra service principal to access Azure, and make a note of appId, password, and tenant from the response.

az ad sp create-for-rbac -n <sp name>

- Grant access to the cluster for the newly created service principal.
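The appId, password, and tenant values noted in the first step are what client code needs later to request a token. As a minimal sketch, the JSON printed by `az ad sp create-for-rbac` can be mapped to the environment variable names that the azure-identity library reads for a service principal (`AZURE_TENANT_ID`, `AZURE_CLIENT_ID`, `AZURE_CLIENT_SECRET`). The helper names `sp_to_env` and `to_dotenv` and the sample values are hypothetical.

```python
import json


def sp_to_env(sp_json):
    """Map `az ad sp create-for-rbac` JSON output to azure-identity env var names."""
    sp = json.loads(sp_json)
    return {
        "AZURE_TENANT_ID": sp["tenant"],
        "AZURE_CLIENT_ID": sp["appId"],
        "AZURE_CLIENT_SECRET": sp["password"],
    }


def to_dotenv(env):
    """Render the mapping as KEY=value lines suitable for a .env file."""
    return "\n".join(f"{key}={value}" for key, value in env.items()) + "\n"


# Dummy values for illustration only; never commit real secrets.
sample = json.dumps({
    "appId": "00000000-0000-0000-0000-000000000000",
    "displayName": "demo-sp",
    "password": "dummy-secret",
    "tenant": "11111111-1111-1111-1111-111111111111",
})

env = sp_to_env(sample)
print(to_dotenv(env))
```

For production use, a secret store such as Key Vault is generally a safer home for the password than a file on disk.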

There are a couple of options to access Livy API:

Option #1: Accessing the Livy API from REST client apps such as Postman

- The Livy REST APIs are protected resources of the Spark cluster, so you need an OAuth token to access them. Send a POST request to https://login.microsoftonline.com/<<tenant id>>/oauth2/v2.0/token with the following payload:

- grant_type=client_credentials

- scope=https://hilo.azurehdinsight.net/.default

- client_id=<<app id from step#1>>

- client_secret=<<password>>

- Extract “access_token” from the response.

- Create the job submission API request. Set the OAuth token received in the previous step as the Bearer token in the Authorization header, and POST the following payload to https://<<your cluster dns name>>/p/livy/batches:

{ "className": "org.apache.spark.examples.SparkPi",

"args": [10],

"name":"testjob",

"file": "abfs://<<containername>>@<<storageaccount>>.dfs.core.windows.net/<<filesystem>>/spark-examples_2.12-3.3.3.jar"

}
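For readers who want to see the shape of the token request outside a GUI client, the steps above can be sketched with the Python standard library alone. This is an illustration under assumptions, not the only way to do it: the function names are hypothetical, and the tenant, client ID, and secret shown are dummy placeholders.

```python
import json
import urllib.parse
import urllib.request

SCOPE = "https://hilo.azurehdinsight.net/.default"


def build_token_request(tenant_id, client_id, client_secret):
    """Build the OAuth 2.0 client-credentials token request (not yet sent)."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "scope": SCOPE,
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    # With a data body, urllib sends the request as a form-encoded POST.
    return urllib.request.Request(url, data=form, method="POST")


def fetch_access_token(request):
    """Send the request and return the access_token field of the JSON response."""
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["access_token"]


def bearer_header(access_token):
    """Authorization header to attach to the Livy job submission request."""
    return {"Authorization": f"Bearer {access_token}"}


# Dummy placeholders; substitute the values noted when creating the service principal.
req = build_token_request("TENANT_ID", "APP_ID", "PASSWORD")
print(req.full_url)

# These calls need real credentials and network access:
# token = fetch_access_token(req)
# headers = bearer_header(token)
```

Separating request construction from sending makes it easy to inspect the URL and form body before any credentials leave the machine.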

Option #2: Accessing the Livy REST APIs from Azure Data Factory Managed Airflow, an Azure Function App, etc.

- Build the API request flow using the application code.

- Store “AppId” and “password” in Key Vault, or keep them in a “.env” file, so that your application code can read them to generate an OAuth token. For example, in the case of the “.env” file:

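from azure.identity import DefaultAzureCredential

from dotenv import load_dotenv

# Load AZURE_TENANT_ID, AZURE_CLIENT_ID, and AZURE_CLIENT_SECRET from the ".env" file

load_dotenv(".env")

# DefaultAzureCredential picks up the service principal from those environment variables

token_credential = DefaultAzureCredential()

accessToken = token_credential.get_token("https://hilo.azurehdinsight.net/.default")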

- Create the job submission API request. The payload supports an extensive list of parameters; refer to the API specification for details.

import requests

cluster_dns = "*****.hdinsightaks.net"

livy_job_url = "https://" + cluster_dns + "/p/livy/batches"

request_payload = {"className": "org.apache.spark.examples.SparkPi",

"args": [10],

"name": "testjob",

"file": "abfs://<<containername>>@<<storageaccount>>.dfs.core.windows.net/<<filesystem>>/spark-examples_2.12-3.3.3.jar"

}

# Pass the OAuth access token as a Bearer token

headers = {'Authorization': f'Bearer {accessToken.token}'}

response = requests.post(livy_job_url, json=request_payload, headers=headers)

print(response.json())

The response to the request will be as follows:
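Per the Apache Livy REST API, a batch object includes an `id` and a `state` field, so the submitting code can poll the batch until it finishes. The following is a minimal sketch of such a loop. The function name `wait_for_batch`, the set of terminal states, and the fake fetcher are assumptions made for illustration; nothing here contacts a cluster.

```python
import time

# Assumed terminal states, based on the Apache Livy session-state list.
TERMINAL_STATES = {"success", "dead", "killed", "error"}


def wait_for_batch(get_batch, batch_id, poll_seconds=10.0, max_polls=60, sleep=time.sleep):
    """Poll until the batch reaches a terminal state; return the last response."""
    for _ in range(max_polls):
        batch = get_batch(batch_id)
        if batch.get("state") in TERMINAL_STATES:
            return batch
        sleep(poll_seconds)
    raise TimeoutError(f"batch {batch_id} not finished after {max_polls} polls")


# Offline demonstration with a fake fetcher that walks through three states.
_fake_states = iter(["starting", "running", "success"])


def fake_get_batch(batch_id):
    return {"id": batch_id, "state": next(_fake_states)}


result = wait_for_batch(fake_get_batch, 0, sleep=lambda seconds: None)
print(result)
```

Against a real cluster, `get_batch` could be a small function that issues a GET to the batch URL (the `/p/livy/batches` path from above with the batch id appended, which is an assumption about this service's routing) using the same Authorization header, and returns the parsed JSON.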

Summary

In this blog, we have seen multiple ways to submit your Spark application to a Spark cluster on HDInsight on AKS. Pick the method that best fits your implementation needs.
