First published on TECHNET on Nov 18, 2016

// This post was written by Dan Lovinger, Principal Software Engineer.

Howdy,

In the weeks since the release of Windows Server 2016, the amount of interest we’ve seen in Storage Spaces Direct has been nothing short of spectacular. This interest has translated to many potential customers looking to evaluate Storage Spaces Direct.

Windows Server has a strong heritage with do-it-yourself design. We’ve even done it ourselves with the Project Kepler-47 proof of concept ! While over the coming months there will be many OEM-validated solutions coming to market, many more experimenters are once again piecing together their own configurations.

This is great, and it has led to a lot of questions, particularly about Solid-State Drives (SSDs). One dominates: "Is [some drive] a good choice for a cache device?" Another comes in close behind: "We’re using [some drive] as a cache device and performance is horrible, what gives?”

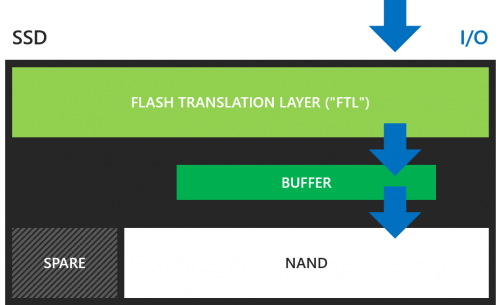

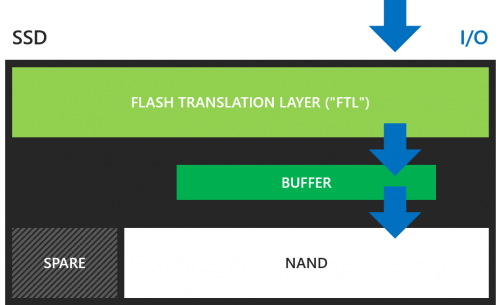

[caption id="attachment_7256" align="aligncenter" width="500"] The flash translation layer masks a variety of tricks an SSD can use to accelerate performance and extend its lifetime, such as buffering and spare capacity.[/caption]

The flash translation layer masks a variety of tricks an SSD can use to accelerate performance and extend its lifetime, such as buffering and spare capacity.[/caption]

Some background on SSDs

As I write this in late 2016, an SSD is universally a device built from a set of NAND flash dies connected to an internal controller, called the flash translation layer ("FTL").

NAND flash is inherently unstable. At the physical level, a flash cell is a charge trap device – a bucket for storing electrons. The high voltages needed to trigger the quantum tunneling process that moves electrons in and out of the cell – your data – slowly accumulates damage at the atomic level. Failure does not happen all at once. Charge degrades in-place over time and even reads aren’t without cost, a phenomenon known as read disturb.

The number of electrons in the cell’s charge trap translate to a measurable voltage. At its most basic, a flash cell stores one on/off bit – a single level cell (SLC) – and the difference between 0 and 1 is “easy”. There is only one threshold voltage to consider. On one side the cell represents 0, on the other it is 1.

However, conventional SSDs have moved on from SLC designs. Common SSDs now store two (MLC) or even three (TLC) bits per cell, requiring four (00, 01, 10, 11) or eight (001, 010, … 110, 111) different charge levels. On the horizon is 4 bit QLC NAND, which will require sixteen! As the damage accumulates it becomes difficult to reliably set charge levels; eventually, they cannot store new data. This happens faster and faster as bit densities increase.

The FTL has two basic defenses.

Both defenses work like a bank account.

Over the short term, some amount of the ECC is needed to recover the data on each read. Lightly-damaged cells or recently-written data won’t draw heavily on ECC, but as time passes, more of the ECC is necessary to recover the data. When it passes a safety margin, the data must be re-written to “refresh” the data and ECC, and the cycle continues.

Across a longer term, the over-provisioning in the device replaces failed cells and preserves the apparent capacity of the SSD. Once this account is drawn down, the device is at the end of its life.

To complete the physical picture, NAND is not freely writable. A die is divided into what we refer to as program/erase "P/E" pages. These are the actual writable elements. A page must first be erased to prepare writing it, then the entire page can be written at once. A page may be as small as 16K, or potentially much larger. Any one single write that arrives in the SSD probably won’t line up with the page size!

And finally, NAND never re-writes in place. The FTL is continuously keeping track of wear, preparing fresh erased pages, and consolidating valid data sitting in pages alongside stale data corresponding to logical blocks which have already been re-written. These are additional reasons for over-provisioning.

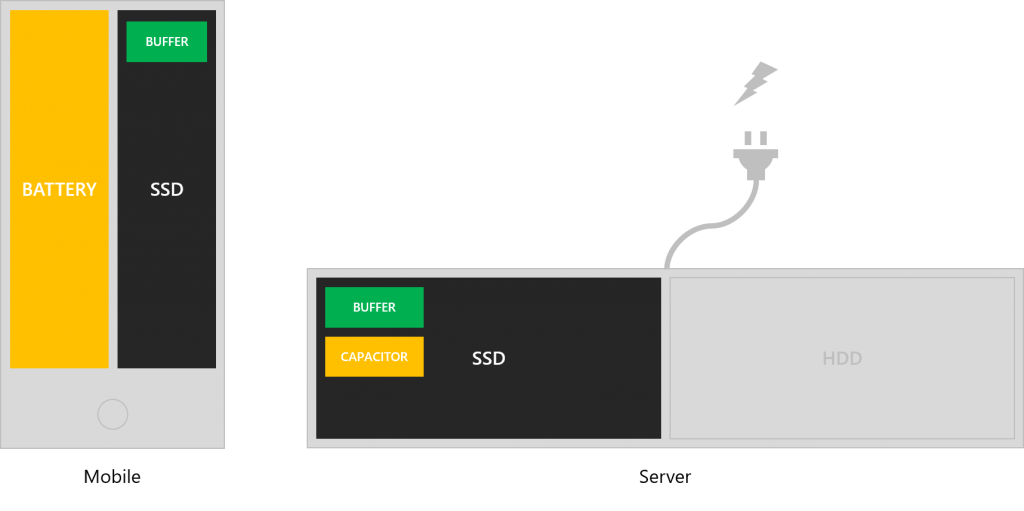

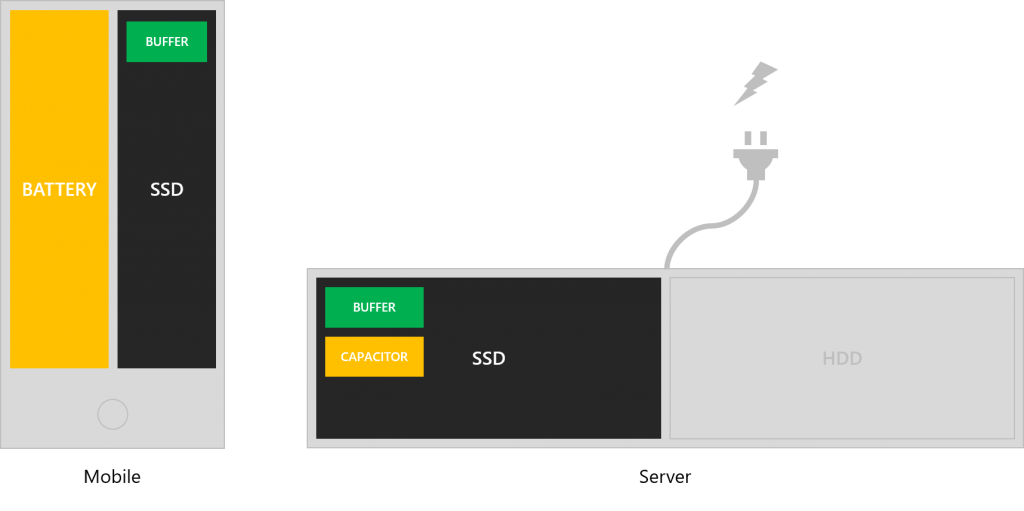

[caption id="attachment_7325" align="aligncenter" width="879"] In consumer devices, and especially in mobile, an SSD can safely leverage an unprotected, volatile cache because the device’s battery ensures it will not unexpectedly lose power. In servers, however, an SSD must provide its own power protection, typically in the form of a capacitor.[/caption]

In consumer devices, and especially in mobile, an SSD can safely leverage an unprotected, volatile cache because the device’s battery ensures it will not unexpectedly lose power. In servers, however, an SSD must provide its own power protection, typically in the form of a capacitor.[/caption]

Buffers and caches

The bottom line is that a NAND flash SSD is a complex, dynamic environment and there is a lot going on to keep your data safe. As device densities increase, it is getting ever harder. We must maximize the value of each write, as it takes the device one step closer to failure. Fortunately, we have a trick: a buffer.

A buffer in an SSD is just like the cache in the system that surrounds it: some memory which can accumulate writes, allowing the user/application request to complete while it gathers more and more data to write efficiently to the NAND flash. Many small operations turn into a small number of larger operations. Just like the memory in a conventional computer, though, on its own that buffer is volatile – if a power loss occurs, any pending write operations are lost.

Losing data is, of course, not acceptable. Storage Spaces Direct is at the far end of a series of actions which have led to it getting a write. A virtual machine on another computer may have had an application issue a flush which, in a physical system, would put the data on stable storage. After Storage Spaces Direct acknowledges any write, it must be stable.

How can any SSD have a volatile cache!? Simple, and it is a crucial detail of how the SSD market has differentiated itself: you are very likely reading this on a device with a battery! Consumer flash is volatile in the device but not volatile when considering the entire system – your phone, tablet or laptop. Making a cache non-volatile requires some form of power storage (or new technology …), which adds unneeded expense in the consumer space.

What about servers? In the enterprise space, the cost and complexity of providing complete power safety to a collection of servers can be prohibitive. This is the design point enterprise SSDs sit in: the added cost of internal power capacity to allow saving the buffer content is small.

[caption id="attachment_7295" align="aligncenter" width="879"] An (older) enterprise-grade SSD, with its removable and replaceable built-in battery![/caption]

An (older) enterprise-grade SSD, with its removable and replaceable built-in battery![/caption]

[caption id="attachment_7305" align="aligncenter" width="879"] This newer enterprise-grade SSD, foreground, uses a capacitor (the three little yellow things, bottom right) to provide power-loss protection.[/caption]

This newer enterprise-grade SSD, foreground, uses a capacitor (the three little yellow things, bottom right) to provide power-loss protection.[/caption]

Along with volatile caches, consumer flash is also universally of lower endurance. A consumer device targets environments with light activity. Extremely dense, inexpensive, fragile NAND flash - which may wear out after only a thousand writes - could still provide many years of service. However, expressed in total writes over time or capacity written per day, a consumer device could wear out more than 10x faster than available enterprise-class SSD.

So, where does that leave us? Two requirements for SSDs for Storage Spaces Direct. One hard, one soft, but they normally go together:

But … could I get away with it? And more crucially – for us – what happens if I just put a consumer-grade SSD with a volatile write cache in a Storage Spaces Direct system?

An experiment with consumer-grade SSDs

For this experiment, we’ll be using a new-out-of-box 1 TB consumer class SATA SSD. While we won’t name it, it is a first tier, high quality, widely available device. It just happens to not be appropriate for an enterprise workload like Storage Spaces Direct, as we’ll see shortly.

In round numbers, its data sheet says the following:

Note: QD ("queue depth") is geek-speak for the targeted number of IOs outstanding during a storage test. Why do you always see 32? That’s the SATA Native Command Queueing (NCQ) limit to which commands can be pipelined to a SATA device. SAS and especially NVME can go much deeper.

Translating the endurance to the widely-used device-writes-per-day (DWPD) metric, over the device’s 5-year warranty period that is

The device can handle just over 100 GB each day for 5 years before its endurance is exhausted. That’s a lot of Netflix and web browsing for a single user! Not so much for a large set of virtualized workloads.

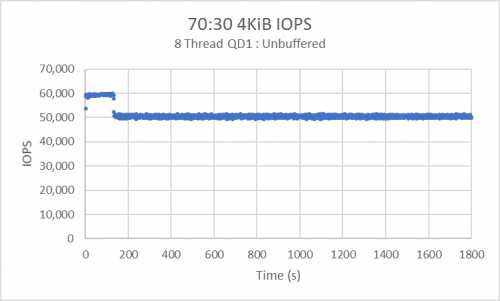

To gather the data below, I prepared the device with a 100 GiB load file, written through sequentially a little over 2 times. I used DISKSPD 2.0.18 to do a QD8 70:30 4 KiB mixed read/write workload using 8 threads, each issuing a single IO at a time to the SSD. First with the write buffer enabled:

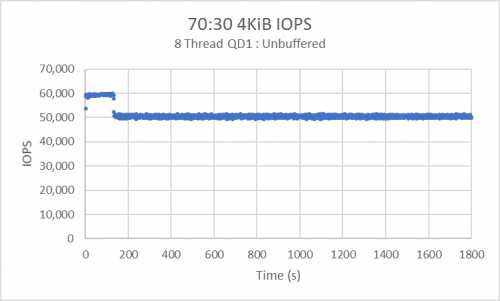

[caption id="attachment_7315" align="aligncenter" width="500"] Normal unbuffered IO sails along, with a small write cliff.[/caption]

Normal unbuffered IO sails along, with a small write cliff.[/caption]

The first important note here is the length of the test: 30 minutes. This shows an abrupt drop of about 10,000 IOPS two minutes in – this is normal, certainly for consumer devices. It likely represents the FTL running out of pre-erased NAND ready for new writes. Once its reserve runs out, the device runs slower until a break in the action lets it catch back up. With web browsing and other consumer scenarios. the chances of noticing this are small.

An aside: this is a good, stable device in each mode of operation – behavior before and after the "write cliff" is very clean.

Second, note that the IOPS are … a bit different than the data sheet might have suggested, even before it reaches steady operation. We’re intentionally using a light, QD8 70:30 4K to drive it more like a generalized workload. It still rolls over the write cliff. Under sustained, mixed IO pressure the FTL has much more work to take care of and it shows.

That’s with the buffer on, though. Now just adding write-through (with -Su w ):

[caption id="attachment_7316" align="aligncenter" width="500"] Write-through IO exposes the true latency of NAND, normally masked by the FTL/buffer.[/caption]

Write-through IO exposes the true latency of NAND, normally masked by the FTL/buffer.[/caption]

Wow!

First: it’s great that the device honors write-through requests. In the consumer space, this gives an application a useful tool for making data durable when it must be durable. This is a good device!

Second, oh my does the performance drop off. This is no longer an "SSD": especially as it goes over the write cliff – which is still there – it’s merely a fast HDD, at about 220 IOPS. Writing NAND is slow! This is the FTL forced to push all the way into the NAND flash dies, immediately, without being able to buffer, de-conflict the read and write IO streams and manage all the other background activity it needs to do.

Third, those immediate writes take what is already a device with modest endurance and deliver a truly crushing blow to its total lifetime.

Crucially, this is how Storage Spaces Direct would see this SSD. Not much of a "cache" anymore.

So, why does a non-volatile buffer help?

It lets the SSD claim that a write is stable once it is in the buffer. A write-through operation – or a flush, or a request to disable the cache – can be honored without forcing all data directly into the NAND. We’ll get the good behavior, the stated endurance, and the data stability we require for reliable, software-defined storage to a complex workload.

In short, your device will behave much as we saw in the first chart: a nice, flat, fast performance profile. A good cache device. If it’s NVMe it may be even more impressive, but that’s a thought for another time.

Finally, how do you identify a device with a non-volatile buffer cache?

Datasheet, datasheet, datasheet. Look for language like:

… along with many others across the industry. You should be able to find a device from your favored provider. These will be more expensive than consumer grade devices, but hopefully we’ve convinced you why they are worth it.

Be safe out there!

/ Dan Lovinger

// This post was written by Dan Lovinger, Principal Software Engineer.

Howdy,

In the weeks since the release of Windows Server 2016, the amount of interest we’ve seen in Storage Spaces Direct has been nothing short of spectacular. This interest has translated to many potential customers looking to evaluate Storage Spaces Direct.

Windows Server has a strong heritage with do-it-yourself design. We’ve even done it ourselves with the Project Kepler-47 proof of concept! While many OEM-validated solutions will be coming to market over the coming months, many more experimenters are once again piecing together their own configurations.

This is great, and it has led to a lot of questions, particularly about solid-state drives (SSDs). One dominates: “Is [some drive] a good choice for a cache device?” Another comes in close behind: “We’re using [some drive] as a cache device and performance is horrible, what gives?”

[Figure: The flash translation layer masks a variety of tricks an SSD can use to accelerate performance and extend its lifetime, such as buffering and spare capacity.]

Some background on SSDs

As I write this in late 2016, an SSD is universally a device built from a set of NAND flash dies connected to an internal controller, called the flash translation layer ("FTL").

NAND flash is inherently unstable. At the physical level, a flash cell is a charge trap device: a bucket for storing electrons. The high voltages needed to trigger the quantum tunneling that moves electrons (your data) in and out of the cell slowly cause damage to accumulate at the atomic level. Failure does not happen all at once. Charge degrades in place over time, and even reads aren’t without cost, a phenomenon known as read disturb.

The number of electrons in the cell’s charge trap translates to a measurable voltage. At its most basic, a flash cell stores one on/off bit (a single-level cell, SLC), and the difference between 0 and 1 is “easy”: there is only one threshold voltage to consider. On one side the cell represents 0, on the other it is 1.

However, conventional SSDs have moved on from SLC designs. Common SSDs now store two (MLC) or even three (TLC) bits per cell, requiring four (00, 01, 10, 11) or eight (000, 001, … 110, 111) different charge levels. On the horizon is 4-bit QLC NAND, which will require sixteen! As damage accumulates, it becomes difficult to reliably set the charge levels; eventually, the cells cannot store new data at all. The more bits per cell, the sooner this happens. Typical endurance, in writes per cell:

- SLC: 100,000 or more writes per cell

- MLC: 10,000 to 20,000

- TLC: low to mid 1,000s

- QLC: mid-100s
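The charge-level arithmetic above is repeated doubling. With n bits per cell, the cell needs 2^n distinguishable levels, separated by 2^n - 1 threshold voltages. Below is a minimal Python sketch of that relationship. The function names are illustrative only.

```python
# Sketch: how many charge levels (and read thresholds) a NAND cell must
# distinguish, by number of bits stored per cell.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell: int) -> int:
    """Each additional bit doubles the number of charge levels."""
    return 2 ** bits_per_cell


def read_thresholds(bits_per_cell: int) -> int:
    """N charge levels are separated by N - 1 threshold voltages."""
    return charge_levels(bits_per_cell) - 1


def level_patterns(bits_per_cell: int) -> list[str]:
    """Bit pattern for each level, e.g. '000' through '111' for TLC."""
    return [format(i, f"0{bits_per_cell}b") for i in range(charge_levels(bits_per_cell))]


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {charge_levels(bits)} levels, {read_thresholds(bits)} thresholds")
```

SLC has a single threshold. QLC must separate fifteen, so each added bit leaves less margin between neighboring levels.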

The FTL has two basic defenses:

- error correcting codes (ECC) stored alongside the data

- extra physical capacity over and above the apparent size of the device, called "over-provisioning"

Both defenses work like a bank account.

Over the short term, some amount of the ECC is needed to recover the data on each read. Lightly damaged cells or recently written data won’t draw heavily on ECC, but as time passes, more of the ECC is needed to recover the data. When that draw passes a safety margin, the data must be rewritten to “refresh” both the data and its ECC, and the cycle continues.

Across a longer term, the over-provisioning in the device replaces failed cells and preserves the apparent capacity of the SSD. Once this account is drawn down, the device is at the end of its life.
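To make the bank-account analogy concrete, here is a toy Python model of both accounts. The assumptions are loudly hypothetical. The 70% refresh margin, the drift rate and the spare-block count are invented for illustration and do not describe any real FTL.

```python
# Toy model of the two "bank accounts" an FTL draws on. All thresholds and
# rates are hypothetical; real FTL policies are proprietary and far richer.
from dataclasses import dataclass

ECC_REFRESH_MARGIN = 0.7  # hypothetical: refresh once 70% of ECC strength is in use


@dataclass
class StoredData:
    ecc_draw: float = 0.0  # fraction of ECC correction capacity needed to read it

    def age(self, drift: float) -> bool:
        """Charge drifts over time; returns True if the data had to be refreshed."""
        self.ecc_draw += drift
        if self.ecc_draw > ECC_REFRESH_MARGIN:
            # Rewriting the data to fresh cells restores full ECC headroom.
            self.ecc_draw = 0.0
            return True
        return False


@dataclass
class SpareCapacity:
    spare_blocks: int  # over-provisioned blocks not visible to the host

    def retire_failed_block(self) -> bool:
        """Swap a worn-out block for a spare; False means the account is empty."""
        if self.spare_blocks == 0:
            return False  # end of life: apparent capacity can no longer be kept
        self.spare_blocks -= 1
        return True


# Short-term account: with 0.25 drift per step, the data is refreshed twice in 8 steps.
data = StoredData()
refreshes = sum(data.age(0.25) for _ in range(8))

# Long-term account: two spares cover two failures; the third ends the device's life.
spares = SpareCapacity(spare_blocks=2)
outcomes = [spares.retire_failed_block() for _ in range(3)]
```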

To complete the physical picture, NAND is not freely writable. Data is written ("programmed") a whole page at a time, and a page must be erased before it can be programmed again. This program/erase ("P/E") cycle is what wears cells out. Erasing is coarser still: it happens in blocks made up of many pages. A page may be as small as 16K, or potentially much larger. Any single write that arrives at the SSD probably won’t line up with the page size!

And finally, NAND data is never rewritten in place. The FTL is continuously tracking wear, preparing freshly erased pages, and consolidating valid data that sits alongside stale data belonging to logical blocks that have already been rewritten elsewhere. These are additional reasons for over-provisioning.
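Out-of-place writing can be sketched as a logical-to-physical map. The `ToyFTL` class below is hypothetical and heavily simplified. Each logical block maps to exactly one page, and a `reclaim` step stands in for garbage collection plus erase. It still shows why rewriting data leaves stale pages behind that the FTL must later clean up.

```python
# Toy flash translation layer illustrating out-of-place writes. Hypothetical
# and simplified: one logical block per physical page, and reclaim() stands in
# for garbage collection followed by an erase.
class ToyFTL:
    def __init__(self, physical_pages: int) -> None:
        self.l2p: dict[int, int] = {}                       # logical block -> physical page
        self.free: list[int] = list(range(physical_pages))  # pre-erased pages
        self.stale: set[int] = set()                        # pages holding superseded data

    def write(self, lba: int) -> int:
        """Write a logical block to a fresh page; never overwrite in place."""
        if not self.free:
            raise RuntimeError("no pre-erased pages left; garbage collection needed")
        page = self.free.pop(0)
        old = self.l2p.get(lba)
        if old is not None:
            self.stale.add(old)  # the previous copy becomes stale, not erased
        self.l2p[lba] = page
        return page

    def reclaim(self) -> int:
        """Erase stale pages and return them to the free pool."""
        reclaimed = len(self.stale)
        self.free.extend(sorted(self.stale))
        self.stale.clear()
        return reclaimed


ftl = ToyFTL(physical_pages=4)
ftl.write(0)  # lands on page 0
ftl.write(1)  # lands on page 1
ftl.write(0)  # lands on page 2; page 0 now holds stale data
```

Real devices erase whole blocks, so reclaiming space usually means first copying still-valid pages elsewhere. That relocation is one more consumer of the spare capacity described above.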

[Figure: In consumer devices, and especially in mobile, an SSD can safely leverage an unprotected, volatile cache because the device’s battery ensures it will not unexpectedly lose power. In servers, however, an SSD must provide its own power protection, typically in the form of a capacitor.]

Buffers and caches

The bottom line is that a NAND flash SSD is a complex, dynamic environment and there is a lot going on to keep your data safe. As device densities increase, it is getting ever harder. We must maximize the value of each write, as it takes the device one step closer to failure. Fortunately, we have a trick: a buffer.

A buffer in an SSD is just like the cache in the system that surrounds it: some memory which can accumulate writes, allowing the user/application request to complete while it gathers more and more data to write efficiently to the NAND flash. Many small operations turn into a small number of larger operations. Just like the memory in a conventional computer, though, on its own that buffer is volatile – if a power loss occurs, any pending write operations are lost.
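Below is a minimal sketch of the coalescing idea. It assumes a 16 KiB NAND page (the small end of the page sizes mentioned earlier) and 4 KiB host writes. `CoalescingBuffer` is a hypothetical illustration, not a description of how any particular controller works.

```python
# Sketch of write coalescing in an SSD buffer. The 16 KiB page size is an
# assumption; real page sizes vary by NAND generation.
PAGE_SIZE = 16 * 1024
IO_SIZE = 4 * 1024


class CoalescingBuffer:
    def __init__(self, page_size: int = PAGE_SIZE) -> None:
        self.page_size = page_size
        self.pending = bytearray()  # acknowledged to the host, not yet in NAND
        self.pages_programmed = 0

    def write(self, data: bytes) -> None:
        """Accept a write (the host sees it complete here), then program full pages."""
        self.pending += data
        while len(self.pending) >= self.page_size:
            self._program_page(self.pending[: self.page_size])
            del self.pending[: self.page_size]

    def _program_page(self, page: bytearray) -> None:
        self.pages_programmed += 1  # stand-in for one NAND program operation


buffered = CoalescingBuffer()
for _ in range(16):
    buffered.write(bytes(IO_SIZE))
# Sixteen 4 KiB writes fill exactly four 16 KiB pages.
```

In this toy, forcing each write straight to NAND would take sixteen programs, each filling only a quarter of a page. The bytes still sitting in `pending` are exactly what a volatile buffer loses on power failure.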

Losing data is, of course, not acceptable. Storage Spaces Direct sits at the far end of a chain of actions that ends with a write arriving at it. A virtual machine on another computer may have had an application issue a flush which, in a physical system, would put the data on stable storage. Once Storage Spaces Direct acknowledges a write, that write must be stable.

How can any SSD have a volatile cache!? Simple, and it is a crucial detail of how the SSD market has differentiated itself: you are very likely reading this on a device with a battery! Consumer flash is volatile in the device but not volatile when considering the entire system – your phone, tablet or laptop. Making a cache non-volatile requires some form of power storage (or new technology …), which adds unneeded expense in the consumer space.

What about servers? In the enterprise space, the cost and complexity of providing complete power safety to a collection of servers can be prohibitive. This is the design point enterprise SSDs address: by comparison, the added cost of enough internal power storage to save the buffer contents is small.

[Figure: An (older) enterprise-grade SSD, with its removable and replaceable built-in battery!]

[Figure: This newer enterprise-grade SSD, foreground, uses capacitors (the three little yellow things, bottom right) to provide power-loss protection.]

Along with volatile caches, consumer flash is also universally of lower endurance. A consumer device targets environments with light activity, where extremely dense, inexpensive, fragile NAND flash, which may wear out after only a thousand writes, can still provide many years of service. However, expressed in total writes over time or capacity written per day, a consumer device could wear out more than 10x faster than enterprise-class SSDs on the market.

So, where does that leave us? There are two requirements for SSDs used with Storage Spaces Direct, one hard and one soft, though they normally go together:

- the device must have a non-volatile write cache

- the device should have enterprise-class endurance

But … could I get away with it? And more crucially – for us – what happens if I just put a consumer-grade SSD with a volatile write cache in a Storage Spaces Direct system?

An experiment with consumer-grade SSDs

For this experiment, we’ll be using a brand-new, out-of-the-box 1 TB consumer-class SATA SSD. While we won’t name it, it is a first-tier, high-quality, widely available device. It just happens not to be appropriate for an enterprise workload like Storage Spaces Direct, as we’ll see shortly.

In round numbers, its data sheet says the following:

- QD32 4K Read: 95,000 IOPS

- QD32 4K Write: 90,000 IOPS

- Endurance: 185 TB written over the device lifetime

Note: QD ("queue depth") is geek-speak for the targeted number of IOs outstanding during a storage test. Why do you always see 32? That’s the limit of SATA Native Command Queueing (NCQ): at most 32 commands can be queued to a SATA device at once. SAS and especially NVMe can go much deeper.

Translating the endurance to the widely used drive-writes-per-day (DWPD) metric over the device’s 5-year warranty period:

185 TB / (5 years × 365 days = 1,825 days) ≈ 101 GB writable per day

101 GB / 1 TB total capacity ≈ 0.10 DWPD

The device can handle just over 100 GB each day for 5 years before its endurance is exhausted. That’s a lot of Netflix and web browsing for a single user! Not so much for a large set of virtualized workloads.
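Here is the same endurance arithmetic as a short Python helper. It assumes decimal units (1 TB = 1,000 GB, as drive data sheets typically use) and ignores leap days.

```python
# Endurance arithmetic for the data-sheet figures above, in decimal units.
def gb_per_day(endurance_tb: float, warranty_years: float) -> float:
    """Average host writes per day that exhaust the rated endurance exactly at warranty end."""
    days = warranty_years * 365
    return endurance_tb * 1000 / days


def drive_writes_per_day(endurance_tb: float, capacity_tb: float, warranty_years: float) -> float:
    """Daily write budget expressed as a fraction of the drive's capacity."""
    return gb_per_day(endurance_tb, warranty_years) / (capacity_tb * 1000)


print(f"{gb_per_day(185, 5):.0f} GB/day")              # 101 GB/day
print(f"{drive_writes_per_day(185, 1, 5):.2f} DWPD")   # 0.10 DWPD
```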

To gather the data below, I prepared the device with a 100 GiB load file, written through sequentially a little over 2 times. I used DISKSPD 2.0.18 to do a QD8 70:30 4 KiB mixed read/write workload using 8 threads, each issuing a single IO at a time to the SSD. First with the write buffer enabled:

```
diskspd.exe -t8 -b4k -r4k -o1 -w30 -Su -D -L -d1800 -Rxml Z:\load.bin
```

[Figure: Normal unbuffered IO sails along, with a small write cliff.]

The first important note here is the length of the test: 30 minutes. The chart shows an abrupt drop of about 10,000 IOPS two minutes in. This is normal, certainly for consumer devices, and likely represents the FTL running out of pre-erased NAND ready for new writes. Once that reserve runs out, the device runs slower until a break in the action lets it catch back up. With web browsing and other consumer scenarios, the chances of noticing this are small.

An aside: this is a good, stable device in each mode of operation – behavior before and after the "write cliff" is very clean.

Second, note that the IOPS are … a bit different than the data sheet might have suggested, even before the device reaches steady operation. We’re intentionally using a light QD8 70:30 4K mix to drive it more like a generalized workload, and it still rolls over the write cliff. Under sustained, mixed IO pressure the FTL has much more work to take care of, and it shows.
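The write cliff can be illustrated with a toy model. A reserve of pre-erased pages absorbs writes at full speed, while background cleanup frees pages at a slower, fixed rate. Every number below is hypothetical, chosen only to make the shape visible: the demand, the reserve size, the cleanup rate and the four 4 KiB writes per page. The model is not fitted to the measured device.

```python
# Toy model of the "write cliff". All parameters are hypothetical.
def simulate_write_cliff(seconds: int, demand_iops: float, reserve_pages: float,
                         erase_pages_per_s: float, writes_per_page: int = 4) -> list[float]:
    """Return the write IOPS served in each second of a sustained workload."""
    reserve = reserve_pages
    served = []
    for _ in range(seconds):
        reserve += erase_pages_per_s                  # background cleanup frees pages
        pages_wanted = demand_iops / writes_per_page
        pages_used = min(pages_wanted, reserve)       # cannot use pages that aren't erased
        reserve -= pages_used
        served.append(pages_used * writes_per_page)
    return served


iops = simulate_write_cliff(seconds=20, demand_iops=4000, reserve_pages=5000,
                            erase_pages_per_s=500)
# Seconds 1-10 run at 4000 IOPS; from second 11 the rate falls to 2000 IOPS,
# the pace at which cleanup produces erased pages.
```

Lowering `demand_iops` for a stretch lets the reserve refill, mirroring how a break in the action lets the device catch back up. Unlike a real device, this toy does not cap the size of the reserve.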

That’s with the buffer on, though. Now just adding write-through (-Suw instead of -Su):

```
diskspd.exe -t8 -b4k -r4k -o1 -w30 -Suw -D -L -d1800 -Rxml Z:\load.bin
```

[Figure: Write-through IO exposes the true latency of NAND, normally masked by the FTL/buffer.]

Wow!

First: it’s great that the device honors write-through requests. In the consumer space, this gives an application a useful tool for making data durable when it must be durable. This is a good device!

Second, oh my does the performance drop off. This is no longer an "SSD": especially as it goes over the write cliff – which is still there – it’s merely a fast HDD, at about 220 IOPS. Writing NAND is slow! This is the FTL forced to push all the way into the NAND flash dies, immediately, without being able to buffer, de-conflict the read and write IO streams and manage all the other background activity it needs to do.

Third, those immediate writes take what is already a device with modest endurance and deliver a truly crushing blow to its total lifetime.

Crucially, this is how Storage Spaces Direct would see this SSD. Not much of a "cache" anymore.
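Each of the 8 DISKSPD threads keeps exactly one IO outstanding. Little's law (outstanding IOs = IOPS × mean latency) therefore gives a rough estimate of the average latency behind the write-through figure of about 220 IOPS. This back-of-the-envelope sketch assumes a steady state with the queue always full. The `-L` latency data DISKSPD collects remains the authoritative measurement.

```python
# Little's law: outstanding IOs = IOPS x mean latency, so
# mean latency = outstanding IOs / IOPS (steady state, queue always full).
def mean_latency_ms(outstanding_ios: int, iops: float) -> float:
    return outstanding_ios / iops * 1000.0


print(f"{mean_latency_ms(8, 220):.1f} ms")       # 36.4 ms: write-through run, 8 threads x 1 IO
print(f"{mean_latency_ms(32, 95_000):.3f} ms")   # 0.337 ms: data-sheet QD32 4K read figure
```

Under these assumptions, the write-through run averages tens of milliseconds per IO. The data sheet's headline number implies about a third of a millisecond.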

So, why does a non-volatile buffer help?

It lets the SSD claim that a write is stable once it is in the buffer. A write-through operation, a flush, or a request to disable the cache can be honored without forcing all data directly into the NAND. We’ll get the good behavior, the stated endurance, and the data stability that reliable software-defined storage requires under a complex workload.

In short, your device will behave much as we saw in the first chart: a nice, flat, fast performance profile. A good cache device. If it’s NVMe it may be even more impressive, but that’s a thought for another time.
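The difference between a volatile buffer and a non-volatile one can be sketched as a toy model. `ToySSD` and its behavior are hypothetical simplifications. Each drain counts as one batched NAND program, and power loss is an explicit method call.

```python
# Toy model contrasting a volatile write buffer with a power-loss-protected
# (PLP) one. Hypothetical and simplified.
from dataclasses import dataclass, field


@dataclass
class ToySSD:
    power_loss_protected: bool
    buffer: list[int] = field(default_factory=list)  # acknowledged, not yet in NAND
    nand: list[int] = field(default_factory=list)    # durable contents
    nand_programs: int = 0

    def write(self, block: int) -> None:
        self.buffer.append(block)  # the host sees the write complete here

    def flush(self) -> None:
        """Make every acknowledged write stable before returning."""
        if not self.power_loss_protected:
            self._drain()  # volatile buffer: data must go into NAND right now
        # With PLP, the buffer itself already counts as stable storage.

    def power_loss(self) -> None:
        if self.power_loss_protected:
            self._drain()  # stored energy (e.g. capacitors) saves the buffer
        else:
            self.buffer.clear()  # volatile contents are simply gone

    def _drain(self) -> None:
        if self.buffer:
            self.nand.extend(self.buffer)
            self.buffer.clear()
            self.nand_programs += 1  # one batched program per drain (toy simplification)


def write_through(ssd: ToySSD, blocks: int) -> ToySSD:
    """Issue each write followed by a flush, as write-through IO demands."""
    for block in range(blocks):
        ssd.write(block)
        ssd.flush()
    return ssd


volatile = write_through(ToySSD(power_loss_protected=False), blocks=8)
protected = write_through(ToySSD(power_loss_protected=True), blocks=8)
protected.power_loss()

unflushed = ToySSD(power_loss_protected=False)
for block in range(8):
    unflushed.write(block)
unflushed.power_loss()

print(volatile.nand_programs, protected.nand_programs, len(unflushed.nand))  # 8 1 0
```

Under write-through, the volatile device programs NAND on every single write. The PLP device keeps acknowledging from its buffer and still loses nothing when power fails. The unflushed volatile device shows the alternative: acknowledged writes simply vanish.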

Finally, how do you identify a device with a non-volatile buffer cache?

Datasheet, datasheet, datasheet. Look for language like:

- "Power loss protection" or "PLP"

  - Samsung SM863, and related

  - Toshiba HK4E series, and related

- "Enhanced power loss data protection"

  - Intel S3510, S3610, S3710, P3700 series, and related

… along with many others across the industry. You should be able to find a device from your favored provider. These will be more expensive than consumer grade devices, but hopefully we’ve convinced you why they are worth it.
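Checking datasheets is ultimately a reading exercise, but a simple keyword scan can help when screening many candidates. This sketch looks only for the phrases listed above. The phrase list and the function name are illustrative assumptions, and vendors may use different wording.

```python
# Sketch: flag datasheet text that uses the power-loss-protection wording
# listed above. A hit is only a prompt to read the datasheet closely; a miss
# does not prove the feature is absent.
import re

PLP_PHRASES = (
    "power loss protection",
    "plp",
    "enhanced power loss data protection",
)


def mentions_power_loss_protection(datasheet_text: str) -> bool:
    # Normalize case and hyphens so "Power-Loss Protection" also matches.
    text = datasheet_text.lower().replace("-", " ")
    return any(re.search(rf"\b{re.escape(phrase)}\b", text) for phrase in PLP_PHRASES)
```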

Be safe out there!

/ Dan Lovinger

Updated Apr 10, 2019
