Streamline the process to bring your own detections in Microsoft Purview Insider Risk Management

Organizations often encounter significant challenges when attempting to gain a unified view of insider risks in their multicloud environments. Typically, this entails cross-checking multiple systems and manually correlating information to gain a comprehensive understanding of a specific user's activities that could potentially lead to data security incidents.

As we announced in the previous blog post, Microsoft Purview Insider Risk Management allows you to bring your own detections and create custom indicators. Admins with the appropriate permissions can incorporate detections from homegrown analytics or SIEM/UEBA platforms like Microsoft Sentinel, as well as directly from non-Microsoft systems such as Salesforce and Dropbox. These detections can then be used in Insider Risk Management policies to detect scenarios such as data theft and data leaks. By weaving a user's risky activities across different environments into a unified timeline view, security teams can obtain a comprehensive understanding of potential security incidents across various applications.

In this blog post, we show how to automate bringing your own risk detections into Microsoft Purview Insider Risk Management, which correlates various signals to identify potential malicious or inadvertent insider risks, such as IP theft, data leakage, and security violations. Insider Risk Management enables customers to create policies to manage security and compliance. Built with privacy by design, it pseudonymizes users by default, and role-based access controls and audit logs are in place to help ensure user-level privacy.

Automate the process of bringing in detections with Microsoft Sentinel

Here’s an example of automating the process to bring detections into Microsoft Purview Insider Risk Management through Microsoft Sentinel and Azure Logic Apps:

The Contoso organization has discovered instances in which GitHub privileged administrators or repository owners exposed confidential source code files to the public, leading to leakage of intellectual property. The Contoso security team aims to investigate these incidents and develop strategies to identify potential risky activities on GitHub before they escalate into full-blown data security incidents.

To achieve the above objectives, the team can utilize Analytics in Microsoft Sentinel to create rules that define risky activities that may lead to a data security incident by GitHub users in their organization. They can then leverage the bring-your-own-detections capability and Azure Logic Apps to automatically bring the detected risky activities into Microsoft Purview Insider Risk Management, a purpose-built solution that is designed for managing and mitigating insider risks. This approach enables Contoso to consolidate risky user activity signals across various workloads, including GitHub, Microsoft 365, endpoints, and other cloud services and apps, and conduct a holistic assessment of users' risk levels.

Here are the four steps that the Contoso security team can follow:

- Author Analytics rules in Microsoft Sentinel to detect risky user activities that may potentially lead to data security incidents in GitHub

- Stream risk detections from Microsoft Sentinel to Microsoft Purview Insider Risk Management through the Insider risk indicators connector

- Create custom indicators in Microsoft Purview Insider Risk Management and use them in a Data leak policy

- Conduct in-depth investigations of risky user activities that have the potential to result in data security incidents across environments

In the following sections, we will provide detailed explanations of each step, accompanied by screenshots as illustrative examples.

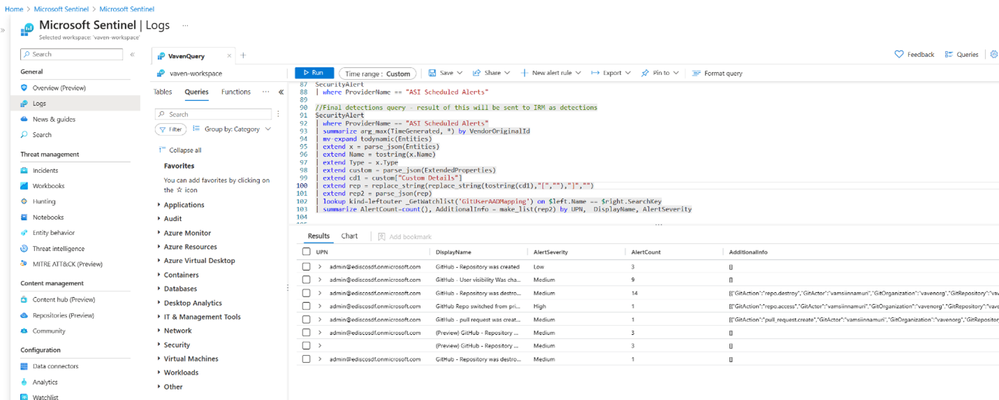

Step 1: Author Analytics rules in Microsoft Sentinel to detect risky user activities that may potentially lead to data security incidents in GitHub

Before incorporating detections into Microsoft Purview Insider Risk Management, you need to process activity logs to identify the risky events that should be included. Step 1 guides you through connecting the log data to Microsoft Sentinel and curating it into the risky activities you want to bring into Microsoft Purview Insider Risk Management.

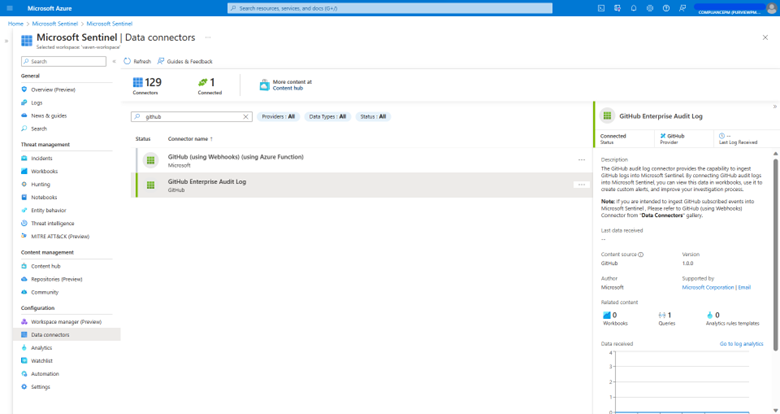

To begin, an admin can create a Microsoft Sentinel workspace and establish a connection with their enterprise GitHub account using the GitHub Enterprise Audit Log connector. Microsoft Sentinel provides data connectors for over 240 SaaS/PaaS workloads, enabling administrators to perform this process for any application relevant to their organization, in addition to GitHub.

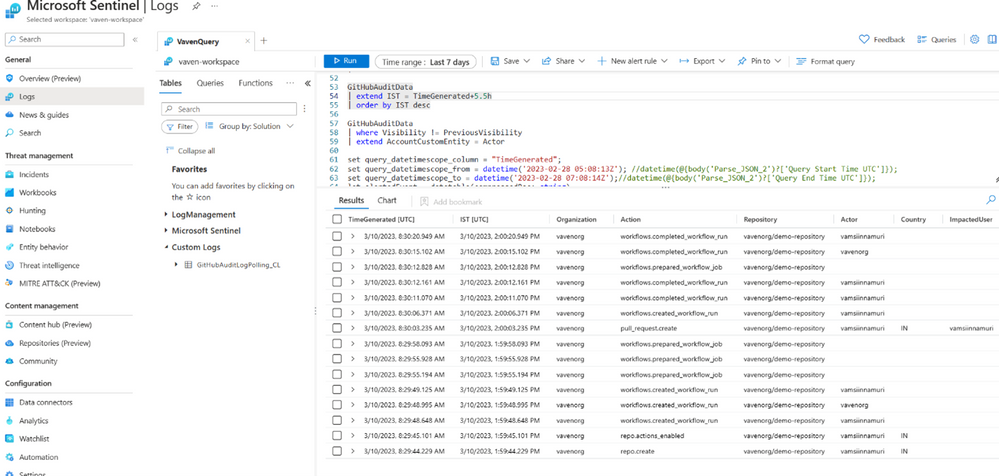

Once connected, users' GitHub activities, including creating or deleting a repo, making a repo private, and adding external users, are captured in the GitHubAuditData table within the Sentinel Logs. Security teams can leverage these logs to enhance visibility into their organization's GitHub repositories, formulate queries, and detect potential security incidents.

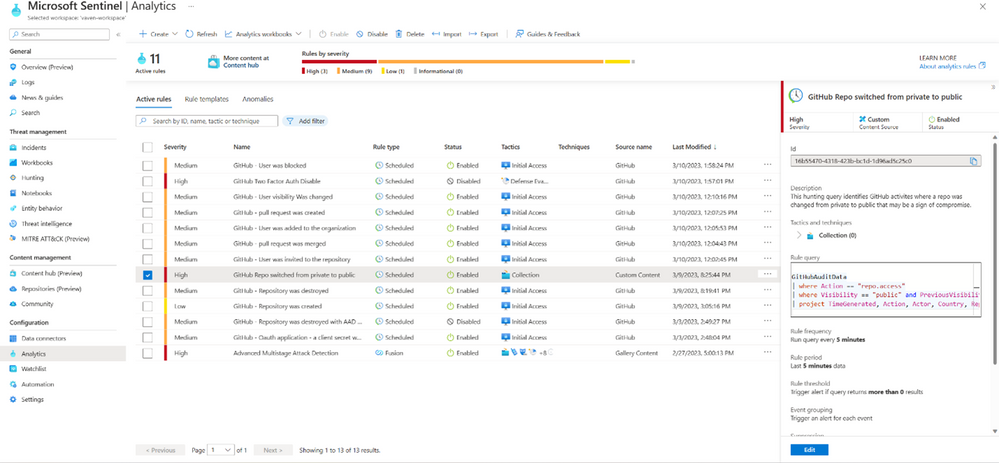

After establishing the GitHub connection in Microsoft Sentinel, admins can proceed to create custom Analytics rules that aid in identifying risks and detecting anomalous activities. These Analytics rules search for specific events or event patterns across your environment. Once the defined event thresholds or conditions are met, Microsoft Sentinel triggers alerts and generates incidents that security teams can then triage and investigate.

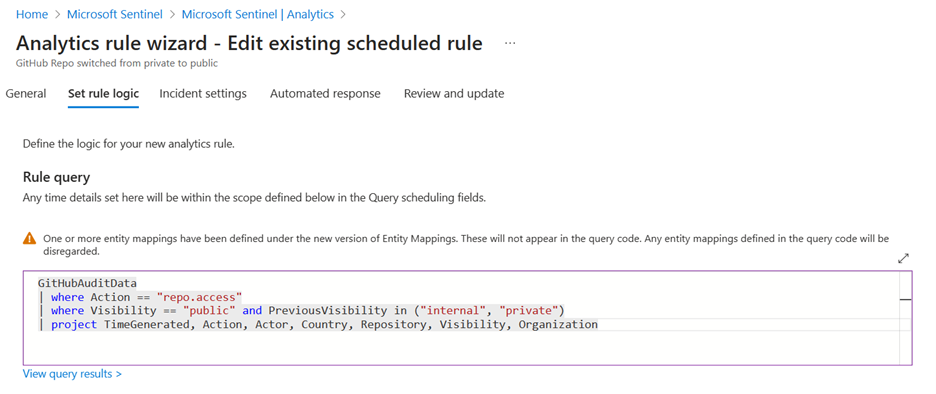

For instance, in this scenario, admins can develop Analytics rules that target risky source code activities, such as switching a GitHub repository from private to public or adding external users to a source code project.

Once the Analytics rule is created, admins can see alerts in Microsoft Sentinel Incidents when users perform activities that match the Analytics rules.
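To make the two example rule conditions concrete, here is a minimal Python sketch of the matching logic only. It is not how Sentinel Analytics rules are authored (those are written as queries over the GitHubAuditData table). The action names `repo.access` and `repo.add_member` follow GitHub audit log naming, while the `visibility` and `is_external` fields and all sample values are assumptions made for illustration.

```python
from typing import Iterable


# Action names follow GitHub audit log conventions; the "visibility" and
# "is_external" fields are assumptions made for this illustration.
def is_risky(event: dict) -> bool:
    """Return True when an audit event matches one of the two example rules."""
    action = event.get("action")
    if action == "repo.access":
        # Rule 1: repository visibility switched to public.
        return event.get("visibility") == "public"
    if action == "repo.add_member":
        # Rule 2: a user outside the organization was added to a repository.
        return bool(event.get("is_external"))
    return False


def detect_risky_events(events: Iterable[dict]) -> list[dict]:
    """Keep only the events that one of the rules would raise an alert for."""
    return [e for e in events if is_risky(e)]


# Hypothetical audit events for the Contoso scenario.
sample_events = [
    {"actor": "alice", "action": "repo.access", "repo": "contoso/payments", "visibility": "public"},
    {"actor": "bob", "action": "repo.create", "repo": "contoso/docs"},
    {"actor": "carol", "action": "repo.add_member", "repo": "contoso/payments", "is_external": True},
    {"actor": "dave", "action": "repo.access", "repo": "contoso/site", "visibility": "private"},
]

for hit in detect_risky_events(sample_events):
    print(hit["actor"], hit["action"], hit["repo"])
```

In this sketch, only the events from alice and carol match; routine activity such as creating a repo or making one private is ignored.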

Step 2: Stream risk detections from Microsoft Sentinel to Microsoft Purview Insider Risk Management through the Insider risk indicators connector

Security teams can use Microsoft Sentinel for their general security operations. However, when it comes to managing insider risks, organizations need to use Microsoft Purview Insider Risk Management. In Step 2, we will show you how to automate the workflows that continuously bring the detected risky activities into Insider Risk Management.

Admins with appropriate permissions can create an Insider risk indicators connector within the Data Connectors page of the Microsoft Purview compliance portal. First, they upload a sample file containing the Sentinel detections, which helps define the data type and mapping of the detected activities they wish to bring in.

To automate the import of detections, an admin can create an Azure Logic App that queries Sentinel Logs periodically and streams the detections into Insider Risk Management automatically. This approach saves time by eliminating the need for manual imports and streamlines the process to bring in risk detections. For guidance on creating an Azure Logic App using the provided JSON template, please refer to the article "How to import an existing Logic App template."

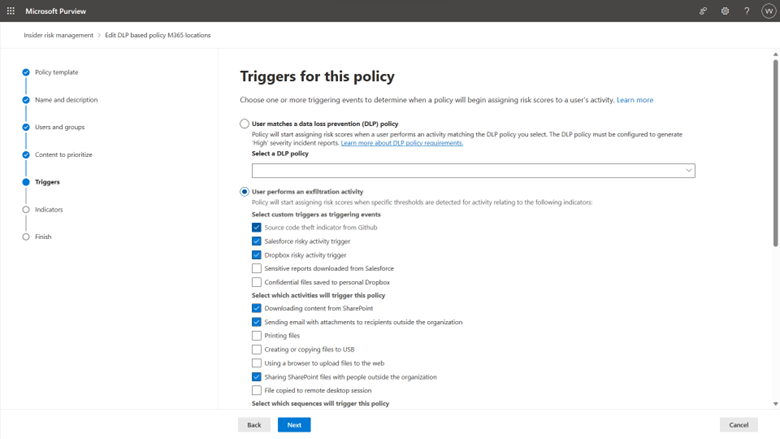
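The periodic "query, then stream" pattern that the Logic App automates can be sketched in Python as follows. This is an illustration of the idea under stated assumptions, not the Logic App template itself: the column names, the `new_since` and `to_csv` helpers, and the sample detections are all hypothetical, and the real columns come from the mapping defined with the sample file when the connector is created.

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical column names; the real ones come from the sample file mapping
# defined when the Insider risk indicators connector is created.
COLUMNS = ["UserPrincipalName", "ActivityTime", "ActivityType", "Repository"]


def new_since(detections: list[dict], last_run: datetime) -> list[dict]:
    """Keep detections that occurred after the previous run, to avoid re-sending."""
    return [d for d in detections if d["ActivityTime"] > last_run]


def to_csv(detections: list[dict]) -> str:
    """Serialize detections to CSV text with ISO 8601 timestamps."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    for detection in detections:
        row = dict(detection)
        row["ActivityTime"] = detection["ActivityTime"].isoformat()
        writer.writerow(row)
    return buffer.getvalue()


# Hypothetical detections, standing in for the result of a scheduled log query.
detections = [
    {
        "UserPrincipalName": "alice@contoso.com",
        "ActivityTime": datetime(2023, 6, 1, 9, 0, tzinfo=timezone.utc),
        "ActivityType": "RepoMadePublic",
        "Repository": "contoso/payments",
    },
    {
        "UserPrincipalName": "carol@contoso.com",
        "ActivityTime": datetime(2023, 6, 1, 11, 30, tzinfo=timezone.utc),
        "ActivityType": "ExternalUserAdded",
        "Repository": "contoso/payments",
    },
]

last_run = datetime(2023, 6, 1, 10, 0, tzinfo=timezone.utc)
print(to_csv(new_since(detections, last_run)))
```

Tracking the time of the previous run is one simple way to keep a recurring job from importing the same detection twice; a production workflow may use a different mechanism.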

Step 3: Create custom indicators in Microsoft Purview Insider Risk Management and use them in a Data leak policy

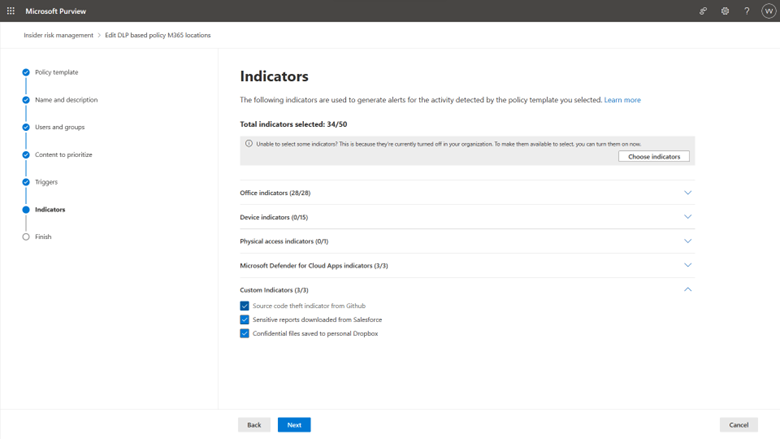

Once the detections have been imported into Microsoft Purview Insider Risk Management, you can begin incorporating them into your insider risk policies, which can then generate alerts derived from risk insights across environments. To achieve this, admins need to define indicators for the imported detections.

Admins with appropriate permissions can navigate to the Insider risk settings and create custom indicators. By selecting the relevant element and value from the detections imported through the connector established in Step 2, administrators can define these custom indicators and how to use them.

After the custom indicator is created, it can be used within Insider Risk Management policies, such as data leaks and data theft by departing users. The policies will then incorporate custom indicators when generating alerts and calculating risk scores.

Step 4: Conduct in-depth investigations of risky user activities that have the potential to result in data security incidents across environments

When alerts are generated based on the user activities that may lead to data security incidents, the custom indicators are integrated into the user activity timeline. This capability allows insider risk investigators to access all the insights and underlying activity in a single location, providing a comprehensive understanding of the impact and scope of a potential data security incident. By weaving together the custom indicators and other native user activity signals, the investigator gains a holistic view of a potential incident and its possible ramifications.
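Conceptually, the unified timeline is a per-user merge of activity streams ordered by time. The Python sketch below illustrates that idea only; the `Activity` type, the `build_timeline` helper, and every sample activity are hypothetical and do not reflect how Insider Risk Management stores or renders the timeline.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Activity:
    time: datetime
    user: str
    source: str
    description: str


def build_timeline(user: str, *streams: list[Activity]) -> list[Activity]:
    """Merge all streams, keep one user's activities, and order them by time."""
    merged = [a for stream in streams for a in stream if a.user == user]
    return sorted(merged, key=lambda a: a.time)


# Hypothetical native signals and custom-indicator detections.
native = [
    Activity(datetime(2023, 6, 1, 14, 0), "alice", "Microsoft 365", "Files downloaded"),
    Activity(datetime(2023, 6, 1, 8, 0), "alice", "Endpoint", "Files copied to USB"),
]
custom = [
    Activity(datetime(2023, 6, 1, 11, 0), "alice", "GitHub (custom indicator)", "Repository made public"),
    Activity(datetime(2023, 6, 1, 9, 0), "bob", "GitHub (custom indicator)", "External user added"),
]

for activity in build_timeline("alice", native, custom):
    print(activity.time.isoformat(), activity.source, activity.description)
```

Seen this way, the GitHub detection lands between the endpoint and Microsoft 365 activities for the same user, which is the cross-environment context an investigator needs.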

Explore more Insider Risk Management resources

This new feature is currently in public preview, and we eagerly await your feedback. To help you learn more about Microsoft Purview Insider Risk Management, here are some additional resources for your reference:

- Learn more about Insider Risk Management in our technical documentation.

- Insider Risk Management is part of the Microsoft Purview suite of solutions designed to help organizations manage, govern and protect their data. If you are an organization using Microsoft 365 E3 and would like to experience Insider Risk and other Purview solutions for yourself, check out our E5 Purview trial.

- If you own Insider Risk Management and want to learn more about it, use it to understand your environment, build policies for your organization, or investigate potentially risky user actions, check out the resources available on our “Become an Insider Risk Management Ninja” resource page.
