- Security for AI: How to Secure and govern AI usage

Today, at the Microsoft Secure online event, Herain and I discussed best practices for securing and governing AI usage. In this blog post, we provide detailed guidance on implementing the controls demonstrated during the session.

Generative Artificial Intelligence (GenAI) is changing the game in the workplace. It sparks productivity and innovation across all kinds of industries and regions. According to a recent research study, 93% of businesses are either implementing or developing an AI strategy[1]. At the same time, about the same percentage of risk leaders say they're either underprepared or only somewhat prepared to tackle the risks that come with it.

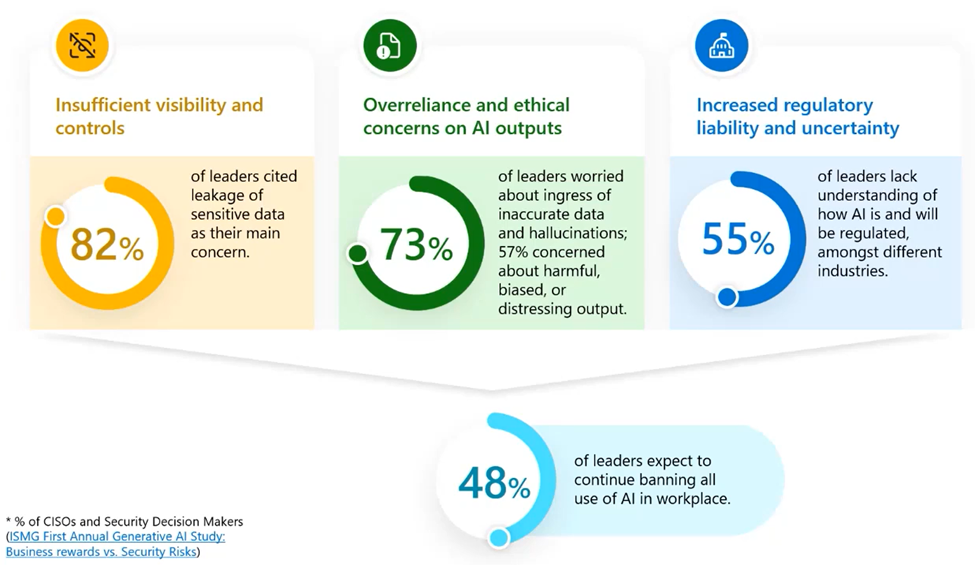

Generative AI creates an interesting conflict of both excitement and anxiety. It creates tension between the business benefits of innovation on one hand and the perceived security and governance risks that come with it on the other. To navigate these two conflicting emotions in organizations, it's crucial to first understand the specific risks that concern security and compliance leaders:

- There's a real need for organizations to improve the visibility and control over their most critical asset - their data.

- More than 70% worry about overreliance on AI due to inaccurate data and almost 60% worry about harmful, biased, or distressing outputs.

- 55% said they lack knowledge about the impact of AI regulations, such as the upcoming EU AI Act, and about how to respond to them.

These are just some of the highlighted challenges from the research.

With all these concerns, almost half of the security leaders surveyed expect to continue restricting all use of AI in the workplace. That is not the best way to address the perceived security risks. Understanding the risks associated with AI usage can help organizations alleviate their GenAI-nxiety, because insight into these risks empowers organizations to confront and manage them directly. Without a proactive approach to gain clarity, security teams might feel as though they are navigating a foggy forest, uncertain of where the next threat may emerge. Understanding AI-related risks means being able to answer questions such as:

- Which AI applications are being utilized by users?

- Who is using these AI applications, and how is access to these apps being governed?

- What type of data is being input into the AI applications, and what data is generated by them?

- Is sensitive data being adequately protected and governed?

Microsoft Security offers comprehensive security solutions designed to address and respond to these concerns.

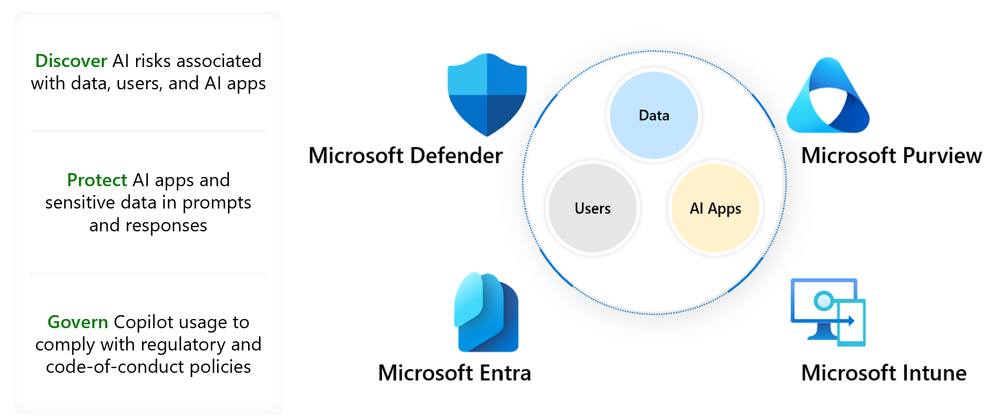

Microsoft Defender, Entra, Purview, and Intune work together to help you secure data and user interactions in AI applications. Microsoft Security products enable security teams to discover risks associated with AI usage, such as potential sensitive data leaks and users accessing high-risk applications.

Once these risks are detected, security teams can use intelligent capabilities to protect the AI applications in use and the sensitive data in them, including AI prompts and responses. Furthermore, security teams can govern usage by retaining and logging user interactions, identifying potentially non-compliant activities within AI applications, and investigating those incidents. Microsoft Security enables security teams to discover, protect, and govern AI usage and adopt AI applications confidently within their organizations, for both Copilot and third-party apps.

Discover, protect, and govern the use of Copilot for Microsoft 365

Copilot for Microsoft 365 includes built-in controls for security, compliance, privacy, and responsible AI. For example, it uses the built-in Azure OpenAI content safety filters to help detect and block potential jailbreak or prompt-injection attacks. In addition, Copilot for Microsoft 365 is built and guided by our Responsible AI Standard, which covers fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. Together, these greatly reduce the probability of biased, harmful, or inaccurate content being produced. All these built-in controls collectively make Copilot for Microsoft 365 enterprise-ready. See more details on Data, Privacy and Security for Copilot for Microsoft 365 here.

In addition to the built-in controls, Microsoft Security offers integrated solutions to help you further improve your security and governance when using Copilot.

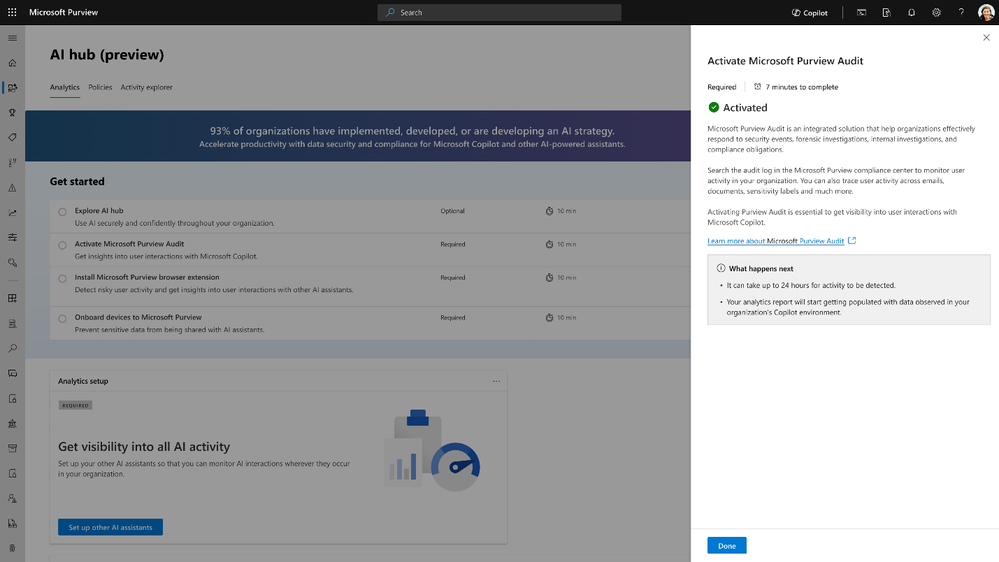

Gain visibility into AI usage and discover associated risks

It is important to discover and gain visibility into the potential risks in GenAI apps, including how users are using GenAI applications and what sensitive data is involved in AI interactions. Microsoft Purview provides insights into user interactions in Copilot.

Getting started:

Step 1 – Activate - Activate Microsoft Purview Audit, which provides insights into user interactions in Copilot.

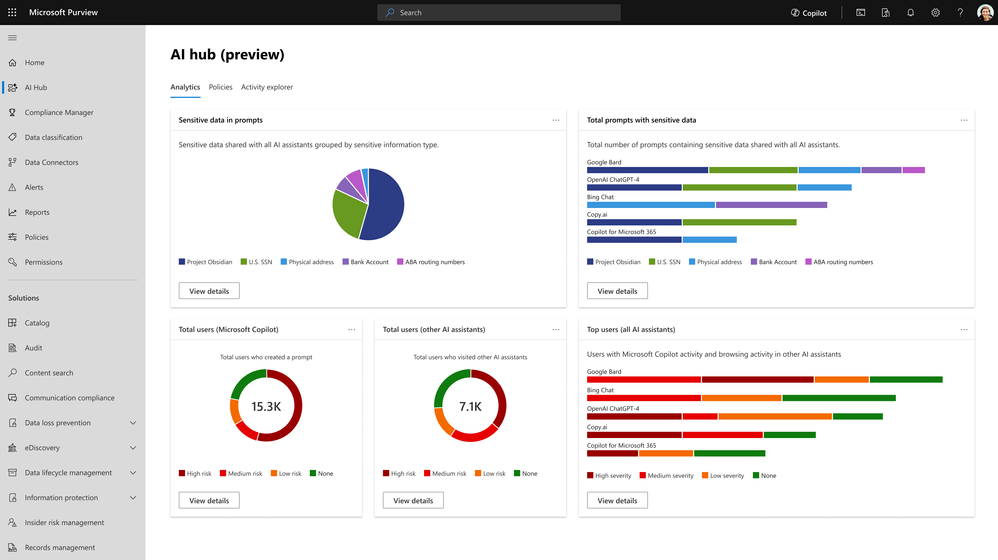

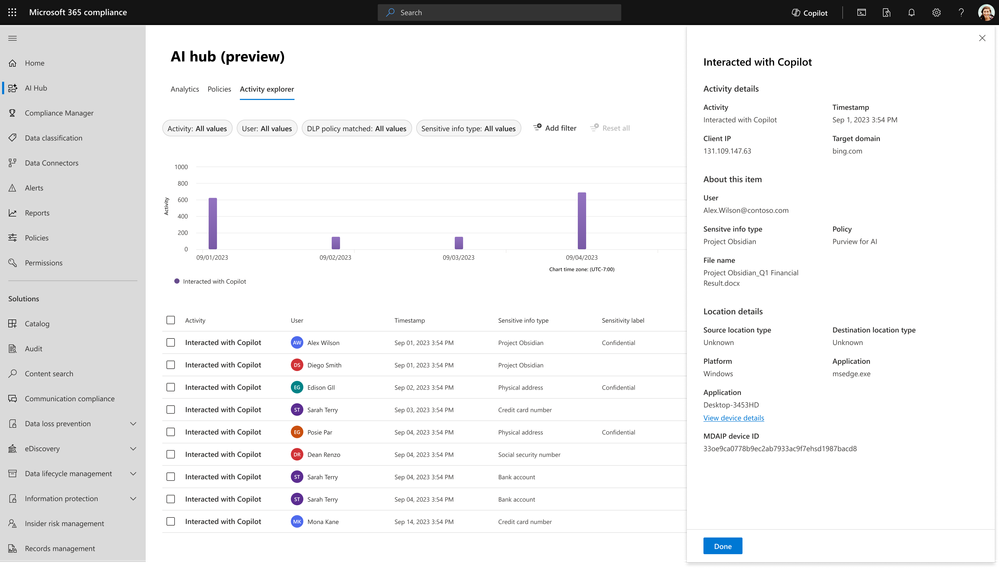

Step 2 – Discover - The AI Hub dashboard showcases aggregated insights on AI activity, such as total prompts and sensitive information in Copilot interactions. By quickly discovering how AI is being used in your organization, you can discern patterns, identify potential risks and make informed decisions on the best course of protection for your organization.

Step 3 – Drill Down - Drill down further to see what activity may involve sensitive data and how it happened. Activity explorer helps us gain more detailed insights like the time of the activity, sensitive information involved, and the specific AI application used. These insights can help you tailor your data security controls more effectively.
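As a rough illustration of the drill-down step, the sketch below filters a list of exported activity records for Copilot interactions that involved sensitive information and groups them by user. The record layout (`RecordType`, `UserId`, `CreationTime`, `SensitiveInfoTypes`), the `CopilotInteraction` value, and all sample data are assumptions made for this example; they are not presented as the actual Purview export schema.

```python
from collections import defaultdict

# Hypothetical exported activity records. Field names and values are
# illustrative assumptions, not the real Purview audit schema.
SAMPLE_RECORDS = [
    {"RecordType": "CopilotInteraction", "UserId": "ana@contoso.com",
     "CreationTime": "2024-03-13T09:15:00Z",
     "SensitiveInfoTypes": ["Credit Card Number"]},
    {"RecordType": "CopilotInteraction", "UserId": "ben@contoso.com",
     "CreationTime": "2024-03-13T09:20:00Z",
     "SensitiveInfoTypes": []},
    {"RecordType": "SharePointFileOperation", "UserId": "ana@contoso.com",
     "CreationTime": "2024-03-13T09:25:00Z",
     "SensitiveInfoTypes": []},
    {"RecordType": "CopilotInteraction", "UserId": "ana@contoso.com",
     "CreationTime": "2024-03-13T10:05:00Z",
     "SensitiveInfoTypes": ["U.S. Social Security Number"]},
]


def sensitive_copilot_activity(records):
    """Group Copilot interactions that involved sensitive info by user."""
    by_user = defaultdict(list)
    for record in records:
        # Skip anything that is not a Copilot interaction.
        if record.get("RecordType") != "CopilotInteraction":
            continue
        # Skip interactions with no sensitive information detected.
        if not record.get("SensitiveInfoTypes"):
            continue
        by_user[record["UserId"]].append(
            (record["CreationTime"], record["SensitiveInfoTypes"])
        )
    return dict(by_user)


if __name__ == "__main__":
    for user, events in sensitive_copilot_activity(SAMPLE_RECORDS).items():
        print(user, len(events), "sensitive interaction(s)")
```

A grouping like this is one way to decide which users or data types deserve tighter controls first.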

AI hub is built with a privacy-first approach, and role-based access controls are in place. AI hub is in private preview, and you can join the Microsoft Purview Customer Connection Program to get access. Sign up here; an active NDA is required. Licensing and packaging details will be announced at a later date.

Protect Sensitive Data

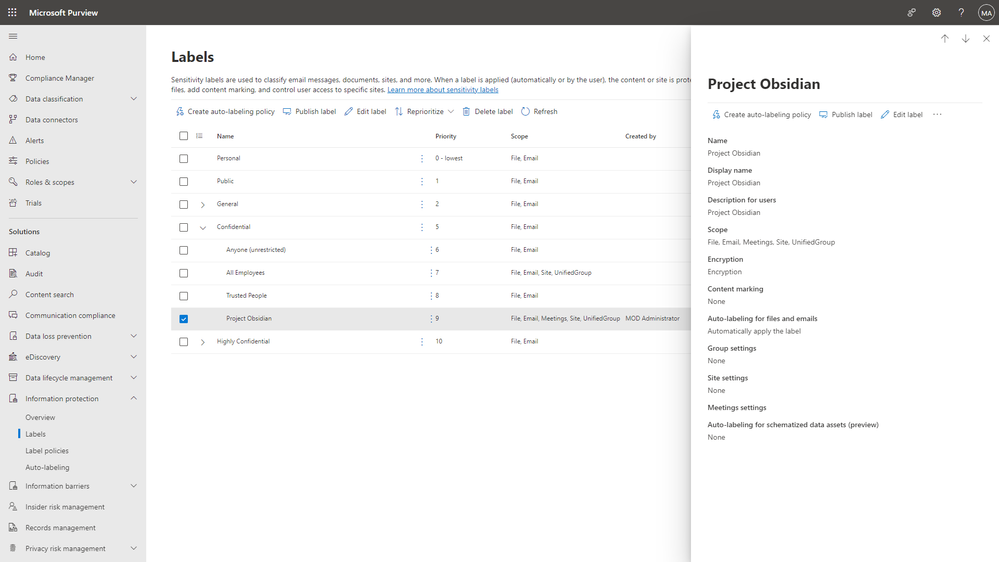

To prevent sensitive data from being overshared or accessed by unauthorized users, you can configure an auto-labeling policy, which will then scan your M365 locations and automatically label and protect all the files containing sensitive information.

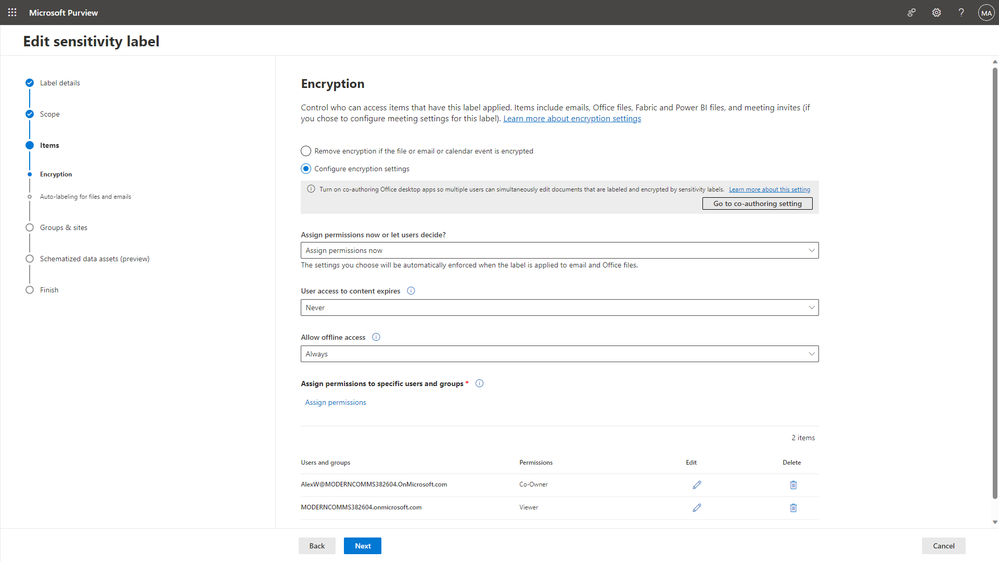

To protect labeled items, you can also configure encryption controls and watermarking.

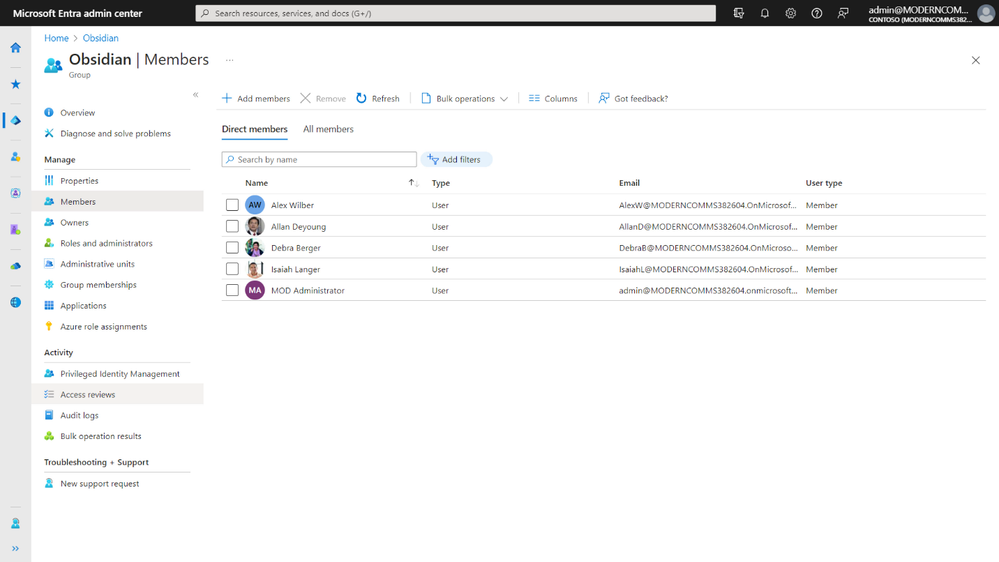

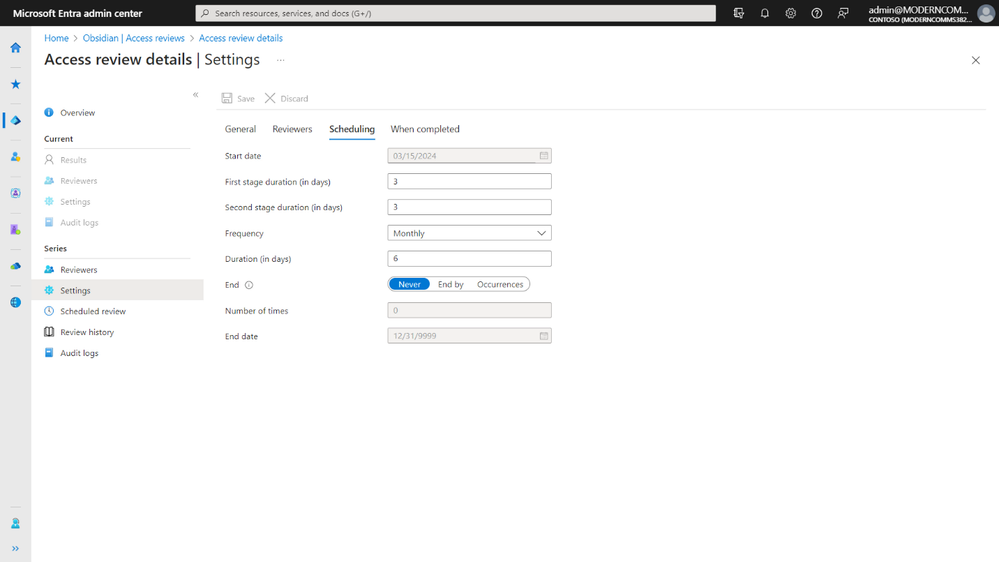

The encryption setting is associated with a Microsoft Entra ID group, so it’s important to ensure that the group membership is managed and reviewed regularly to prevent unauthorized access. You can learn more about how to set up access reviews in this documentation.

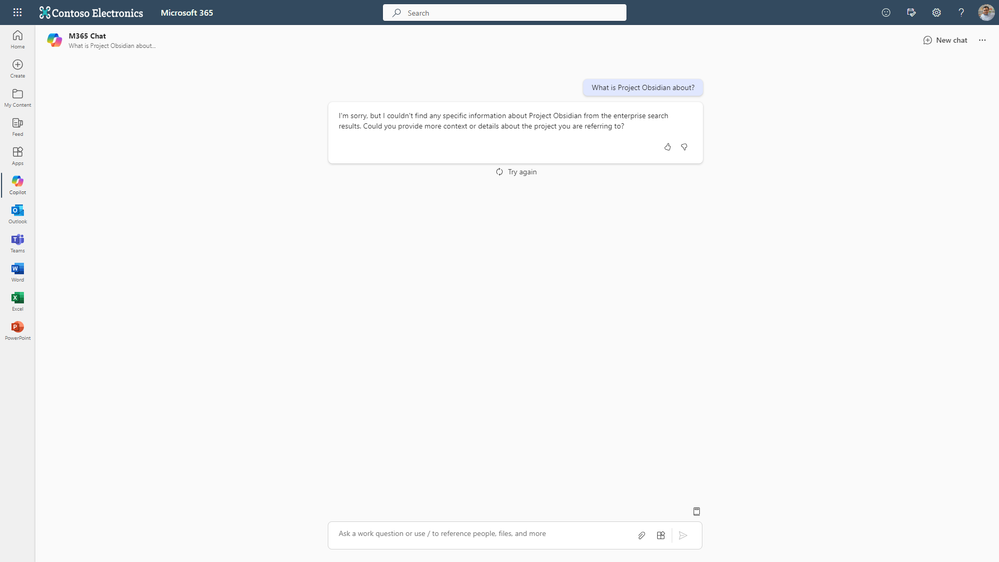

This allows only approved users to access content that's relevant to them. When a user who does not have access tries to reach this content, Copilot honors the permissions and responds that it could not find any data related to the topic.

Learn more on how to automatically apply sensitivity labels to your data in this documentation.
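To make the idea of auto-labeling concrete, here is a minimal sketch of content-based label suggestion: it looks for candidate credit card numbers, validates them with the Luhn checksum, and suggests a label. This is an illustration of the general technique only, not how Microsoft Purview classifies content; the label names `Confidential` and `General`, the regular expression, and the function names are all hypothetical.

```python
import re

# Candidate card numbers: 13-16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    checksum = 0
    for index, digit in enumerate(reversed(digits)):
        # Double every second digit, counting from the right.
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        checksum += digit
    return checksum % 10 == 0


def suggest_label(text: str) -> str:
    """Suggest a (hypothetical) sensitivity label for a document's text."""
    for match in CARD_PATTERN.finditer(text):
        if luhn_valid(match.group()):
            return "Confidential"
    return "General"


if __name__ == "__main__":
    print(suggest_label("Card on file: 4111 1111 1111 1111"))
    print(suggest_label("Lunch menu for Friday"))
```

Validating the checksum, rather than matching digits alone, reduces false positives from order numbers and similar strings.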

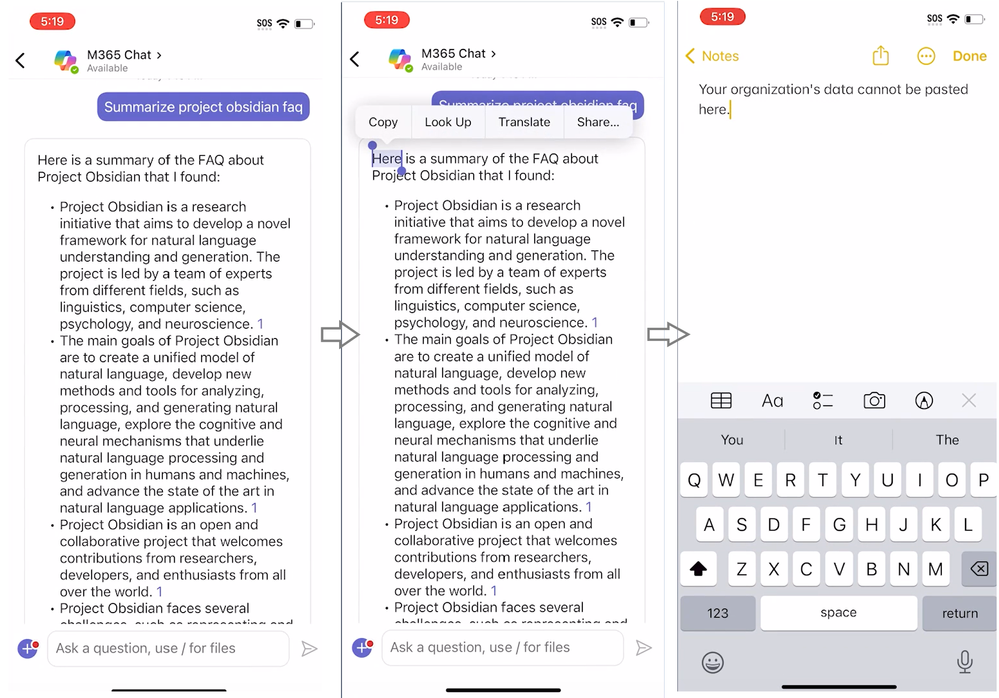

Protect your data across devices

Microsoft Intune manages devices across platforms, protecting data and devices regardless of where employees are working. In our digital-first world, every device, whether personal or company-managed, can potentially serve as a gateway for data breaches. You can protect data across managed and unmanaged devices by applying preventative controls with Microsoft Intune’s app protection policies. This ensures the corporate data is protected and cannot be shared outside of Microsoft 365 apps.

Microsoft Intune enables you to block users from sharing corporate data outside of designated applications. These controls allow employees to stay productive on their devices while preventing data loss, whether on a mobile device or a personal Windows desktop. Intune also monitors device compliance, restricting corporate access when a device is not fully updated or has another issue.

To learn more about how to design and configure app protection policies, check out this Intune documentation.

Threat Detection

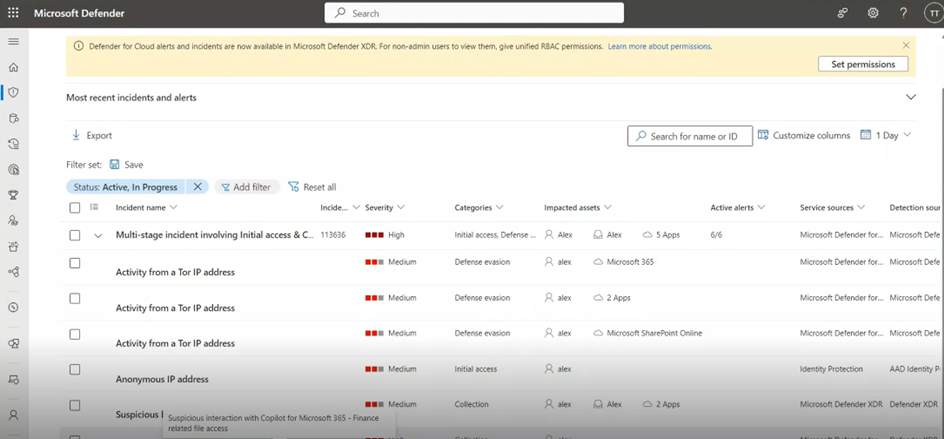

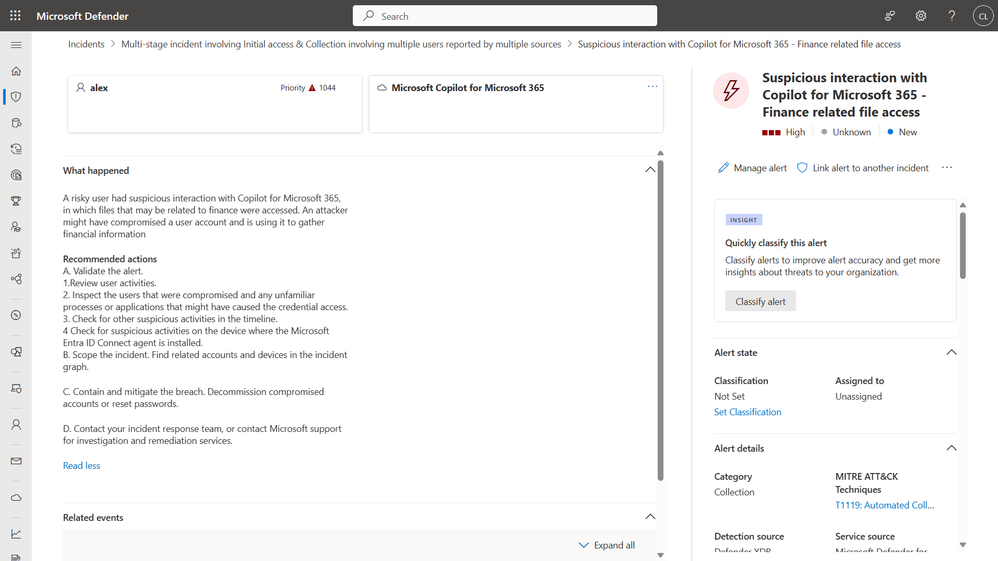

Defender for Cloud Apps will show you all the activities related to Copilot for Microsoft 365 and will detect any risky usage by adversaries.

Drill down – The alert contains details on the risky usage, including the MITRE ATT&CK technique, IP address, and more.

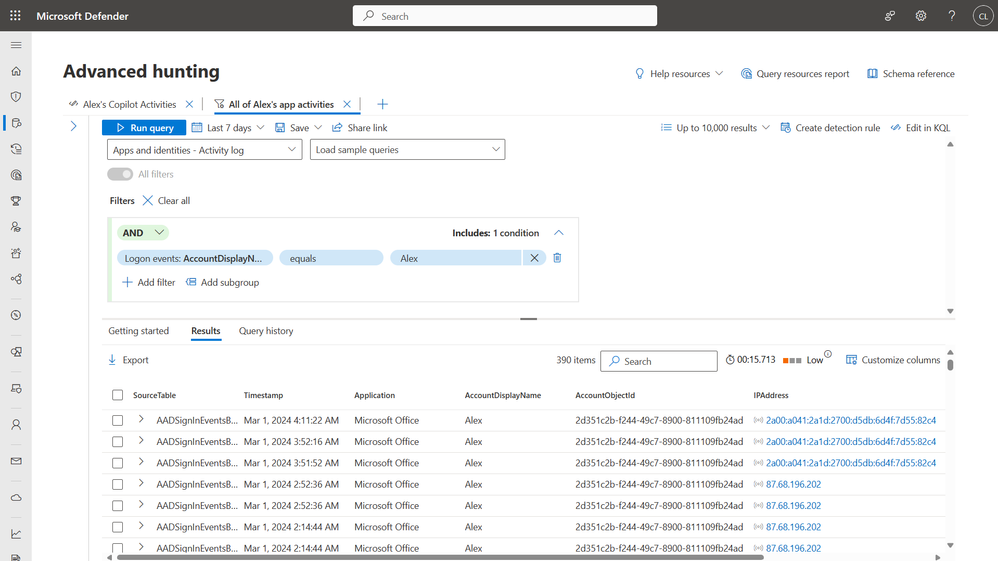

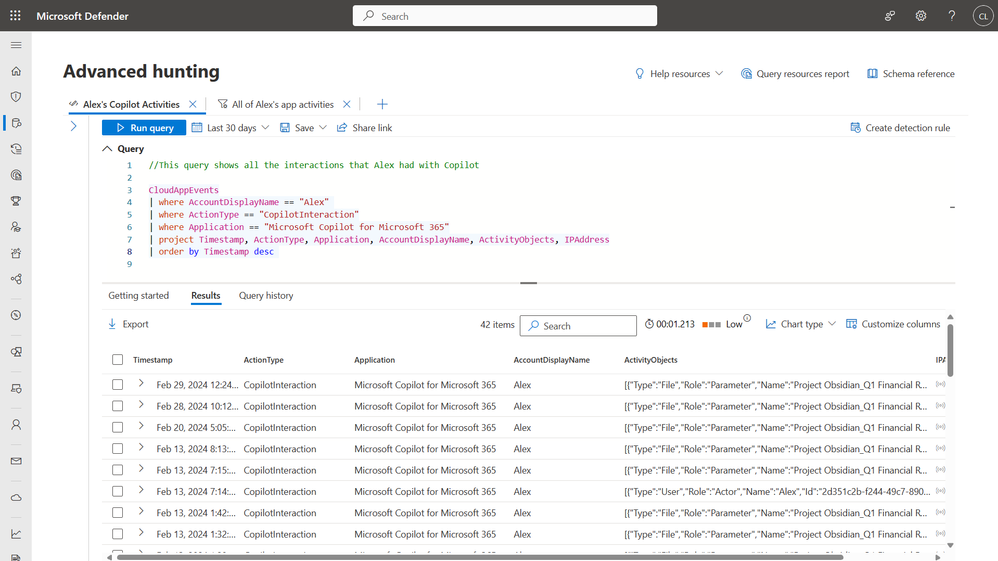

Understand – Defender for Cloud Apps is part of Microsoft Defender XDR, which lets you understand the scope of an attack by investigating and remediating threats in a unified experience with a full view of the entire kill chain. The hunting capability in Defender XDR enables you to dive deeper into the user's Copilot activities.

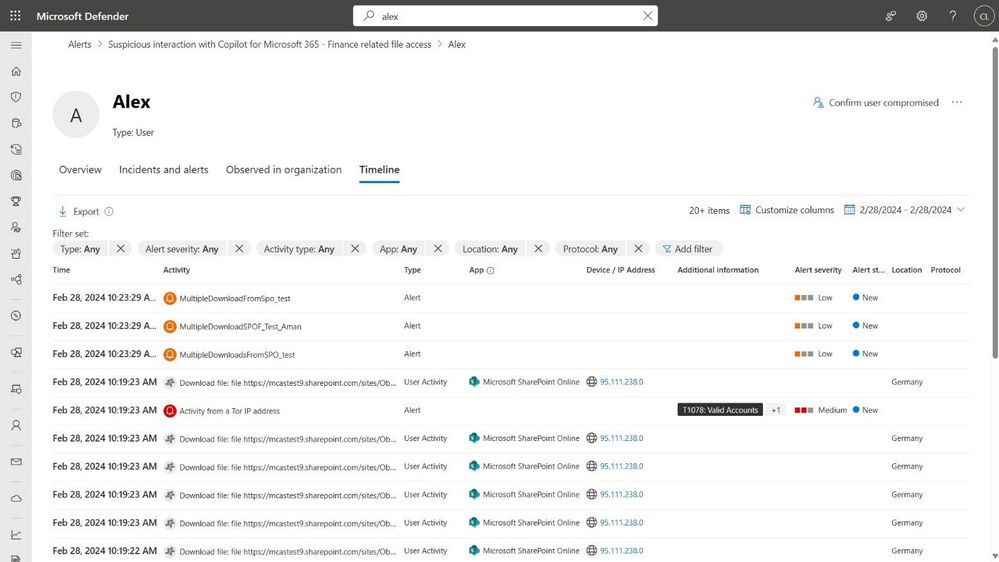

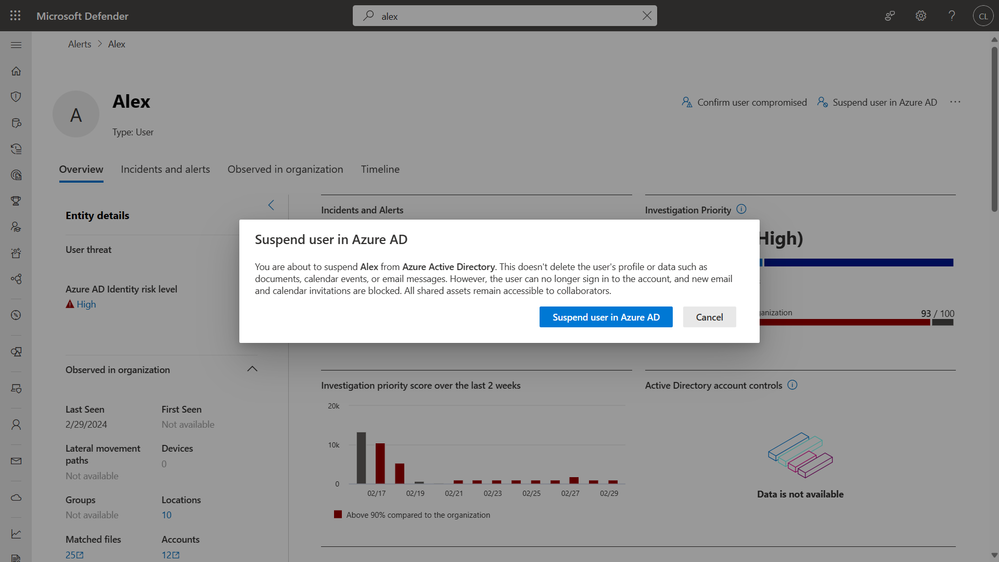

To get a broader view of the user's activities, you can navigate to the user page. There you can see a timeline of the activities that triggered the alerts, along with more details on the user.

You can also take action and suspend the risky user.

Connect Microsoft 365 app - Protect your Microsoft 365 environment - Microsoft Defender for Cloud Apps | Microsoft Learn

Detect and respond to incidents – Incident response with Microsoft Defender XDR | Microsoft Learn

Governance and Compliance

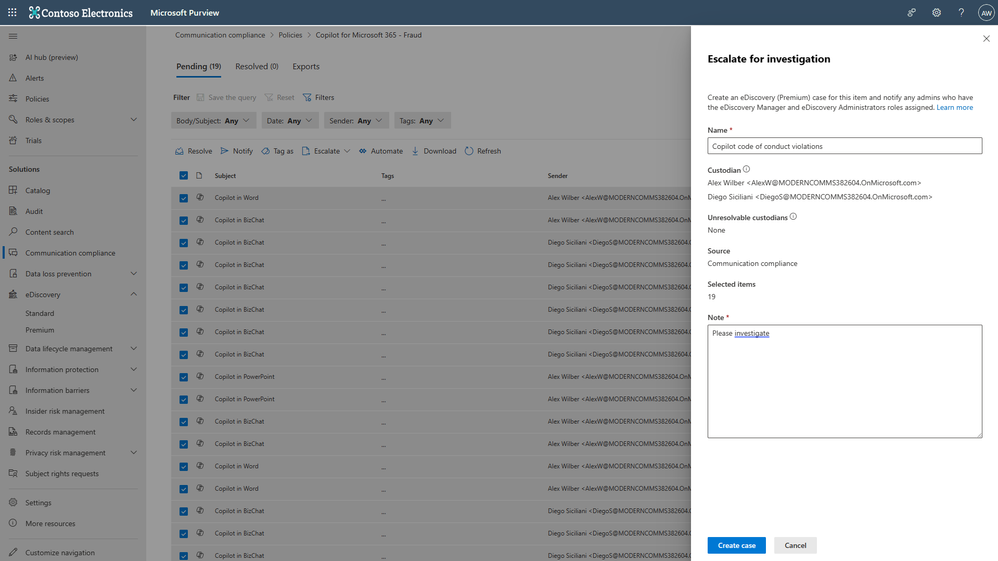

Compliance and risk managers are concerned about the non-compliant use of AI. This can lead to unintentional or even malicious violations of organizational or regulatory policy, or to the creation of high-risk content. Microsoft Purview uses machine-learning-powered classifiers that help detect non-compliant use of Copilot in real time and raise alerts.

Microsoft Purview provides ready-to-use classifiers that go beyond mere keyword detection by understanding the context of the content. They cover gift-giving, unauthorized disclosure, regulatory collusion, money laundering, stock manipulation, and more. You can also escalate these alerts for legal investigation by creating an eDiscovery case. All Copilot interactions can become part of the review set in eDiscovery, along with other relevant files and evidence about a user's potential violation of your code-of-conduct policies. This lets you put Copilot interactions on legal hold and search, review, analyze, and export them to help with investigations.

Learn more about Microsoft Purview data security and compliance protections for Microsoft Copilot in this documentation. Microsoft Security provides an extensive set of controls to enable you to adopt and use Copilot for Microsoft 365 in both a secure and compliant way.

Discover, protect, and govern the use of third-party generative AI apps

Microsoft Security can help discover, protect, and govern third-party AI applications as well.

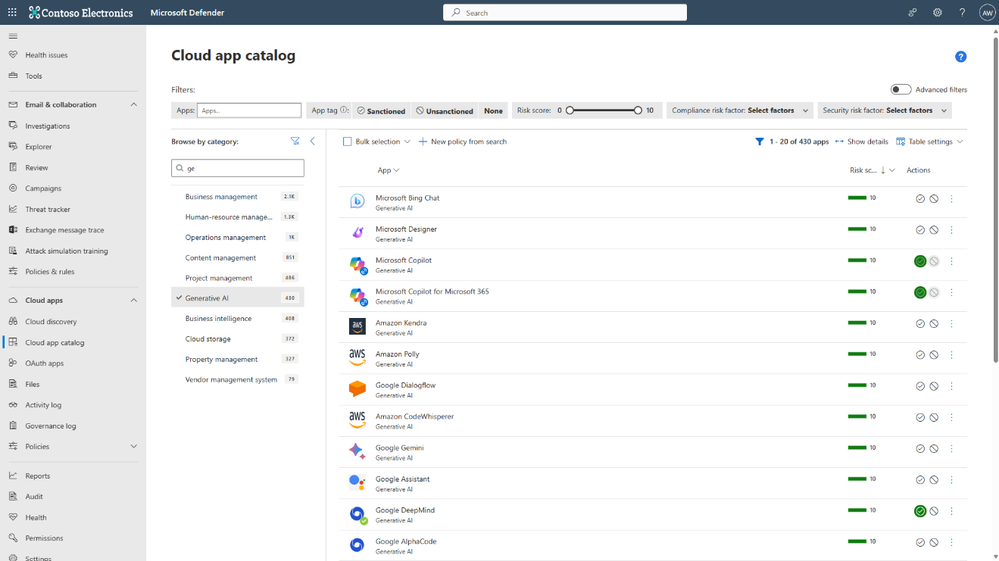

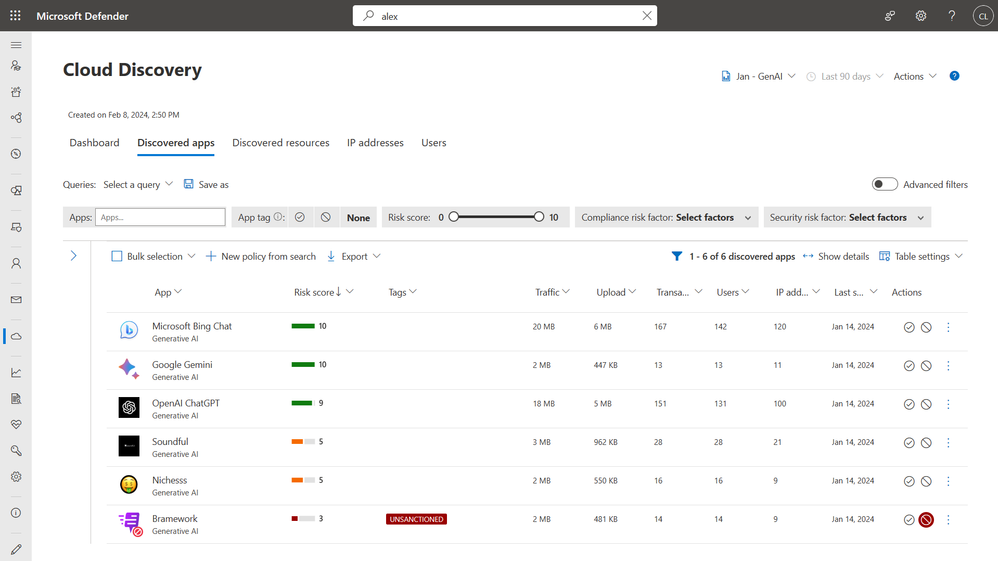

One of the most common concerns with third-party AI apps is the potential for data leakage. For example, an employee could publicly disclose information from a confidential project, Project Obsidian, through a consumer AI app. Microsoft Defender for Cloud Apps can discover over 400 GenAI apps, and the list of apps is updated continuously as new ones become popular. This means that you can see all the GenAI apps in use in your organization, know what risks they might bring, and set up controls accordingly.

With the Defender for Cloud Apps integration with Defender for Endpoint, security teams can easily identify potential high-risk apps in use and tag them as unsanctioned to prevent users from accessing these apps until they’ve had time to complete any due diligence processes.

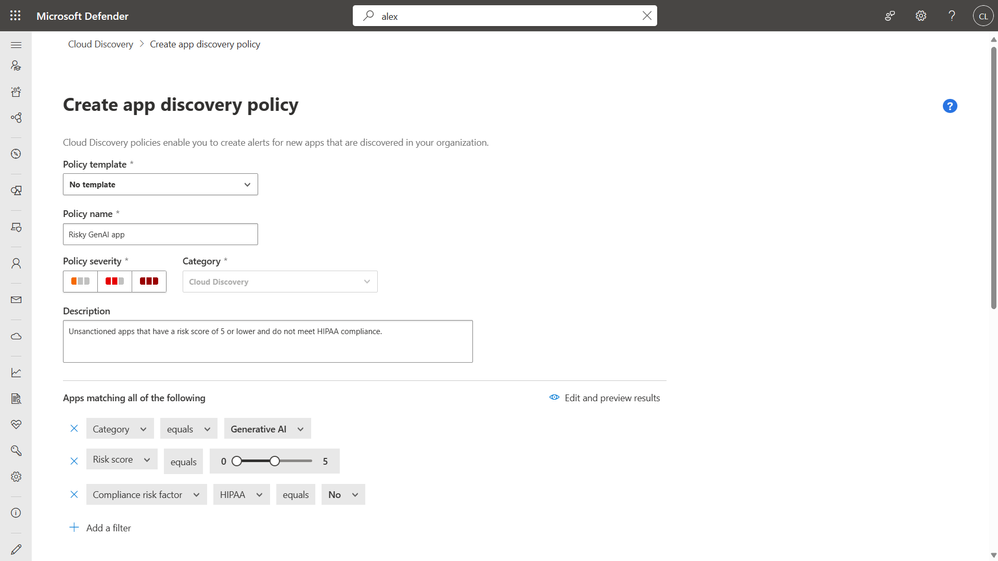

Security teams can also automate the tagging by creating policies tailored to the organization. For example, if you’re a healthcare company and you already know that you don’t want users accessing apps that have a risk score of 5 or lower and don’t meet HIPAA requirements, you can automatically tag them as unsanctioned.

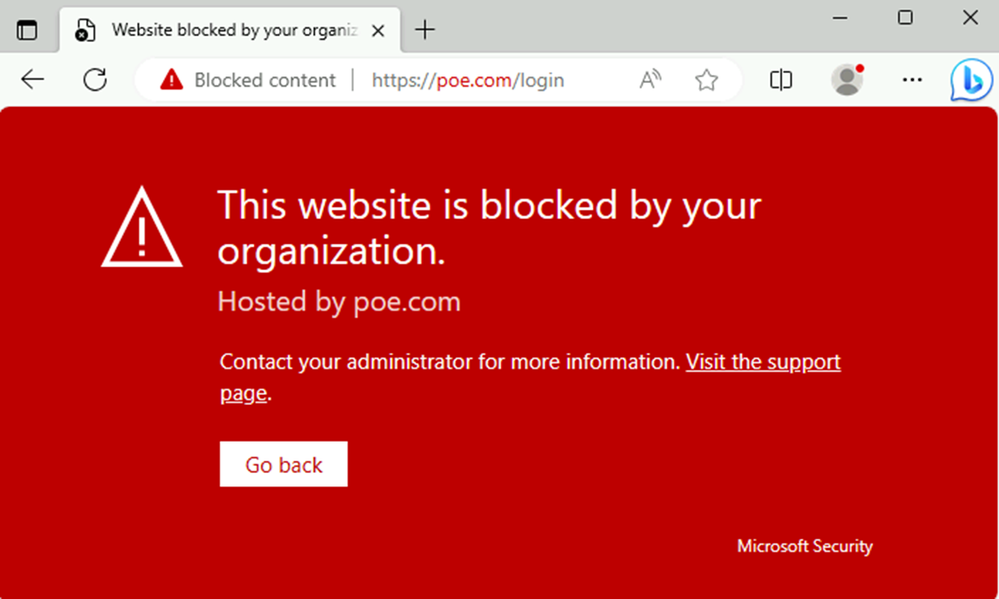
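The healthcare example above can be sketched as a simple rule over a list of discovered apps: anything with a risk score of 5 or lower that also does not meet HIPAA requirements gets tagged as unsanctioned. This is only a model of the policy logic, not the Defender for Cloud Apps API; the `DiscoveredApp` type, its fields, and the app names are hypothetical, and the score is assumed to run from 0 (riskiest) to 10 (least risky).

```python
from dataclasses import dataclass


@dataclass
class DiscoveredApp:
    """Hypothetical record for a discovered GenAI app."""
    name: str
    risk_score: int        # assumed scale: 0 (riskiest) to 10 (least risky)
    hipaa_compliant: bool
    tag: str = "untagged"


def apply_unsanctioned_policy(apps, max_risk_score=5):
    """Tag apps that are both low-scoring and not HIPAA compliant."""
    for app in apps:
        if app.risk_score <= max_risk_score and not app.hipaa_compliant:
            app.tag = "unsanctioned"
    return [app.name for app in apps if app.tag == "unsanctioned"]


if __name__ == "__main__":
    catalog = [
        DiscoveredApp("ExampleChat", risk_score=4, hipaa_compliant=False),
        DiscoveredApp("NotesAI", risk_score=5, hipaa_compliant=True),
        DiscoveredApp("MedScribe", risk_score=8, hipaa_compliant=False),
    ]
    print(apply_unsanctioned_policy(catalog))
```

Only `ExampleChat` meets both conditions here; an app that fails just one of them is left for manual review.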

If a user tries to access a risky, unsanctioned app, they will see a block screen.

Learn more and get started with Microsoft Defender for Cloud Apps: Get started - Microsoft Defender for Cloud Apps | Microsoft Learn.

Getting started – Protect your Microsoft 365 environment - Microsoft Defender for Cloud Apps | Microsoft Learn

Integrate Defender for Cloud Apps with Defender for Endpoint - Govern discovered apps using Microsoft Defender for Endpoint - Microsoft Defender for Cloud Apps | M...

Protect Sensitive Data

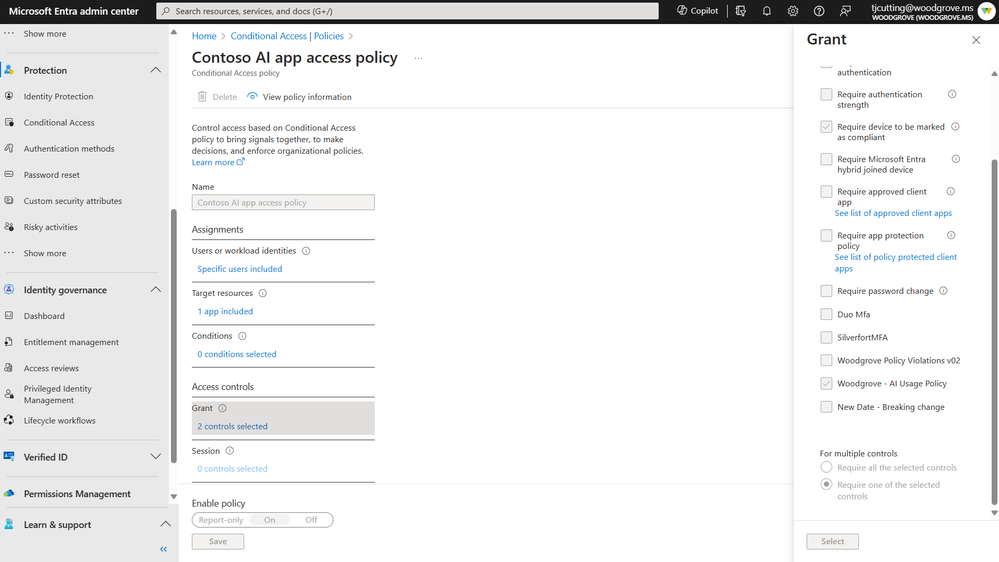

To protect sensitive data, you can start with identity and access management for apps. The goal is to protect corporate resources and let device users who comply with corporate policies access services and other company resources with single sign-on. These policies are a simple and effective way to make sure that only devices that have permission can access the organization’s data and services. Microsoft Entra Conditional Access enables identity admins to create a policy specific to a GenAI app, like ChatGPT, or a set of GenAI apps, where a condition is set that only users on compliant devices can access and only after they have accepted the Terms of Use.
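For teams that manage policies as code, the policy described above might look roughly like the following request body. This is a sketch under assumptions: the property names follow my understanding of the Microsoft Graph `conditionalAccessPolicy` resource and should be checked against the current documentation, and the display name, the placeholder app ID, and the placeholder Terms of Use ID are hypothetical. The code only builds and prints the body; it does not call any service.

```python
import json


def build_genai_access_policy(app_ids, terms_of_use_id, report_only=True):
    """Build a Conditional Access policy body as a plain dict (nothing is sent)."""
    return {
        "displayName": "GenAI apps: compliant device and Terms of Use",
        # Report-only mode lets you observe the impact before enforcing.
        "state": "enabledForReportingButNotEnforced" if report_only else "enabled",
        "conditions": {
            "clientAppTypes": ["all"],
            "users": {"includeUsers": ["All"]},
            "applications": {"includeApplications": list(app_ids)},
        },
        "grantControls": {
            # Require both controls: a compliant device AND accepted terms.
            "operator": "AND",
            "builtInControls": ["compliantDevice"],
            "termsOfUse": [terms_of_use_id],
        },
    }


if __name__ == "__main__":
    policy = build_genai_access_policy(
        app_ids=["<genai-app-id>"],             # placeholder, not a real ID
        terms_of_use_id="<terms-of-use-id>",    # placeholder, not a real ID
    )
    print(json.dumps(policy, indent=2))
```

Starting in report-only mode is a cautious default, since it shows who would be affected before anyone is blocked.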

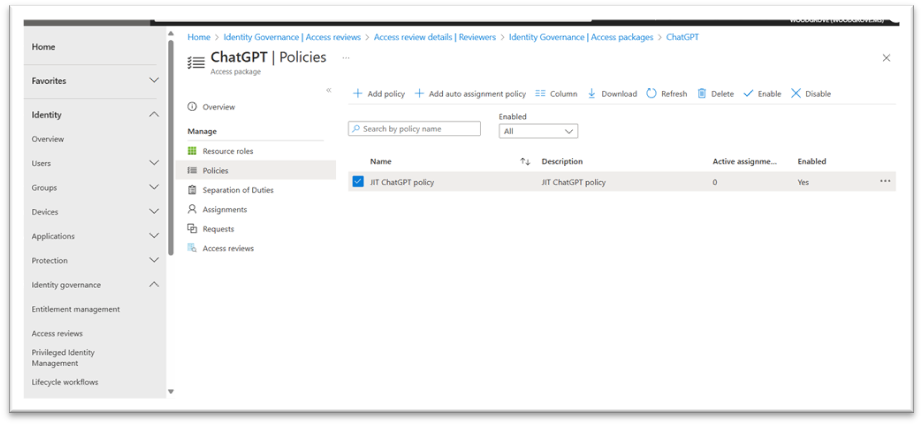

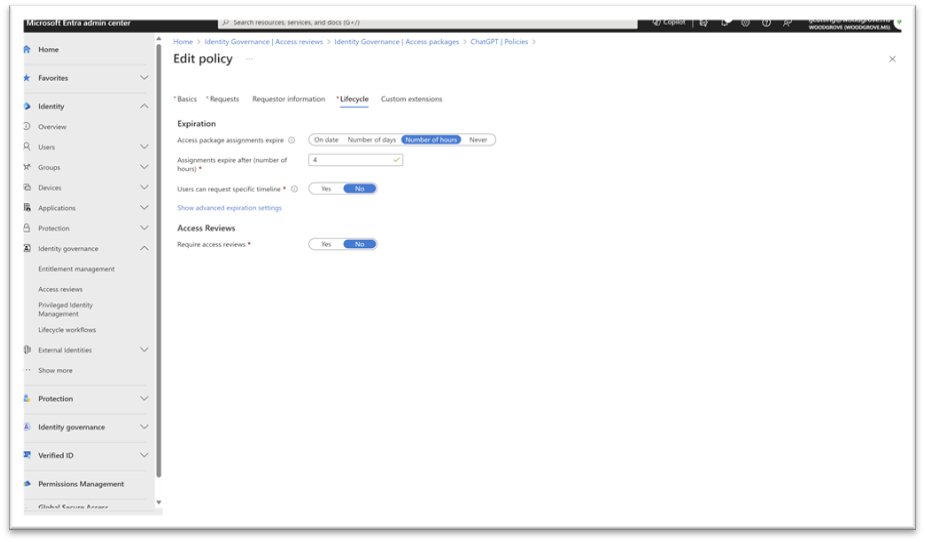

Microsoft Entra ID Governance enables you to also enforce manager or admin approval before granting access. Once granted, you can limit the amount of time the user has access to a GenAI app. This helps to reduce the risk of established privileges that attackers or malicious insiders can easily exploit.

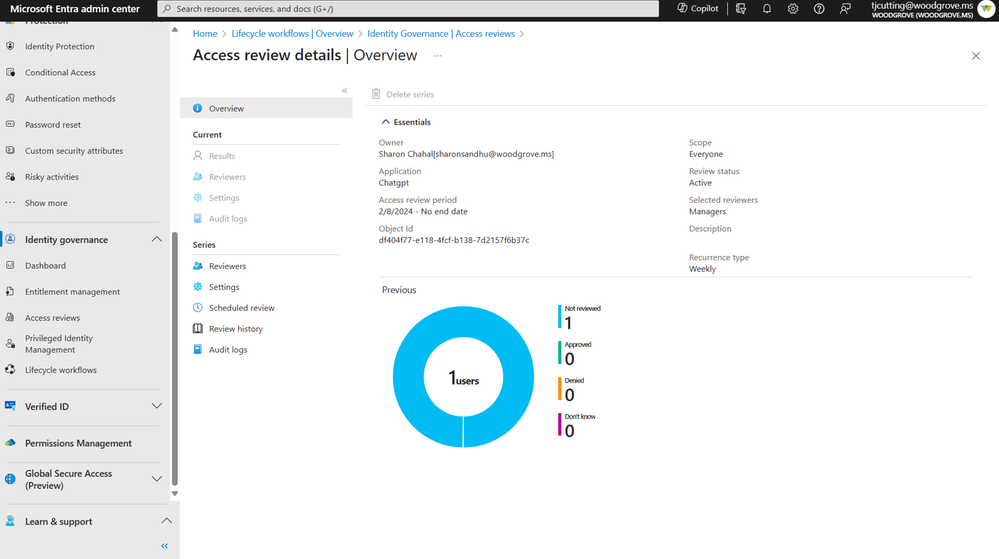

You can also run periodic access reviews to check that only the people who need access have it.

Learn more about Entra ID Governance in this documentation.
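The combination of approval, time-limited access, and periodic review can be modeled in a few lines. The sketch below is a conceptual illustration only, not the Entra ID Governance API; the `AccessAssignment` type, its fields, and the sample users are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AccessAssignment:
    """Hypothetical record of an approved, time-limited grant to a GenAI app."""
    user: str
    app: str
    approved_by: str
    granted_on: date
    duration_days: int

    @property
    def expires_on(self) -> date:
        return self.granted_on + timedelta(days=self.duration_days)


def expired_assignments(assignments, today):
    """Return the assignments whose access window has ended as of `today`."""
    return [a for a in assignments if a.expires_on <= today]


if __name__ == "__main__":
    grants = [
        AccessAssignment("ana@contoso.com", "ChatGPT", "manager@contoso.com",
                         date(2024, 1, 1), duration_days=30),
        AccessAssignment("ben@contoso.com", "ChatGPT", "manager@contoso.com",
                         date(2024, 2, 1), duration_days=90),
    ]
    for grant in expired_assignments(grants, today=date(2024, 2, 15)):
        print(f"Remove {grant.user} from {grant.app} (expired {grant.expires_on})")
```

Recording who approved each grant alongside its expiry gives reviewers the context they need when deciding whether to renew access.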

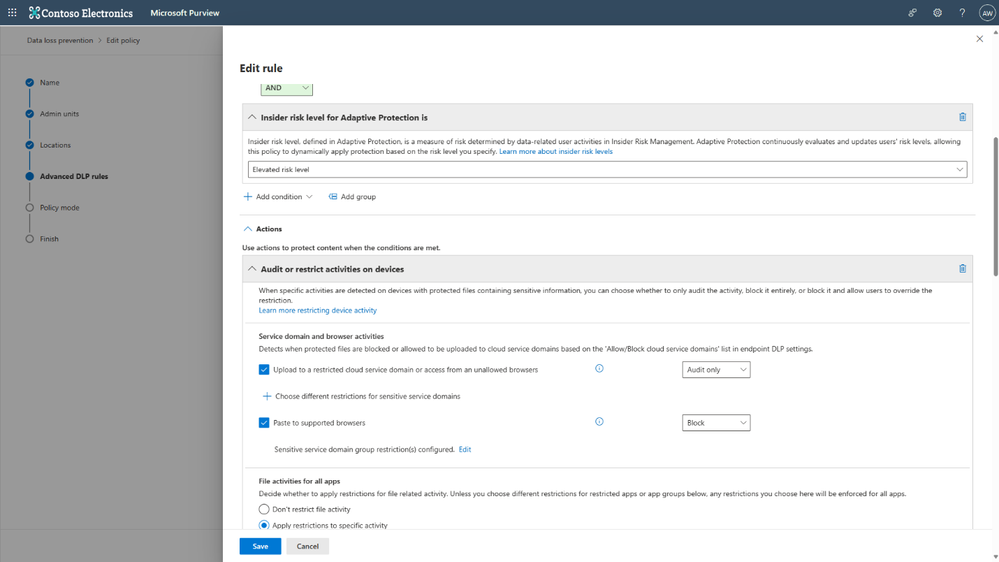

Data security or Data loss prevention (DLP) admins can create endpoint DLP policies to prevent users from pasting sensitive data into AI applications. You can also add a condition to enforce a policy based on the user’s insider risk level, making the DLP policy risk adaptive, by only restricting elevated-risk users. You can learn more about how to configure endpoint DLP in this documentation.
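The risk-adaptive behavior described above comes down to a decision that depends on both the content and the user's risk level. The sketch below models that decision; it is not the endpoint DLP engine. The keyword list, the risk level names (`minor`, `moderate`, `elevated`), and the choice to audit rather than allow sensitive pastes by lower-risk users are assumptions made for illustration.

```python
# Hypothetical sensitive terms; a real policy would use sensitive information
# types or classifiers rather than a keyword list.
SENSITIVE_KEYWORDS = ("project obsidian",)

# Assumed insider risk level names for this sketch.
RISK_LEVELS = ("minor", "moderate", "elevated")


def paste_action(text: str, user_risk_level: str) -> str:
    """Decide what to do when a user pastes `text` into an AI app."""
    if user_risk_level not in RISK_LEVELS:
        raise ValueError(f"unknown risk level: {user_risk_level!r}")
    contains_sensitive = any(k in text.lower() for k in SENSITIVE_KEYWORDS)
    if not contains_sensitive:
        return "allow"
    # Restrict only elevated-risk users; log the event for everyone else.
    return "block" if user_risk_level == "elevated" else "audit"


if __name__ == "__main__":
    print(paste_action("Summarize the Project Obsidian roadmap", "elevated"))
    print(paste_action("Summarize the Project Obsidian roadmap", "minor"))
    print(paste_action("Write a haiku about spring", "elevated"))
```

Tying the restriction to risk level keeps most users productive while concentrating the strictest control where the risk is highest.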

Microsoft Security provides controls to block high-risk apps, restrict access to sanctioned apps, and prevent sensitive data or high-risk content from being shared with third-party AI applications.

These multiple layers of controls at the app, access, and content level help security teams reduce the overall risk to the business. That is what an end-to-end security for AI solution looks like. In addition, you can see all the usage across Copilot and third-party AI applications in our AI hub, including Google Bard, ChatGPT, and more. It helps you audit your controls to see if sensitive data is only used in secure AI applications.

Microsoft Security provides capabilities to discover, protect, and govern the use of AI applications, including Copilot and third-party apps. Together, they help your organization adopt AI in a responsible and secure way.

If you missed the live session, watch it now to learn about Securing and Governing AI to enable responsible AI adoption in your organization.

To learn more about Microsoft Security solutions, visit our website. Bookmark the Security blog to keep up with our expert coverage on security matters. Also, follow us on LinkedIn (Microsoft Security) and X (@MSFTSecurity) for the latest news and updates on cybersecurity.

[1] Microsoft internal research, May 2023, N=638
