How do I create a custom data table and is it necessary in this scenario?

Aug 29 2023 06:13 AM

I recently came across some documentation for pushing logs from an AWS S3 bucket to Sentinel using a Lambda function via the Log Analytics API. From the documentation, it looks like I would have to set up a custom data table, but nothing in the doc covers that. I'm also not entirely sure where this data will go when it's pushed from the S3 bucket. How would I do this, and is it necessary in this scenario? Link to the docs below.

https://github.com/Azure/Azure-Sentinel/tree/master/DataConnectors/S3-Lambda

I am unable to use the AWS S3 data connector from the Content hub because the logs we're pushing (AWS WAF) are not supported by that connector.
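Whatever API ends up receiving the data, the Lambda first has to turn each S3 object into JSON records. A minimal Python sketch, assuming the WAF log files arrive gzip-compressed with one JSON object per line, and assuming a 30 MB per-request payload limit (the documented cap for the Log Analytics HTTP Data Collector API). `parse_waf_log` and `batch_records` are hypothetical names, not functions from the linked script.

```python
import gzip
import json

# Assumed per-request payload limit (HTTP Data Collector API documents 30 MB per post).
MAX_POST_BYTES = 30 * 1024 * 1024

def parse_waf_log(data):
    """Decode one S3 object (raw bytes) into a list of WAF log records."""
    if data[:2] == b"\x1f\x8b":  # gzip magic number: decompress first
        data = gzip.decompress(data)
    # One JSON object per line; skip blank lines.
    return [json.loads(line) for line in data.decode("utf-8").splitlines() if line.strip()]

def batch_records(records, max_bytes=MAX_POST_BYTES):
    """Split records so that json.dumps(batch) stays within max_bytes.

    A single record larger than max_bytes still ends up alone in its own batch.
    """
    batches, current, size = [], [], 2  # 2 bytes for the enclosing "[" and "]"
    for record in records:
        encoded = len(json.dumps(record).encode("utf-8"))
        extra = encoded + (2 if current else 0)  # json.dumps joins list items with ", "
        if current and size + extra > max_bytes:
            batches.append(current)
            current, size, extra = [], 2, encoded
        current.append(record)
        size += extra
    if current:
        batches.append(current)
    return batches
```

Inside a Lambda handler, the raw bytes would typically come from boto3, e.g. `s3.get_object(Bucket=bucket, Key=key)["Body"].read()`, and each batch would then be posted as one request.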

- Labels: Analytics, APIs, Data Collection, SIEM

Aug 29 2023 06:45 AM

https://github.com/Azure/Azure-Sentinel/tree/master/DataConnectors/S3-Lambda#edit-the-script

Aug 29 2023 06:57 AM

So when this script runs, it will create the table? I now see where the GitHub doc asks for the workspace info and a custom log name.

I'm just a little confused about where this data goes, where it's stored, and whether I need to do anything more than run this script to get these logs into Sentinel.
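The workspace ID, shared key, and custom log name the script asks for line up with the Log Analytics HTTP Data Collector API. Assuming that is the API the script calls, each upload is a signed POST along the lines of this minimal Python sketch. `build_signature` and `build_request` are hypothetical helper names, and the sketch only builds the request rather than sending it.

```python
import base64
import hashlib
import hmac
import json
from email.utils import formatdate

API_VERSION = "2016-04-01"

def build_signature(workspace_id, shared_key, date, content_length):
    """SharedKey authorization header: HMAC-SHA256 over the canonical request string."""
    string_to_hash = (
        f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    )
    decoded_key = base64.b64decode(shared_key)  # the workspace key is base64-encoded
    digest = hmac.new(decoded_key, string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('utf-8')}"

def build_request(workspace_id, shared_key, log_type, records, date=None):
    """Return (url, headers, body) for one POST of JSON records."""
    body = json.dumps(records).encode("utf-8")
    date = date or formatdate(usegmt=True)  # RFC 1123 date, e.g. "Tue, 29 Aug 2023 14:00:00 GMT"
    url = (
        f"https://{workspace_id}.ods.opinsights.azure.com/api/logs"
        f"?api-version={API_VERSION}"
    )
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
        "Log-Type": log_type,  # becomes the custom table name (with a _CL suffix)
        "x-ms-date": date,
    }
    return url, headers, body
```

Sending it could be done with `urllib.request.urlopen(urllib.request.Request(url, data=body, headers=headers, method="POST"))`; a 200 response means the records were accepted. Nothing beyond the workspace ID, key, and log name is needed on the Azure side for this API.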

Aug 29 2023 07:33 AM

Aug 29 2023 08:18 AM

Aug 29 2023 10:08 AM

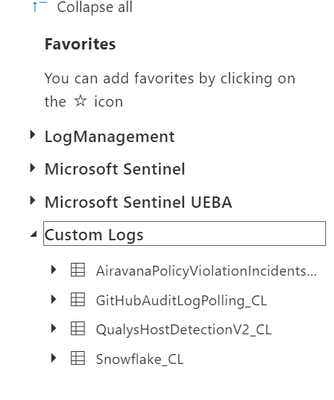

You need to ingest the data into the custom table to be able to query it. The custom table in Log Analytics is where the data is stored.

You can check the schema before ingestion.

https://learn.microsoft.com/en-us/azure/sentinel/data-source-schema-reference

Aug 29 2023 10:29 AM

Aug 29 2023 02:53 PM

Aug 29 2023 04:44 PM