- Home

- Security, Compliance, and Identity

- Microsoft Security Experts Blog

- Fuzzy hashing logs to find malicious activity

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Introduction

During a forensic investigation, malicious activity may be discovered in logs. An incident responder may want to identify related malicious activity across a range of related log files. Typically, responders would extract and search for indicators of compromise (IOCs) or write well-crafted queries to search logs for similar activity. One way we can help responders find this similar activity is using “fuzzy hashing”, a term that encompasses a range of different hashing techniques designed to broadly group together “similar” things (in this case, similar malicious activity.)

While there are a variety of fuzzy hash algorithms, most focus on identifying similarities across different files. Microsoft Incident Response has developed a new fuzzy hash algorithm, called "JsonHash" which is specifically designed for comparing semi-structured data, such as log entries.

In this blog we will explain how a JsonHash is calculated, then show some practical examples of how it can be used. We implemented JsonHash using the python plugin for Azure Data Explorer and used it to search for web shell activity in IIS logs. Logs corresponding to the web shell activity had similar JsonHash values, demonstrating how JsonHash can be used to find related groups of malicious activity.

Background

Cryptographic hashing algorithms such as MD5 and SHA1/SHA256/SHA512 have been widely used to compare files to determine if the contents are identical. Anti-Malware detection engines commonly use cryptographic hashes to look for known malicious files, and conversely ignore known legitimate commercial software. By design, if two files differ by even a single byte, they will produce different cryptographic hashes. For malware, this means even the slightest modification will evade detection hashes.

In contrast, fuzzy hashing algorithms (such as Locality Sensitive Hashing (LSH)) are designed to produce similar hashes for files with only minor differences. Therefore, malware variants belonging to the same family should have similar fuzzy hashes. Two popular fuzzy hash algorithms include TLSH and ssdeep.

Most fuzzy hash algorithms have been designed to compare files, such as binaries or blobs of text. However, when applied to semi-structured data, such as JSON blobs, XML or lines of a log file, they perform poorly. This may be due to the size of the input data being relatively small, as well as syntactical and structural information dominating the input data. (In fact, ssdeep has a minimum size requirement of 4,096 bytes, which is larger than a typical log entry.) Therefore, to effectively use fuzzy hashing to find similar log entries, there is a need for an effective fuzzy hash algorithm which caters for semi-structured data inputs.

Computing JsonHash

JsonHash is a Microsoft Incident Response designed fuzzy hashing algorithm, specifically for comparing data records, including semi-structured data. It supports JSON and XML inputs but can also be used to iterate over log lines in a CSV file, for example.

As with other fuzzy hashes (such as TLSH), JsonHash creates a digest for a given record, by computing the hash buckets (more on this later) of the individual fields that comprise the record. The novelty of JsonHash is prepending the field names to the field values, before calculating the hash. This improves accuracy by causing similar values that occur in different fields to be treated differently. JsonHash can be applied to any data record without requiring any feature extraction, and is not affected by field ordering.

The steps for computing JsonHash, for a given data record, are as follows:

- Flatten any nested fields.

- Tokenize the field values.

- For each field, prepend the name of the field to the field values.

- Calculate a hash for each field value and place the result into a hash bucket.

- Scale and quantize the hash bucket counts.

- Output a hash digest string.

By its nature, the calculation of a hash digest involves a bit of Mathematics, we will explain each step in more detail with an example.

Example Data Record

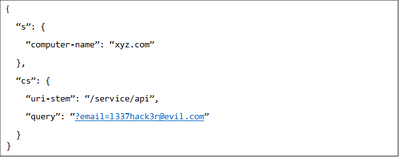

For our example we use a simplified IIS log record in nested JSON format (this method works just as well for a CSV record or other semi structured formats).

Our example record has only three fields: s.computer-name, cs.uri-stem and cs.query.

Step 1: Flatten Nested Fields

We flatten the nested structures (“s” and “cs”), by prepending the parent field name to the child fields, giving us three flat fields:

|

Field |

Value |

|

s-computer-name |

xyz.com |

|

cs-uri-stem |

/service/api |

|

cs-query |

?email= l337hack3r @evil.com |

Step 2: Tokenize Values

Next, we tokenize the values for each field.

|

Field |

Tokenized Values |

|

s-computer-name |

xyz, com |

|

cs-uri-stem |

service, api |

|

cs-query |

email, l337hack3r, evil, com |

Step 3: Prepend Field Names

For each field, we prepend the field name to the tokenized values.

|

Field |

Prepended Tokens |

|

s-computer-name |

s-computer-name:xyz, s-computer-name:com |

|

cs-uri-stem |

cs-uri-stem:service, cs-uri-stem:api |

|

cs-query |

cs-query:email, cs-query:l337hack3r, cs-query:evil, cs-query:com |

Step 4: Hash the Field Values into Buckets

We now hash the tokens for each field. Each token is hashed separately. For this step we could use a standard cryptographic hash, such as SHA256, but for our use case, since we will discard most of the resultant bits, we opted for a more efficient hashing function – Pearson Hash (as TLSH also uses.)

We then place the resultant hash for each token into a “bucket.” For JsonHash we use 64 buckets. The bucket is calculated from the lower order bits of the hash.

For example, the one-byte Pearson Hash of “cs-uri-stem:api” is 219. We take the modulo 64, giving the hash bucket 27.

219 % 64 = 27

Applying this to all the tokens yields the following hash buckets:

|

Field |

Hash Buckets |

|

s-computer-name |

14, 61 |

|

cs-uri-stem |

48, 27 |

|

cs-query |

26, 27, 9, 8 |

Step 5: Scale and Quantize the Bucket Counts

We firstly count the frequency of each bucket. In this example, for the 64 buckets, 7 buckets have counts greater than zero.

|

Bucket |

Count |

|

8 |

1 |

|

9 |

1 |

|

14 |

1 |

|

26 |

1 |

|

27 |

2 |

|

48 |

1 |

|

61 |

1 |

Regardless of the size of the records, we need to scale the counts, and round the counts to specific levels. For JsonHash we use 16 levels (from 0 to 15.) The buckets with the lowest counts are assigned 0 and the highest 15. Bucket counts in between are scaled appropriately. A variation of JsonHash sorts the bucket counts and to uses rank values (projected onto 16 levels.)

|

Bucket |

Scaled Count |

|

8 |

8 |

|

9 |

8 |

|

14 |

8 |

|

26 |

8 |

|

27 |

15 |

|

48 |

8 |

|

61 |

8 |

Step 6: Output a Hash Digest String

The final step is to output a hash digest string. For each bucket count, we output a hexadecimal. For example, ‘f’ for 15, and 8 for 8. The JsonHash for our example record is as follows:

000000008800008000000000008f000000000000000000008000000000000800

This can be thought of as the fingerprint of the example record, we used as an input.

Comparing JsonHash Values

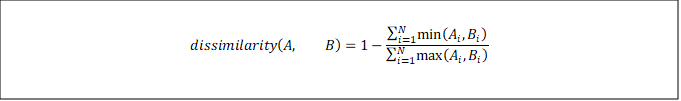

To compare two JsonHash digests, we need to compute the dissimilarity. To propose a dissimilarity function, we take inspiration from the Jaccard Index. Each value in a digest denotes the presence of one or more tokens for the corresponding hash bucket. Our dissimilarity measure approximates the number of tokens shared by both digests, as a ratio to the total number of tokens from both digests combined. For two digests, A and B, the dissimilarity is calculated as follows:

A dissimilarity of 0 indicates the two digests are identical. A dissimilarity of 1 indicates the two digests are maximally different.

Putting it all together – JsonHash in a Threat Hunting Scenario

To illustrate how JsonHash can support incident responders and cyber security analysts in identifying malicious activity, let us consider the following scenario:

A threat actor has managed to exploit a vulnerability in an internet facing IIS server operated by an organisation named Contoso. This allows for remote code execution and remote file upload to be performed.

During post exploitation, the threat actor deploys a webshell (see related article: IIS modules: The evolution of web shells and how to detect them) to the server, to gain a foothold in the network and to establish persistence. This webshell (Webshell A), is leveraged almost exclusively by the threat actor to interact with the target network and to carry out their actions on objective.

The IIS server is situated in a DMZ, and the threat actor manages to move laterally and compromise additional web facing servers. In an effort to maintain long term access to the environment, the actor chooses to deploy an alternative webshell (Webshell B) to a second IIS server. Webshell B is seldom interacted with, but serves as a backup access mechanism, in the event that Webshell A is detected and removed.

The threat actor has basic operational security awareness, and among other things takes care to ensure there is no overlap in the command-and-control infrastructure used to access each webshell.

Months later, a Security Operations Centre (SOC) analyst detects suspicious activity within the network which initiates an investigation. As a result of this investigation, Contoso’s incident responders track the source of the activity back to the initially compromised IIS server and identify Webshell A. Through forensic analysis, incident responders retrieve various Indicators of Compromise (IOCs) including the hash of Webshell A, and the user agents and IP addresses observed interacting with it.

As any good Incident Response team would, these IOCs are washed across Contoso’s SIEM to highlight any other malicious activity. In particular, the responders are trying to identify other points of presence on the network which can be tied to the same actor-controlled IP addresses. Unfortunately, no new activity is identified.

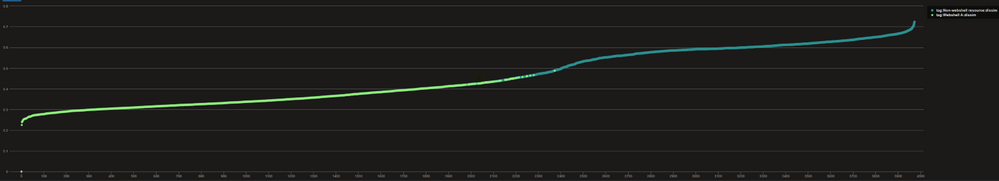

Continuing their investigation, Contoso’s responders leverage JsonHash to analyse the IIS logs retrieved from the server where Webshell A was found. The IIS logs are converted to CSV files and a JsonHash is generated for each log line. The resulting digests are then analysed to identify similarities.

The results confirm the existing findings, JsonHash analysis is able to identify and cluster log lines related to interactions with Webshell A.

Figure 1: Dissimilarity of web server logs from known compromised device. (Logs linked to Webshell A are colored in green. Other logs are shown in blue.

From the chart in Figure 1, Contoso’s IR team deduce that that log lines relating to interaction with the webshell all have relatively low dissimilarity and as such are clustered together.

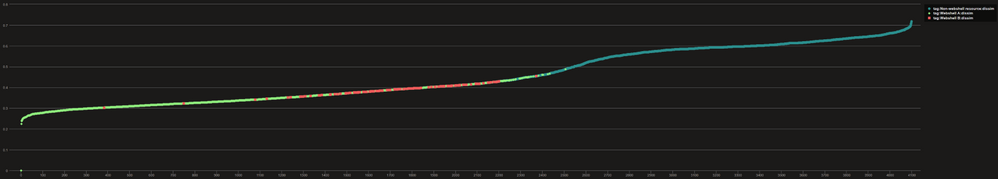

Casting their net wider, the same analysis methodology is now applied to all IIS servers in the DMZ. A subset of IIS logs are retrieved, converted to CSV files and processed for analysis using JsonHash.

To illustrate the point a subset of web resources and the dissimilarity values are included below:

|

Web Resource |

Host |

Dissimilarity Value Range |

|

Webshell A |

Compromised IIS Server |

0 - 0.4 |

|

File of interest (Webshell B) |

DMZ IIS Server |

0.3 - 0.45 |

|

ErrorFe.aspx (legitimate resource) |

Compromised IIS Server |

0.5 - 0.7 |

|

Logoff.aspx (legitimate resource) |

DMZ IIS Server |

0.6 - 0.63 |

This analysis reveals a second web resource on an IIS server which was not previously within the scope of the investigation. There were only a handful of log lines for interaction with this file, however they all had a low dissimilarity value when compared to log lines relating to the initial webshell.

As a result, Contoso’s responders identify another suspicious cluster of activity, bearing similarities to the interactions with Webshell A.

Figure 2: Dissimilarity of web server logs for all IIS Servers, showing a second cluster if interest and potentially another webshell. (Logs linked to Webshell A are colored in Green, logs linked to Webshell B coloured in red and other logs in blue.)

Returning to the raw log file of interest and the host in question reveals interactions with the previously undiscovered Webshell B.

Contoso’s security team can now harvest additional IOCs and continue their investigation before beginning remediation activity.

JsonHash in Kusto

Azure Data Explorer (ADX), also known as Kusto, is a high-performance database for analyzing large sets of logs and other kinds of semi-structured data. Microsoft Incident Response leverages ADX to support threat hunting and forensic investigation, by parsing logs and forensic artifacts into a database that can be queried efficiently.

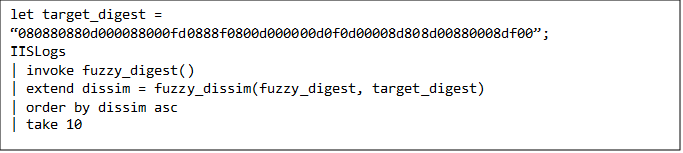

We have discussed how JsonHash can be used to look for similarities in log files. ADX supports many built-in functions for analyzing data. We will now demonstrate how we can add a custom function, using ADX’s Python plugin, to support our novel fuzzy hash. To do this, we need two user defined functions in Kusto, fuzzy_digest() to compute the JsonHash digest, and fuzzy_dissim() to calculate the dissimilarity between two hashes. Appendix A lists the implementation of how these functions may be added to Kusto. fuzzy_digest() takes an arbitrary table as input, and calculates the JsonHash digest for every row in the table. The function will return a new table with an extra column called “fuzzy_digest” that contains the JsonHash digest. fuzzy_dissim() takes two JsonHash digests and returns their dissimilarity.

We now demonstrate a scenario of how we can use JsonHash. In our scenario, we discover a Web Shell in our Web Server logs, and we want to easily discover if there are any similar logs to the malicious log we found. In this scenario we use ADX.

Applying these functions to our previous scenario, we can use fuzzy_digest() to calculate the JsonHash digest of the logs containing webshell activity. Suppose we stored out malicious logs in a table called WebshellIISLogs, we can compute the JsonHash digest with the following query.

When we ran the above query, the JsonHash digest of a malicious log was: “080880880d000088000fd0888f0800d000000d0f0d00008d808d00880008df00“. The next step is to search the rest of the logs for similar values. If the rest of our logs are in the table IISLogs, we can run the following query to discover similar values:

The above query will return ten logs with the greatest similarity to the malicious log.

Evaluation

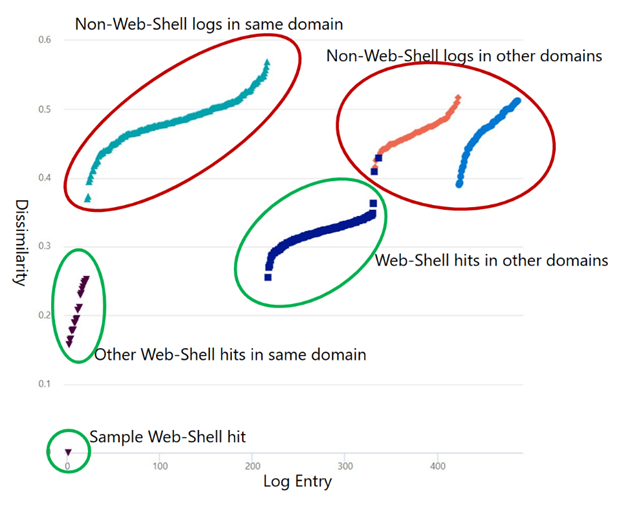

Lastly, we performed a validation to assess how accurate JsonHash is at finding webshell activity logs. We used a sample set of 1000 web server logs, with half of them being related to malicious webshell activity, and the other half unrelated. We compared fuzzy hash of these logs to a log of confirmed webshell activity. The sample set included logs from the same domain as the confirmed web shell activity, and from other domains.

Figure 3 shows the results. The dissimilarity was smallest for other web shell activity in the same domain, with dissimilarity in the range of 0.15 to 0.25. Web shell activity in other domains also had a low dissimilarity, in the range 0.25 to 0.35. Logs unrelated to Web Shell activity had the highest dissimilarity. This experiment shows JsonHash was effective at finding Web Shell activity for the sample set tested.

Figure 3: Dissimilarity of web server logs to confirmed webshell activity log

Summary and Future Work

We have shown how fuzzy hashing can be applied to log data to find similar log entries without requiring any prior knowledge about the underlying data structure. We used a novel fuzzy hashing algorithm, JsonHash, which is specifically designed for comparing semi structured data. We gave an example scenario of using JsonHash to discover Web Shell activity in web server logs.

JsonHash is an algorithm under further development. Future refinements will include improving accuracy by ignoring fields such as timestamps and UUIDs from the hash computation and conducting further experiments to identify the best parameters for a wide range of scenarios.

Appendix A – JsonHash Implementation in ADX

User defined function: fuzzy_digest()

.create-or-alter function fuzzy_digest(T:(*)) {

T

| evaluate hint.distribution=per_node python (

typeof(*, fuzzy_digest:string),

```

import hashlib

import re

import math

import pandas as pd

class JsonHash:

nbuckets: int = 64

nlevels: int = 16

scale: bool = True

# Whether to output debug column

is_debug: bool = False

# Calculates hash buckets for a given row/object (passed as a dictionary)

def digest_row_array(self, obj: dict):

if self.nbuckets is None:

return None

digest = [0.0] * self.nbuckets

for k, v in obj.items():

tokens = re.split('\\W+', str(v))

w = 1.0

if self.scale and len(tokens) > 0:

w = 1.0 / (len(tokens) * len(obj))

for tok in tokens:

s = ":".join([k, tok]).encode()

# In this example implementation lower bits of SHA256 is used.

# Change to Pearson hash for efficiency

h = int.from_bytes(hashlib.sha256(s).digest()[:8], 'little')

b = h % self.nbuckets

digest[b] += w

return digest

def digest_row(self, obj: dict):

digest = self.digest_row_array(obj)

sc_digest = self.sort_scale(digest)

sdigest = self.digest_as_string(sc_digest)

return sdigest

# Discretises bucket values to be between 0 and number of levels (n_levels)

def scale_digest(self, digest):

min = 0

max = 1

for d in digest:

if d < min:

min = d

if d > max:

max = d

s_digest = []

for d in digest:

s_d = int((d - min) * (self.nlevels-1) / (max - min))

s_digest.append(s_d)

return s_digest

# Discretises hash bucket values by ranking

def sort_scale(self, digest):

scale = []

i = 0

for d in digest:

scale.append((i, d))

i += 1

scale.sort(key=lambda x: x[1])

sc_digest = [0] * self.nbuckets

last_v = 0

last_q = 0

for j in range(len(digest)):

(i, v) = scale[j]

if v == last_v:

q = last_q

else:

q = int(j * self.nlevels / self.nbuckets)

sc_digest[i] = q

last_v = v

last_q = q

return sc_digest

# Outputs hash buckets array as a hexadecimal string

def digest_as_string(self, digest):

s = ""

for i in range(int(len(digest) / 2)):

xs = digest[(i*2):(i*2+2)]

x = min(self.nlevels-1, xs[0]) * self.nlevels + min(self.nlevels-1, xs[1])

sx = "%x" % x

if len(sx) == 1:

sx = "0" + sx

s = s + sx

return s

# This method is designed to be called from the KQL python plugin.

# Will calculate the fuzzy hash digest for all rows in a dataframe.

def digest_dataframe(self, df: pd.DataFrame):

dcol = []

if JsonHash.is_debug:

fcol = []

for _, row in df.iterrows():

sdigest = self.digest_row(row)

dcol.append(sdigest)

if JsonHash.is_debug:

adigest = self.digest_row_array(row)

fcol.append(str(adigest))

df['fuzzy_digest'] = dcol

if JsonHash.is_debug:

df['digest_debug'] = fcol

jh = JsonHash()

jh.digest_dataframe(df)

result = df

```

)

}

User Defined Function: fuzzy_dissim()

.create-or-alter function fuzzy_dissim(T:(fuzzy_digest: string), cmp_digest:string)

{

let hexcp2i = (cp: int) {

case(cp >= 97, cp - 87, cp - 48)

};

T

| extend xlen = strlen(cmp_digest)

| extend z = range(0, xlen, 1)

| extend x = unicode_codepoints_from_string(cmp_digest)

| extend y = unicode_codepoints_from_string(fuzzy_digest)

| mv-apply z to typeof(int) on (

extend xi = hexcp2i(toint(x[z])), yi = hexcp2i(toint(y[z]))

| extend dmax = case(xi > yi, xi, yi), dmin = case(xi < yi, xi, yi)

| summarize maxsum = sum(dmax), minsum = sum(dmin)

| project dissim = 1.0 - todouble(minsum) / maxsum

)

| project-away xlen, x, y

}

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.