Video Transcript:

- Is your database ready for generative AI? Not only is your database key to providing the grounding data for large language models, it’s also critical for the speed and scale of data retrieval. Today we’ll look at the pivotal role Azure Cosmos DB plays in running the ChatGPT service, with its hundreds of millions of users globally, along with other real-time operational solutions across the industry. I’ll also show you how you can take advantage of the state-of-the-art built-in vector search engine and limitless scale for your own generative AI and other real-time workloads. Joining me on today’s show to go deeper is Kirill, who leads the Azure Cosmos DB team. Welcome.

- Thank you, Jeremy, for having me on the show. It’s really great to be here.

- And thanks so much for joining us today. I think not a lot of people know that Cosmos DB is actually the database behind ChatGPT, and it’s widely used for real-time and operational workloads as well. So why is it such a good fit for these types of scenarios?

- Sure. Well, it’s all about the flexibility of Cosmos DB. You can automatically scale as much as you want, you can distribute the data wherever you need it, and you can bring in any data. This is super helpful for real-time operational workloads like generative AI with chat. For example, in ChatGPT, we interact using natural language in our user prompts. That semi-structured data is stored and automatically indexed by Cosmos DB. The information is then presented to the underlying large language model to extract context and generate a response, and as you saw, this happens super fast. At the same time, Cosmos DB is also storing the chat history, as you can see here on the left.

- In fact, there are a lot more real-time operational use cases where Cosmos DB’s support for less rigid data schemas really lights up, from metadata processing for IoT devices in manufacturing to clickstream data from e-commerce apps. And although we’re working with semi-structured data, it’s still organized into various built-in data models with automatic indexing, right?

- That’s right. It’s all about the flexibility of Cosmos DB. This multi-model support is possible because our native data model is JSON. As a developer, you can choose from multiple APIs and use multiple data models to represent your data, from graph to wide-column to key-value. In the case of ChatGPT, we use the core NoSQL API with a document model because it’s a natural representation for conversational data. And because each chat message is stored as a document with nested data, we can more easily store and retrieve information along with metadata, like vectors, in one record.
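To make the document model concrete, here is a minimal sketch of how a single chat message might look as one JSON document, with nested metadata and an embedding vector stored in the same record. The field names (`userId`, `conversationId`, `metadata`, `embedding`) and the helper `make_chat_message` are hypothetical, not ChatGPT’s actual schema, and the vector is cut down to four dimensions for readability.

```python
import json


def make_chat_message(message_id: str, user_id: str, conversation_id: str,
                      role: str, text: str, embedding: list[float]) -> dict:
    """Build one chat message as a self-contained JSON document.

    Hypothetical schema: the partition key value (userId), nested metadata and
    the embedding vector all live in one record, so a single read returns them.
    """
    return {
        "id": message_id,
        "userId": user_id,                 # assumed partition key
        "conversationId": conversation_id,
        "role": role,                      # "user" or "assistant"
        "content": text,
        "metadata": {                      # nested, schema-flexible metadata
            "wordCount": len(text.split()),
            "language": "en",
        },
        "embedding": embedding,            # vector kept next to the data it describes
    }


doc = make_chat_message("msg-001", "user-42", "conv-7", "user",
                        "How do I scale my database?", [0.12, -0.03, 0.88, 0.41])
print(json.dumps(doc, indent=2))
```

Because nothing here requires a fixed schema, adding a field later (say, a moderation flag) is just another key in the document.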

- Now, you mentioned retrieval. So how do we solve for really fast real-time data access?

- Well, that’s one of the big advantages of Cosmos DB. We have a low-latency database engine with an SLA of less than 10 milliseconds for read and write operations. It’s also a fully managed, globally distributed database that can span multiple locations around the world. Data can be replicated across multiple regions so that incoming requests are routed to the closest available region to reduce latency. And globally distributing your data in Azure Cosmos DB is turnkey. At any time, in the Replicate Data Globally blade in the Azure portal, you can add regions to your Cosmos DB account by clicking on the map and saving.
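The routing idea can be sketched in a few lines: given measured latencies, send each request to the closest region that is currently available, and fall back to the next closest if it isn’t. The region names, latency figures and the `pick_region` helper are made up for illustration; in a real app the Cosmos DB SDKs handle this based on a preferred-regions (preferred locations) setting.

```python
def pick_region(latencies_ms: dict[str, float], available: set[str]) -> str:
    """Return the available region with the lowest measured latency."""
    candidates = {region: ms for region, ms in latencies_ms.items() if region in available}
    if not candidates:
        raise RuntimeError("no available region")
    return min(candidates, key=candidates.get)


# Hypothetical round-trip latencies measured from a client on the US West Coast.
latencies = {"West US": 12.0, "East US": 70.0, "North Europe": 140.0}

print(pick_region(latencies, {"West US", "East US", "North Europe"}))  # West US
print(pick_region(latencies, {"East US", "North Europe"}))             # East US, after West US fails
```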

- And this is really a big advantage compared to centralized databases, and you can also optimize for the lowest latency depending on where your users and operations are.

- And you can maintain high availability. In fact, we have built-in geo-redundancy with data replication and a five-nines availability SLA.
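As a quick sanity check on what “five nines” means, the arithmetic below turns an availability percentage into a yearly downtime budget. This is only the math behind the percentage (a 365-day year is assumed); it says nothing about how the SLA is measured or which account configurations it covers.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a 365-day year


def downtime_budget_minutes(availability_pct: float) -> float:
    """Maximum minutes of downtime per year allowed by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


for pct in (99.9, 99.99, 99.999):
    print(f"{pct}% -> {downtime_budget_minutes(pct):.2f} minutes/year")
# 99.9% -> 525.60, 99.99% -> 52.56, 99.999% -> 5.26
```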

- Right, and we saw how ChatGPT and OpenAI grew from about zero to hundreds of millions of users in just a couple of months. So how were we able to achieve that kind of scale?

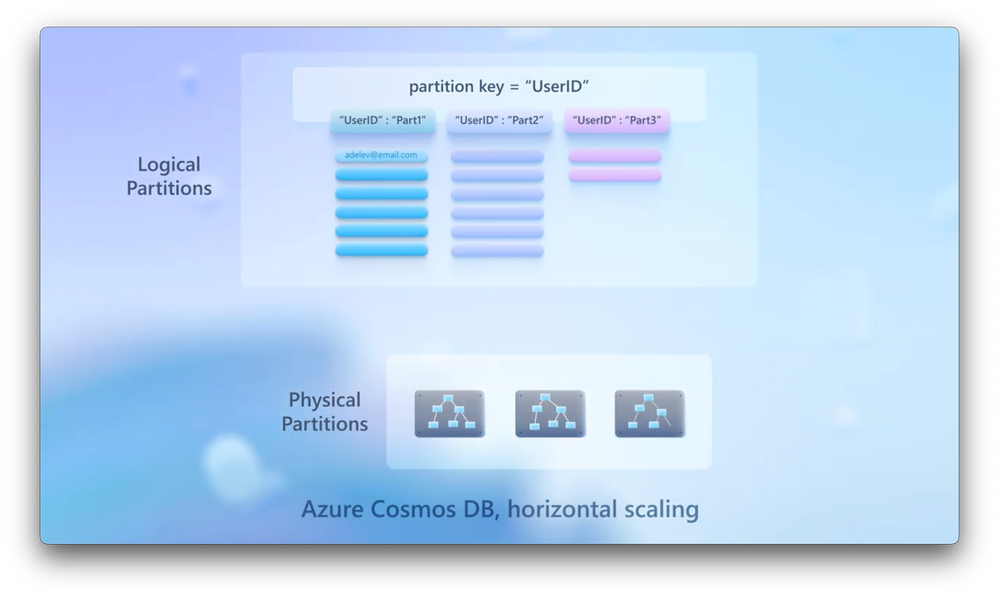

- The interesting thing here is that as the ChatGPT service grew in subscribers, the database scaled transparently, elastically and fully autonomously. Unlike traditional relational databases that might reside entirely on a single VM, requiring you to scale up and down with vCores and RAM, Cosmos DB scales horizontally, in and out, by adding and removing physical partitions based on the compute and storage needed. These physical partitions contain your logical partitions, which are identified by a partition key that you define and that acts like an address for your data. ChatGPT, for example, chose user ID as the partition key, so as new users subscribed to the service, new partitions were added automatically to support their explosive growth. To give you an idea of the level of automatic scaling Cosmos DB supported for ChatGPT globally in 2023, here’s a chart that shows the growth in daily transactions. You can see a pretty massive spike in March 2023, when GPT-4 was launched, where we went from 1.2 billion to 2.3 billion transactions. Then in November, when OpenAI announced a bunch of new capabilities, the number jumped again from 4.7 billion to 10.6 billion transactions, almost overnight. And if we overlay the infrastructure scaling happening behind the scenes, you can see that Cosmos DB ramped up physical partitions from around 1,400 to 2,400 in early March. Then in November, the ramp is even steeper: from 13,000 to 25,000 in a five-day period.
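Conceptually, the partition key works like this: each key value is hashed into a fixed hash space, and each physical partition owns a contiguous range of that space. When a partition splits, its range is divided in two, so every key lands in one of the two child ranges and a single user’s data always stays together. The sketch below illustrates that idea with MD5 from Python’s `hashlib` and equal-sized ranges; it is a toy under those assumptions, not Cosmos DB’s actual hash function or split logic.

```python
import hashlib
from collections import Counter

HASH_SPACE = 2**32  # size of the toy hash space


def key_hash(partition_key: str) -> int:
    """Map a logical partition key (for example a user ID) into the hash space."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def physical_partition(partition_key: str, num_partitions: int) -> int:
    """Each physical partition owns one contiguous, equal-sized slice of the hash space."""
    return key_hash(partition_key) * num_partitions // HASH_SPACE


users = [f"user-{i}" for i in range(10_000)]
for n in (4, 8):
    load = Counter(physical_partition(u, n) for u in users)
    print(f"{n} physical partitions -> keys per partition: {sorted(load.values())}")

# Doubling the partition count splits every range in two: each key moves only
# into one of the two halves of the range it was already in.
assert all(physical_partition(u, 8) // 2 == physical_partition(u, 4) for u in users)
```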

- And the great thing here is that all of this was automatic. They didn’t have to touch the database at all.

- Exactly. And interestingly, it’s not even the largest app running on Cosmos DB. Microsoft Teams also uses Cosmos DB and handles around 7 trillion transactions daily.

- 7 trillion is a lot. I had no idea it was that many transactions. So how does Cosmos DB work for generative AI and Copilot-style apps where, beyond storing the chat history, you need to retrieve data on the back end as part of orchestration for retrieval-augmented generation?

- Well, indexing is a core requirement for retrieving relevant data efficiently. Something unique about Cosmos DB is that in vCore-based Azure Cosmos DB for MongoDB, we have vector indexing and vector search built into the database. You don’t need to move the data to another service, so there are no data management or data consistency challenges: your vectors and your data are all in one place. The way it typically works is that you deploy a function to calculate vectors as data is ingested into Cosmos DB. A vector is a coordinate-like way to refer to chunks of data in your database, and these vectors are used later for lookups. Then, when a user submits a prompt, it too is converted to a vector embedding, and the lookup finds the closest matches between the prompt’s vector and those in the database.
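The lookup step amounts to a nearest-neighbor search. The sketch below does it the brute-force way, scoring every stored chunk by cosine similarity to the prompt vector and keeping the top k. The chunk names and 3-dimensional vectors are invented (real embeddings come from an embedding model and have far more dimensions), and at scale the built-in index in Cosmos DB can use approximate index types such as IVF or HNSW rather than scanning everything, so treat this as the concept only.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Rank stored chunks by cosine similarity to the query vector, best first."""
    ranked = sorted(docs, key=lambda name: cosine(query, docs[name]), reverse=True)
    return ranked[:k]


chunks = {
    "privacy-policy": [0.9, 0.1, 0.0],
    "code-of-conduct": [0.2, 0.9, 0.1],
    "travel-expenses": [0.0, 0.1, 0.95],
}
prompt_vector = [0.8, 0.3, 0.0]  # stand-in for the embedded user prompt
print(top_k(prompt_vector, chunks))  # ['privacy-policy', 'code-of-conduct']
```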

- So those are the mechanics. Can we walk through an application that uses this pattern?

- I can. In fact, I’ll show you an app that the global consulting firm KPMG has built. I’ll start with an ungrounded experience that uses a large language model as-is. Here we have KPMG’s internal assistant, and we’ve asked a specific question about KPMG’s response to the Privacy Act review. Because the LLM is ungrounded and doesn’t know about KPMG’s response, the answer is generic and not very helpful. Now I’ll switch to a grounded experience using their data in Cosmos DB with built-in vector indexing and search. I’ll prompt it with the same question, and you can see the response is now much more specific and answers my question based on the knowledge base KPMG has in their Cosmos DB database.

- That’s a great example, but can we go behind the scenes in terms of the code behind it?

- Of course, that’s always the most interesting part. Here, I’ve got the logic of the KPMG app running as a Jupyter Notebook. It uses vCore-based Azure Cosmos DB for MongoDB as the data store and for vector search, and Azure OpenAI to create vector embeddings and for chat completions. For the purposes of this Notebook demo, let’s do the initial setup of the vectorized knowledge base in Cosmos DB. Here we’re looking at one of the records in their dataset, Global Code of Conduct. Next, we have a function to generate vector embeddings using OpenAI’s Ada 002 model. Let’s run that function on all of the documents in the dataset, generating a vector for each and adding it to the document as a property. If we look at the Global Code of Conduct record again, we see the vector property has been added. Now we connect to our Cosmos DB database, set up a collection for the data, and also set up a vector index to be used for vector similarity search. Then we load the vectorized dataset into Cosmos DB. Here I’ve created a helper function for vector search: it generates an Ada 002 embedding for the prompt and executes the built-in vector search query in Cosmos DB to retrieve the most relevant records. Here’s our function to generate completions. You can see the system prompt with instructions for how the LLM should respond, along with variables to add the user prompt and the retrieved document content. Finally, we’ve set up a loop for chatting with the LLM grounded in our dataset. Let’s try it out from the Notebook. I’ll ask it, “Tell me about your stance on ethics,” and the sentence is sent to Azure OpenAI, which generates an Ada 002 embedding for the prompt. That embedding is then sent to Azure Cosmos DB for vector search, and the most relevant documents are passed to the large language model alongside the original prompt. And here’s the grounded response based on the underlying data in Cosmos DB.
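The notebook’s database-side steps map onto two MongoDB-style requests: a `createIndexes` command that defines the vector index, and an aggregation pipeline with a `$search` stage that runs the vector query. The sketch below only builds those requests as Python dictionaries (they would be passed to pymongo’s `db.command()` and `collection.aggregate()`), plus the chat messages that ground the completion. The `cosmosSearch` and `cosmosOptions` syntax follows the vCore-based Azure Cosmos DB for MongoDB documentation at the time of writing and may have evolved, so verify it against the current docs; the collection and field names, the `$project` stage and the system prompt text are hypothetical, not KPMG’s code.

```python
def vector_index_command(collection: str, path: str = "contentVector",
                         dimensions: int = 1536) -> dict:
    """createIndexes command defining a vector index (for pymongo's db.command())."""
    return {
        "createIndexes": collection,
        "indexes": [{
            "name": "VectorSearchIndex",
            "key": {path: "cosmosSearch"},
            "cosmosOptions": {
                "kind": "vector-ivf",      # approximate IVF index
                "numLists": 1,             # IVF cluster count; tune for larger datasets
                "similarity": "COS",       # cosine similarity
                "dimensions": dimensions,  # text-embedding-ada-002 vectors have 1536 dims
            },
        }],
    }


def vector_search_pipeline(query_vector: list[float], path: str = "contentVector",
                           k: int = 3) -> list[dict]:
    """Aggregation pipeline returning the k documents nearest to query_vector."""
    return [
        {"$search": {
            "cosmosSearch": {"vector": query_vector, "path": path, "k": k},
            "returnStoredSource": True,
        }},
        # Hypothetical projection: keep the text fields, drop _id and the vector.
        {"$project": {"_id": 0, "title": 1, "content": 1}},
    ]


def build_messages(user_prompt: str, retrieved: list[dict]) -> list[dict]:
    """Ground the completion: system prompt plus retrieved content, then the user prompt."""
    context = "\n\n".join(f"{d['title']}: {d['content']}" for d in retrieved)
    system = ("You are an internal assistant. Answer only from the documents below, "
              "and say you don't know if the answer isn't there.\n\n" + context)
    return [{"role": "system", "content": system},
            {"role": "user", "content": user_prompt}]


# Example with a made-up retrieved document and a 3-dimensional stand-in vector.
pipeline = vector_search_pipeline([0.1, 0.2, 0.3])
messages = build_messages("Tell me about your stance on ethics",
                          [{"title": "Global Code of Conduct",
                            "content": "We act with integrity."}])
```

With a live connection, the loop would roughly be: embed the prompt, run `collection.aggregate(vector_search_pipeline(embedding))`, then send `build_messages(prompt, results)` to the chat completions endpoint.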

- We’ve been talking a lot about generative AI apps, but would this type of search also work in an app that isn’t doing generative AI?

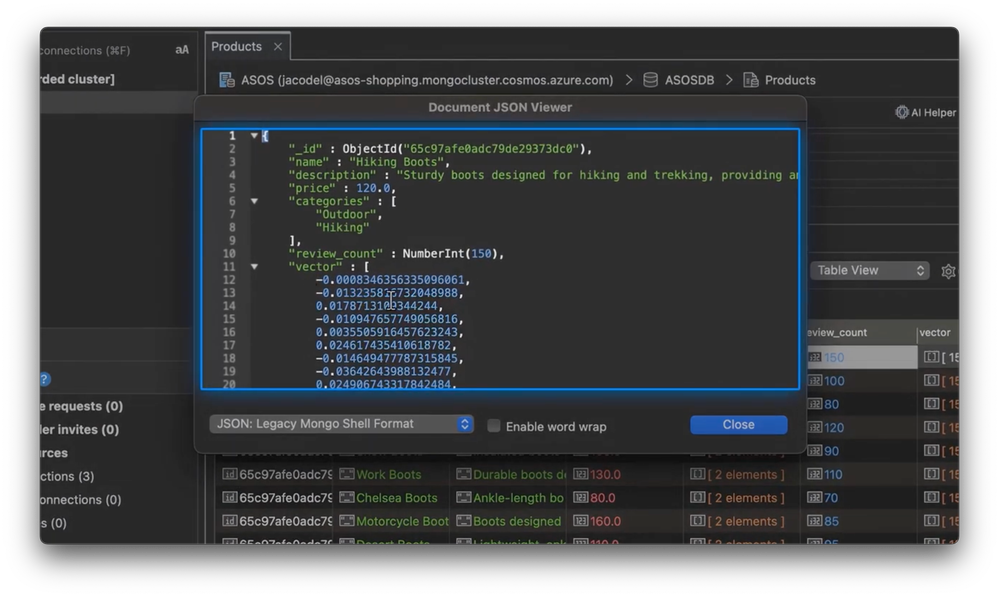

- Absolutely. Vector indexing in Cosmos DB simply makes information retrieval more intelligent than a simple keyword search. Here’s a quick but great example of vectors in use at the global online fashion retailer ASOS. This is their public website, which uses Cosmos DB as its primary database. As you look at different items, notice that the You Might Also Like section starts to make different recommendations. Items in this list have been vectorized to establish a relative likeness between them based on similar materials, style, colors, et cetera, which goes beyond what keyword matching could do. This is combined with vectorized user preferences from purchase history to inform the recommendations. And behind the scenes, you can see it’s using the same types of vector embeddings I showed before.
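One way to picture this is a score that blends how similar a candidate item is to the item being viewed with how similar it is to the shopper’s taste, where taste is approximated as the average vector of past purchases. The weighting, the averaging and the toy 3-dimensional vectors (loosely: denim, floral, leather) are assumptions for illustration, not ASOS’s actual method.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def preference_vector(history: list[list[float]]) -> list[float]:
    """Average the vectors of previously purchased items (element-wise mean)."""
    return [sum(col) / len(history) for col in zip(*history)]


def recommend(current: str, catalog: dict[str, list[float]],
              history: list[list[float]], weight: float = 0.5, k: int = 2) -> list[str]:
    """Rank other items by a weighted mix of item-item and user-item similarity."""
    pref = preference_vector(history)

    def score(item: str) -> float:
        vec = catalog[item]
        return weight * cosine(catalog[current], vec) + (1 - weight) * cosine(pref, vec)

    candidates = [item for item in catalog if item != current]
    return sorted(candidates, key=score, reverse=True)[:k]


catalog = {
    "blue-denim-jacket": [0.9, 0.1, 0.2],
    "black-denim-jeans": [0.8, 0.0, 0.3],
    "floral-summer-dress": [0.1, 0.9, 0.0],
    "leather-biker-jacket": [0.3, 0.0, 0.9],
}
purchases = [[0.2, 0.0, 0.9], [0.4, 0.1, 0.8]]  # this shopper tends to buy leather

print(recommend("blue-denim-jacket", catalog, purchases))
# ['black-denim-jeans', 'leather-biker-jacket']
```

Setting `weight=1.0` gives pure “similar items” behavior, while `weight=0.0` ranks purely by the shopper’s history.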

- So far we’ve shown a lot of massive enterprise-scale applications, but a lot of people watching are probably wondering, does Azure Cosmos DB also work for smaller apps?

- Absolutely. Cosmos DB is great for apps large and small. In fact, let me show you how easy it is to create a simple RAG pattern application using data stored in Cosmos DB for a bike shop. Here I have a product collection in a simple e-commerce database. You can see this is a touring bike entry with properties like name, description and price. I’ve already vectorized the data, as you’ll see with the vector property, so it’s ready to be used with vector search. Now I’m going to move to Azure OpenAI Studio. From here I can easily bring in my own data. I’ll choose Azure Cosmos DB, then the database account, and enter a password. Next I’ll choose the database for our retail bike store, retaildb. Now I’ll select the products collection that will be used for search. I need to pick the vector index used to perform vector search against our product data when the user submits a prompt, along with the embedding model used to vectorize that prompt. This model has to match what our data uses, Ada 002 in our case. I’ll check this box and click Next. Now I’ll define which properties to return from our vector search as content data. I’ll use our price collection, and in vector fields, I’ll choose the vector property from our documents. That’s it. I just need to save, and it’s ready to go. I can test it right here in the playground, but it’s also easy to deploy an app to test it out. Using the Deploy to control, I’ll choose a New Web App, which launches a simple wizard to build out a basic web app where you just fill in the standard fields. This checkbox even provisions a Cosmos DB back end to save chat history for our Copilot-style app. And I’m done. Let’s test it out. I’ll ask our AI assistant if it can recommend socks that are good for mountain biking. You can see the response is grounded in our added data, and it also saves my chat history. Everything is powered by Cosmos DB.

- This is a really great and quick example for building a Copilot-style app, but what are the costs behind something like this?

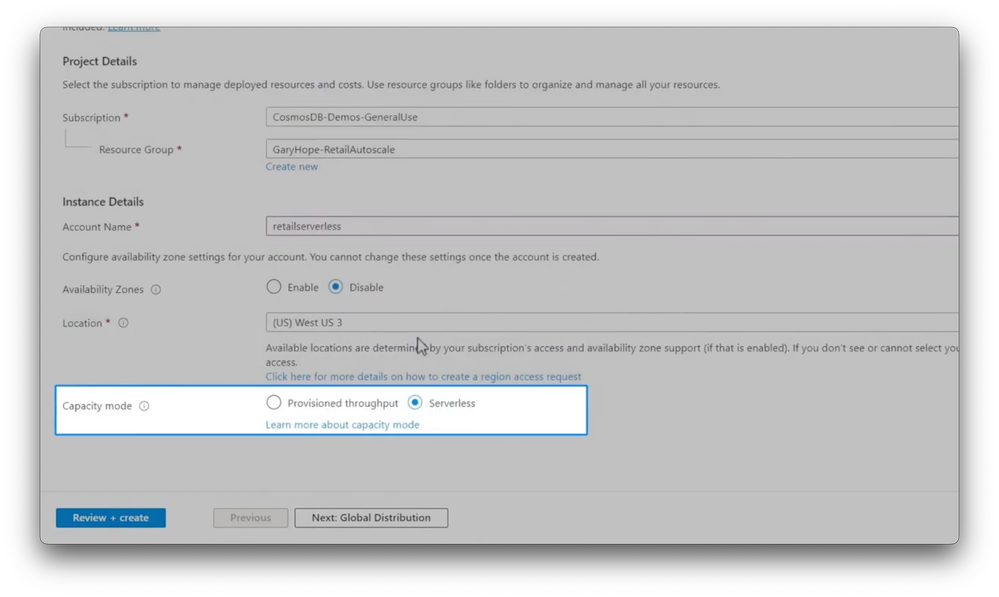

- Well, when we selected the chat history checkbox, the back end can be configured to run serverless. When you provision a new account, you can set the capacity mode to serverless, and it costs only about 25 cents per million request units (RUs). Also, with the free tier, the first 1,000 RU/s of throughput are always free.
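For a rough feel for serverless costs, the sketch below converts an estimated request mix into request units (RUs), the normalized unit Cosmos DB bills operations in, and prices them at the figure quoted here, $0.25 per million RUs. The per-operation RU charges are assumptions (about 1 RU is the commonly cited cost of a point read of a 1 KB item; writes and queries cost more depending on item size and indexing), storage is billed separately, and actual prices vary by region and over time.

```python
PRICE_PER_MILLION_RU = 0.25  # USD, as quoted in the video; check current Azure pricing


def serverless_cost_usd(reads: int, writes: int,
                        ru_per_read: float = 1.0, ru_per_write: float = 5.0) -> float:
    """Estimate serverless request charges; RU costs per operation are assumptions."""
    total_ru = reads * ru_per_read + writes * ru_per_write
    return total_ru / 1_000_000 * PRICE_PER_MILLION_RU


# Hypothetical small chat app: 200k history reads and 100k message writes a month.
print(f"${serverless_cost_usd(200_000, 100_000):.3f} per month")  # $0.175 per month
```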

- Okay, and we also showed some of these massive, larger examples like ChatGPT or ASOS that are running at huge auto-scale. So how do we control those costs and make them efficient?

- Ah, you are always in control of your budget. One way to do this is by setting maximum throughput thresholds. Here you can see a Cosmos DB account with a sales database that has three containers, each with its own provisioned throughput. Within each container, at any time, you can change the maximum throughput that you don’t want your application to exceed. With autoscale, it’ll scale from 10% of this max to as much as the app needs, up to the max, on an hourly basis, and you only pay for what you use. Of course, these containers are also partitioned to allow for scale. For example, our retail transactions container uses StoreId as its partition key. It’s common in retail that some stores are busier than others, and here we can see the breakdown by store. Typically, databases solve this by scaling all nodes to the same size to accommodate the busiest partition, but that can leave some nodes underutilized. This goes away with a new capability we’ve introduced that automatically scales individual partitions and regions for you, so you only pay for what you use. It’s in preview now and available for newly created accounts, so let’s enable it. After a period of time, if we go to our cost analysis view, you can see daily charges went down about 20%, and each region is billed only for the actual traffic it serves. You might think that adding a second region to my account would double my costs, but it doesn’t. I’ll prove it by adding a region, North Europe, and moving some of the read workloads there. After some time, you can see that instead of doubling, our costs went up only around 40%. Cosmos DB only bills you for what you use.
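The autoscale behavior described here can be modeled in a few lines: each hour, the billable throughput is the highest RU/s the container scaled to, but never less than 10% of the configured maximum and never more than the maximum (demand above it is rate-limited rather than billed). A second helper contrasts sizing every partition for the busiest one with scaling each partition on its own. Both are simplified models with made-up numbers; they ignore the price per RU/s, multi-region pricing and the details of the preview feature, and real savings depend on the workload (the demo showed about 20%).

```python
def billed_autoscale_rus(hourly_peaks: list[float], max_rus: float) -> list[float]:
    """Billable RU/s per hour under autoscale: clamp each hour's peak to [10% of max, max]."""
    floor = 0.10 * max_rus
    return [min(max(peak, floor), max_rus) for peak in hourly_peaks]


def uniform_vs_per_partition(partition_peaks: list[float]) -> tuple[float, float]:
    """Compare sizing every partition for the busiest one vs. scaling each one on its own."""
    uniform = len(partition_peaks) * max(partition_peaks)
    per_partition = sum(partition_peaks)
    return uniform, per_partition


print(billed_autoscale_rus([300, 2_500, 9_000, 12_000], max_rus=10_000))
# [1000.0, 2500, 9000, 10000]

# Hypothetical per-store peaks (RU/s): one busy store and three quiet ones.
print(uniform_vs_per_partition([4_000, 1_000, 1_000, 1_000]))  # (16000, 7000)
```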

- So you can reduce your costs as you scale and use Cosmos DB for large or small generative AI and operational workloads. How can the people watching right now learn more?

- So you can learn more about how Cosmos DB vector search capabilities work at aka.ms/CosmosVector. Also, every API has a free tier that does not expire, like this one for the MongoDB API. And if you are not an Azure user today, you can still get a free trial at aka.ms/trycosmosdb.

- Great stuff. Thanks so much for joining us today, Kirill. And be sure to check out our future episodes, where we’re going to go hands-on and build more generative AI apps with Cosmos DB. Also be sure to subscribe to Microsoft Mechanics. Thank you so much for watching.