Video Transcript:

-Generative AI with large language models like GPT is fast becoming a central part of everyday app experiences, with hundreds of popular apps now available and the number growing. But do you know which generative AI apps are being adopted via shadow IT inside your organization, and whether your sensitive data is at risk?

-Today I am going to show you a unified approach to protecting your data while still getting the benefits of generative AI: Microsoft Defender for Cloud Apps helps you quickly see which risky generative AI apps are in use, and Microsoft Purview assesses your sensitive data exposure, so that you can automate policy-enforced protections based on data sensitivity and the AI app in use.

-Now, this isn’t to say that there aren’t safe ways to take advantage of generative AI with work data right now. Copilot for Microsoft 365, for example, has the unique advantage of data protections built in that respect your organization’s data security and compliance needs. This is based on the policies you set in Microsoft Purview for your data in Microsoft 365.

-That said, the challenge is in knowing which of the generative AI apps that people are using inside your organization you can trust. What you want is to have policies where you can “set it and forget it” so that existing and future cloud apps are visible to IT. And if the risk thresholds you set are met, they’re blocked and audited. Let’s start with the user experience. Here, I’m on a managed device. I’m not signed in with a work account or connected to a VPN, and I’m trying to access an AI app that is unsanctioned by my IT and security teams.

-You’ll see that the Google Gemini app in this case, and this could be any app you choose, is blocked with a red SmartScreen page and a message for why it was blocked. This app level block is based on Microsoft Defender for Endpoint with Cloud App policies. More on that in a second. Beyond app level policies, let’s try something else. You can also act based on the sensitivity of the data being used with generative AI. For example, the copy and paste of sensitive work data from a managed device into a generative AI app. Let me show you.

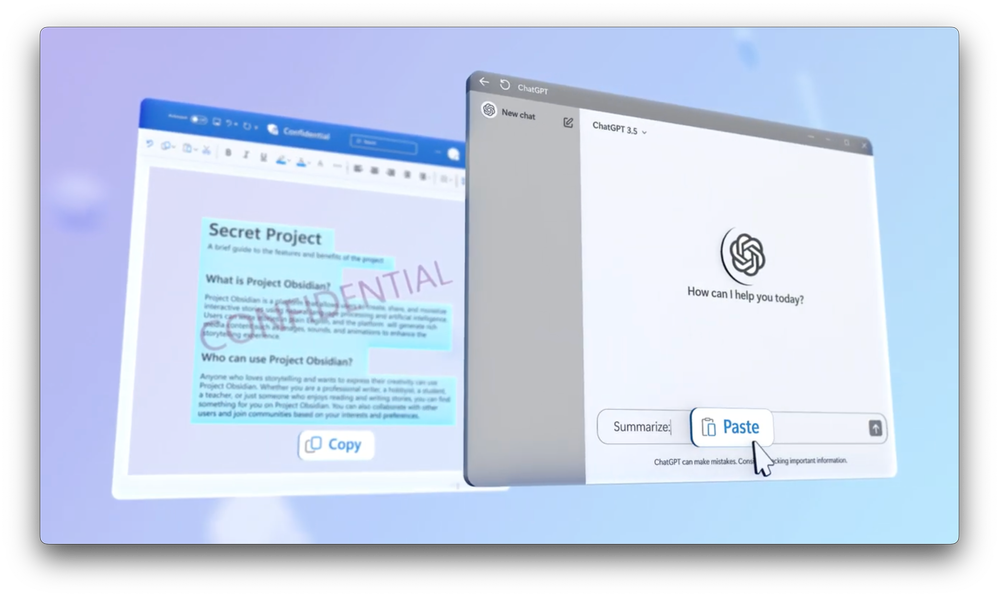

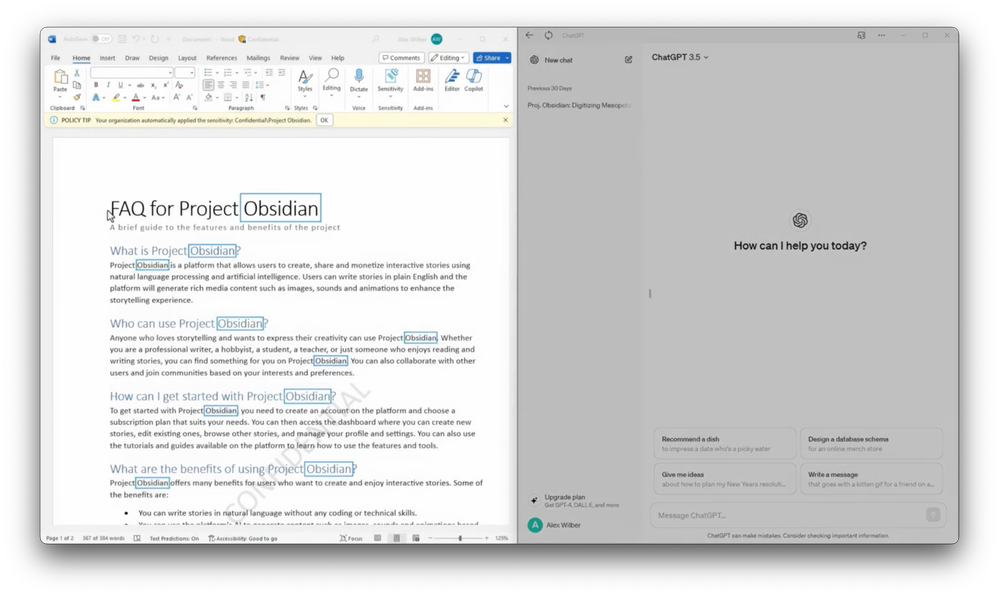

-On the left, I have a Word document open that contains sensitive information, and on the right I have OpenAI’s ChatGPT web experience running, where I’m signed in using my personal account. This file is sensitive because it includes keywords we’ve flagged in data loss prevention policies for a confidential project named Obsidian. Let’s say I want to summarize the content from the confidential Word doc.

-I’ll start by selecting all the text I want and copying it to my clipboard, but when I try to paste it into the prompt, you’ll see that I’m blocked, along with the reason why. This block was based on an existing data loss prevention policy for content sensitivity defined in Microsoft Purview, which we’ll explore in a moment. Importantly, these examples did not require that my device use a VPN with firewall controls to filter sites or IP addresses, and I didn’t have to use my work email account to sign in to those generative AI apps for the protections to work.
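As a rough illustration of the keyword-based check described above, here is a minimal Python sketch. It is not how Microsoft Purview implements data loss prevention; the keyword list, the `PasteDecision` type, and the `evaluate_paste` function are all hypothetical names invented for this example, and the only detail taken from the demo is the project keyword "Obsidian".

```python
import re
from dataclasses import dataclass

# Hypothetical keyword list standing in for a sensitive info type.
# Only the project name "Obsidian" comes from the demo.
SENSITIVE_KEYWORDS = ["Obsidian"]


@dataclass
class PasteDecision:
    allowed: bool
    matched: list[str]


def evaluate_paste(text: str, keywords=SENSITIVE_KEYWORDS) -> PasteDecision:
    """Disallow the paste if any keyword appears as a whole word (case-insensitive)."""
    matched = [
        kw for kw in keywords
        if re.search(rf"\b{re.escape(kw)}\b", text, flags=re.IGNORECASE)
    ]
    return PasteDecision(allowed=not matched, matched=matched)
```

Calling `evaluate_paste("Project Obsidian launch plan")` returns a decision with `allowed` set to `False` and the matched keyword listed, which is the kind of information a block message could surface to the user.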

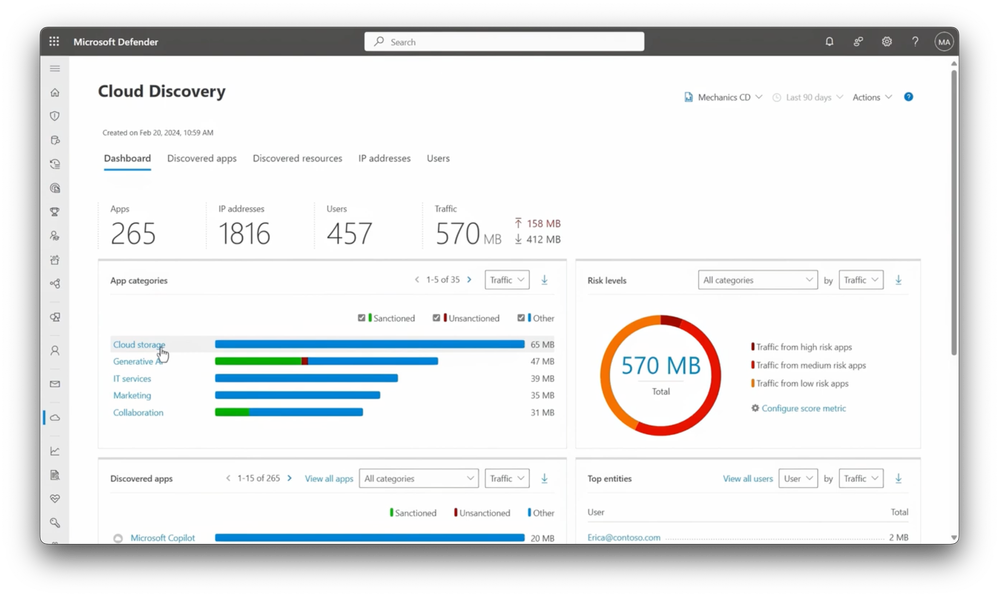

-So let’s switch gears to the admin perspective to see what you can do to find generative AI apps in use. To get started, you’ll run Cloud discovery in Microsoft Defender for Cloud Apps. It’s a process that can parse network traffic logs from most major providers to discover and analyze apps in use. Once you’ve uploaded your network logs, analysis can take up to 24 hours. That process parses the traffic from your network logs and brings it together with Microsoft’s intelligent and continuously updated knowledge base of cloud apps.
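To make the idea of matching traffic logs against an app knowledge base more concrete, here is a small Python sketch. It is only an approximation of the concept: the CSV log layout, the `APP_CATALOG` dictionary, and the `discover_apps` function are assumptions made up for this example and do not reflect the actual log formats or catalog used by Defender for Cloud Apps.

```python
import csv
import io
from collections import Counter

# Hypothetical stand-in for a cloud app knowledge base: host -> (app, category).
APP_CATALOG = {
    "chat.openai.com": ("ChatGPT", "Generative AI"),
    "gemini.google.com": ("Google Gemini", "Generative AI"),
    "www.dropbox.com": ("Dropbox", "Cloud storage"),
}

# Hypothetical log format; real firewall and proxy logs vary by vendor.
SAMPLE_LOG = """timestamp,user,host,bytes
2024-05-01T09:00:00Z,alice,chat.openai.com,1200
2024-05-01T09:05:00Z,bob,chat.openai.com,800
2024-05-01T09:10:00Z,alice,gemini.google.com,500
2024-05-01T09:15:00Z,carol,intranet.example.com,9000
"""


def discover_apps(log_text, catalog=APP_CATALOG):
    """Aggregate traffic and distinct users per known app, busiest app first."""
    traffic = Counter()
    users = {}
    for row in csv.DictReader(io.StringIO(log_text)):
        entry = catalog.get(row["host"].lower())
        if entry is None:
            continue  # host is not in the catalog, so it is not reported
        traffic[entry] += int(row["bytes"])
        users.setdefault(entry, set()).add(row["user"])
    return [
        {"app": app, "category": cat, "bytes": total, "users": len(users[(app, cat)])}
        for (app, cat), total in traffic.most_common()
    ]
```

Running `discover_apps(SAMPLE_LOG)` yields one entry per recognized app, sorted by traffic, which loosely mirrors the "discovered apps with the most traffic" view in the reports.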

-The reports from your cloud discovery show you app categories, risk levels of visited apps, discovered apps with the most traffic, and top entities, which can be users or IPs, along with where various app headquarters are located in the world. A lot of this information is easily filtered, and there are links into categories, apps, and subreports. In fact, I’ll click into generative AI here to filter on those discovered apps and find out which apps people are using.

-From here, you can manually sanction or unsanction apps from the list, and you can create policies to automatically unsanction and block risky apps as they’re added to this category based on continuously updated risk assessments, so that you don’t need to keep returning to the policy to manually add apps. Next, to protect high-value sensitive information, that’s where Microsoft Purview comes in.
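The automatic unsanctioning policy mentioned above can be pictured with a short Python sketch. This is a conceptual model only: the `DiscoveredApp` type, the `apply_policy` function, the status strings, and the 0 to 10 score where a lower number means higher risk are all assumptions for illustration, not the Defender for Cloud Apps policy engine or its API.

```python
from dataclasses import dataclass


@dataclass
class DiscoveredApp:
    name: str
    category: str
    risk_score: int  # assumed 0-10 scale; lower means riskier
    status: str = "unreviewed"


def apply_policy(apps, category="Generative AI", min_score=5):
    """Mark apps in the category that score below the threshold as unsanctioned.

    Apps an admin has already sanctioned are left alone. Returns the names
    of the apps that this pass unsanctioned.
    """
    newly_blocked = []
    for app in apps:
        if (
            app.category == category
            and app.risk_score < min_score
            and app.status != "sanctioned"
        ):
            app.status = "unsanctioned"
            newly_blocked.append(app.name)
    return newly_blocked
```

Because the rule is keyed on category and score instead of a fixed list of app names, re-running it as new apps are discovered covers them without anyone editing the policy, which is the "set it and forget it" behavior described in the video.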

-And now with the new AI Hub, it can even show you where sensitive information is used with AI apps. AI Hub gives you a holistic view of data security risks in Microsoft Copilot and in other generative AI assistants in use. It provides insights about the number of prompts sent to Microsoft Copilot experiences over time and the number of visits to other AI assistants. Below that is where you can see the total number of prompts with sensitive data across AI assistants used in your organization, and you can also see the sensitive information types being shared.

-Additionally, there are charts that break down the number of users accessing AI apps by insider risk severity level, including Microsoft Copilot as well as other AI assistants in use. Insider risk severity levels for users reflect potentially risky activities and are calculated by insider risk management in Microsoft Purview. Next, in the Activity Explorer, you’ll find a detailed view of the interactions with AI assistants, along with information about the sensitive information type, content labels, and file names. You can drill into each activity for more information, with details about the sensitive information that was added to the prompt.

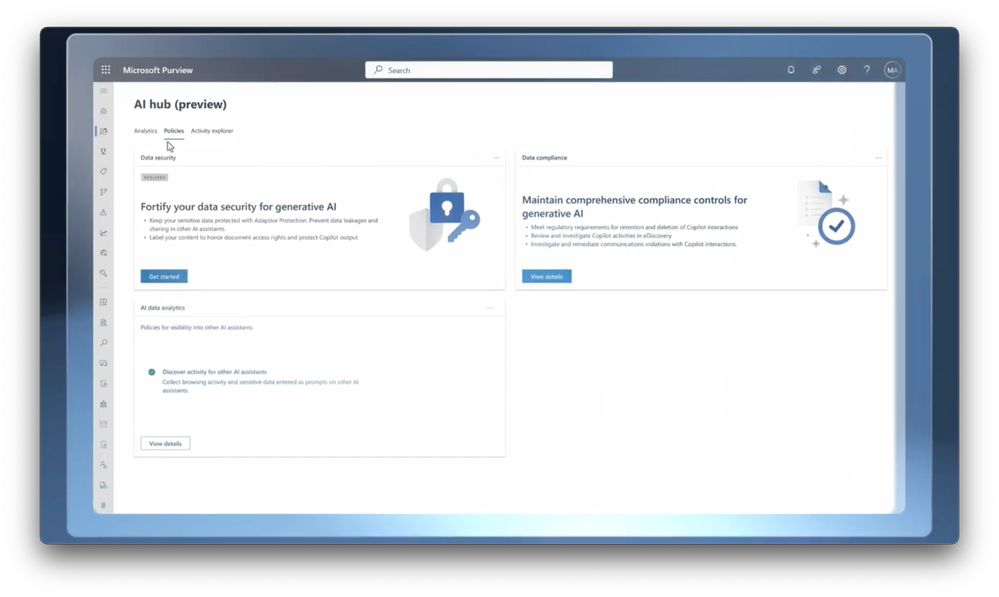

-All of this detail is super useful because it can help you fine-tune your policies further. In fact, let’s take a look at how simple it is to set up policies. From the policies tab, you can easily create policies to get started. I’ll choose the “fortify your data security for generative AI” policy template. It’s designed to protect against unwanted content sharing with AI assistants.

-You’ll see that this sets up built-in risk levels for Adaptive Protection. It also creates data loss prevention policies to prevent pasting or uploading sensitive information by users with an elevated risk level. This is initially configured in test mode, but as I’ll show, you can edit this later. And if you don’t have labels already set up, default labels for content classification will be set up for you so that you can preserve document access rights in Copilot for Microsoft 365.
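One way to picture how a user's risk level, content sensitivity, and the policy mode combine is the following Python sketch. It is a simplified model under stated assumptions: the `RiskLevel` enum, the `dlp_action` function, and the mode strings `"test"` and `"on"` are hypothetical, and real Adaptive Protection and DLP policies have many more conditions and actions.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    # Assumed ordering of insider risk levels for this sketch.
    MINOR = 1
    MODERATE = 2
    ELEVATED = 3


def dlp_action(contains_sensitive: bool, user_risk: RiskLevel, mode: str = "test") -> str:
    """Decide what happens when a user pastes or uploads content to an AI app.

    Only sensitive content from elevated-risk users matches the rule.
    In test mode a match is audited only; once the policy is on, it is blocked.
    """
    if not contains_sensitive or user_risk < RiskLevel.ELEVATED:
        return "allow"
    return "block" if mode == "on" else "audit"
```

For example, `dlp_action(True, RiskLevel.ELEVATED)` returns `"audit"` while the policy is still in test mode, and the same call with `mode="on"` returns `"block"`.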

-After you review the details, it’s just one click to create these policies. And as I mentioned, these policies are also editable once they’ve been configured, so you can tailor them to your needs. I’m in the DLP policy view and here’s the policy we just created in AI Hub. I’ll select it and edit the policy. To save time, I’ve gone directly to the advanced rules option, and I’ll edit the first one.

-Now, I’ll add the sensitive info type we saw before. I’ll search for Obsidian, select it, and add. Now, if I save my changes, I can move to policy mode. Currently I’m in test mode, and when I’m comfortable with my configurations, I can select “turn the policy on immediately,” and within an hour the policy will be enforced. And for more information about data loss prevention policy options, check out our recent episode at aka.ms/DLPMechanics.

-So that’s what AI Hub in Microsoft Purview can do. And if you’re wondering how to set it up for the first time, the good news is that when you open AI Hub, once you have audit enabled, and if you have Copilot for Microsoft 365, you’ll already start to see analytics insights populated. Otherwise, once you turn on Microsoft Purview audit, it takes up to 24 hours to initiate.

-Then you’ll want to install the Microsoft Purview browser extension to detect risky user activity and get insights into user interactions with other AI assistants, and onboard devices to Microsoft Purview to take advantage of endpoint DLP capabilities to protect sensitive data from being shared. So as I demonstrated today, the combination of Microsoft Defender for Cloud Apps and Microsoft Purview gives you the visibility you need to detect risky AI apps in use with your sensitive data and enforce automated policy protections.

-To learn more about implementing Microsoft Defender for Cloud Apps, go to aka.ms/MDA. To learn more about implementing Microsoft Purview capabilities for AI, go to aka.ms/PurviewAI/docs. And for a deeper dive on Copilot for Microsoft 365 protections, check out our recent episode at aka.ms/CopilotAdminMechanics. Of course, keep watching Microsoft Mechanics for the latest tech updates, and thanks for watching.