Video Transcript:

- What does it mean to do data analytics in the era of generative AI? Well, today, we’re going to go deeper on Microsoft Fabric, our next generation managed data and analytics service, and the role it plays in ensuring you have access to quality data wherever it resides. We’ll show you updates to making your data more accessible with new data mirroring capabilities in Microsoft Fabric, and we’ll tour new AI-powered Copilot experiences in Microsoft Fabric from creating predictive models to generating Power BI reports and more. And joining me today from the engineering team is Nellie Gustafsson. Welcome to Mechanics, and also congrats on the general availability of Microsoft Fabric.

- Well, thank you, Jeremy. You know, last time we were on the show, we had just released the public preview of Fabric, and I’m very excited to be here today and talk about the Fabric GA.

- I’m really looking forward to it. It’s really timely, because both generative AI and analytics are dependent on the quality of your data, but setting up a good foundation really gets complicated if you have data sitting across different locations and also different Clouds. So how does Microsoft Fabric improve things?

- Well, we’re making your data accessible wherever it lives, across your entire data estate, without you having to integrate different sources or work across multiple toolsets. In fact, it’s a single, fully managed service that helps you derive quality data from raw fragmented data by using built-in capabilities for data integration, data engineering, and data warehousing, as well as for building data science models, real-time analytics, business intelligence, and real-time monitoring and alerts to trigger actions when your data changes. OneLake is at the foundation of these experiences. It’s one of the first true multicloud data lakes, which uses the open Delta format, so that you can keep your data where it lives and work with it. And compute for your workloads can be provisioned on demand to support everything from the smallest self-service reporting instance to the largest petabyte-scale big data analytics jobs.

- And so this makes data integration a lot less painful. It’s a SaaS experience, and you can work with the data while it’s in place, meaning you don’t have to move it and there’s no dependency on having to get compute resources set up. Now, lots of obstacles are going to go away to work with your data and prep it for analytics as you use things like generative AI too, right?

- That’s absolutely right, and as we showed you last time, it’s a very simple experience to get started. You don’t have to start in the Azure portal, or configure any Azure resources. In fact, you don’t even need an Azure subscription. From fabric.microsoft.com, you can just use your org ID for logging into Power BI or Microsoft 365 to get started. Then whether you are a data engineer building pipelines, a data scientist building predictive models, or a data analyst or business user using Power BI, your experience is tailored to your role. So, it’s one unified platform, and a single source of truth for everyone to access and work with the data.

- And, like you said, data accessibility is really core to the experience for Microsoft Fabric, and one of the most powerful ways to bring data into Fabric that we covered on the last show is actually by using shortcuts. Now, these use the Delta format to create virtual files of your data sitting in Azure storage accounts or in other clouds, and, most recently, we’ve added Dataverse for your Dynamics 365 and Power Platform data as well, and you can instantly link them into OneLake so that they’re ready to view and query without any duplication or movement, which is all powerful in itself, and now with the GA, it gets even better in terms of data accessibility, right?

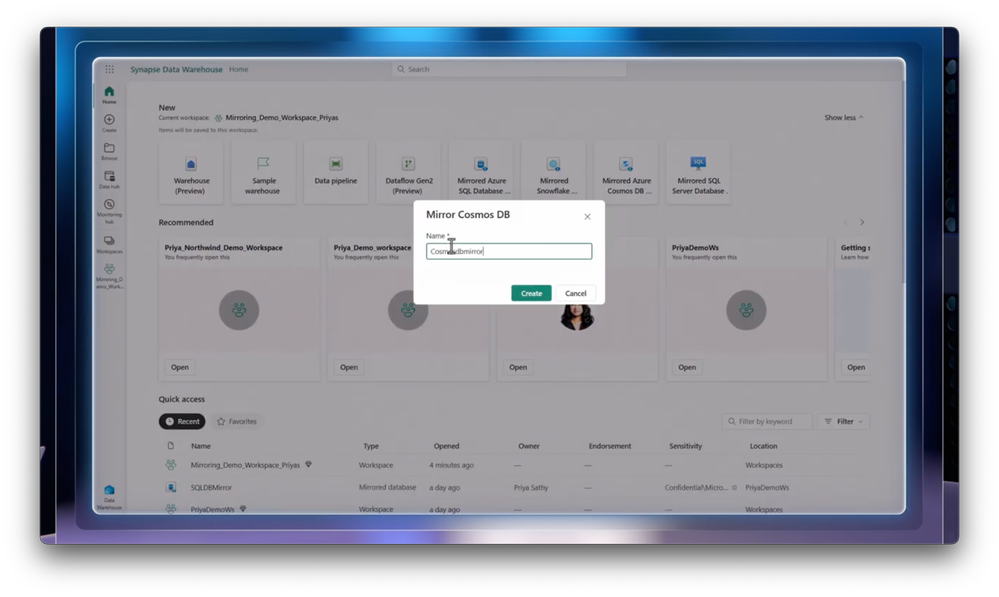

- It does, and, you know, shortcuts are great if you’re working with data in an open format, and for relational data, in less open or proprietary formats, we’re actually introducing a new option called mirroring, and this lets you create a read-only, real-time replica of your data in the open Delta format and there’s no ETL involved. Let me show you. So, here, I am in the Fabric data warehouse home page, and I can see options to mirror my data from various sources like Azure Cosmos DB, Azure SQL DB, and Snowflake. I have a variety of transactional applications in my data estate. My sales transactional data sits in Cosmos DB, and my parts supply inventory has been shared by a supplier and sits in Snowflake. Additionally, I have a CRM application built on Azure SQL DB. With mirroring, I can manage my entire data estate in one place without ever leaving Fabric, and without any ETL, we can land and access data in a unified data format that’s ready for analytics. So, let’s start mirroring the Cosmos DB data in Fabric. I’ll click on the tile, and give it a name, and that’s it. Again, I didn’t have to provision or set up any resources. It’s auto-provisioned. Here, we can pick an existing connection to Cosmos DB and pick the database with the transactions. Clicking on Mirror Database is going to initiate the mirroring process behind the scenes to replicate the Cosmos DB data to the open Delta format. I land in a Cosmos DB editor in Fabric where we can directly view all those containers that we have access to, and all the items within them. From right within this editor, we can insert or upload new items without ever leaving Fabric. Any transaction that’s possible in Azure is possible here. I can start writing queries and view the transactional data. Now, to verify that I have just updated Azure Cosmos DB, I’m going to run the exact same query in the Azure portal and compare the results. Mirrored databases automatically come with SQL analytics endpoints that enable us to have a unified warehouse and BI reporting experience over the data. And the best part is this gives me real-time access to changes in the source database.

- Okay, so now that your data is mirrored, what does the querying experience look like?

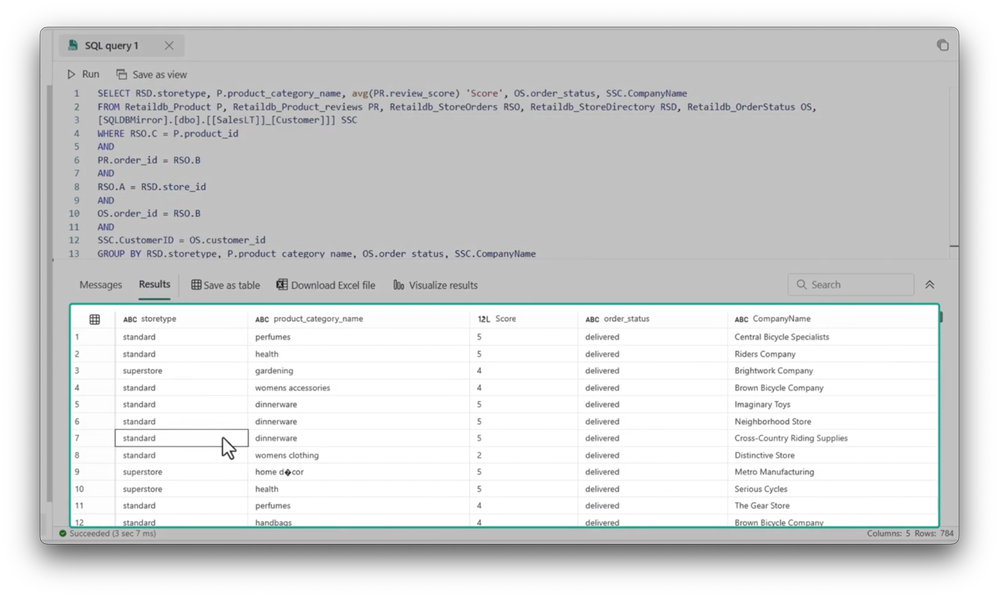

- Well, I can now join across my mirrored data sources, the Cosmos DB, the Azure SQL DB, and the Snowflake database. Let’s take a look. Here’s my workspace with all the mirrored data sources I need for analysis, and I can use Fabric’s warehouse capabilities to create a virtual warehouse. I’ll open my SQL analytics endpoint and I’ll go ahead and select and add my mirrored databases, and the databases will show up in my object explorer. There’s one for the SQL DB mirror and one for the Snowflake mirror, and within each database, I can also see all my tables. Next, I can use the T-SQL editor to write a query that’s going to join across all my sources, my Cosmos DB, Azure SQL DB and Snowflake database, and, here, we can see the results from the join. Fabric also enables me to easily share my mirrored database or the SQL analytics endpoint with other users in the organization. So, now, our BI analyst, for example, can tap into this data for analysis, and because the data is mirrored, they can also get real-time insights from the data.
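
The query from the demo is not shown in the transcript, so the following is only a rough sketch of what a join across three separate databases looks like. It uses Python's built-in `sqlite3` module with attached in-memory databases as a stand-in for the SQL analytics endpoint, not Fabric itself. The database names (`cosmos_mirror`, `sql_mirror`, `snowflake_mirror`), the tables, the columns, and the sample rows are all hypothetical.

```python
import sqlite3

# Stand-in for the SQL analytics endpoint: one connection with three attached
# databases playing the role of the three mirrored sources (all hypothetical).
conn = sqlite3.connect(":memory:")
for name in ("cosmos_mirror", "sql_mirror", "snowflake_mirror"):
    conn.execute(f"ATTACH DATABASE ':memory:' AS {name}")

# Hypothetical schemas: sales transactions, CRM customers, supplier parts.
conn.execute(
    "CREATE TABLE cosmos_mirror.sales "
    "(order_id INTEGER, customer_id INTEGER, part_id INTEGER, quantity INTEGER)"
)
conn.execute("CREATE TABLE sql_mirror.customers (customer_id INTEGER, name TEXT)")
conn.execute(
    "CREATE TABLE snowflake_mirror.parts "
    "(part_id INTEGER, part_name TEXT, in_stock INTEGER)"
)

# Made-up sample rows, purely for illustration.
conn.executemany(
    "INSERT INTO cosmos_mirror.sales VALUES (?, ?, ?, ?)",
    [(1, 10, 100, 2), (2, 11, 101, 5)],
)
conn.executemany(
    "INSERT INTO sql_mirror.customers VALUES (?, ?)",
    [(10, "Contoso"), (11, "Fabrikam")],
)
conn.executemany(
    "INSERT INTO snowflake_mirror.parts VALUES (?, ?, ?)",
    [(100, "Gear", 40), (101, "Bolt", 0)],
)

# A single query that joins tables living in three different databases.
query = """
SELECT s.order_id, c.name, p.part_name, s.quantity, p.in_stock
FROM cosmos_mirror.sales AS s
JOIN sql_mirror.customers AS c ON c.customer_id = s.customer_id
JOIN snowflake_mirror.parts AS p ON p.part_id = s.part_id
ORDER BY s.order_id
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

The point of the sketch is only the shape of the query: each table is addressed by the database it lives in, and the join itself reads like any other join.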

- And this is really a game-changing concept, that you’ve unlocked data that is typically inaccessible without ETL conversion and made it accessible for querying in this case. Now, both mirroring and shortcuts are two unique ways to bring data into Fabric without moving it and without performing ETL, but what if you do still prefer the traditional way of using things like data pipelines?

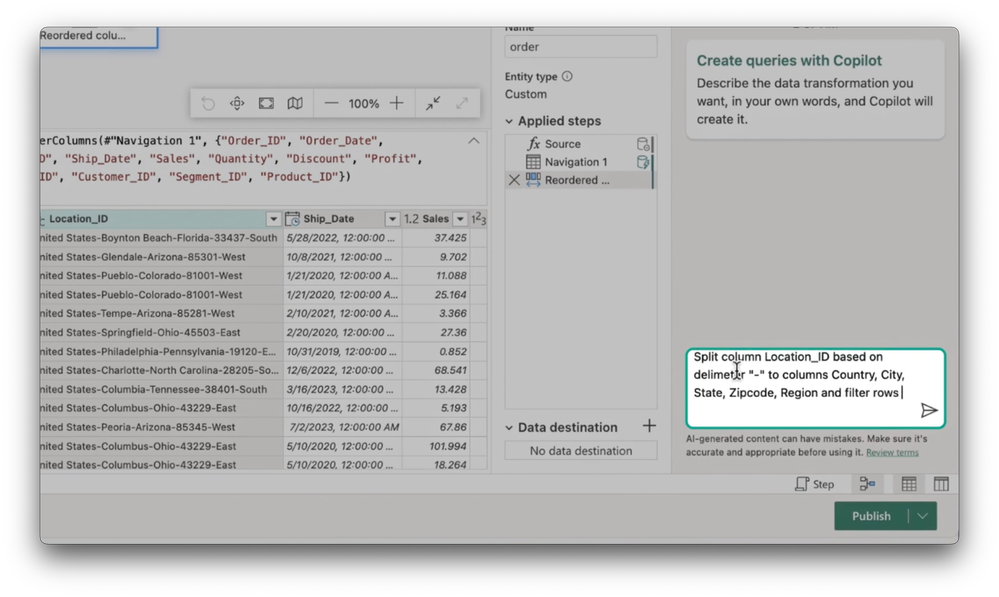

- Well, we’ve made that easier too with Copilot in Microsoft Fabric. This will be part of our Copilot for data factory experience that’s coming soon, so even if you don’t know how to code, you’ll be able to instruct generative AI to perform tasks like extracting specific data from various sources to speed up data prep and integration. With that said, an experience I can show you today is how to prepare and transform your data using Copilot for data flows in Fabric. Here, I have a data flow, with a source query that’s listing sales orders, and I’m going to split the location_id column, which contains country, city, state, area code, and region, into different columns, and filter the sales data on 2023 only. Notice here that Copilot generates a multi-step query, and I can quickly verify the query and preview the data, and, now, using data destinations in data flows, I can load the transformed data into the Lakehouse for further analysis and reporting.
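
The multi-step query that Copilot generates is not shown in the transcript. As a rough illustration of the two transformations described (splitting `location_id` into separate columns, then keeping only 2023 orders), here is a minimal plain-Python sketch. The `|` delimiter, the `order_date` and `order_id` field names, the output column names, and the sample rows are assumptions made for this example.

```python
from datetime import date

# Assumed order of the parts packed into location_id (taken from the
# description: country, city, state, area code, region).
LOCATION_FIELDS = ["country", "city", "state", "area_code", "region"]


def split_location(row, delimiter="|"):
    """Return a copy of row with location_id replaced by one column per part."""
    parts = row["location_id"].split(delimiter)
    if len(parts) != len(LOCATION_FIELDS):
        raise ValueError(f"unexpected location_id: {row['location_id']!r}")
    new_row = {key: value for key, value in row.items() if key != "location_id"}
    new_row.update(zip(LOCATION_FIELDS, parts))
    return new_row


def transform(rows, year=2023):
    """Step 1: split the location column. Step 2: keep only the given year."""
    split_rows = [split_location(row) for row in rows]
    return [row for row in split_rows if row["order_date"].year == year]


# Made-up sample orders, purely for illustration.
orders = [
    {"order_id": 1, "order_date": date(2022, 12, 30),
     "location_id": "US|Seattle|WA|206|West"},
    {"order_id": 2, "order_date": date(2023, 3, 14),
     "location_id": "US|Austin|TX|512|South"},
]

result = transform(orders)
for row in result:
    print(row)
```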

- It’s really great to see just how much Copilot is semantically aware and actually works within the context of your data.

- It is, and everywhere in Microsoft Fabric, you’re going to start seeing Copilot embedded into the experiences. Now, with access to the data that I need in place, let me show you how you can use Copilot to explore and enrich the data and even build a machine learning model for predictive insights. Here I am in a Fabric data science experience using a notebook, and you’ll see that I already have my sales Lakehouse attached and have read the data from one of the tables into a Spark dataframe. I’ll convert the dataframe into a Pandas dataframe, since that’s going to help me explore the data. Now, I’m going to open up the Copilot pane and choose to get started. We’ll start with one of the proposed prompt options, to suggest data visualizations, then specify the data source we want. In this case, the name of my dataframe. I’ll hit run. So Copilot is actually aware of the data in the Lakehouse as well as dataframes in my session, and it will now generate relevant recommendations for data visualizations. Now, let’s add the code into our notebook, and when I run the cell, it’s going to give me a chart with the total sales broken down per product category. Next, I can choose one of the suggested prompts to help me understand trends in total sales over time, and, again, Copilot returns explanations and code. I’ll repeat the steps, and now I get a chart showing total sales by month with some statistical information about my data. Copilot can also work directly in the context of a notebook cell, using %%code. Here, I’m asking Copilot to build me a machine learning model to forecast the total sales. This time, it generates a new code cell below. This code creates a simple linear regression model. I’ll run the cell, and now I have a machine learning model. In this way, I can continue to interact with Copilot to complete my tasks, and as a last step, I’m going to ask Copilot to save the forecasted sales values back to OneLake. Now, if I expand my table here, there’s our sale_forecast table, and, by the way, just by loading the table into the Lakehouse, it automatically makes it available for Power BI reporting, using something we call Direct Lake mode without any additional steps.
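
The code Copilot generated in the demo is not reproduced in the transcript. To give a feel for what "a simple linear regression model to forecast the total sales" amounts to, here is a minimal sketch in plain Python using ordinary least squares. The monthly figures, the `fit_line` helper, and the output field names are made up for this example. In a Fabric notebook you would more likely work with the Pandas dataframe and a machine learning library, and write the result to the Lakehouse instead of printing it.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up monthly totals (month index 1..6), purely for illustration.
months = [1, 2, 3, 4, 5, 6]
monthly_sales = [100.0, 110.0, 120.0, 130.0, 140.0, 150.0]

slope, intercept = fit_line(months, monthly_sales)

# Forecast the next three months with the fitted line.
forecast = [
    {"month": month, "forecast_sales": slope * month + intercept}
    for month in range(7, 10)
]
for row in forecast:
    print(row)
```

A straight-line fit like this only captures an overall trend. It ignores seasonality, which matters for most real sales data.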

- Okay, so, now, can you use Copilot to help generate those Power BI reports?

- It actually makes creation of beautiful reports a lot easier using natural language, which means that, even without having built the semantic data model myself, I can get insights about the data right away. Let’s take a look. Now, I’m in the Power BI experience, and you can see Copilot is showing some prompt options for me to get started. I can use these suggestions or create my own prompt and have the report page automatically generated. In this case, I’ll choose the second option, suggest content for this report, and you’ll see that it suggests an outline and a few other options for my report. I’ll paste in my own prompt to create a page looking at the revenue won, forecast by products, campaigns, and industries, and when I run that, it takes a moment, and creates a detailed visual report with everything I asked for. From here, I can modify the report. For example, I can remove this tile. I can make this one bigger, showing revenue by industry, and, now, I can return to the Copilot sidebar to get some help to interpret these visuals. For example, I’ll paste in a prompt, asking, “Which product category has the most revenue won?” And you’ll see that it outputs details consistent with what you see in the revenue won by product category tile. It even provides a citation in the response that, when I select it, highlights the corresponding chart in my report.

- So, it’s really great to see Microsoft Fabric’s role not just for analytics, but also in advancing the experience with generative AI. So, how can people learn more?

- Well, Microsoft Fabric is generally available now, so to learn more, just go to aka.ms/fabric and you can discover lots of information and resources and even sign up for a free trial.

- Thanks, Nellie, for joining us today and also sharing all the updates to Microsoft Fabric, and, of course, to stay up to date with all the latest tech, be sure to subscribe to Microsoft Mechanics, and, as always, thank you for watching.