Video Transcript:

- By now, you’ve probably experimented with generative AI like GPT, which generates responses based on the model’s training data. Of course, as you build your own Copilot-style apps, you can augment its knowledge by grounding it with additional enterprise data. The trick is achieving this efficiently, with quality, and at scale. Today, we unpack the secret with a closer look at vector representations, which you can combine with other approaches to improve the information retrieval process so that you have the optimal set of grounding data to generate the most useful AI responses. We’ll also show how Azure Search changes the game for retrieval augmented generation by combining different search strategies out of the box and at scale, so you don’t have to. Joining me again as the foremost expert on the topic is distinguished engineer Pablo Castro. Welcome back.

- It’s great to be back. There’s a lot going on in this space so we have a lot to share today.

- It’s really great to have you back on the show. As I mentioned, top of mind for most people building Copilot-style apps is how to generate high-quality AI responses based on their own application or organizational data. So how do we think about solving for this?

- So let’s start by looking at how large language models are used in conjunction with external data. At the end of the day, the model only knows what it’s been trained on. It relies on the prompt and the grounding data we include with it to generate a meaningful response. So a key element of improving quality is improving the relevance of the grounding data.

- And for retrieval, if you don’t get it right, it’s a bit like garbage in, garbage out. So logically we need a step that decides what specific information to retrieve and then presents it to the large language model.
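To make the retrieve-then-generate step concrete, here is a minimal Python sketch of how retrieved passages might be packed into a prompt before it is sent to the model. The function name `build_grounded_prompt`, the instruction wording, and the character budget (a rough stand-in for a real token limit) are hypothetical choices for illustration, not part of Azure Search or any specific library.

```python
from typing import List, Sequence, Tuple


def build_grounded_prompt(
    question: str,
    passages: Sequence[Tuple[str, str]],
    max_chars: int = 4000,
) -> str:
    """Hypothetical helper: assemble a prompt grounded in retrieved passages.

    `passages` is a list of (source_id, text) pairs, best match first.
    Passages are added in order until the character budget would be exceeded.
    """
    lines: List[str] = []
    used = 0
    for source_id, text in passages:
        entry = f"[{source_id}] {text.strip()}"
        if used + len(entry) > max_chars:
            break  # Stop before overflowing the (approximate) context budget.
        lines.append(entry)
        used += len(entry)
    sources = "\n".join(lines)
    return (
        "Answer the question using only the sources below. "
        "Cite the source ID in brackets for each fact. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


print(build_grounded_prompt(
    "Does Perks Plus cover scuba lessons?",
    [("benefits-12", "Perks Plus can be used for scuba diving lessons.")],
))
```

Because the passages are added best-first, retrieval quality directly decides what survives the budget, which is why the ranking steps discussed next matter.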

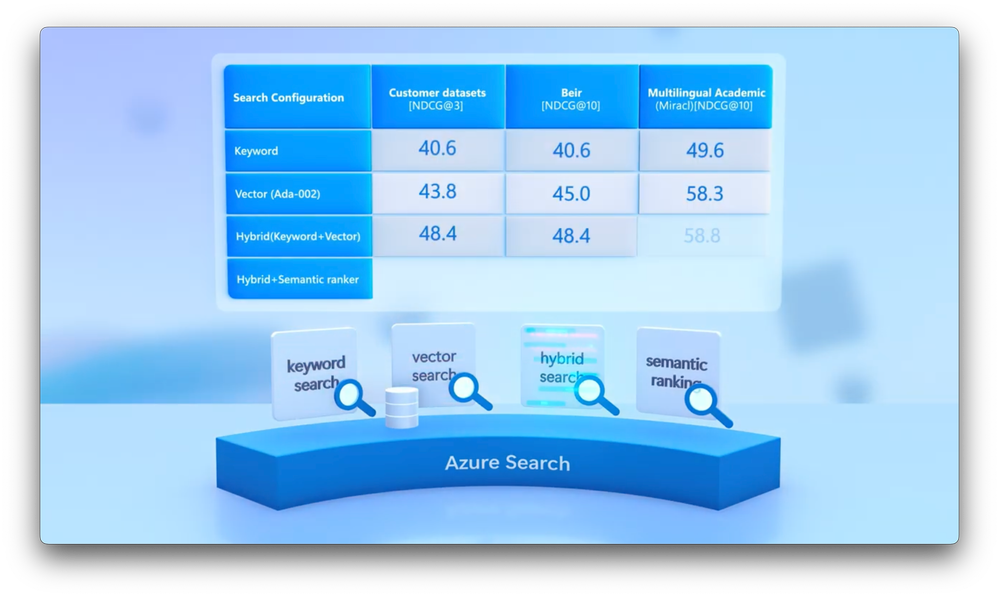

- We do, and this is key to improving the quality of generative AI outputs. The retrieval system can use different methods to retrieve information to add to your prompts. The first and best understood is keyword search, where we match the exact words of the query against your grounding data. Second, a method that has become very popular is vector search, which instead focuses on conceptual similarity. This is particularly useful for generative AI scenarios where the user is not entering a search-like set of keywords, but instead the app is using part of the dialogue to retrieve grounding information. Then there may be cases where you need both keyword and vector search. Here, we can take a hybrid approach with a fusion step that combines them to get the best of both worlds. These techniques focus on recall, meaning they make sure we find all the candidates that should be considered. Then, to boost precision, a re-ranking step can re-score the top results using a larger deep learning ranking model. For Azure Search, we invested heavily across all these components to offer great pure vector search support, as well as an easy way to further refine results with hybrid retrieval and state-of-the-art re-ranking models.

- And I’ve heard that vector search is really popular for Copilot apps. Why don’t we start there with why you’d even use vectors?

- Sure. The problem is establishing the degree of similarity between items like sentences or images. We accomplish this by learning an embedding model, or encoder, that maps these items into vectors such that vectors that are close together represent similar things. This sounds abstract, so let me show you what it looks like. If you don’t want to start from scratch, there are prebuilt embedding models that you can use. Here, I’m using the OpenAI embeddings API. I have a convenient wrapper function from the OpenAI Python library to get the embeddings, so let’s try it out and pass some text to it. As you can see, I get a long list of numbers back. With the text-embedding-ada-002 model, you get 1,536 numbers back, which is the vector representation of my text input.
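A minimal sketch of such a wrapper, assuming the current `openai` Python package (version 1.x), where embeddings are requested with `client.embeddings.create(...)`. Older versions of the library shipped their own `get_embedding` helper; this sketch defines a hypothetical function of the same name that takes the client as a parameter, so it can be exercised without network access.

```python
from typing import Any, List

# Model named in the demo; text-embedding-ada-002 returns 1,536-dimensional vectors.
EMBEDDING_MODEL = "text-embedding-ada-002"


def get_embedding(client: Any, text: str, model: str = EMBEDDING_MODEL) -> List[float]:
    """Hypothetical wrapper: return the embedding vector for `text`.

    `client` is expected to be an `openai.OpenAI` instance (openai>=1.0),
    or any object exposing the same `embeddings.create(input=..., model=...)` method.
    """
    response = client.embeddings.create(input=[text], model=model)
    # One input string -> one embedding in response.data.
    return response.data[0].embedding


# Usage (requires the `openai` package and an OPENAI_API_KEY environment variable):
# from openai import OpenAI
# vector = get_embedding(OpenAI(), "What does the Perks Plus program cover?")
# print(len(vector))  # 1536 for text-embedding-ada-002
```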

- And these numbers kind of remind me of GPS coordinates in a way.

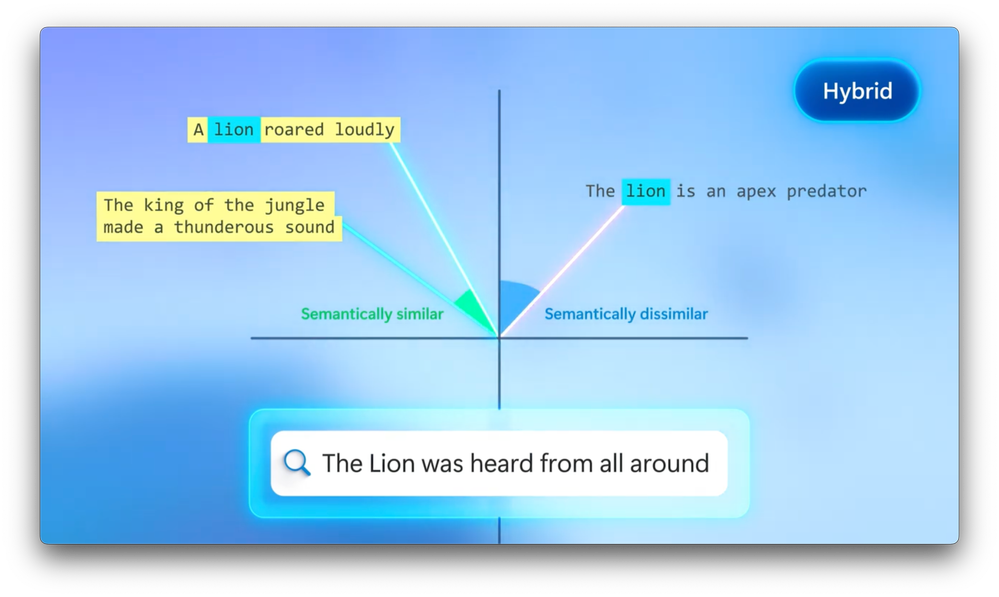

- Yeah, it’s a good way to think about them, but they are higher dimensional than latitude and longitude. They often have hundreds of dimensions or more. Now, the idea is that we can compute distance metrics between these vectors, so let me show you. I have two lists of sentences, and for each one, we’ll call the get embedding method to calculate a vector. Then we compute their similarity using cosine similarity, which is one possible metric that you can use. Think of cosine similarity as using the angle that separates two vectors as a measure of similarity. So I’m going to run this, and the results display the cosine similarity for each pair. You can see that where sentences are unrelated, we get a lower score. If the sentences are related, like in this case with different words but roughly the same meaning, then you get a higher score. And here, because we are comparing the same sentence, the similarity is at its highest, which is one. So this entire approach does not depend on how things are written. It focuses on meaning, which makes it much more robust to the different ways things can be written.
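Cosine similarity is the dot product of two vectors divided by the product of their lengths, i.e. the cosine of the angle between them: 1 for vectors pointing the same way, 0 for orthogonal vectors, and -1 for opposite directions. A minimal, dependency-free Python sketch follows; the toy 3-dimensional vectors are hypothetical, not real embeddings.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between a and b: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors:
print(cosine_similarity([1, 2, 3], [1, 2, 3]))  # same vector -> approximately 1.0
print(cosine_similarity([1, 2, 3], [2, 4, 6]))  # same direction, different length -> approximately 1.0
print(cosine_similarity([1, 0, 0], [0, 1, 0]))  # orthogonal -> 0.0
```

Only direction matters, not length. OpenAI embeddings are normalized to length 1, so for them cosine similarity reduces to a plain dot product; the explicit normalization here keeps the function correct for arbitrary vectors.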

- So you’re mapping concepts into a space to see how similar they are. But how would something like this work on a much larger data set, with hundreds of thousands or millions of data points and documents? How would it scale?

- Well, you can’t keep all these embedding vectors in memory. What we really want is a system where you can store a large number of vectors and then retrieve them when you need them, and that’s the job of a vector database. This often requires chunking the information into small passages and encoding them into vectors. Then we take the vectors and index them in a vector database. Chunking is typically a manual effort today, though we’ll soon help you automate that. Once the vector database is populated, at retrieval time the query text itself is converted into a query vector and sent to the database to quickly find indexed vectors that are close to it. Let me show you how this works in practice. In this case, we’re going to use Azure Cognitive Search, and we’ll start from scratch. I’m going to use the Azure Search Python library. The first thing we’ll do is create a super simple vector index with just an ID and a vector field. Let’s run this, and now we’ll index some documents. As I mentioned, vectors can have hundreds or even thousands of dimensions. Here I’m using just three so we can actually see what’s going on. Here are my three documents. Each has a unique ID and a three-dimensional vector, so let’s index them. Now that the data is indexed, we can search. I’ll search without text and just use the vector [1, 2, 3] for the query, which corresponds to the first document. When I run this query, you can see our document with ID 1 is the top result. Its vector similarity is almost one. Now, if I move this vector by swapping one of the numbers, it changes the order and the cosine similarities of the results, so now document 3 is the most similar.
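Conceptually, a vector index stores (ID, vector) pairs and returns the k stored vectors closest to a query vector. The sketch below mimics the toy three-dimensional example with exact, brute-force cosine search in plain Python. It is not the Azure Search Python library: the class name `InMemoryVectorIndex` and the vectors for documents 2 and 3 are hypothetical, and production vector databases typically use approximate nearest-neighbor structures such as HNSW instead of scoring every stored vector.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class InMemoryVectorIndex:
    """Hypothetical toy index: exact (brute-force) nearest-neighbor search."""

    def __init__(self, dimensions: int) -> None:
        self.dimensions = dimensions
        self._vectors: Dict[str, List[float]] = {}

    def upsert(self, doc_id: str, vector: Sequence[float]) -> None:
        if len(vector) != self.dimensions:
            raise ValueError(f"expected {self.dimensions} dimensions, got {len(vector)}")
        self._vectors[doc_id] = list(vector)

    def search(self, query: Sequence[float], k: int = 3) -> List[Tuple[str, float]]:
        # Score every stored vector against the query, then keep the k most similar.
        scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in self._vectors.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


index = InMemoryVectorIndex(dimensions=3)
# Hypothetical vectors; only document "1" matches the query [1, 2, 3] exactly.
index.upsert("1", [1, 2, 3])
index.upsert("2", [3, 2, 1])
index.upsert("3", [1, 3, 2])

print(index.search([1, 2, 3]))  # order: "1", "3", "2"; document "1" scores almost 1
print(index.search([1, 3, 2]))  # swapping two numbers: order becomes "3", "1", "2"
```

Brute force costs one similarity computation per stored vector per query, which is why it stops being practical at millions of chunks; approximate indexes trade a little recall for much faster lookups.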

- This is a nice, simple example of a numerical representation of the distance between vectors. So what if I wanted to apply this to real data and text? How would I do that to be able to calculate their similarities?

- Well, I wanted to start simple, but of course, this approach works with a larger knowledge base, and I’ll show you using one that I’ve already indexed. This is the employee benefits handbook for Contoso, and I want to search for something challenging, like learning about underwater activities. This is challenging because the question itself is generic and the answer is probably going to be very specific. So when I run the query, we see results about different lessons covered by the Perks Plus program, including things like scuba diving lessons, which is related to underwater activities. The second document describes how Perks Plus is not just about health, but also includes other activities. The last three results are less relevant. To be clear, keyword queries wouldn’t return results like these because the words in the query don’t match.

- And none of these documents actually matched your query words. As large language models become increasingly multimodal, the question is: will this work for images too?

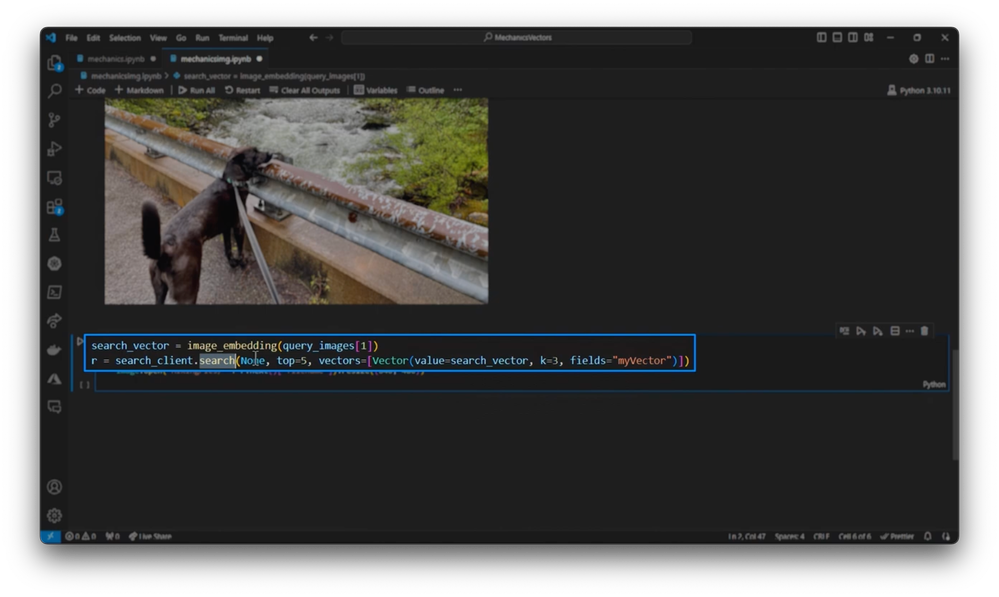

- Sure, the same approach works for images or, for that matter, any other data type that has an embedding model. Here’s an example. I created another index, called pics, that has a file name field and a vector field. I’m using the Azure AI Vision model, which produces vectors with 1,024 dimensions. I’ll define two helper functions, one for images and one for text; each computes an embedding by calling my instance of the Vision service. I’ve already run this part, and this for loop indexes the images by computing their embeddings and inserting them into the database. In this example, I indexed pictures from a hiking trip. So if we pull up one of the pictures, you’ll see a dog, a river in the background, and trees on both sides of the river. There are similar pictures already indexed in the database. Now I’ll compute the embedding for my query image, just like I would for text. I’ll run this query just like before and show the results as images so we can see what’s going on. And this is indeed very similar: there’s a dog, the river, and lots of trees.

- So we’ve seen image-to-image discovery there. In that case, can I search images with text, maybe by describing the image I want to find?

- Yes, we can, because this particular model has been trained on both images and sentences. Let’s use text to find some images. Let’s try something pretty abstract, like "majestic." It’s very subjective, but you can see the top result does look pretty majestic. So this is an example of how vector databases can be used for pure image search, pure text search, or both combined.

- And just to reiterate, all these examples are completely void of keywords. They’re just finding related concepts. That said, keywords are still important, so how do we use keyword search in this case?

- They are. Think of times when you’re looking for a specific port number or an email address. In those cases, keywords tend to be a better method because you’re looking for an exact match. Now, using the same index and the same vector approach, let’s try something where vectors don’t work as well. In this case, I know there is a way to report policy violations using this email address, and I want to see the context of that document. So I’m going to search for it using the same process: compute an embedding for the query and then run the query against the same knowledge store. Now, what I did here is filter the results to show which documents actually contain that email address. You can see that none of the top five documents mentions it. I’m sure that if we extended the search far enough, we would have found it, but this document needs to be among the top results, and the reason it wasn’t there is that vector search is not that good at exact matching. So this is clearly an area where keyword search is better. Let’s try the same search using keywords this time. Here’s an equivalent version of the query against the same index, but instead of computing an embedding and using the vector for querying, I’m simply taking the text and using it as a text search. If I run this version of the query, you’ll find the document that contains the policy for reporting illegal or unethical activities, which makes sense given the email address. These two examples show that queries like the first one only work well if you have a vector index, and queries like the second one only work well when you have a keyword index.
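Keyword retrieval rewards exact term overlap, which is why it finds an email address that vector search misses. Below is a minimal sketch of BM25, a widely used keyword ranking function, over a few hypothetical documents and a hypothetical address, `ethics@example.com` (the transcript doesn’t give the real one). The whitespace-and-punctuation tokenizer is a deliberately simple assumption that keeps email addresses as single tokens; real search engines, Azure Search included, use their own text analyzers. The parameters k1 = 1.2 and b = 0.75 are common BM25 defaults.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

_PUNCT = ".,;:!?()[]\"'"


def tokenize(text: str) -> List[str]:
    # Lowercase, split on whitespace, and trim surrounding punctuation so that
    # "ethics@example.com." becomes the single exact-match term "ethics@example.com".
    tokens = (t.strip(_PUNCT) for t in text.lower().split())
    return [t for t in tokens if t]


class BM25Index:
    def __init__(self, docs: Dict[str, str], k1: float = 1.2, b: float = 0.75) -> None:
        self.k1, self.b = k1, b
        self.tf = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
        self.doc_len = {doc_id: sum(c.values()) for doc_id, c in self.tf.items()}
        self.avg_len = sum(self.doc_len.values()) / len(self.doc_len)
        # Document frequency: iterating a Counter yields each distinct term once.
        self.df = Counter(term for counts in self.tf.values() for term in counts)
        self.n = len(docs)

    def idf(self, term: str) -> float:
        # BM25 inverse document frequency with +1 inside the log to keep it positive.
        df = self.df.get(term, 0)
        return math.log(1 + (self.n - df + 0.5) / (df + 0.5))

    def search(self, query: str, k: int = 5) -> List[Tuple[str, float]]:
        scores: Dict[str, float] = {}
        for doc_id, counts in self.tf.items():
            # Length normalization: longer-than-average documents are penalized.
            norm = self.k1 * (1 - self.b + self.b * self.doc_len[doc_id] / self.avg_len)
            score = 0.0
            for term in tokenize(query):
                f = counts.get(term, 0)
                if f:
                    score += self.idf(term) * f * (self.k1 + 1) / (f + norm)
            if score > 0:
                scores[doc_id] = score
        return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


docs = {  # Hypothetical handbook snippets.
    "perks": "Perks Plus covers scuba diving lessons and other fitness activities.",
    "ethics": "To report illegal or unethical activities, email ethics@example.com.",
    "vacation": "Employees accrue vacation days each pay period.",
}
keyword_index = BM25Index(docs)
print(keyword_index.search("ethics@example.com"))  # only the "ethics" document matches
```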

- So based on that, and depending on your search, you need both indexes working simultaneously.

- Exactly, and that’s why in Azure Search, we support hybrid retrieval to give you both vectors and keywords. Let’s try our earlier email address example using hybrid retrieval. I’ll still compute an embedding, but this time I’ll pass the query both as text and as a vector and then run the query. Here, I found an exact match with our email address. I can also use the same code to go back to our other example, searching for underwater activities, which represents a more conceptual query. Then I’ll change the display filter from Contoso to scuba and run that. We can see that the results about the Perks Plus program that we saw before are in there, along with the scuba lessons reference. So hybrid retrieval has successfully combined both techniques and found all the documents that matter, using the same code.
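The fusion step in hybrid retrieval has to merge two ranked lists whose raw scores aren’t comparable (BM25 scores versus cosine similarities). One common answer is Reciprocal Rank Fusion (RRF), which Azure AI Search documents as its method for merging hybrid results; it looks only at rank positions. The sketch below assumes the constant k = 60 from the original RRF paper, and the document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of document IDs with Reciprocal Rank Fusion.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    with 1-based ranks. Appearing near the top of any list, or in several
    lists, pushes a document up the fused ranking.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical result lists for the same query.
keyword_results = ["ethics", "code-of-conduct"]
vector_results = ["perks", "scuba", "ethics"]
fused = reciprocal_rank_fusion([keyword_results, vector_results])
print(fused[:2])  # ['ethics', 'perks']: "ethics" appears in both lists, so it ranks first
```

Using ranks instead of scores means no calibration between the two retrievers is needed; the trade-off is that a document’s margin of victory within a single list is ignored.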

- The results are a lot better, and there’s really no compromise on the approach then.

- That’s right, and we can do better. As I mentioned earlier, you can add a re-ranking step. That lets you take the best recall from hybrid retrieval and then reorder the candidates to optimize for the highest relevance, using our semantic ranker, which we built in partnership with Bing to take advantage of Bing’s huge amount of data and machine learning expertise. Here, I’m going to take the same example query that we used before, but I’m going to enable semantic re-ranking in the query. When I run this version of the query with the re-ranker, the results look better, and the most relevant document about the Perks Plus program is now at the top of the list. The interesting thing is that re-ranker scores are normalized. In this case, for example, you could say that anything with a score below one can be excluded from what we feed to the large language model. That means you are only providing it relevant data to help generate its responses, and it’s less likely to be confused by unrelated information. Of course, this is just a cherry-picked example. We’ve done extensive evaluations to ensure you get high relevance across a variety of scenarios. We run evaluations across approved customer data sets and industry benchmarks for relevance, and you can see how relevance, measured in this case using the NDCG metric, increases across the different search configurations: from keywords, to vectors, to hybrid, and finally to hybrid with the semantic re-ranker. Every point of increase in NDCG represents significant progress, and here we’re seeing a 22-point increase between keyword search and hybrid with the semantic re-ranker. That includes some low-hanging fruit, but it’s still a very big improvement.
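NDCG (normalized discounted cumulative gain) rewards placing highly relevant documents near the top: each result’s graded relevance is divided by log2(rank + 1), the terms are summed, and the total is normalized by the same sum for the ideal ordering, giving a value between 0 and 1 (often reported on a 0 to 100 scale as "points"). A minimal Python sketch follows; the relevance grades are hypothetical, it uses linear gains (some variants use 2^rel - 1), and it does not reflect the specifics of Microsoft’s evaluation setup.

```python
import math
from typing import Sequence


def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    # Discounted cumulative gain over the top k results; ranks are 1-based,
    # so the first result is divided by log2(2) = 1.
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances[:k], start=1))


def ndcg_at_k(relevances: Sequence[float], k: int) -> float:
    """NDCG@k: DCG of the ranking divided by DCG of the ideal (sorted) ranking.

    Simplification: the ideal ranking is built from the same judged list,
    not from every relevant document in the collection.
    """
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0


# Hypothetical relevance grades (3 = highly relevant, 0 = irrelevant), in result order.
print(ndcg_at_k([3, 2, 1, 0], k=4))  # already ideally ordered -> 1.0
print(ndcg_at_k([0, 1, 2, 3], k=4))  # relevant documents buried at the bottom -> lower score
```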

- And getting the best possible relevance in search is even more critical when using retrieval augmented generation, to save time, get better generated content, and reduce the computational costs of inference.

- That’s right, and to do this, you don’t need to be a search expert, because we combine all the search methods for you in Azure Search, and you also have the flexibility to choose which capabilities you want for any scenario.

- So you can improve your Copilot apps with state-of-the-art information retrieval, and the trick is really that these approaches all work together and are built into Azure Search. So is everything you showed today available to use right now?

- It is and it’s easy to get started. We made the sample code from today available at aka.ms/MechanicsVectors. We also have a complete Copilot sample app at aka.ms/EntGPTSearch. Finally, you can check out the evaluation details for relevance quality across different data sets and query types at aka.ms/ragrelevance.

- Thanks so much, Pablo, for joining us today. Can’t wait to see what you build with all of this. So of course, keep checking back to Microsoft Mechanics for all the latest tech updates. Thanks for watching and we’ll see you next time.