Video Transcript:

- What if I told you that you could use GPT-powered natural language to investigate and respond to security incidents, threats, and vulnerabilities facing your organization right now? Well, today we’re going to take a look at how Microsoft’s Security Copilot, a new security AI assistant skilled with Microsoft’s vast cybersecurity expertise, can help you perform common security-related tasks quickly using generative AI. And this includes embedded experiences within the new Microsoft Defender XDR, Microsoft Intune for endpoint management, Microsoft Entra for identity and access management, and Microsoft Purview for data security and much more. And joining me today for a deeper dive into Security Copilot is Ryan Munsch, who’s on the team that built it.

- Thanks Jeremy. It’s great to be here. And I’m excited to share more about what we’ve done with generative AI and security.

- This is a topic I’ve really been looking forward to because I can see a lot of benefits here. You know, security teams, they’re stretched pretty thin these days. As we build more and more tools, though, to help them harden security or detect and respond to incidents, it’s still a struggle to parse through all that information that’s available to them and respond quickly.

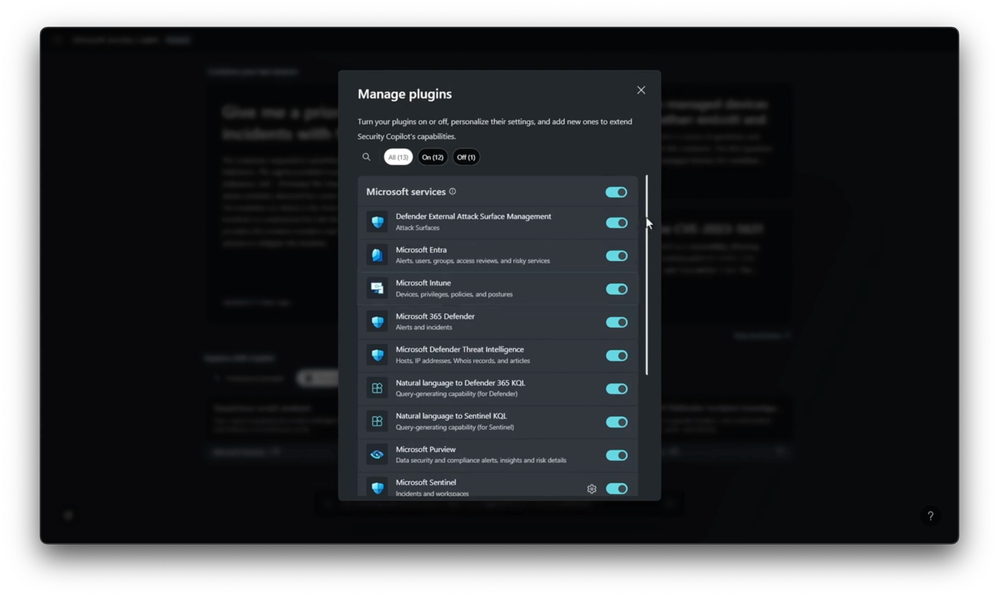

- Right, there are definitely skill and staffing shortages at play here. And when you add to that the increased frequency of attacks, even the most skilled teams can benefit from generative AI. In fact, think of Security Copilot as an enterprise-grade natural language interface to your organization’s security data. Let me show you. I’m in the Microsoft Security Copilot right now. Notice unlike other Microsoft Copilots, it’s a stateful experience to let you easily return to investigations from previous Copilot sessions. Now, this isn’t an ordinary instance of GPT. We’ve augmented the large language model training with security skills so that it can work with the signal in your environment, and the more quality security signals it has access to, the better. In the bottom left corner are your managed plugins. There’s Microsoft Entra for identity, Microsoft Intune for device endpoints, Microsoft Defender plugins for incidents, Threat Intelligence, and more, as well as Microsoft Purview for data security and Microsoft Sentinel, our cloud-based SIEM. And you even have options for third-party plugins like you see here with ServiceNow for incidents.

- This is a real breadth of information, then, that basically Security Copilot can use to help investigate incidents and also generate informed responses later.

- Yeah, that’s all data that can ground and enrich the Security Copilot experience, and the prompt experience brings additional skills too. Notice like other Copilot experiences, it proactively suggests prompts to get you started like this one: “Show high severity incidents and recent threat intelligence.” That said, it gets even more powerful with multi-step sequences using Promptbooks. I’ll open this one for suspicious script analysis to automate security process steps, and we can try it out. I have a PowerShell script in my clipboard that I’ll paste into the prompt, and in just a few moments, Copilot has safely reverse engineered the malware in the script with a step-by-step breakdown of the tactics used by the exploit in a way that’s clear and understandable.

- Okay, so what’s stopping someone, then, from using maybe an off-the-shelf large language model? And maybe a lot of people are going to say and think that if I just use the right prompts, I’ll be able to get the response that I want.

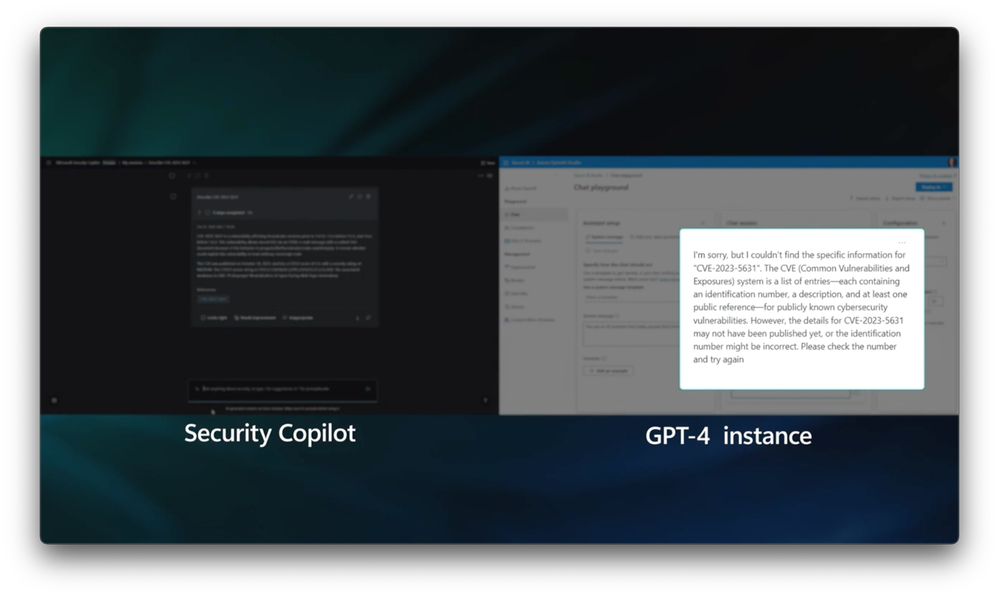

- So that’s partly true, but it’s not quite that simple. Let’s demonstrate this together. I’ll use Security Copilot, and you can use the GPT playground using an unmodified GPT in the Azure OpenAI service. We’ll run the exact same prompts.

- Yep, let’s do it. Sounds fun. So right now, I’ve actually got the GPT playground open. On the right, you’re seeing my screen, the Azure OpenAI Studio. It’s actually running a GPT-4 instance. And on the left, you’re seeing Security Copilot. Now, we’re going to start with a Common Vulnerability and Exposure, or CVE as we refer to it normally. And Ryan and I are both going to submit the same prompts and describe this particular CVE. And that’s going to take a moment to run and analyze the data, especially on the Security Copilot side. It’s chewing through that and getting everything ready. And when it’s finished, you’ll see that the off-the-shelf model on the right, it knows what a CVE is, but otherwise, this response isn’t useful at all. So you saw on the Security Copilot side, or you can see it now, that it gives us the details for this CVE.

- So let’s try something else. We’ll run another prompt. We’ll prompt it about a suspicious domain, VectorsAndArrows.com. Notice that while Security Copilot gathers the information before formulating a response, the GPT-4 instance on the right acknowledges that it actually can’t find the information. Also, we can see here in Security Copilot’s response that it knows that if we’re asking about this domain, it likely involves a security event. So it finds all of the matching IP addresses that the domain resolves to, the ASNs, or Autonomous System Numbers, related to it, and corresponding organizations.

- And the differences that you’re seeing here, it’s resulting in the fact that the large language model that’s off the shelf, it relies on general training, whereas prompts that were sent to Security Copilot on your side, they carry the security context using all that fine tuning and grounding data from all the plugins that you showed earlier.

- That said, of course the off-the-shelf model you use could be grounded with more data, and you can add up to three plugins to provide additional security data. But you’d still need to build an orchestration engine to find and retrieve the data, rank it for relevance, and then add the grounding data to your prompt with guardrails to stay below your token limits before presenting this information to the LLM to generate an informed response. And that’s just scratching the surface. For example, you’d also want to ensure that it provides citations with the right legal assurances. We do all of this and more right out of the box.
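
The orchestration steps described here (retrieve, rank for relevance, add grounding data under a token limit, keep citations) can be sketched roughly as follows. This is a minimal illustration, not how Security Copilot is implemented: the `Snippet` type, the word-overlap `relevance` score, the whitespace `count_tokens` stand-in, and the prompt layout are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # hypothetical plugin name, e.g. "incidents"
    text: str


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def relevance(query: str, snippet: Snippet) -> int:
    # Toy ranking signal: how many distinct query words appear in the snippet.
    return len(set(query.lower().split()) & set(snippet.text.lower().split()))


def build_grounded_prompt(query: str, snippets: list[Snippet], token_budget: int) -> str:
    # Rank: most relevant first, dropping snippets that share no words with the query.
    ranked = sorted(
        (s for s in snippets if relevance(query, s) > 0),
        key=lambda s: relevance(query, s),
        reverse=True,
    )
    used = count_tokens(query)
    context = []
    for s in ranked:
        cost = count_tokens(s.text)
        if used + cost > token_budget:
            continue  # guardrail: skip anything that would exceed the budget
        used += cost
        # Number each snippet so the response can cite its source.
        context.append(f"[{len(context) + 1}] ({s.source}) {s.text}")
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
```

A real orchestrator would use the model's own tokenizer and a much stronger ranking signal than word overlap. The sketch only shows why each stage exists: without ranking and a budget, the most useful grounding data may never reach the model.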

- All right, so I want to go back to the fine tuning aspect. You know, we recently had Mark Russinovich on, CTO of Azure, and he explained how a method called Low-Rank Adaptation fine-tuning, or LoRA fine-tuning, works to pinpoint LLM training specific to a skill like security, and this is a very specialized process. So it’s not as simple as building out your own solution even if you were a security expert.

- Exactly, and ultimately, you need to understand how the underlying AI processes work. And we’ve done all of that work for you based on our own extensive cybersecurity expertise. We’ve been partnered with OpenAI for years now on what it takes to build the most advanced models. And so we have built an AI supercomputer with a specialized hardware and software stack in Azure to run them. And with that foundation, we use the LoRA fine-tuning method to give the LLM more training specific to cybersecurity analysis, detection, response, summarization, and more. Then using evergreen Threat Intelligence with real-time retrieval, we also help ensure that it’s always up-to-date with new and trending threats. And like I showed earlier, we also provide built-in cyber-specific skills in Promptbooks so that you can get multi-step prompts built with insightful responses without being a prompt expert. And this is something we’ve been working on for years to get the processes and the LLMs’ underlying knowledge base up to the task of cybersecurity.
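
For readers unfamiliar with LoRA, the general idea (as commonly formulated, not a description of how Security Copilot is configured) is to freeze a pretrained weight matrix $W_0$ and train only a low-rank update. The symbols $d$, $k$, $r$, and $\alpha$ below are the usual notation, and the numeric sizes are illustrative assumptions:

$$
W = W_0 + \frac{\alpha}{r} B A, \qquad W_0 \in \mathbb{R}^{d \times k},\quad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

Only $A$ and $B$ are trained, so the trainable parameter count falls from $dk$ to $r(d + k)$. With $d = k = 4096$ and $r = 8$, for example:

$$
dk = 4096 \times 4096 = 16{,}777{,}216, \qquad r(d + k) = 8 \times 8192 = 65{,}536
$$

That is a 256-fold reduction for that one matrix, which is what makes skill-specific tuning of a very large model practical.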

- Okay, so now you’ve explained how it works. So can we walk through a process maybe a security analyst might use as they use Security Copilot to investigate an incident?

- Definitely; in some cases, these investigations can start out in something like Microsoft Defender or simply as a support call, so let’s start there. In this case, I’m on an active call with a user who can’t access her device. I’ll prompt Security Copilot with, “What is the status of the user account for Lynne Robbins? Is it locked out?” After reasoning across information from its connected plugins, it confirms that Lynne’s account is disabled with more details. Let’s keep going. “What are the three most recent login attempts from the user?” And I got a few more clues back. Lynne not only has had multiple failed login attempts, but they’ve come from different devices and locations. This account is likely compromised. Now, I’ll ask, “Is the user considered risky? If so, why?” And it looks like she has a high risk level now, but we need to find out more details. So I’ll prompt Copilot with a forward slash to use a security-specific skill to generate and run Defender hunting queries. From the hunt, it’s clear there is a ransomware event, and lateral movement is occurring within Woodgrove. We need to correlate it now with an incident to see it in aggregate. We can do that by checking for security incidents on the same day, so I’ll enter that prompt for that day. Now, I’ll expand the response, and from the top line, I can correlate the alerts with incident 1–9–3–8–8. So now, I can simply ask for a summary of this incident. I can assess everything captured by Defender as well as anything related to the response process. And I now know it’s a potential human-operated ransomware attack, and this is bad.
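
The reasoning in this step (multiple failed sign-ins spread across different devices and locations suggest a compromised account) can be written down as a simple rule. This is only an illustrative sketch: the `SignIn` record, the `looks_compromised` function, and the threshold of three failures are assumptions for the example, not how Microsoft Entra or Security Copilot actually score user risk.

```python
from dataclasses import dataclass


@dataclass
class SignIn:
    succeeded: bool
    device: str    # hypothetical device identifier
    location: str  # hypothetical location label


def looks_compromised(attempts: list[SignIn], min_failures: int = 3) -> bool:
    # Consider only failed attempts, as in the walkthrough.
    failed = [a for a in attempts if not a.succeeded]
    devices = {a.device for a in failed}
    locations = {a.location for a in failed}
    # Flag when failures are numerous AND spread over several devices and locations.
    return len(failed) >= min_failures and len(devices) > 1 and len(locations) > 1
```

A rule like this is only a first-pass signal. In the walkthrough, the analyst follows it up with a risk check, hunting queries, and incident correlation before drawing a conclusion.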

- Right, and this is a big deal because all this went pretty quickly, which is a huge advantage, especially when you’re trying to contain a threat.

- Yes, Security Copilot can speed up investigation significantly. In fact, to save a little more time showing the rest of the investigation and also the statefulness of it, I’ll move to one that I’ve previously run. Here’s the incident we just looked up. Beyond the severity level, we can also see when the incident was first detected, the alert generation, and a bunch of other information. There are associated devices, threat actors, protocols used, processes, and login attempts from our user, Lynne. It tells me the investigation actions taken already and some remediation actions taken with real-time attack disruption to automatically contain the threat. And continuing on, I prompted it for associated entities. It provided more devices, users, and IPs. Then to start the remediation, I asked it to generate a PowerShell script to go check on the state of the device’s SMB configuration, which in our case was used to move laterally between systems.

- And the script generation here is super useful, because when I write scripts, it’s always in short-term memory only, so I have to look it up either in reference materials and command line help, maybe ping Jeffrey Snover, and then it kind of exits out the other side of my head.

- Yeah, my algebra teacher had a similar complaint about my short-term memory. But you’re right, Security Copilot can now solve the short-term memory problem. Now, let’s go and focus on the initial machine compromised. Here, I used another skill to find host name access records for the device in question, and it found one access record. So we saw the lateral movement and a primary refresh token which was generated along the way. Here I’ve generated a hunting query using Microsoft Sentinel to figure out how the attacker did it and look for security events associated with the IP address on the day of the account lockout. Security Copilot generated a Kusto Query Language, or KQL, statement for me. Then it runs that query for me here too. All of this helps me understand the level of attack infiltration across my network, and next, I can move on to the devices associated with our user, Lynne. Here I’ve checked if the PARKCITY Win10S device was compliant and it knew where to pull the device information. In this case, it’s Intune. It looks like the device was non-compliant, failing to meet a Defender for Endpoint policy. I don’t work in the IT side of the house, so next, I asked it for more information about what the policy does and why the device isn’t compliant. Looks like the device was not within the group scope for the app policy assignment, and as a result, the device was exposed and became an attack target.

- And this really shows how easy it is, then, to continue that investigation maybe in areas where you’re not an expert. So what’s the next step?

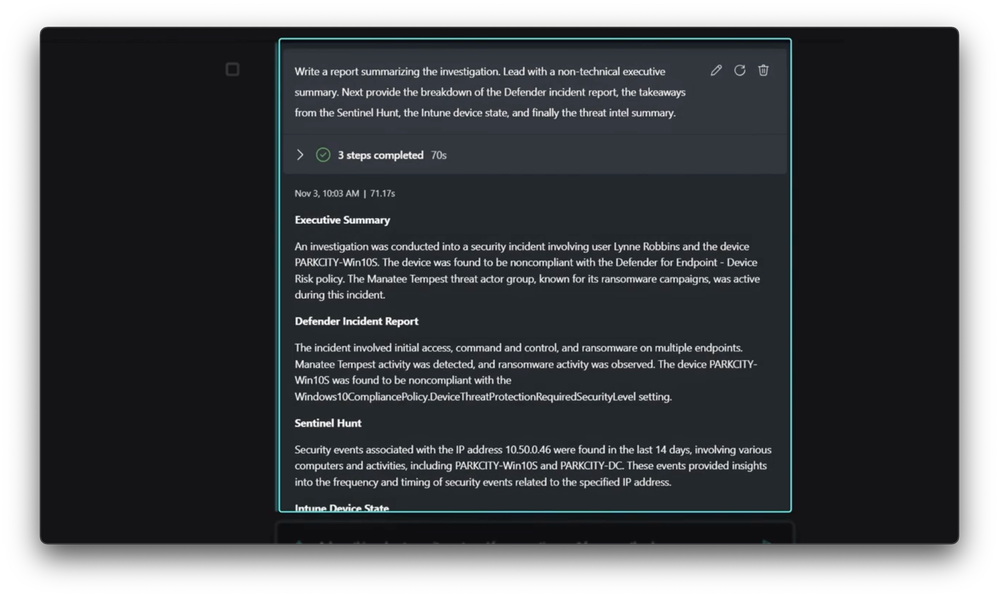

- Well, that’s the great thing about Security Copilot, is you don’t have to be an expert to get expert advice. And let’s dive into Threat Intelligence, in this case to understand the techniques in use by the actor and other entry points they could exploit. Our threat actor named from the incident summary was pretty memorable for me, so I prompted Security Copilot for some information about the sea cow of threat actor groups, Manatee Tempest. And this response, summary aside, is the insight into different techniques used for exploits. It’s likely Lynne fell victim to a drive-by download. But more importantly, what I learned is that I need to rally my security organization around analysis of any Cobalt Strike or Mythic payloads placed in my environment. And as an analyst, a lot of my time is spent writing summary reports for people who wouldn’t be as deep as I am on an incident like this. So I prompted Security Copilot to provide a non-technical executive level summary for our company leadership. And using the session context, it generated a thorough report that’s pretty easy to understand. And again, just to highlight the transparency here, if I expand the steps it took, then look at the first one, you can see what process the orchestrator used to develop a plan, gathered context from the session, then determined which skills to use as well as the rest of the logic and prompt details that are presented to the fine-tuned LLM to generate our response. When finished, from here, I can download and export it to a Word doc, Mail, or just copy it to my clipboard. And beyond high-level reports, Copilot can even help generate more immediate summaries for SecOp teams like pin boards. As I was going, I selected the turns of my investigation I wanted to highlight later using pins, then used Copilot to generate a pin board summary that I can share with my team so that any new members joining can quickly get up to speed on the work I’ve already done without duplicating effort. All of the prompts are saved to a stateful session, and the entire investigation is shared with all the context, including our pin board.

- And every phase of the investigation was sped up using all the grounding data that was pulled from quite a few different connected services, for more context. That said, though, in this case, Lynne actually called the support desk, which triggered your investigation. So what would happen if this was maybe part of an incident?

- Well, that’s actually a huge part of Security Copilot. We’ve also built integrated experiences across different Microsoft admin portals to help save you time, and this goes beyond security analyst roles too. First, and to answer your question, in Microsoft Defender, I have the incident number from before open here. In the Security Copilot sidebar, each incident automatically gets a generated summary, and many of the standalone capabilities I showed before can be done in context. As you investigate alerts, it can analyze scripts and commands in place like this one. And in Advanced Hunting, you can use natural language to author KQL queries with Security Copilot. Then beyond Microsoft Defender, let me give you a quick look at other embedded Copilot experiences that we have. For endpoint admins, Security Copilot in Microsoft Intune helps simplify policy management so you can generate policies using natural language prompts, find out more about settings, options, and their impacts, and pull up critical details about managed devices. If you’re an identity admin, Security Copilot in Microsoft Entra will let you use natural language to ask about users, groups, sign-ins, and permissions to instantaneously get a risk summary as well as steps to remediate and guidance for each identity at risk. And in ID Governance, it can help you to create a lifecycle workflow to streamline the process to automate common tasks. And one more, here’s the experience for data security admins in Microsoft Purview, where Security Copilot with data loss prevention alerts will quickly generate insights about data and file activities. And when used with Insider Risk Management, its alert summaries surface details about high-risk users and the related data exfiltration activities to help you respond fast. And there are more embedded experiences to look forward to. Security Copilot will also be integrated with Microsoft Defender for Cloud and other plugins soon.

- So you can stay in the context, then, of the tools that you use every day. So for anyone who’s watching right now looking to get started, what do you recommend?

- If you’re looking at options to use generative AI with your security practices, join our early access program at aka.ms/SecurityCopilot, and you can even help shape what Security Copilot can do.

- Thanks so much, Ryan, for showing us what Security Copilot can do to help us investigate and respond to incidents using generative AI. Of course, to stay up to date with all the latest tech, be sure to subscribe to Mechanics, and thanks for watching.