Video Transcript:

-What happens when you combine the new GPT-4 Turbo with Vision large language model with Vision and Search in Microsoft’s Azure AI platform? Well, the combination, as I’ll demonstrate today, can enable direct lookups from image inputs over your organizational data to ground generative AI responses.

-This marks a significant improvement in the accuracy of natural language processing and image recognition tasks to enable new generative AI scenarios. Video inputs are also uniquely supported when you combine GPT-4 Turbo with Vision AND Azure AI Vision.

-And best of all, with the new Azure AI Studio, it’s easy to build and orchestrate powerful copilot style apps that now leverage the power of both. Let’s start by exploring the baseline capabilities of GPT-4 Turbo with Vision, which I’ll refer to as GPT-4V for short. Azure AI Studio provides a single destination to directly leverage GPT-4V from the Azure OpenAI service and experiment with it in the playground.

-The model brings with it extensive open world visual understanding, which means images can now be used as an input to generate text-based responses. To give you a flavor of what it can do, I’m in the new Azure AI Studio, and I’ve uploaded an image of a right-angled triangle. I’ve visually pointed to areas in the image with hand-written questions on the math problem to solve.

-And you can see GPT-4V is describing the image. It’s also acknowledging the math problem and then generating a response with a detailed, step-by-step breakdown of its reasoning. Here’s another example, this time of temporal anticipation. I’ve uploaded three images and I’m prompting GPT-4V to predict what will happen next based on the images.

-And it’s able to predict that the player will kick the ball towards the goal, attempting to score, with the goalkeeper attempting to block the shot. Vision and language capabilities like this open up brand-new scenarios when building copilot-style apps. Here’s a practical use case: in the system message, I’ve given the model context of its purpose.

-In this case I want it to function as a vacation rental assistant. I’ve written a prompt to provide a description, along with tips for enhancing the property listing based on the images I’ve uploaded. And GPT-4V knows what vacation listings look like and how they are worded. So, first, it generates a short title and description, followed by a bulleted list of features of the property.

-And finally, it generates sample text for an enhanced listing with tips on how to customize things further, all derived from the details in the images. In fact, in all of these examples, you can see GPT-4V’s visual reasoning capabilities and how easy it is to experiment with the model using Azure AI Studio.
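The vacation rental example above pairs a system message with a user prompt and uploaded images. A minimal Python sketch of how such a request body could be assembled follows. It assumes the chat message layout used by OpenAI-style vision models, where the user turn carries a `content` list mixing `text` and `image_url` parts. The function name `build_vision_messages` and the placeholder image bytes are hypothetical and are not shown in the video. The sketch only builds the payload and does not call any service.

```python
import base64
import json


def build_vision_messages(system_message, prompt, images):
    """Build a chat message list with one system turn and one user turn.

    `images` is a list of (mime_type, raw_bytes) pairs. Each image is
    inlined as a base64 data URL so the payload is self-contained.
    """
    content = [{"type": "text", "text": prompt}]
    for mime_type, raw in images:
        encoded = base64.b64encode(raw).decode("ascii")
        content.append({
            "type": "image_url",
            "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
        })
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": content},
    ]


if __name__ == "__main__":
    # Placeholder bytes standing in for a real photo (assumption).
    fake_photo = b"placeholder bytes, not a real JPEG"
    messages = build_vision_messages(
        "You are a vacation rental assistant.",
        "Describe this property and suggest tips to enhance the listing.",
        [("image/jpeg", fake_photo)],
    )
    print(json.dumps(messages, indent=2))
```

Keeping the system message separate from the user turn mirrors the playground setup described above, where the assistant's purpose is set once and the images travel with each prompt.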

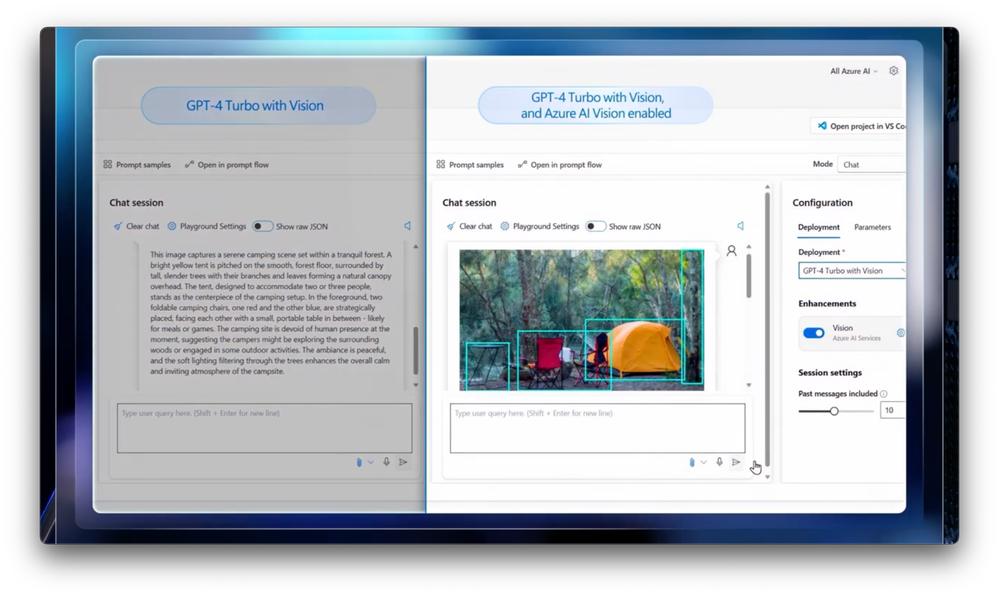

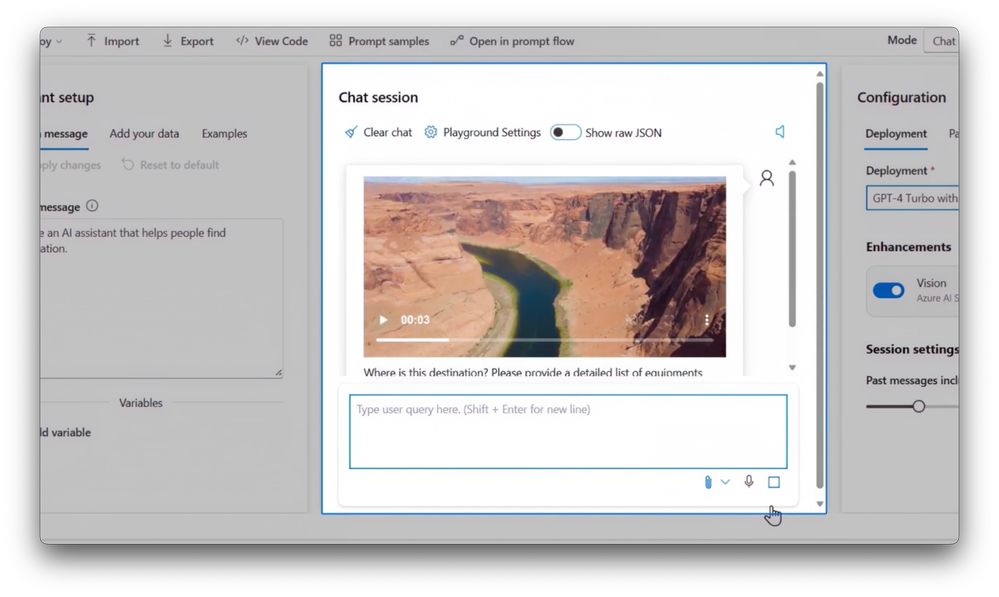

-Now let’s look at what happens when you combine the baseline capabilities of GPT-4V with Azure AI Vision. Here, for example, I’m building a chat experience that is part of an outdoor adventure site. You’ll see that I’ve now enabled the Azure AI Vision service. So now we can use video as an input for GPT-4 Turbo with Vision through the native integration of Azure AI Vision Video Retrieval.

-In my prompt, I’ll ask where this destination is with a recommendation on the type of equipment required for camping in the month of January. And you can see it knows the location, and based on the conditions for that time of year, it makes a recommendation for footwear and suggests additional equipment recommendations.

-It even recommends that I check the weather forecast to make any adjustments before my trip. Behind the scenes, the video is broken down into still image frames using Azure AI Vision’s Video Retrieval model, which is automatically deployed on the backend. The most relevant frames are presented to the GPT-4V model, which is then able to reason over images.
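The video does not say how the "most relevant" frames are chosen. As one plausible illustration, and purely an assumption, the Python sketch below scores each frame by cosine similarity between a frame vector and a prompt vector, keeps the top `k`, and returns them in their original time order. The vectors and the function names `cosine` and `select_frames` are hypothetical; a real system would obtain embeddings from a vision model.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_frames(frame_vectors, query_vector, k):
    """Return indices of the k frames most similar to the query, in time order."""
    ranked = sorted(
        range(len(frame_vectors)),
        key=lambda i: cosine(frame_vectors[i], query_vector),
        reverse=True,
    )
    # Re-sort the winners by index so the model sees them chronologically.
    return sorted(ranked[:k])


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real frame embeddings (assumption).
    frames = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.5, 0.5]]
    print(select_frames(frames, [1.0, 0.0], k=2))
```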

-And combined with the additional context provided in the prompt, it presents a list of recommended equipment based on its open-world understanding. That’s pretty powerful in itself. That said, we can do even more when we combine GPT-4V and Azure AI Vision for image analysis tasks. I’ll demonstrate this first in the Azure AI Studio playground.

-I’m going to compare the generated response from this camping image with GPT-4V on the left compared to GPT-4V with Azure AI Vision enabled. And as you can see, with Azure AI Vision, bounding boxes appear over the image and specific items are called out: the orange tent, the camping chairs, and a small black table, along with their positions, resulting in a more detailed description compared to GPT-4V on its own.

-And if I click on the text for the orange tent then scroll up, you’ll see that the tent’s highlighted with a bounding box in the image. This level of detail introduces the possibility of direct lookups of image data, especially when grounded with enterprise image data and combined with Azure AI Search for Retrieval Augmented Generation.

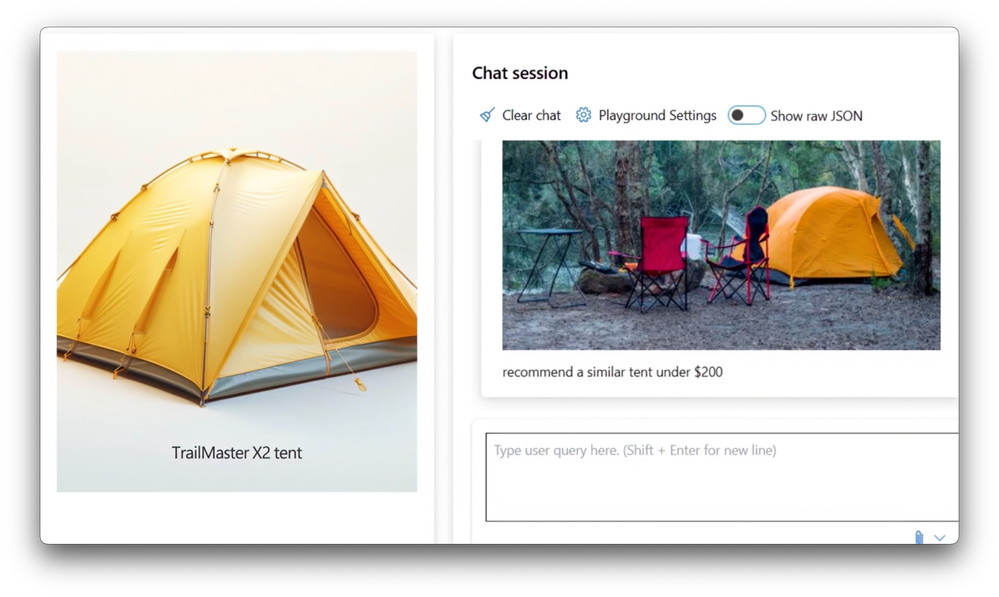

-Let me show you this combination in action with our outdoor company enterprise chat app. This time, I’ll upload the same image but prompt it to find me a tent like this one under $200. And again, you can see it’s able to reason over the image, pick out the tent, and generate a response and recommendation based on the closest item in our catalog.

-In this case, it recommends the TrailMaster X4 tent with a direct link to purchase. With retrieval augmented search enabled, this level of specificity is made possible because, behind the scenes, Azure AI Search uses vector search with image embeddings along with our state-of-the-art semantic re-ranker for information retrieval. It brings in the metadata that Azure AI Vision has derived from the image, as well as context from the user prompt, and reasons over the images and metadata in the catalog to find the top results, which are then presented to the GPT-4V large language model to generate an informed response.
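The retrieval flow described above can be pictured as two stages: a vector search over image embeddings, then a re-ranking pass over the shortlist. The Python sketch below is a toy illustration under stated assumptions, not Azure AI Search itself. The `Product` fields, the pre-normalized embeddings, the price filter, and the `keyword_overlap` scorer (a crude stand-in for a semantic re-ranker) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    price: float
    description: str
    embedding: list  # hypothetical unit-length image embedding


def dot(a, b):
    """Dot product; equals cosine similarity when both vectors are unit length."""
    return sum(x * y for x, y in zip(a, b))


def keyword_overlap(query, text):
    """Toy stand-in for a semantic re-ranker: count of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(catalog, image_vector, query, max_price, k=3):
    # Stage 1: apply the price constraint, then shortlist by image similarity.
    affordable = [p for p in catalog if p.price < max_price]
    shortlist = sorted(
        affordable, key=lambda p: dot(p.embedding, image_vector), reverse=True
    )[:k]
    # Stage 2: re-rank the shortlist against the text of the user prompt.
    # sorted() is stable, so ties keep their vector-search order.
    return sorted(
        shortlist, key=lambda p: keyword_overlap(query, p.description), reverse=True
    )


if __name__ == "__main__":
    # Made-up catalog entries for illustration only.
    catalog = [
        Product("Tent A", 150.0, "orange dome tent", [1.0, 0.0]),
        Product("Tent B", 250.0, "orange tent", [1.0, 0.0]),
        Product("Table C", 120.0, "black folding table", [0.0, 1.0]),
        Product("Tent D", 180.0, "green tent", [0.8, 0.6]),
    ]
    for item in retrieve(catalog, [1.0, 0.0], "orange tent under 200", 200.0, k=2):
        print(item.name, item.price)
```

The top results from a pipeline like this would then be passed to the language model as grounding context alongside the user prompt.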

-Let me show you how you can build this experience yourself. Back in Azure AI Studio, notice to the right we’ve deployed the GPT-4V model. Next, we are going to ground the model with our enterprise data and catalog images by selecting add your data.

-This lets you select or add a data source with three possible options: you can bring your images and metadata in from Azure Blob Storage, which will automatically index the data for you, or you can use an existing Azure AI Search instance and index, or upload files and the associated metadata manually. In this case, I’ll choose the second option with search. I’ll select the service instance I want and I’ll also choose the index I want.

-Then, I’ll agree to the terms, and finally, confirm. So, with that, we’ve provided our grounding data. Azure AI Search is enabled and working alongside GPT-4V and we can now go back to the playground to try it out. I’ll upload the same image of the camping configuration and try out the same prompt: “recommend a tent like this under $200.” And it responds with the TrailMaster X2 tent this time, priced at $190. If I compare the tent image in the picture and the TrailMaster X2 tent from our catalog, you can see that Azure AI with GPT-4V successfully found a similar tent within our price criteria.

-And we can take this a step further by asking, “Is there a cheaper option?” And here, the model reasons over the data in our data source and generates a response, highlighting two similar tents at a lower price. And as you create your own app, it’s easy to translate everything you do in the AI Studio into working code. You can use the View Code button to see the code behind this app with all of the parameters set.

-In fact, if I switch over to Visual Studio Code, you can easily see everything that’s happening, including my system message and more. And moving back to the Azure AI Studio, I can even deploy the code as a new web app directly from here, or update an existing web app right from within the AI Studio. This end-to-end experience of exploring, building, testing, orchestrating and deploying your generative AI apps is made possible with Azure AI Studio which abstracts much of the complexity and learning curve.

-In fact, you can watch a detailed overview at aka.ms/AzureAIStudioMechanics. So that was how the combination of the new GPT-4 Turbo with Vision large language model and Microsoft’s Azure AI platform with Vision and Search can improve the accuracy of natural language processing and image recognition tasks for your copilot-inspired apps. You can start using Azure AI Studio today at ai.azure.com. To learn more, check out our QuickStart guides at aka.ms/LearnAIStudio. Subscribe to Microsoft Mechanics for the latest in tech updates, and thanks for watching.